Platform for Rapid Investigation of Scientific Computing & Machine Learning

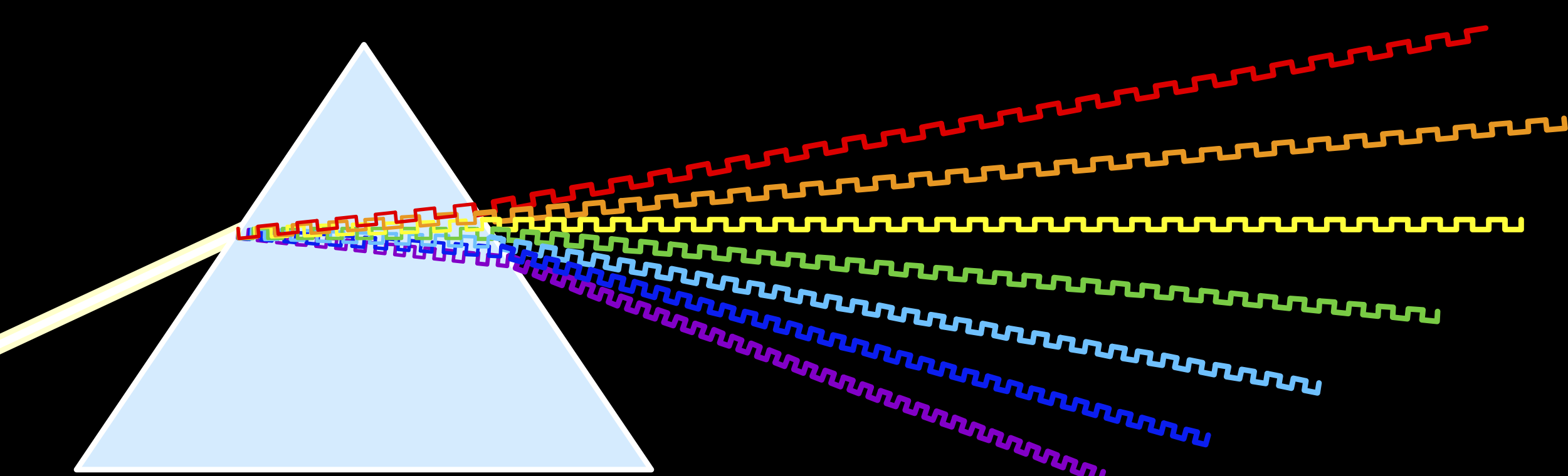

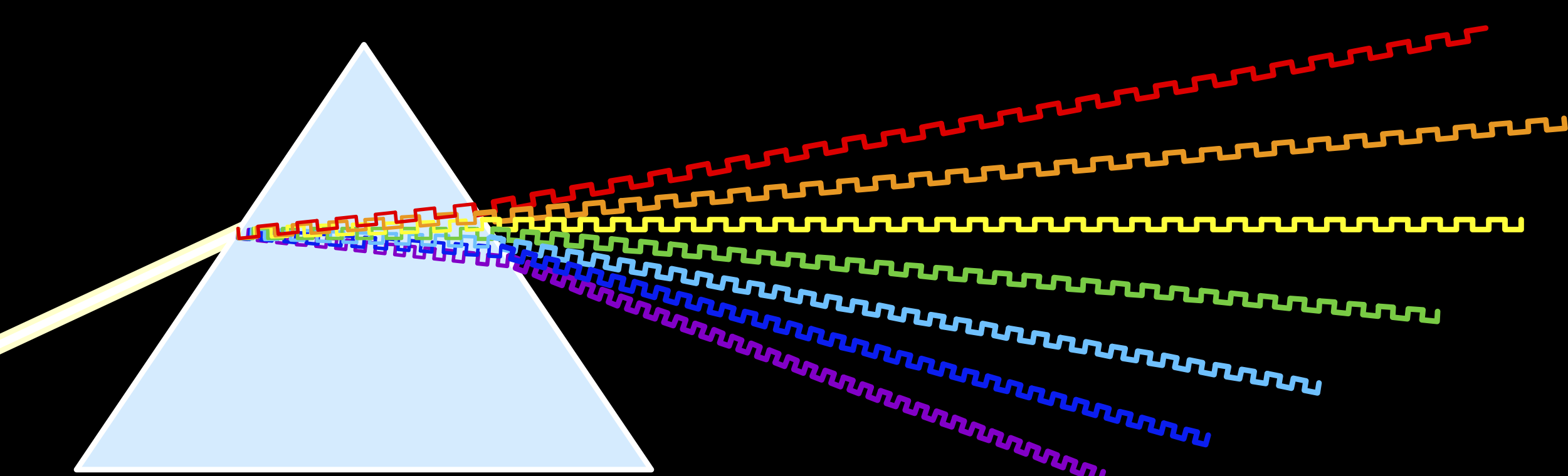

Today's systems demand ever-faster processing and learning over massive datasets. Unfortunately, because of poor energy scaling and power limits, the performance and power improvements that general-purpose processors once gained from technology scaling and instruction-level parallelism have ended. It is well known that full-custom, application-specific hardware accelerators can provide orders-of-magnitude improvements in energy per operation across a variety of application domains. There is therefore particular interest in systems that can optimize and accelerate the building blocks of machine learning and data science routines. Many of these building blocks share the characteristics of the high-performance computing kernels that operate on matrices.

Such application-specific solutions rely on joint optimization of the algorithms and the hardware, but they cost hundreds of millions of dollars. PRISM (Platform for Rapid Investigation of efficient Scientific-computing and Machine-learning accelerators) is proposed to amortize these costs. PRISM gives application designers rapid feedback about both the available parallelism and locality of their algorithm and the efficiency of the resulting application/hardware design. The PRISM platform consists of two coupled tools that incorporate design knowledge at both the hardware and the algorithm level. This knowledge lets application designers quickly evaluate the performance of their applications on proposed or existing hardware without needing to be experts in hardware or algorithms. The platform will leverage tools created in the team's prior research.

Initially, these tools will be used to create an efficient solution for each application, followed by a comparison of the resulting hardware designs. The possibility of creating platforms that span multiple classes of algorithms can then be explored. Finally, these new architectures will be compared with existing heterogeneous architectures that use GPUs and FPGAs, to understand what modifications those architectures need in order to achieve higher efficiency when supporting these classes of algorithms. The work on key applications will lead to better insight into the computation and communication intrinsic to them, and will provide algorithms for these applications that are effective on both conventional and new architectures.

Papers

- Yuanfang Li and Ardavan Pedram:

"CATERPILLAR: Coarse Grain Reconfigurable Architecture for Accelerating the Training of Deep Neural Networks,"

The 28th IEEE International Conference on Application-specific Systems, Architectures and Processors (ASAP 2017).

Best Paper Award

- Ardavan Pedram, Stephen Richardson, Sameh Galal, Shahar Kvatinsky, and Mark A. Horowitz:

"Dark Memory and Accelerator-Rich System Optimization in the Dark Silicon Era,"

IEEE Design and Test Magazine, April 2017.

Also presented at the International Symposium on Circuits and Systems (ISCAS), 2017.

