PCA (rank selection, testing, probabilistic)

How many principal components is “enough”?

Is there an elbow?

Is there an elbow? Tuzhilina, Hastie & Segal (2022)

Do we need to pick a rank?

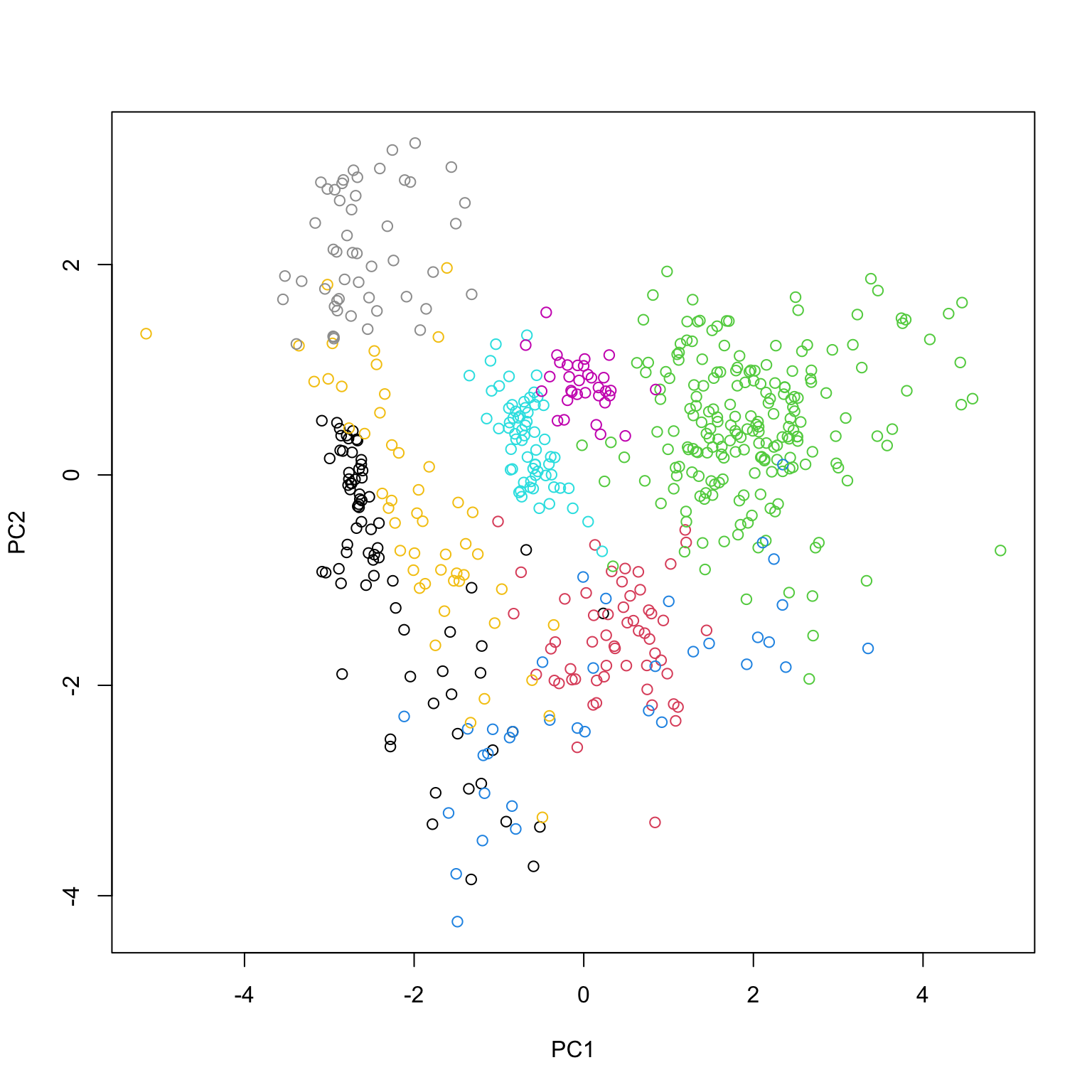

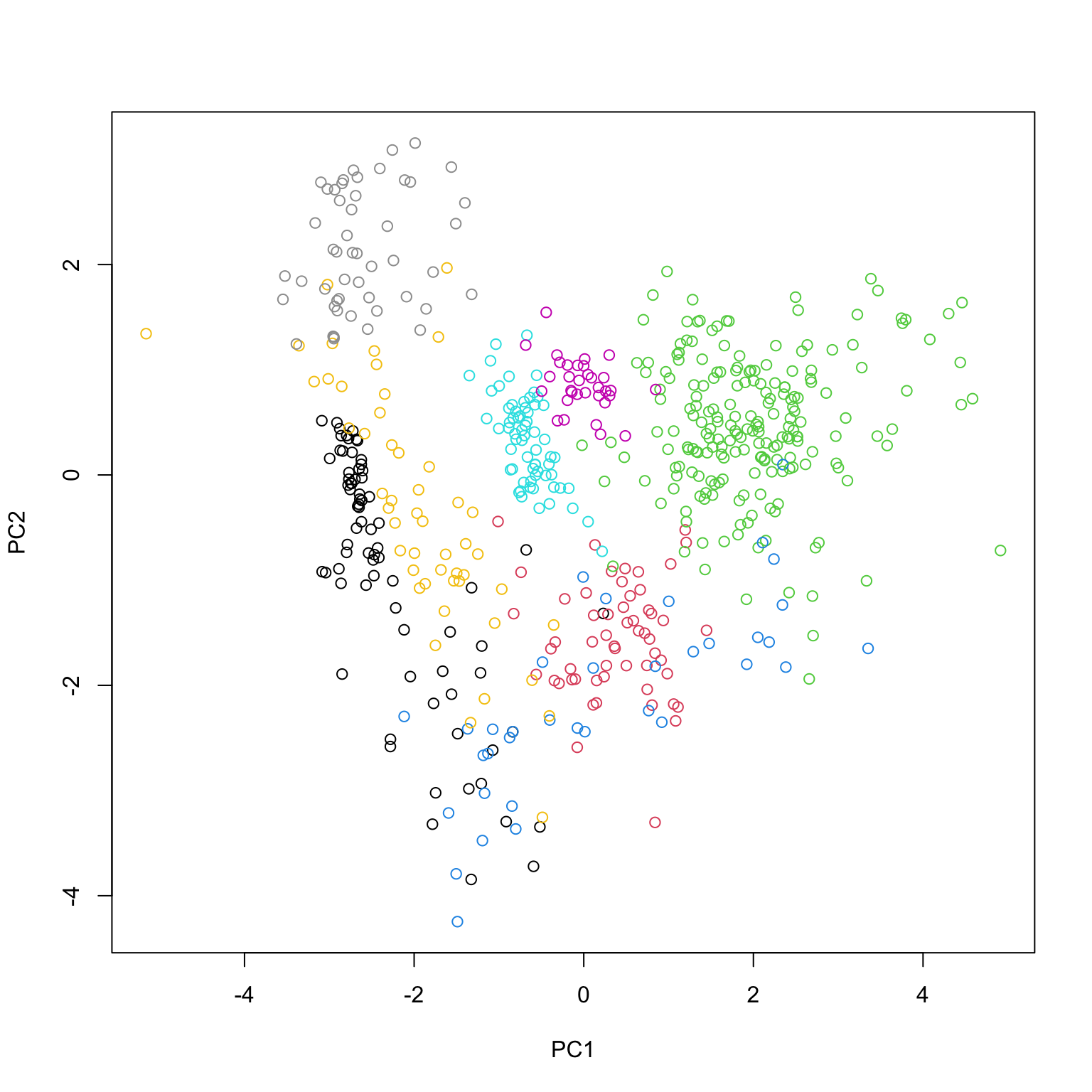

Visualization

Many applied settings use PCA simply to visualize the data.

For this, typically only the first few PCs are used. No need to really pick a rank…

Testing

Suppose we believe \(X \sim N(0, I \otimes \Sigma)\).

If we think \(\Sigma = \sigma^2 I + VV^T\) then we might want to decide if \(V=0\) or not… what rank is \(V\)?

Note: \(\text{rank}(V)\) is not the rank of \(\Sigma\)…

Do we need to pick a rank?

Modeling

The matrix \(X\) may show up later in downstream analyses using its PCs for regression. \(\implies\) can use CV to pick ranks. number of PCs.

We may want to fit a generative model to \(X\) itself: probabilistic PCA of Bishop & Tittering (1999)

Estimation

- Suppose we believe \(X \sim N(\mu, I \otimes I)\) and want to estimate \(\mu\) by hard-thresholding the singular values

\[ \hat{\mu} = \sum_i 1_{d_i > c} d_i \cdot u_i v_i^T \]

- How do we pick \(c\)?

Testing

Classical theory

Suppose \(X_{n \times p} \sim N(0, I_{n \times n} \otimes \Sigma)\)

Often (e.g. as presented in MKB) assumes \(n \to \infty\) and \(p\) essentially fixed.

Allows (with extra conditions) for MLE analysis of eigendecomposition of \(\Sigma=U\Lambda U^T\)

Notes there is ambiguity when there are ties in \(\Lambda\): variance of MLE of \(U[,i]\) depends on gaps \(\lambda_{i-1}-\lambda_i\) and \(\lambda_i - \lambda_{i+1}\)…

Could it be used for testing \(H_0:\Sigma=\sigma^2 \cdot I\) vs. \(H_a:\Sigma=\sigma^2 \cdot I + vv^T\) for \(v \in \mathbb{R}^p\)? (wlog \(\sigma^2=1\) going forward)

We know LRT here would be based on \(\log \det(\hat{\Sigma})\).

As all gaps are 0 under the null, at face value this is a context where much of \(\Sigma\) is poorly estimable…

Largest eigenvalue

- Maybe better motivated to use largest eigenvalue

\[ d_1 = \max_{u \in \mathbb{R}^p} \frac{1}{n} u'X'Xu \]

- How do eigenvalues behave under \(H_0\), particularly with \(n, p\) comparable?

Eigenvalues of large Wishart matrices

Set \(p/n=\gamma\) and consider the eigenvalues of \(X \sim N(0, I_{n \times n} \otimes I_{p \times p})\).

The population eigenvalues of \(\mathbb{E}[\Sigma]\) are all (essentially) 1…

What about the sample eigenvalues?

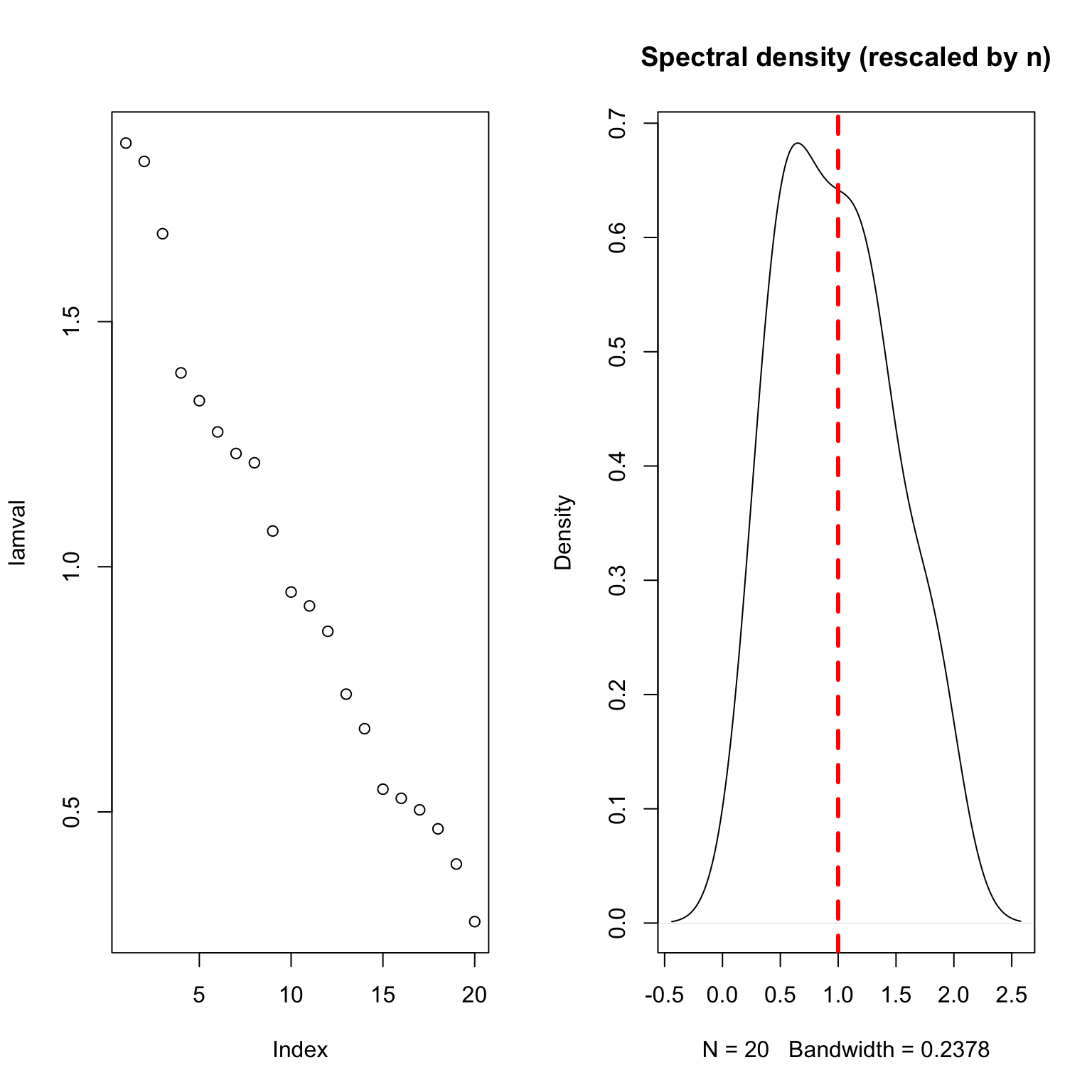

Eigenvalues of large-ish Wishart matrices \(n=80, p=20\)

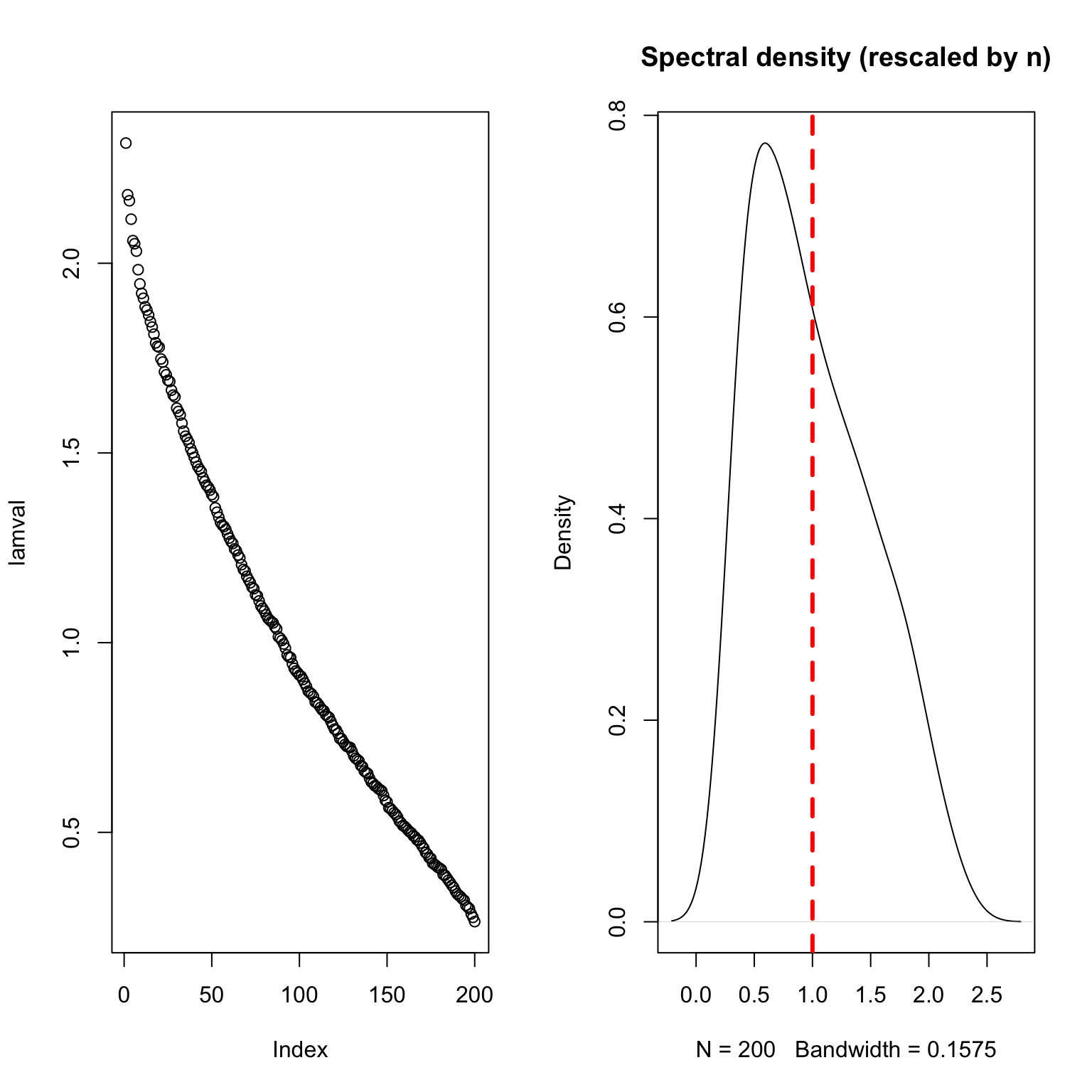

Eigenvalues of large-ish Wishart matrices \(n=800, p=200\)

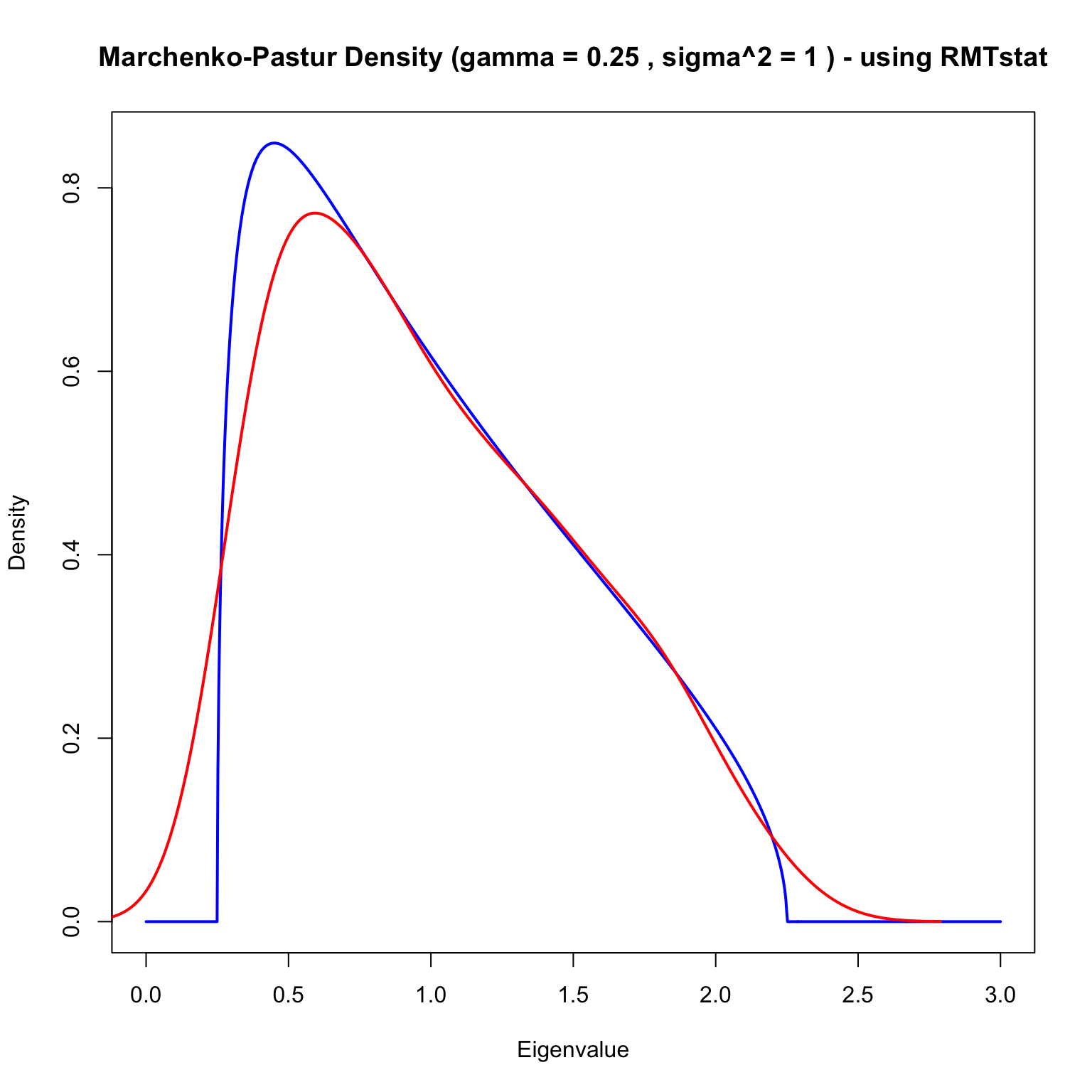

Marchenko-Pastur \(\gamma=1/4\)

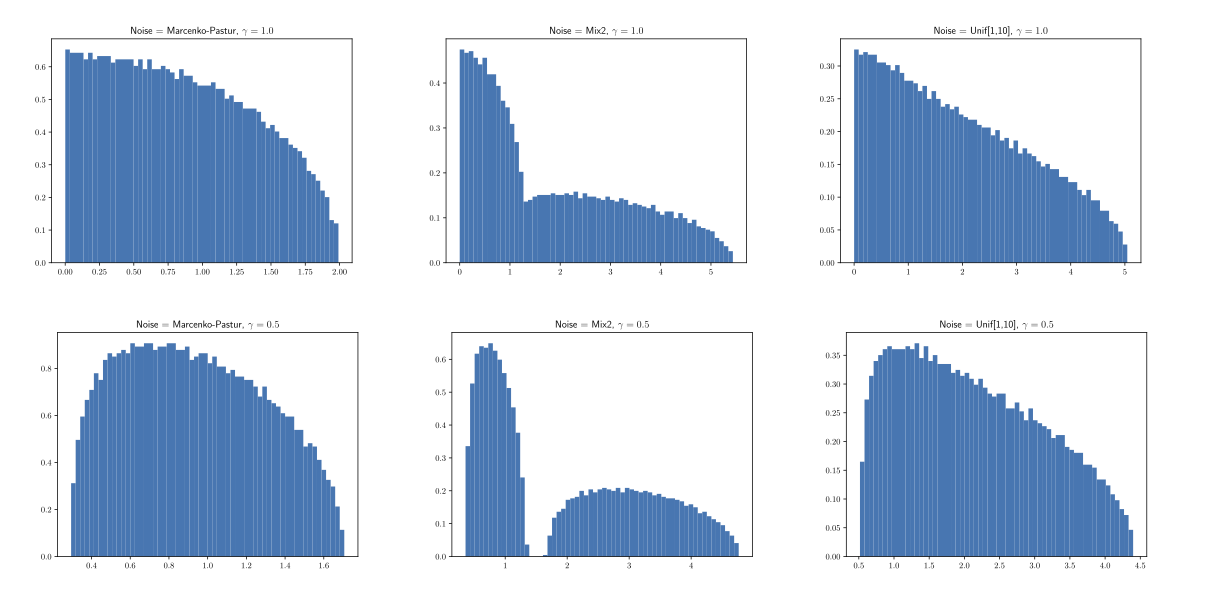

Marchenko-Pastur

- With \(W \sim \text{Wishart}(n, I_{p \times p})\) M&P derived the (limiting) density of the spectral measure

\[ \frac{1}{p} \sum_{j=1}^p \delta_{\lambda_i(W)/n} \]

- With \(\gamma=p/n\) the support is \((1 \pm \sqrt{\gamma})^2\) so if \(\gamma > 0\) then there is spread around the “population” spectrum \(\delta_1\)…

Baik-Ben Arous-Peche

- For \(H_a: \Sigma = I + vv^T\) unless \(1 + \|v\|^2 > (1 + \sqrt{\gamma})^2\) the perturbed eigenvalue will not be visible in this limiting spectrum…

Largest eigenvalue

- \(\implies\) for testing, we might look at the behavior near \((1 + \sqrt{\gamma})^2\)…

Tracy-Widom and PCA

- Johnstone (2001) derived limiting law under \(H_0\):

\[ \frac{d_1/n - \mu_{n,p}}{\sigma_{n,p}} \Rightarrow TW_1 \]

Scaling and centering \((\sigma^2=1)\)

\[ \begin{aligned} \mu_{n,p} &= \frac{\left(\sqrt{n-1} + \sqrt{p}\right)^2}{n} \\ & \approx (1 + \sqrt{\gamma})^2 \\ \sigma_{n,p} &= \frac{\left(\sqrt{n-1} + \sqrt{p}\right)}{n} \left(\frac{1}{\sqrt{n-1}} + \frac{1}{\sqrt{p}}\right)^{1/3} \\ &\approx n^{-2/3} (1 + \sqrt{\gamma})\left(1 + \frac{1}{\sqrt{\gamma}} \right)^{1/3}. \end{aligned} \]

Example

library(RMTstat)

X = matrix(rnorm(500), 50, 10)

eigvals_hat = svd(X)$d^2 / (nrow(X) - 1)

P_c = diag(rep(1, 50)) - matrix(1, 50, 50) / 50

Sigma_hat = t(X) %*% P_c %*% X / (nrow(X) - 1)

noise_level = sum(diag(Sigma_hat)) / ncol(X)

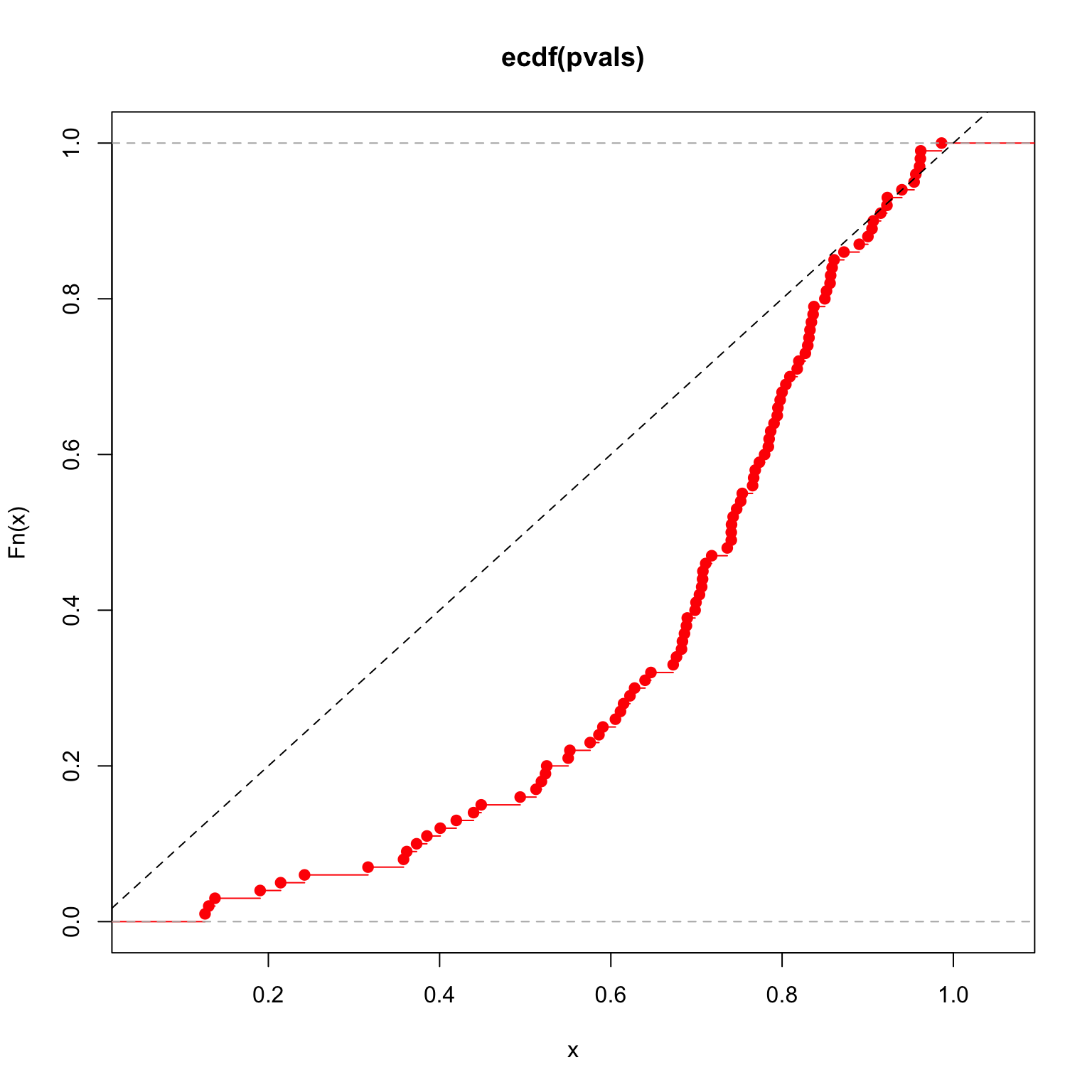

pWishartMax(eigvals_hat[1], nrow(X) - 1, ncol(X), var=noise_level, lower.tail=FALSE)[1] 0.1194141Run a few simulations \(n=50, p=10\)

Run a few simulations \(n=300, p=200\)

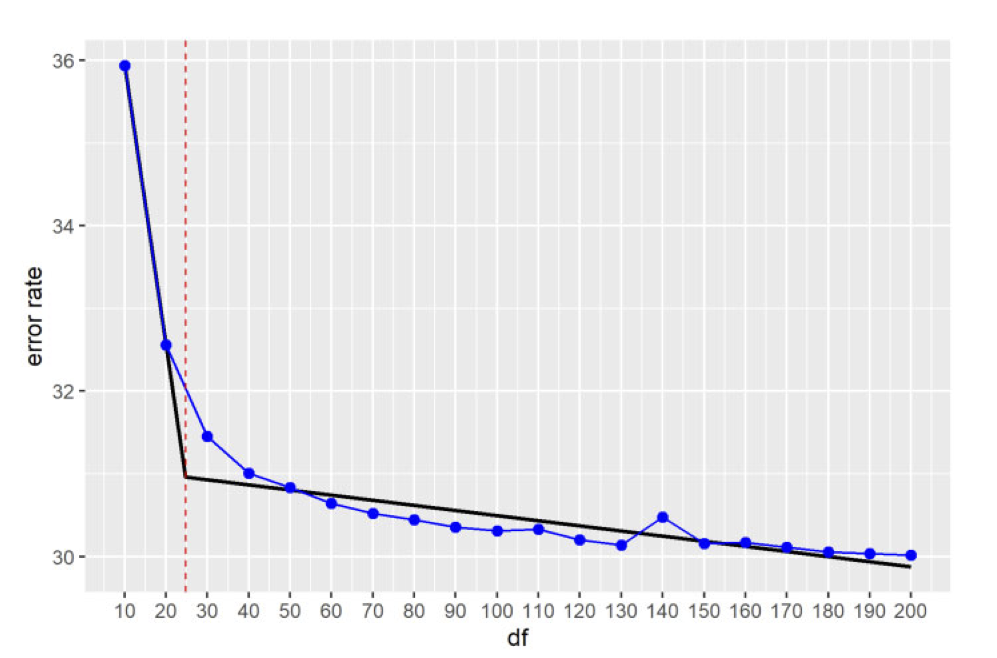

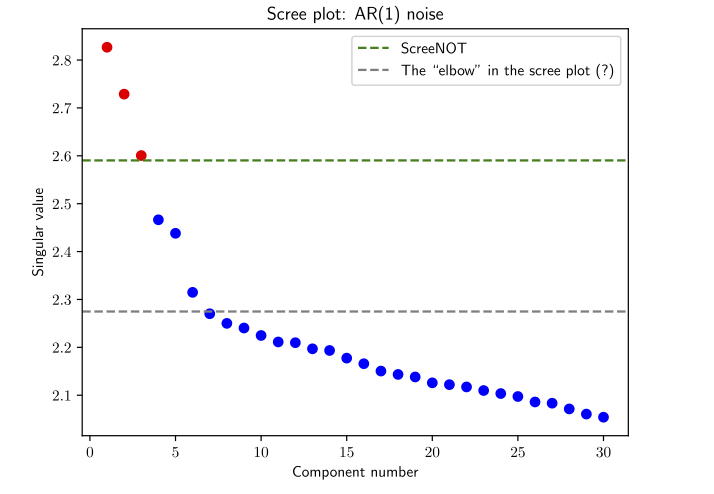

Picking a rank

Given a test statistic for the largest eigenvalue, there’s a natural “forward stepwise” procedure: starting at \(k=0\)

Compare \(d_{k+1}\) to appropriate quantile (\(1-\alpha\) ?) of Tracy-Widom (\(TW_1\)).

If larger, then \(k += 1\) and return to 1. Else stop.

Basis of pseudorank of Krichtman and Nadler (2008)

A little more refined (and involved) model Choi et al. (2017)

Estimation

A different goal…

- Suppose now \(X \sim N(\mu, I \otimes I)\) and our goal is to recover \(\mu\)

\[ \hat{\mu} = \sum_{i:d_i > c} d_i \cdot u_i v_i^T \]

- Quality is to be judged by MSE:

\[ \|\hat{\mu} - \mu\|^2_F \]

In testing context, BPP phenomenon says only large enough (properly scaled) eigenvalues will surpass the edge \((1 + \sqrt{\gamma})^2\)

Suggests hard thresholding eigenvalues at \((1 + \sqrt{\gamma})^2\): a formal elbow rule….

Optimal estimation

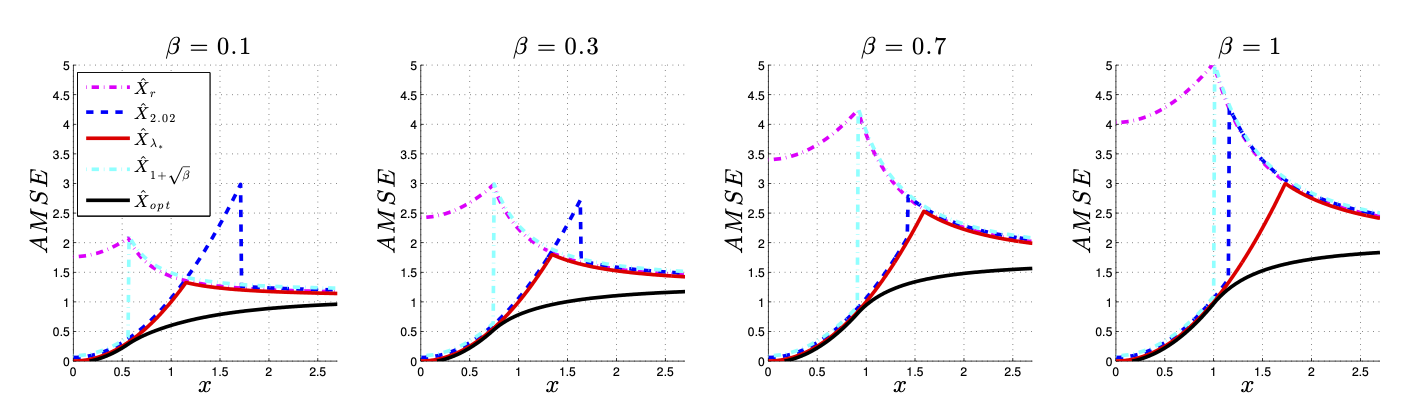

Gavish and Donoho (2013) show that this choice is not asymptotically minimax optimal one in terms of MSE even if it can be used to properly determine the rank of \(V\) in \(H_a:I + VV'\)…

This work has been extended beyond standard Wishart in ScreeNOT (2022)

Rough approach: given the limiting spectrum of “noise”, they can derive an asymptotically optimal thresholding function.

Gavish and Donoho

ScreeNOT

ScreeNOT - noise spectra

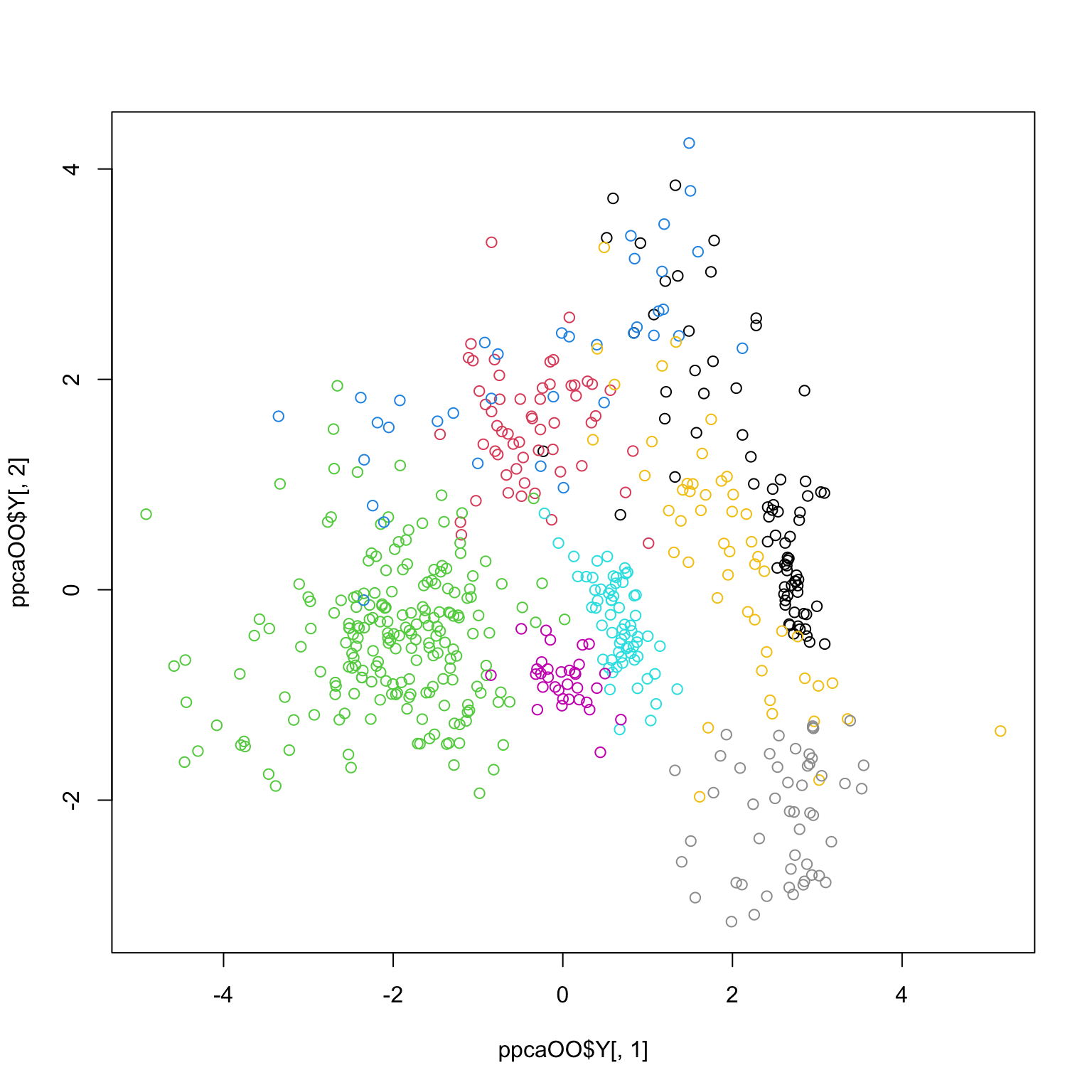

Probabilistic PCA

Probabilistic PCA model

We talked about models of the form \(I + VV'\) as alternatives in testing \(H_0:\Sigma=I\).

This model is essentially Probabilistic PCA: \(q < p\)

\[ \begin{aligned} Z & \sim N(0, I_{q \times q}) \\ X|Z &\sim N(VZ, \sigma^2 I_{p \times p}) \end{aligned} \]

- Makes assumption that the noise in \(X\) are all on the same scale… scaling \(X\) doesn’t magically fix this…

What PPCA gives us

It models a low-rank structure within the covariance, not as the the covariance.

It gives us a likelihood, this allows:

AIC / BIC / CV for rank selection

Mixtures, Bayesian modeling etc (at least in theory)

Latent scores \(Z|X=x\)

Rank \(k\) MLE \(n > p\)

Let \(\hat{\Sigma}_{MLE} = U D U'\).

Then, estimate \(\sigma^2\) as

\[ \hat{\sigma}^2 = \frac{1}{p-k} \sum_{i=k+1}^p d_i^2 \]

- To estimate \(V\), deflate by \(\sigma^2\):

\[ \hat{V} = U_k \text{diag}(d_k-\hat{\sigma}^2)^{1/2} R \]

where \(R_{k \times k}\) is an arbitrary rotation.

PPCA via EM

Complete (negative) log-likelihood

\[ \frac{np}{2} \log \sigma^2 + \sum_{i=1}^n \left[\frac{1}{2\sigma^2} \|X_i - VZ_i\|^2_2 + \frac{1}{2} \|Z_i\|^2_2 \right] \]

- Note that \(Z|X \sim N\left(V'(VV' + \sigma^2 I)^{-1} X, I - V'(VV' + \sigma^2 I)^{-1}V\right)\)

Expected value given \(X\) at \((V_{\text{cur}}, \sigma^2_{\text{cur}}) = (V_c, \sigma^2_c)\) (ignoring irrelevant terms)

\[ \frac{np}{2} \log \sigma^2 + \sum_{i=1}^n \frac{1}{2 \sigma^2} \left[\|X_i\|^2_2 - 2 \text{Tr}(V\mathbb{E}_{V_c, \sigma^2_c}[Z_i|X_i]X_i') + \text{Tr}(V'V\mathbb{E}_{V_c,\sigma^2_c}[Z_iZ_i'|X_i]) \right] \]

- Estimation of new \((V_{\text{new}}, \sigma^2_{\text{new}})\): multi response regression with covariance \(I_{n \times n} \otimes (\sigma^2 I_{p \times p})\)…

Example

[1] 0.4187987

Did we gain much here?