Rye-GPU

From FarmShare

(→software) |

|||

| (27 intermediate revisions not shown) | |||

| Line 12: | Line 12: | ||

ssh rye02.stanford.edu | ssh rye02.stanford.edu | ||

</source> | </source> | ||

| + | |||

| + | === hardware and software === | ||

| + | |||

| + | rye01 and rye02 are Intel CPU systems with following config: | ||

| + | |||

| + | rye01: | ||

| + | *8 core (2x E5620) cpu | ||

| + | *48GB ram | ||

| + | *250GB local disk | ||

| + | *6x C2070 | ||

| + | *Ubuntu 13.10 | ||

| + | *CUDA 6.0 | ||

| + | |||

| + | rye02: | ||

| + | *8 core (2x E5620) cpu | ||

| + | *48GB ram | ||

| + | *250GB local disk | ||

| + | *8x GTX 480 | ||

| + | *Ubuntu 14.04 | ||

| + | *CUDA 7.0 | ||

| + | |||

| + | FarmShare would like to extend special thanks to Jon Pilat, Brian Tempero and Margot Gerritsen for their support. | ||

| + | |||

| + | === software === | ||

| + | |||

| + | Cuda is installed along with the toolkit samples (in /usr/local/cuda) | ||

== example 1 == | == example 1 == | ||

| - | An easy first thing to try is to login, load the | + | An easy first thing to try is to login, load the cudasamples module and run deviceQuery to see what kind of cuda device it is. Then we run a matrix multiply sample that comes with the cuda toolkit. |

<source lang="sh"> | <source lang="sh"> | ||

| - | + | Welcome to Ubuntu 13.10 (GNU/Linux 3.11.0-20-generic x86_64) | |

| - | rye01.stanford.edu - Ubuntu 13. | + | Linux rye01.stanford.edu x86_64 GNU/Linux |

| + | rye01.stanford.edu - Ubuntu 13.10, amd64 | ||

8-core Xeon E5620 @ 2.40GHz (FT72-B7015, empty); 47.16GB RAM, 10GB swap | 8-core Xeon E5620 @ 2.40GHz (FT72-B7015, empty); 47.16GB RAM, 10GB swap | ||

| - | Puppet environment: rec_master; kernel 3. | + | Puppet environment: rec_master; kernel 3.11.0-20-generic (x86_64) |

--*-*- Stanford University Research Computing -*-*-- | --*-*- Stanford University Research Computing -*-*-- | ||

| Line 35: | Line 62: | ||

### | ### | ||

## | ## | ||

| - | # new to | + | # welcome to corn-new |

| - | # | + | # please report any problems to research-computing-support@stanford.edu |

| - | # https://www.stanford.edu/group/farmshare/cgi-bin/wiki/index.php/ | + | # |

| + | # new features: ubuntu 13.10, matlab2013b, matlab2014a, intel c/c++/fortran compilers, cuda 6.0 | ||

| + | # Check out https://www.stanford.edu/group/farmshare/cgi-bin/wiki/index.php/Ubuntu1310 | ||

| + | # | ||

## | ## | ||

### | ### | ||

| - | Last login: Sun | + | Last login: Sun May 11 14:31:56 2014 from c-24-130-183-161.hsd1.ca.comcast.net |

your cuda device is: | your cuda device is: | ||

| - | CUDA_VISIBLE_DEVICES= | + | CUDA_VISIBLE_DEVICES=5 |

| - | device last used: | + | device last used: Tue May 13 09:54:05 2014 |

| - | + | bishopj@rye01:~$ module load cuda cudasamples | |

| - | bishopj@rye01:~$ module load cuda | + | bishopj@rye01:~$ deviceQuery |

| - | bishopj@rye01:~$ | + | deviceQuery Starting... |

| - | + | ||

CUDA Device Query (Runtime API) version (CUDART static linking) | CUDA Device Query (Runtime API) version (CUDART static linking) | ||

| Line 57: | Line 86: | ||

Device 0: "Tesla C2070" | Device 0: "Tesla C2070" | ||

| - | CUDA Driver Version / Runtime Version | + | CUDA Driver Version / Runtime Version 6.0 / 6.0 |

CUDA Capability Major/Minor version number: 2.0 | CUDA Capability Major/Minor version number: 2.0 | ||

Total amount of global memory: 5375 MBytes (5636554752 bytes) | Total amount of global memory: 5375 MBytes (5636554752 bytes) | ||

| Line 85: | Line 114: | ||

Device has ECC support: Enabled | Device has ECC support: Enabled | ||

Device supports Unified Addressing (UVA): Yes | Device supports Unified Addressing (UVA): Yes | ||

| - | Device PCI Bus ID / PCI location ID: | + | Device PCI Bus ID / PCI location ID: 131 / 0 |

Compute Mode: | Compute Mode: | ||

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) > | < Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) > | ||

| - | deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = | + | deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 6.0, CUDA Runtime Version = 6.0, NumDevs = 1, Device0 = Tesla C2070 |

Result = PASS | Result = PASS | ||

| + | |||

| + | bishopj@rye01:~$ matrixMulCUBLAS | ||

| + | [Matrix Multiply CUBLAS] - Starting... | ||

| + | GPU Device 0: "Tesla C2070" with compute capability 2.0 | ||

| + | |||

| + | MatrixA(320,640), MatrixB(320,640), MatrixC(320,640) | ||

| + | Computing result using CUBLAS...done. | ||

| + | Performance= 492.46 GFlop/s, Time= 0.266 msec, Size= 131072000 Ops | ||

| + | Computing result using host CPU...done. | ||

| + | Comparing CUBLAS Matrix Multiply with CPU results: PASS | ||

| + | |||

</source> | </source> | ||

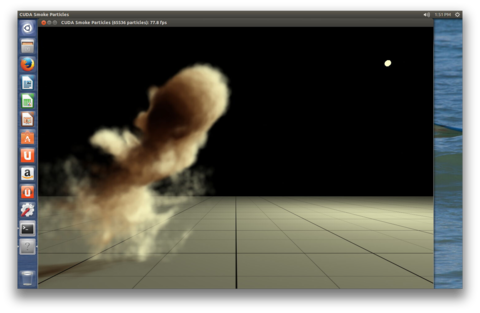

| - | == example 2 == | + | == example 2 - smokeParticles cuda sample program == |

| + | |||

| + | [[Image:smokeparticlesstill1.small.png|frame]] | ||

| + | |||

| + | * login to rye01 or rye02 | ||

| + | * run [[FarmVNC]] | ||

| + | * module load cuda cudasamples | ||

| + | * cd /usr/local/cuda/samples/bin/x86_64/linux/release | ||

| + | * smokeParticles | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | == example 3 - matlab == | ||

| + | |||

| + | We can also login, load the cuda module and run the same deviceQuery and matrix multiply sample in matlab | ||

| + | |||

| + | it has its own page, click [[MatlabGPUDemo1]] | ||

| + | |||

| + | == example 4 - R == | ||

| + | |||

| + | Example of using cuda enabled R library to do [[HierRegressions|Hierarchical Linear Regressions]] | ||

| + | |||

| + | == example 5 - PyMOL == | ||

| + | |||

| + | [[Image:pymolgiffy2.gif|frame]] | ||

| + | [[Image:pymolgiffy.gif|frame]] | ||

| - | [[ | + | * login to rye02.stanford.edu |

| + | * start a VNC session as described in [[FarmVNC]] | ||

| + | * from the menu search pymol and then click on it | ||

| + | * from file menu open /farmshare/software/examples/pymol/4GD3.pdb | ||

| + | * click the box to full-size the model and then press the down arrow to start the animation | ||

| + | * I also clicked the (from upper right) S -> surface to draw the surface version (it shows up better here) | ||

Latest revision as of 16:59, 31 May 2015


Nvidia GPU

FarmShare has GPUs on two systems, rye01 and rye02. You can use them just as you would a corn system:

ssh rye01.stanford.edu

or

ssh rye02.stanford.edu

hardware and software

rye01 and rye02 are Intel Xeon systems with the following configurations:

rye01:

- 8-core CPU (2x Xeon E5620)

- 48 GB RAM

- 250 GB local disk

- 6x Tesla C2070 GPUs

- Ubuntu 13.10

- CUDA 6.0

rye02:

- 8-core CPU (2x Xeon E5620)

- 48 GB RAM

- 250 GB local disk

- 8x GeForce GTX 480 GPUs

- Ubuntu 14.04

- CUDA 7.0
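To confirm these figures on whichever node you logged in to, a small shell sketch like the one below may help. It assumes standard Linux tools (uname, nproc, free, lsb_release) plus nvidia-smi and nvcc. Those last two are not mentioned on this page and may not be on your PATH until you run module load cuda, so every optional tool is checked first and reported as missing rather than treated as an error. The function name summarize_node is made up for this example. Note that nproc counts logical CPUs, so with Hyper-Threading enabled the two 4-core E5620s may show up as 16.

```shell
# Hypothetical helper: summarize this node's hardware and CUDA setup so it can
# be compared with the rye01/rye02 configuration listed above. Optional tools
# are checked first, so this also runs on a machine without a GPU.

summarize_node() {
    echo "Host:          $(uname -n)"
    # nproc reports logical CPUs (Hyper-Threading threads count separately)
    echo "Logical CPUs:  $(nproc)"
    if command -v free >/dev/null 2>&1; then
        # 2nd field of the "Mem:" line of `free -g` is total RAM in GiB
        echo "RAM (GiB):     $(free -g | awk '/^Mem:/ {print $2}')"
    fi
    if command -v lsb_release >/dev/null 2>&1; then
        echo "OS:            $(lsb_release -ds)"
    fi
    if command -v nvidia-smi >/dev/null 2>&1; then
        echo "GPUs (index, name, memory):"
        nvidia-smi --query-gpu=index,name,memory.total --format=csv,noheader
    else
        echo "GPUs:          nvidia-smi not found"
    fi
    if command -v nvcc >/dev/null 2>&1; then
        # the line containing "release" carries the toolkit version
        echo "CUDA toolkit:  $(nvcc --version | grep release)"
    else
        echo "CUDA toolkit:  nvcc not on PATH (try: module load cuda)"
    fi
}

summarize_node
```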

FarmShare would like to extend special thanks to Jon Pilat, Brian Tempero and Margot Gerritsen for their support.

software

CUDA is installed in /usr/local/cuda, along with the toolkit samples.
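The transcripts on this page set up the environment with module load cuda. If you ever need to point a shell at the toolkit in /usr/local/cuda by hand, a sketch along these lines should work when sourced into your current shell. It assumes the usual toolkit layout, with executables in bin/ and libraries in lib64/. prepend_path is a hypothetical helper and is not part of FarmShare or CUDA.

```shell
# Sketch: put the CUDA toolkit on PATH and LD_LIBRARY_PATH without the module
# system. Assumes the standard bin/ and lib64/ layout under CUDA_HOME.
CUDA_HOME=${CUDA_HOME:-/usr/local/cuda}

# prepend_path VAR DIR (hypothetical helper): put DIR at the front of the
# colon-separated variable VAR unless DIR is already listed, then export VAR.
# Uses a scratch variable named "current".
prepend_path() {
    eval "current=\${$1:-}"
    case ":$current:" in
        *":$2:"*) ;;  # already present: leave VAR unchanged
        *) eval "$1=\"\$2\${current:+:\$current}\"" ;;
    esac
    export "$1"
}

prepend_path PATH "$CUDA_HOME/bin"
prepend_path LD_LIBRARY_PATH "$CUDA_HOME/lib64"

# nvcc should now resolve to $CUDA_HOME/bin/nvcc if the toolkit is installed
command -v nvcc || echo "nvcc not found under $CUDA_HOME/bin"
```

Keeping the duplicate check in prepend_path means sourcing the snippet twice does not keep growing PATH. On rye01 and rye02, module load cuda remains the route shown on this page.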

example 1

An easy first thing to try is to log in, load the cuda and cudasamples modules, and run deviceQuery to see what kind of CUDA device you have been assigned. Then run matrixMulCUBLAS, a matrix multiply sample that comes with the CUDA toolkit:

Welcome to Ubuntu 13.10 (GNU/Linux 3.11.0-20-generic x86_64)
Linux rye01.stanford.edu x86_64 GNU/Linux
rye01.stanford.edu - Ubuntu 13.10, amd64
8-core Xeon E5620 @ 2.40GHz (FT72-B7015, empty); 47.16GB RAM, 10GB swap
Puppet environment: rec_master; kernel 3.11.0-20-generic (x86_64)
--*-*- Stanford University Research Computing -*-*--
http://farmshare.stanford.edu
###
##
# welcome to corn-new
# please report any problems to research-computing-support@stanford.edu
#
# new features: ubuntu 13.10, matlab2013b, matlab2014a, intel c/c++/fortran compilers, cuda 6.0
# Check out https://www.stanford.edu/group/farmshare/cgi-bin/wiki/index.php/Ubuntu1310
#
##
###
Last login: Sun May 11 14:31:56 2014 from c-24-130-183-161.hsd1.ca.comcast.net
your cuda device is:
CUDA_VISIBLE_DEVICES=5
device last used: Tue May 13 09:54:05 2014
bishopj@rye01:~$ module load cuda cudasamples
bishopj@rye01:~$ deviceQuery
deviceQuery Starting...

 CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "Tesla C2070"
  CUDA Driver Version / Runtime Version          6.0 / 6.0
  CUDA Capability Major/Minor version number:    2.0
  Total amount of global memory:                 5375 MBytes (5636554752 bytes)
  (14) Multiprocessors, ( 32) CUDA Cores/MP:     448 CUDA Cores
  GPU Clock rate:                                1147 MHz (1.15 GHz)
  Memory Clock rate:                             1494 Mhz
  Memory Bus Width:                              384-bit
  L2 Cache Size:                                 786432 bytes
  Maximum Texture Dimension Size (x,y,z)         1D=(65536), 2D=(65536, 65535), 3D=(2048, 2048, 2048)
  Maximum Layered 1D Texture Size, (num) layers  1D=(16384), 2048 layers
  Maximum Layered 2D Texture Size, (num) layers  2D=(16384, 16384), 2048 layers
  Total amount of constant memory:               65536 bytes
  Total amount of shared memory per block:       49152 bytes
  Total number of registers available per block: 32768
  Warp size:                                     32
  Maximum number of threads per multiprocessor:  1536
  Maximum number of threads per block:           1024
  Max dimension size of a thread block (x,y,z): (1024, 1024, 64)
  Max dimension size of a grid size (x,y,z):    (65535, 65535, 65535)
  Maximum memory pitch:                          2147483647 bytes
  Texture alignment:                             512 bytes
  Concurrent copy and kernel execution:          Yes with 2 copy engine(s)
  Run time limit on kernels:                     No
  Integrated GPU sharing Host Memory:            No
  Support host page-locked memory mapping:       Yes
  Alignment requirement for Surfaces:            Yes
  Device has ECC support:                        Enabled
  Device supports Unified Addressing (UVA):      Yes
  Device PCI Bus ID / PCI location ID:           131 / 0
  Compute Mode:
     < Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 6.0, CUDA Runtime Version = 6.0, NumDevs = 1, Device0 = Tesla C2070
Result = PASS

bishopj@rye01:~$ matrixMulCUBLAS
[Matrix Multiply CUBLAS] - Starting...
GPU Device 0: "Tesla C2070" with compute capability 2.0

MatrixA(320,640), MatrixB(320,640), MatrixC(320,640)
Computing result using CUBLAS...done.
Performance= 492.46 GFlop/s, Time= 0.266 msec, Size= 131072000 Ops
Computing result using host CPU...done.
Comparing CUBLAS Matrix Multiply with CPU results: PASS
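Two details in this transcript are worth checking from a script. First, the login message assigns you a GPU through CUDA_VISIBLE_DEVICES (5 here), and CUDA renumbers the visible GPUs starting from 0. That is why deviceQuery reports a single device, Device 0. Second, the matrixMulCUBLAS performance figure is Size divided by Time: 131072000 Ops / 0.266 ms is about 493 GFlop/s, which matches the printed 492.46 GFlop/s once you allow for the rounding of the time. The shell sketch below extracts the device name and the PASS/FAIL result from deviceQuery and recomputes GFlop/s from a Performance line. The function names are hypothetical, and the parsing assumes the output format shown above.

```shell
# Sketch: check deviceQuery and matrixMulCUBLAS output from a script.
# Function names are hypothetical; parsing assumes the output format above.

# device_name: print the quoted name from the first 'Device 0: "..."' line
device_name() {
    sed -n 's/^ *Device 0: "\([^"]*\)".*/\1/p' | head -n 1
}

# query_passed: succeed only if the input contains the line "Result = PASS"
query_passed() {
    grep -q '^Result = PASS$'
}

# recompute_gflops: for a matrixMulCUBLAS line such as
#   Performance= 492.46 GFlop/s, Time= 0.266 msec, Size= 131072000 Ops
# print Size / Time in GFlop/s. Splitting on "=" and "," puts the time (msec)
# in field 4 and the operation count in field 6.
recompute_gflops() {
    awk -F'[=,]' '/^Performance=/ { printf "%.1f\n", $6 / ($4 * 1e-3) / 1e9 }'
}

# Run the real programs only if they are on PATH (module load cuda cudasamples)
if command -v deviceQuery >/dev/null 2>&1; then
    echo "assigned GPU: CUDA_VISIBLE_DEVICES=${CUDA_VISIBLE_DEVICES:-unset}"
    out=$(deviceQuery)
    echo "seen by CUDA as Device 0: $(printf '%s\n' "$out" | device_name)"
    if printf '%s\n' "$out" | query_passed; then
        echo "deviceQuery: PASS"
    else
        echo "deviceQuery: no PASS line found"
    fi
fi
if command -v matrixMulCUBLAS >/dev/null 2>&1; then
    echo "recomputed GFlop/s: $(matrixMulCUBLAS | recompute_gflops)"
fi
```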

example 2 - smokeParticles CUDA sample program

- log in to rye01 or rye02

- start a VNC session as described in FarmVNC

- module load cuda cudasamples

- cd /usr/local/cuda/samples/bin/x86_64/linux/release

- smokeParticles
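The steps above can be collected into a single shell function, sketched below. You would define it and then call it from a terminal inside the VNC desktop. smokeParticles opens an OpenGL window, so the sketch refuses to start when DISPLAY is unset, which usually means you are not inside a graphical session. The name run_smoke_particles is hypothetical. The sketch also assumes the module command is available in your interactive shell, as it is in the transcripts on this page.

```shell
# Sketch of example 2 as one function (run_smoke_particles is a hypothetical
# name). Define it in your shell inside a FarmVNC session, then call it.
SAMPLES_BIN=/usr/local/cuda/samples/bin/x86_64/linux/release

# The body is wrapped in ( ) so the cd runs in a subshell and the caller's
# working directory is left unchanged; "exit" here only leaves that subshell.
run_smoke_particles() (
    # smokeParticles needs an X display for its OpenGL window
    if [ -z "${DISPLAY:-}" ]; then
        echo "DISPLAY is not set: start a VNC session (see FarmVNC) first" >&2
        exit 1
    fi
    module load cuda cudasamples || exit 1
    cd "$SAMPLES_BIN" || exit 1
    ./smokeParticles
)
```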

example 3 - MATLAB

You can also log in, load the cuda module, and run the same deviceQuery and matrix multiply sample in MATLAB.

This example has its own page: MatlabGPUDemo1
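For a quick check from the command line, without running the full demo, MATLAB can be started without its desktop and asked which GPU it is using. This sketch assumes that matlab is on your PATH, that you have already loaded the cuda module, and that the Parallel Computing Toolbox (which provides gpuDevice) is installed and licensed. matlab_gpu_name is a hypothetical helper name.

```shell
# Sketch (matlab_gpu_name is a hypothetical name): print the name of the GPU
# MATLAB selects, using gpuDevice from the Parallel Computing Toolbox.
matlab_gpu_name() {
    if ! command -v matlab >/dev/null 2>&1; then
        echo "matlab not found on PATH" >&2
        return 1
    fi
    # -nodisplay/-nosplash: no desktop; -r: run the statements, then exit.
    # try/catch makes sure MATLAB still exits if no GPU is usable.
    matlab -nodisplay -nosplash -r \
        "try, d = gpuDevice; disp(d.Name); catch err, disp(err.message); end; exit"
}
```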

example 4 - R

This example uses a CUDA-enabled R library to fit hierarchical linear regressions; see Hierarchical Linear Regressions.

example 5 - PyMOL

- log in to rye02.stanford.edu

- start a VNC session as described in FarmVNC

- from the desktop menu, search for pymol and click on it to launch it

- from the File menu, open /farmshare/software/examples/pymol/4GD3.pdb

- click the box to make the model full size, then press the down arrow key to start the animation

- I also clicked S -> surface (at the upper right) to draw the surface representation, which shows up better in the animation
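If you would rather use a terminal inside the VNC session than the desktop menu, PyMOL can also be started with the example file passed on the command line, which opens the file directly. The sketch assumes a pymol launcher is on your PATH on rye02, since the steps above only show PyMOL being found through the desktop menu. open_example_in_pymol is a hypothetical name.

```shell
# Sketch (open_example_in_pymol is a hypothetical name): open the example
# structure from a terminal in a VNC session instead of through the menus.
PDB_FILE=/farmshare/software/examples/pymol/4GD3.pdb

open_example_in_pymol() {
    if [ -z "${DISPLAY:-}" ]; then
        echo "DISPLAY is not set: start a VNC session (see FarmVNC) first" >&2
        return 1
    fi
    if [ ! -r "$PDB_FILE" ]; then
        echo "cannot read $PDB_FILE" >&2
        return 1
    fi
    if ! command -v pymol >/dev/null 2>&1; then
        echo "pymol not found on PATH" >&2
        return 1
    fi
    # PyMOL loads files given as command-line arguments
    pymol "$PDB_FILE"
}
```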