
I am a PhD candidate in the Department of Computer Science at Stanford University, advised by Prof. Daniel Yamins in the Stanford Neuroscience and AI Lab. Before joining Stanford, I was a visiting research assistant at the Center for Brains, Minds and Machines (CBMM) at MIT, advised by Prof. Tomaso Poggio. I received my B.S. in Mathematics of Computation from the University of California, Los Angeles, advised by Prof. Demetri Terzopoulos.


Selected Research

I am interested in vision foundation models, world modeling, self-supervised learning from large-scale video data, and physical scene understanding.


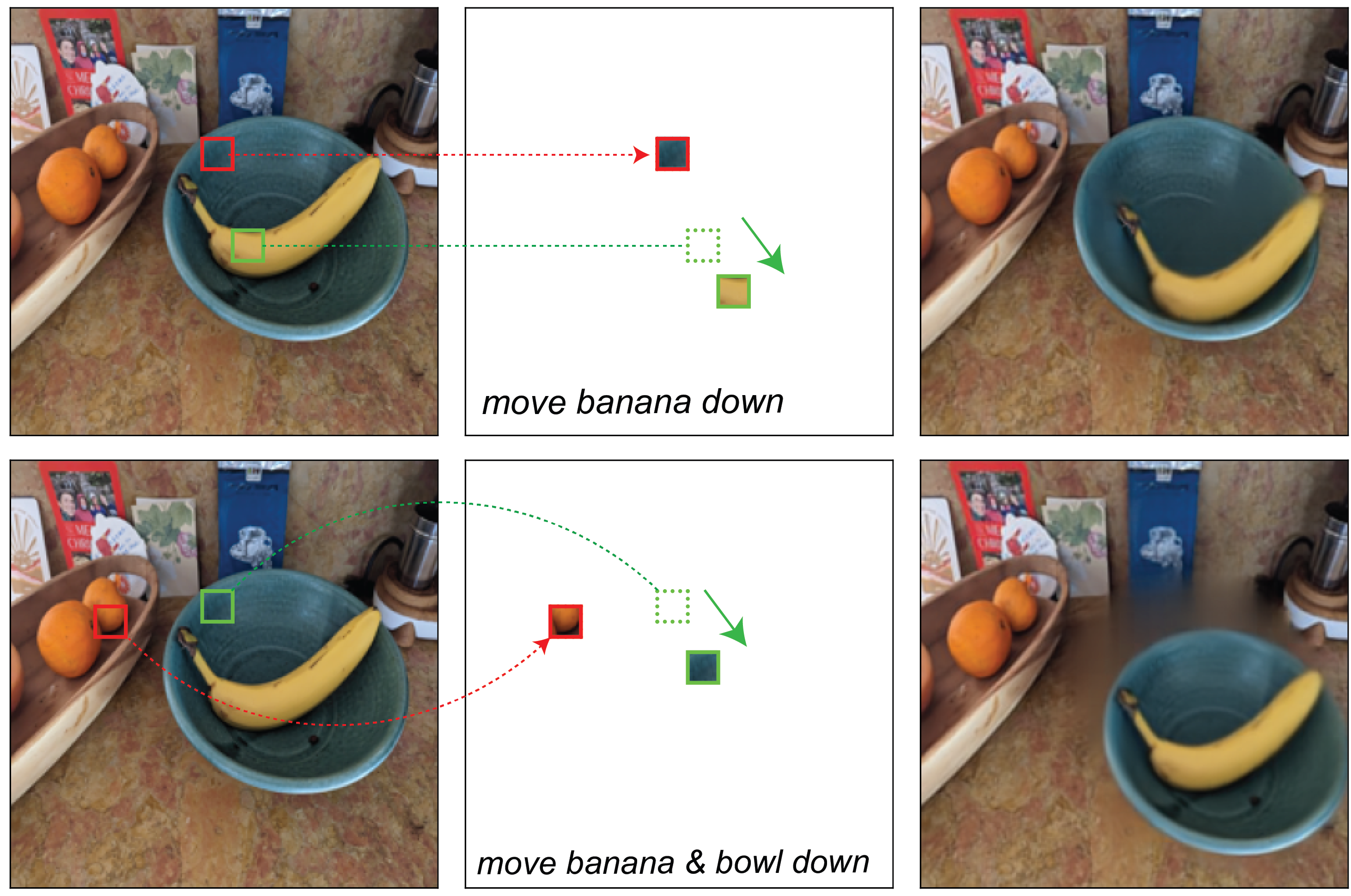

Counterfactual World Modeling for Physical Dynamics Understanding

Rahul Venkatesh*, Honglin Chen*, Kevin Feigelis*, Khaled Jedoui, Klemen Kotar, Felix Binder, Wanhee Lee, Sherry Liu, Kevin Smith, Judith Fan, Daniel Yamins (* equal contribution)

ECCV 2024 / Paper / Website

We propose a simple and scalable framework for learning world models from large-scale Internet videos. The pre-trained models support patch-level prompting for scene manipulation and zero-shot extraction of visual structures such as optical flow and segmentation.


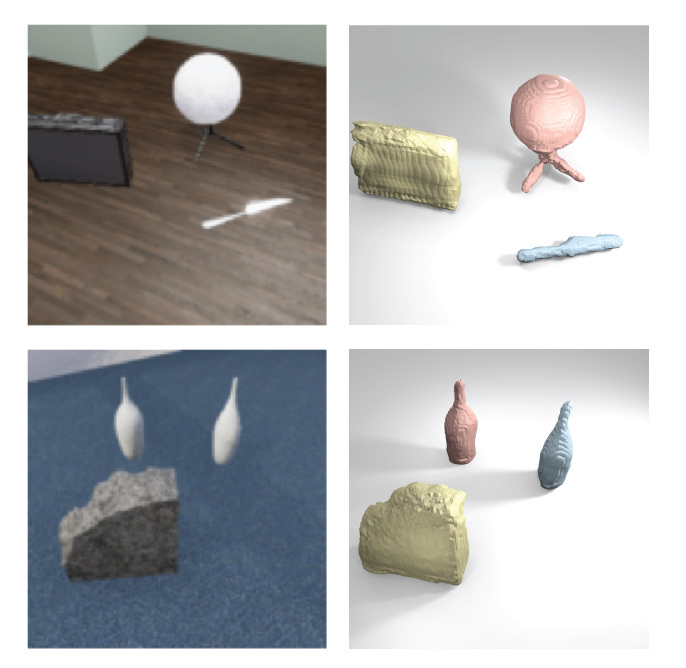

Unsupervised 3D Scene Representation Learning via Movable Object Inference

Honglin Chen*, Wanhee Lee*, Koven Hong-Xing Yu, Rahul Venkatesh, Joshua Tenenbaum, Daniel Bear, Jiajun Wu, Daniel Yamins (* equal contribution)

TMLR 2023 / Paper

We propose a 3D object-centric representation learning method that scales to complex scenes with diverse object categories. We lift a 2D movable object inference module, which can be pretrained on monocular videos without supervision, for downstream 3D representation learning.


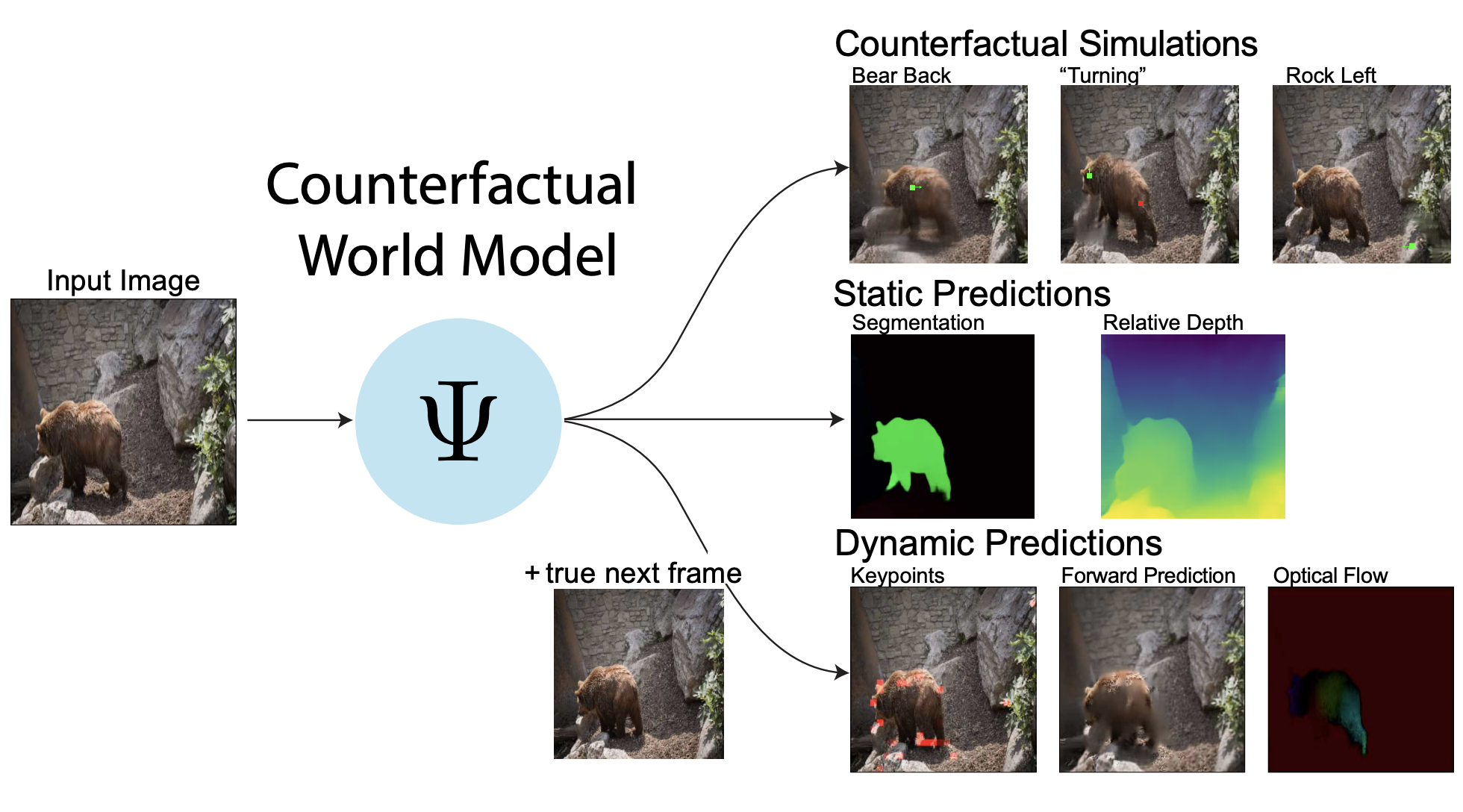

Unifying (Machine) Vision via Counterfactual World Modeling

Daniel Bear, Kevin Feigelis, Honglin Chen, Wanhee Lee, Rahul Venkatesh, Klemen Kotar, Alex Durango, Daniel Yamins

Theory paper / Paper / Github

We propose a unified, unsupervised network that can be prompted to perform a wide variety of visual computations.


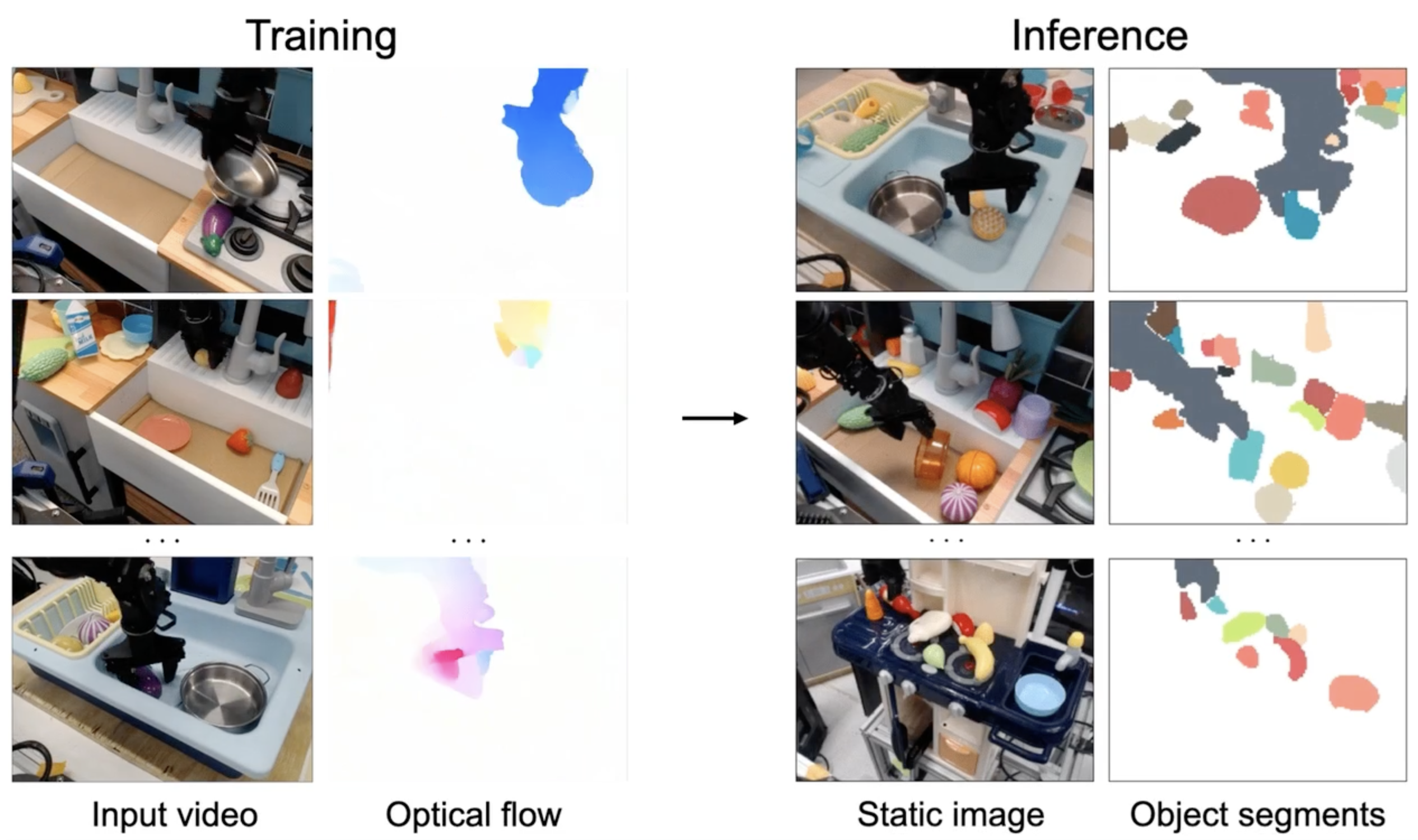

Unsupervised Segmentation in Real-World Images via Spelke Object Inference

Honglin Chen, Rahul Venkatesh, Yoni Friedman, Jiajun Wu, Joshua Tenenbaum, Daniel Yamins*, Daniel Bear*

ECCV 2022 / Project page / Video / Paper / Github (Oral Presentation, top 2.7%)

We introduce an unsupervised method for perceptually grouping objects in static images by predicting which parts of a scene would move as cohesive wholes.


Biologically-plausible learning algorithms can scale to large datasets

Will Xiao, Honglin Chen, Qianli Liao, Tomaso Poggio

ICLR 2019 / Paper / Github

We show that biologically plausible learning algorithms, particularly sign-symmetry, work well on ImageNet.

Past Research Topics

In the past, I worked on biomimetic perception and biomechanical simulation in graphics, as well as neuronal dynamics simulation on neuromorphic hardware.


PDEs on graphs for semi-supervised learning in first-person activity recognition in body-worn video

Hao Li, Honglin Chen, Matt Haberland, Andrea L. Bertozzi, P. Jeffrey Brantingham

Discrete and Continuous Dynamical Systems, 2021 / Paper

We propose a PDE-based graph semi-supervised learning method for classifying body-worn video, where labeled data is scarce because of the sensitivity of the footage.


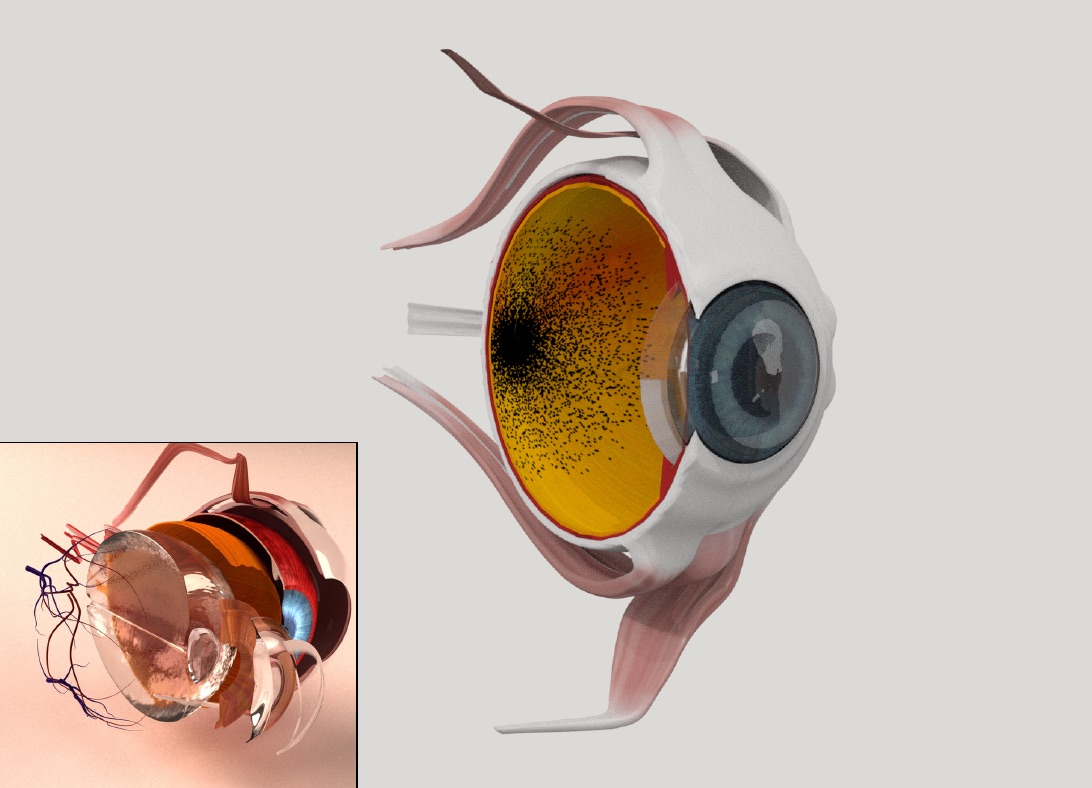

Biomimetic Eye Modeling & Deep Neuromuscular Oculomotor Control

Masaki Nakada, Arjun Lakshmipathy, Honglin Chen, Nina Ling, Tao Zhou, Demetri Terzopoulos

ACM Transactions on Graphics, 2019 / Paper / Video

We present a novel biomimetic model of the eye for realistic virtual human animation, along with a deep learning approach to oculomotor control that is compatible with our biomechanical eye model.


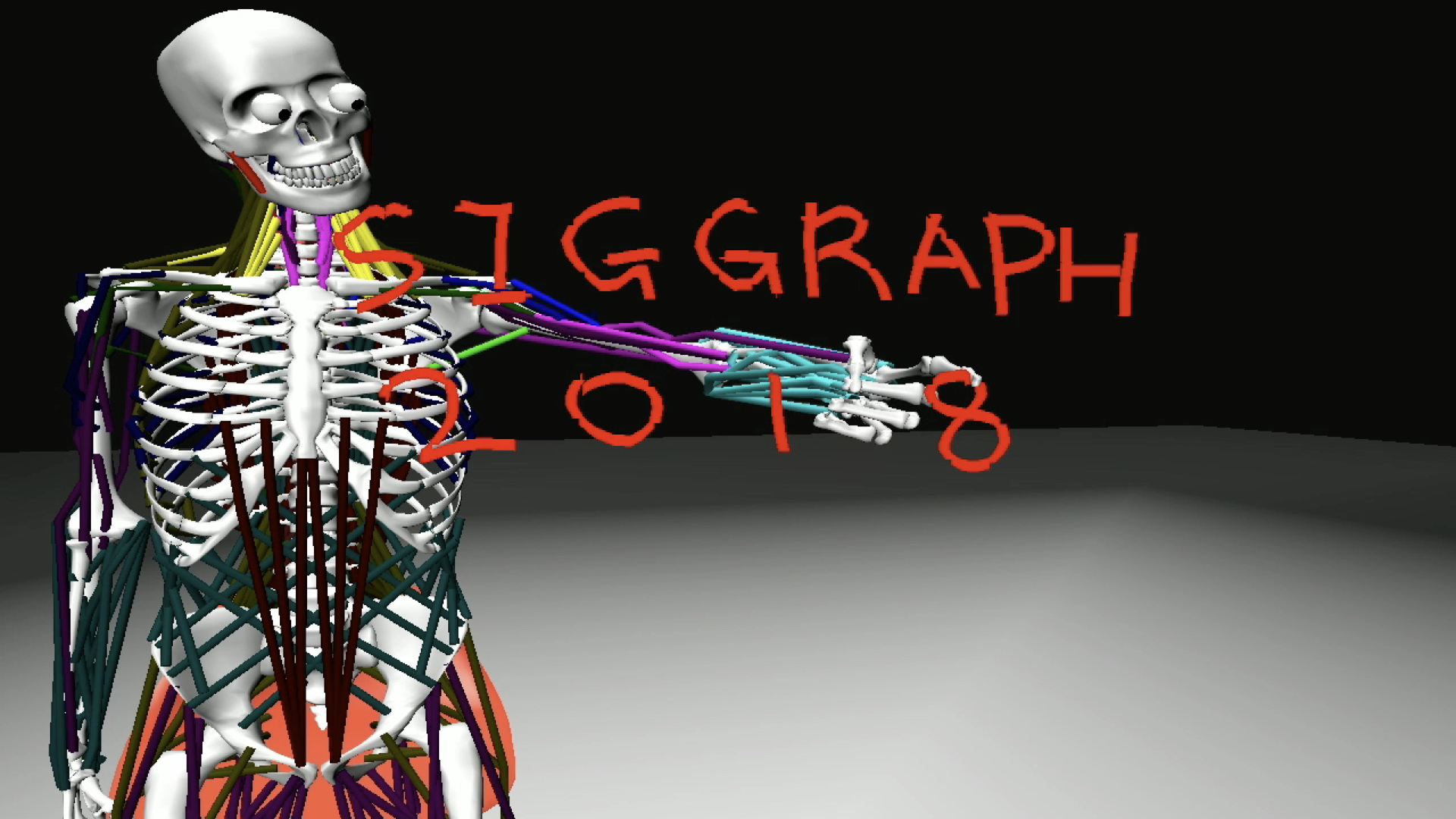

Deep learning of biomimetic sensorimotor control for biomechanical human animation

Masaki Nakada, Tao Zhou, Honglin Chen, Tomer Weiss, Demetri Terzopoulos

ACM Transactions on Graphics, 2018 / Paper / Video

We introduce a biomimetic framework for human sensorimotor control, which features a biomechanically simulated human musculoskeletal model.


Biomimetic Perception Learning for Human Sensorimotor Control

Masaki Nakada, Honglin Chen, Demetri Terzopoulos

Proceedings of the International Symposium on Visual Computing, 2018 / Paper

We present a simulation framework for biomimetic human perception, which demonstrates voluntary foveation and visual pursuit of target objects coupled with visually guided reaching actions to intercept the moving targets.


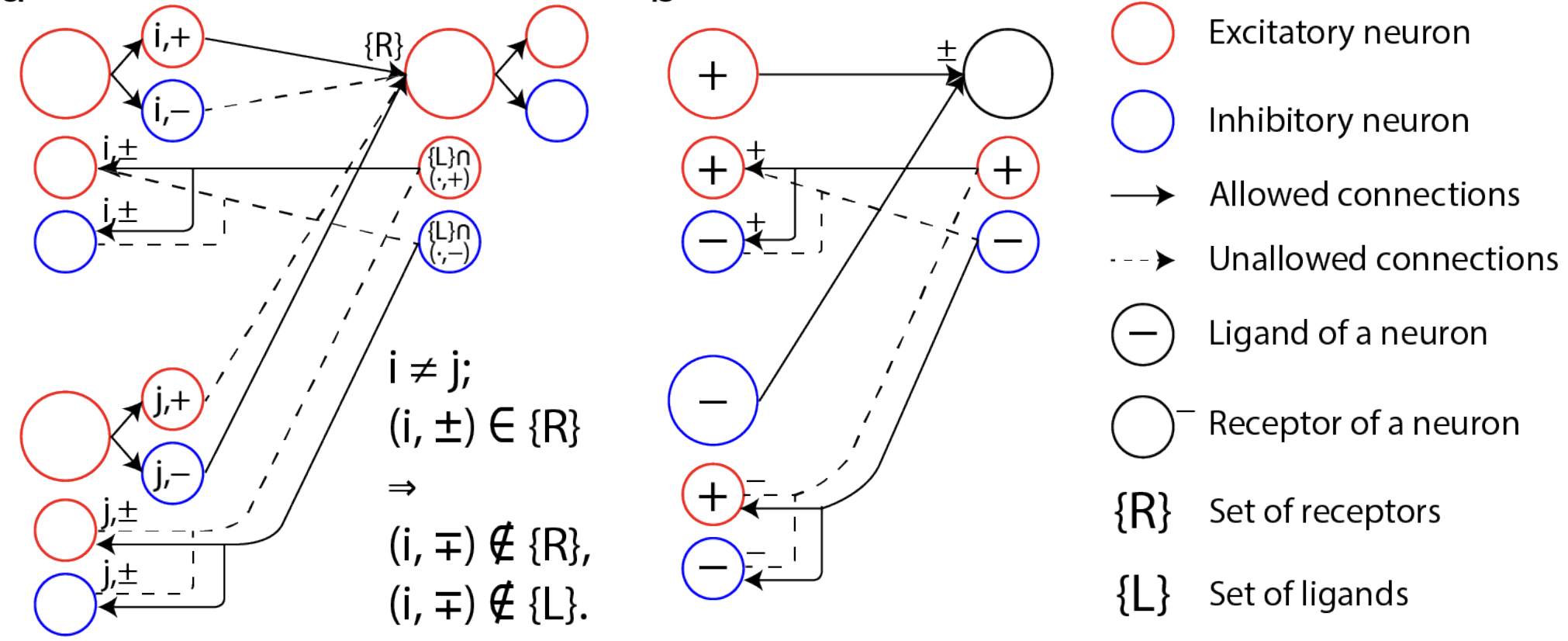

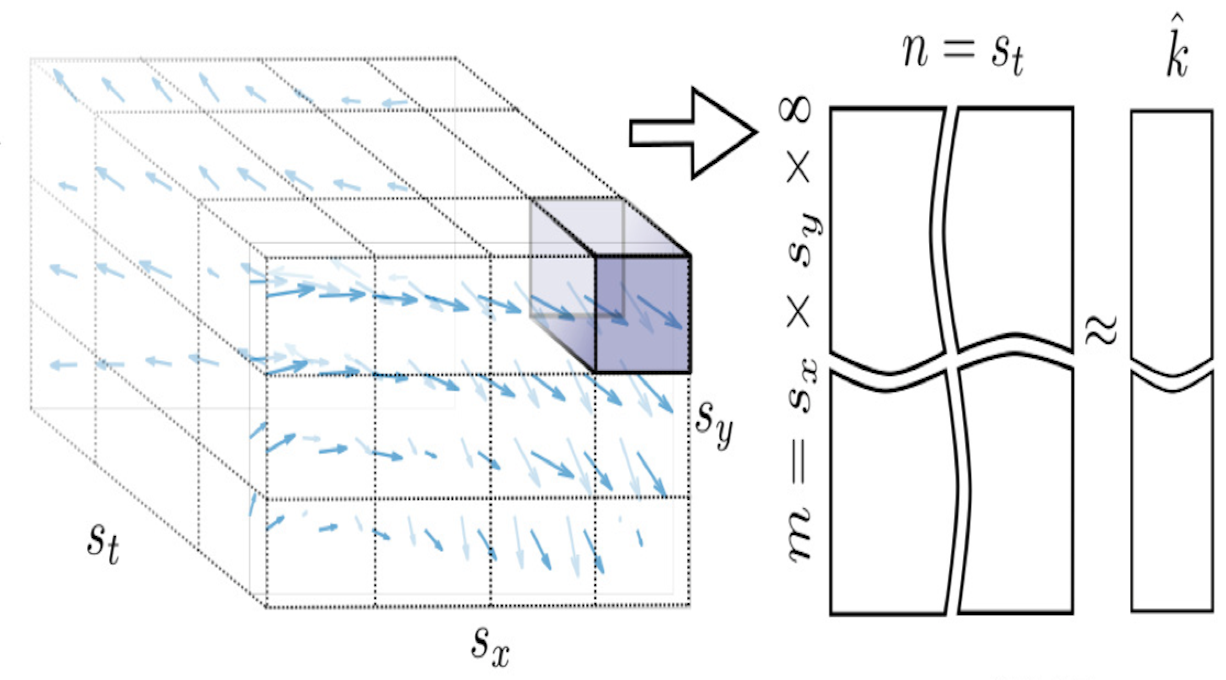

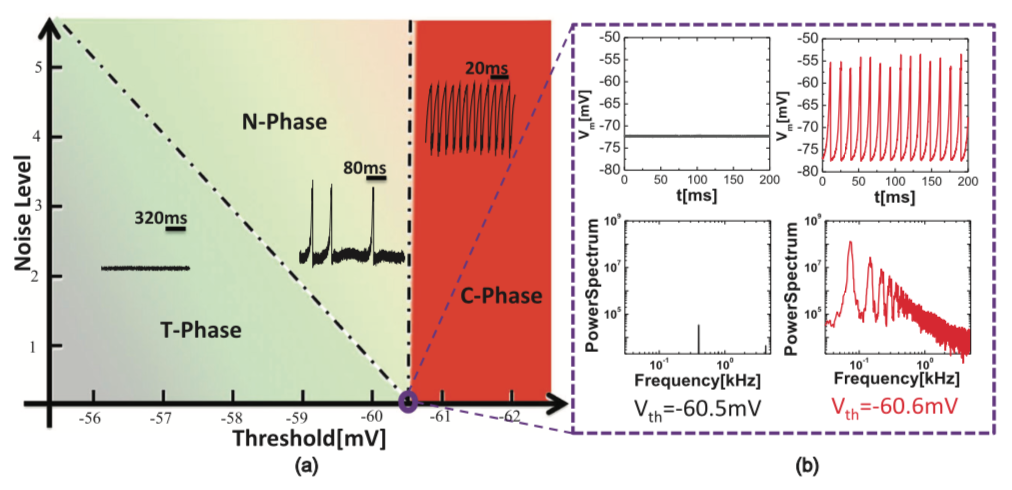

A Basic Phase Diagram of Neuronal Dynamics

Wenyuan Li, Igor V. Ovchinnikov, Honglin Chen, Zhe Wang, Albert Lee, Houchul Lee, Carlos Cepeda, Robert N. Schwartz, Karlheinz Meier, Kang L. Wang

Neural Computation, 2018 / Paper

We study the criticality hypothesis in neuronal dynamics and demonstrate that a noise-induced chaotic phase grows with the noise intensity.

Teaching


CS 131 Computer Vision: Foundations and Applications, Stanford, Winter 2024

CS 236 Deep Generative Models, Stanford, Autumn 2023

CS 375 Large-Scale Neural Network Models for Neuroscience, Stanford, Spring 2023