I'm currently a research scientist in the Shah Lab at Stanford University. Previously I was a CS postdoc in Stanford's Mobilize Center, mentored by Chris Ré and Scott Delp.

I'm interested in tools and methods that enable domain experts to rapidly build and modify machine learning models. I'm most passionate about medical applications of machine learning, where obtaining large-scale, expert-labeled training data is a significant challenge. My research focuses on weakly supervised machine learning, training foundation models for medicine, and methods for data-centric AI.

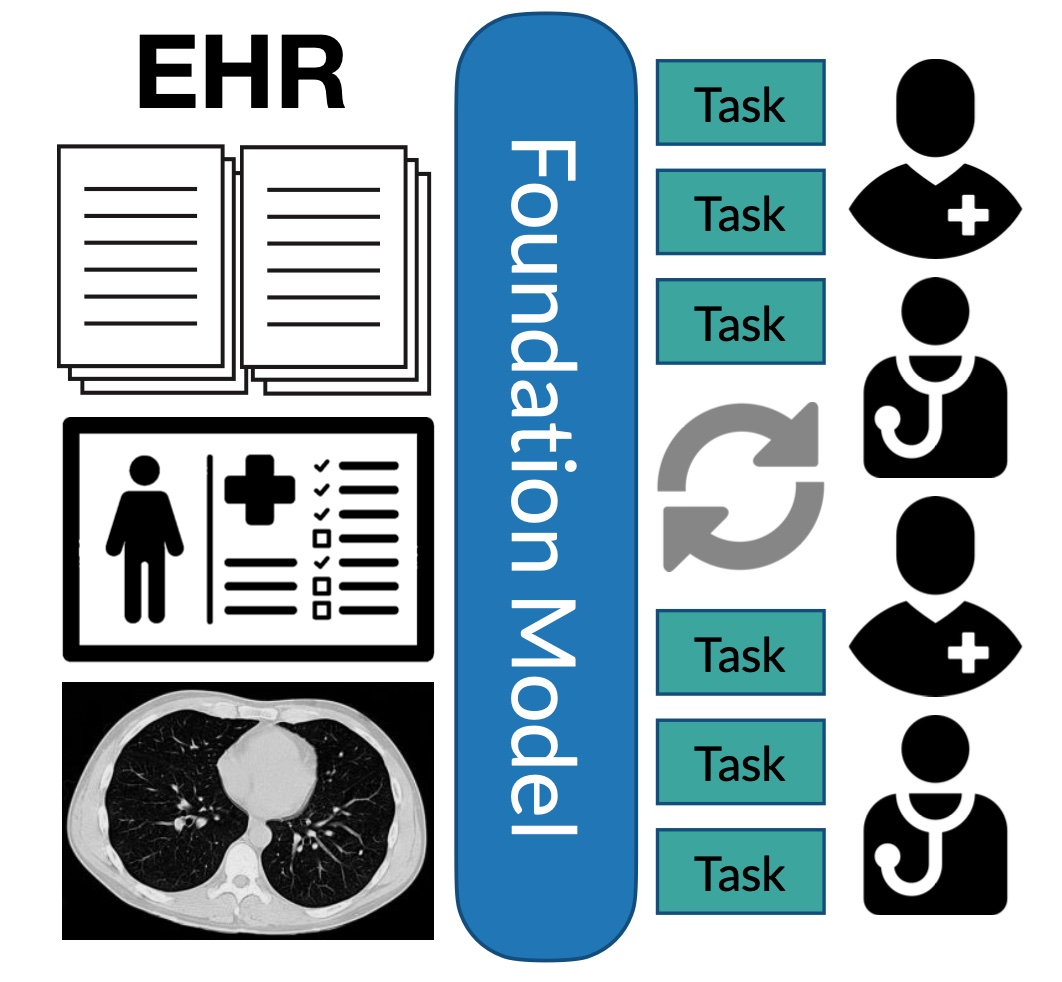

Healthcare Foundation Models: Electronic health records (EHR) capture complex, heterogeneous data about patient health, including structured medical codes, unstructured patient notes, imaging, and more. EHR foundation models offer a promising path towards combining these data streams to improve model performance and adaptability, and to facilitate novel approaches for clinician-in-the-loop AI systems.

Large Language Models: The BigScience 2021 Workshop: The Summer of Language Models is an exciting international collaboration aimed at training a very large and open language model for the research community. I'm co-chair of the biomedical working group and a participant in the modeling working group. We are currently putting together a collection of over 150 expert-labeled biomedical datasets tailored for use in large-scale language modeling.

Weak Supervision: I am a co-developer of and contributor to Stanford's weak supervision framework Snorkel. I have papers on weakly supervised biomedical concept tagging, machine reading in the electronic health record (EHR), and classifying rare aortic valve diseases in cardiac MRI videos from the UK Biobank, an open, population-scale health dataset.