This research page is no longer updated. Please visit the website of the Stanford Computational Imaging Group for more information on our latest research: www.computationalimaging.org

2014

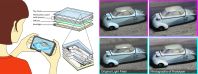

Eyeglasses-free Display: Towards Correcting Visual Aberrations with Computational Light Field Displays

Millions of people worldwide need glasses or contact lenses to see or read properly. We introduce a computational display technology that predistorts the presented content for an observer, so that the target image is perceived without the need for eyewear. By designing optics in concert with prefiltering algorithms, the proposed display architecture achieves significantly higher resolution and contrast than prior approaches to vision-correcting image display. We demonstrate that inexpensive light field displays driven by efficient implementations of 4D prefiltering algorithms can produce the desired vision-corrected imagery, even for higher-order aberrations that are difficult to correct with glasses. The proposed computational display architecture is evaluated in simulation and with a low-cost prototype device.

F. Huang, G. Wetzstein, B. Barsky, R. Raskar "Eyeglasses-free Display: Towards Correcting Visual Aberrations with Computational Light Field Displays". ACM Trans. Graph. (SIGGRAPH) 2014.

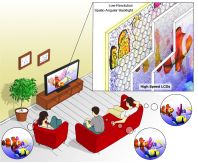

A Compressive Light Field Projection System

For about a century, researchers and experimentalists have strived to bring glasses-free 3D experiences to the big screen. Much progress has been made and light field projection systems are now commercially available. Unfortunately, available display systems usually employ dozens of devices making such setups costly, energy inefficient, and bulky. We present a compressive approach to light field synthesis with projection devices. For this purpose, we propose a novel, passive screen design that is inspired by angle-expanding Keplerian telescopes. Combined with high-speed light field projection and nonnegative light field factorization, we demonstrate that compressive light field projection is possible with a single device. We build a prototype light field projector and angle-expanding screen from scratch, evaluate the system in simulation, present a variety of results, and demonstrate that the projector can alternatively achieve super-resolved and high dynamic range 2D image display when used with a conventional screen.
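The abstract mentions nonnegative light field factorization. As a rough illustration, assuming the common compressive-display formulation in which a light field matrix L (rays indexed by the pixels of one modulator along rows and of another along columns) is approximated by a rank-T product F Gᵀ whose T columns act as T time-multiplexed frames, the classic Lee-Seung multiplicative updates keep both factors nonnegative. The function name and parameters are hypothetical, and the paper's actual solver, weighting, and projector optics are not modeled here.

```python
import numpy as np

def nmf_multiplicative(L, rank, iters=200, seed=0, eps=1e-12):
    """Hypothetical helper: approximate a nonnegative light field matrix L
    (m x n) as F @ G.T with F, G >= 0 using Lee-Seung multiplicative updates
    for the Frobenius norm. Column t of F and G is read as frame t of the two
    modulators (an assumed interpretation). Returns F, G and the
    per-iteration reconstruction errors."""
    rng = np.random.default_rng(seed)
    m, n = L.shape
    F = rng.random((m, rank))
    G = rng.random((n, rank))
    errors = [np.linalg.norm(L - F @ G.T)]
    for _ in range(iters):
        # Ratios of nonnegative terms keep both factors nonnegative.
        F *= (L @ G) / (F @ (G.T @ G) + eps)
        G *= (L.T @ F) / (G @ (F.T @ F) + eps)
        errors.append(np.linalg.norm(L - F @ G.T))
    return F, G, errors
```

Each update is a handful of matrix products, so the scheme is simple to parallelize; a display would additionally need the factors scaled into the modulators' [0, 1] range.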

M. Hirsch, G. Wetzstein, R. Raskar "A Compressive Light Field Projection System". ACM Trans. Graph. (SIGGRAPH) 2014, Technical Paper and Emerging Technologies Demo. Featured in Engadget, Gizmag, Kurzweil, MIT News, Adafruit, Physics World, and many more!

Simultaneous whole-animal 3D imaging of neuronal activity using light-field microscopy

High-speed, large-scale three-dimensional (3D) imaging of neuronal activity poses a major challenge in neuroscience. Here we demonstrate simultaneous functional imaging of neuronal activity at single-neuron resolution in an entire Caenorhabditis elegans and in larval zebrafish brain. Our technique captures the dynamics of spiking neurons in volumes of ~700 μm × 700 μm × 200 μm at 20 Hz. Its simplicity makes it an attractive tool for high-speed volumetric calcium imaging.

R. Prevedel, Y. Yoon, M. Hoffmann, N. Pak, G. Wetzstein, S. Kato, T. Schrödel, R. Raskar, M. Zimmer, E. Boyden, A. Vaziri "Simultaneous whole-animal 3D imaging of neuronal activity using light-field microscopy". Nature Methods, Brief Communication, 2014.

Focus 3D: Compressive Accommodation Display

We present a glasses-free 3D display design with the potential to provide viewers with nearly correct accommodative depth cues, as well as motion parallax and binocular cues. Building on multilayer attenuator and directional backlight architectures, the proposed design achieves the high angular resolution needed for accommodation by placing spatial light modulators about a large lens: one conjugate to the viewer's eye, and one or more near the plane of the lens. Nonnegative tensor factorization is used to compress a high angular resolution light field into a set of masks that can be displayed on a pair of commodity LCD panels. By constraining the tensor factorization to preserve only those light rays seen by the viewer, we effectively steer narrow high resolution viewing cones into the user's eyes, allowing binocular disparity, motion parallax, and the potential for nearly correct accommodation over a wide field of view. We verify the design experimentally by focusing a camera at different depths about a prototype display, establish formal upper bounds on the design's accommodation range and diffraction-limited performance, and discuss practical limitations that must be overcome to allow the device to be used with human observers.

A. Maimone, G. Wetzstein, D. Lanman, M. Hirsch, R. Raskar, H. Fuchs "Focus 3D: Compressive Accommodation Display". ACM Trans. Graph. 2013, presented at ACM SIGGRAPH 2014.

Transparent Object Reconstruction via Coded Transport of Intensity

Capturing and understanding visual signals is one of the core interests of computer vision. Much progress has been made with respect to many aspects of imaging, but the reconstruction of refractive phenomena, such as turbulence, gas and heat flows, liquids, or transparent solids, has remained a challenging problem. In this paper, we derive an intuitive formulation of light transport in refractive media using light fields and the transport of intensity equation. We show how coded illumination in combination with pairs of recorded images allows for robust computational reconstruction of dynamic two- and three-dimensional refractive phenomena.
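For reference, the transport of intensity equation (TIE) in its standard paraxial form links the axial intensity derivative to the transverse phase gradient, with intensity $I$, phase $\phi$, wavenumber $k = 2\pi/\lambda$, and transverse gradient $\nabla_\perp$. This is the textbook equation only; how the paper combines it with light fields and coded illumination is not reproduced here.

$$
\nabla_\perp \cdot \left( I(x, y)\, \nabla_\perp \phi(x, y) \right) = -k\, \frac{\partial I(x, y)}{\partial z}
$$

A pair of recorded images at axial separation $\Delta z$ supplies a finite-difference estimate $\partial I / \partial z \approx \left( I(x, y; z + \Delta z) - I(x, y; z) \right) / \Delta z$. Under the common simplifying assumption of nearly uniform intensity $I \approx I_0$, the equation reduces to a Poisson problem, $\nabla_\perp^2 \phi = -\frac{k}{I_0} \frac{\partial I}{\partial z}$.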

C. Ma, X. Lin, J. Suo, Q. Dai, G. Wetzstein "Transparent Object Reconstruction via Coded Transport of Intensity". IEEE CVPR 2014 Oral

Dual-coded Compressive Hyper-spectral Imaging

This Letter presents a new snapshot approach to hyperspectral imaging via dual-optical coding and compressive computational reconstruction. We demonstrate that two high-speed spatial light modulators, located conjugate to the image and spectral plane, respectively, can code the hyperspectral datacube into a single sensor image such that the high-resolution signal can be recovered in postprocessing. We show various applications by designing different optical modulation functions, including programmable spatially varying color filtering, multiplexed hyperspectral imaging, and high-resolution compressive hyperspectral imaging.
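A simplified forward model shows how a single sensor image can encode a full datacube. We assume, as our own simplification, that within one exposure the two SLMs display T subframes, with a spatial code a_t(x) at the image plane and a spectral code b_t(λ) that acts uniformly across the image; each pixel then integrates Σ_t a_t(x) Σ_λ b_t(λ) H(x, λ). The function name is hypothetical, and the real optical path (dispersion, relay optics) is not modeled. Recovering H from the measurement is the compressive reconstruction step, which is not shown.

```python
import numpy as np

def dual_coded_measurement(cube, spatial_codes, spectral_codes):
    """Hypothetical, simplified forward model of dual-coded capture.

    cube:           (H, W, C) hyperspectral datacube H(x, lambda)
    spatial_codes:  (T, H, W) image-plane SLM patterns a_t(x)
    spectral_codes: (T, C)    spectral-plane SLM patterns b_t(lambda)
    Returns the single (H, W) sensor image integrated over T subframes.
    """
    # Weight pixels by a_t(x) and bands by b_t(lambda), then sum over
    # subframes t and spectral bands c.
    return np.einsum("thw,tc,hwc->hw", spatial_codes, spectral_codes, cube)
```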

X. Lin, G. Wetzstein, Y. Liu, Q. Dai "Dual-coded Compressive Hyper-spectral Imaging". OSA Optics Letters (Journal), 39 (7).

Compressive Multi-mode Superresolution Display

Compressive displays are an emerging technology exploring the co-design of new optical device configurations and compressive computation. Previously, research has shown how to improve the dynamic range of displays and facilitate high-quality light field or glasses-free 3D image synthesis. In this paper, we introduce a new multi-mode compressive display architecture that supports switching between 3D and high dynamic range (HDR) modes as well as a new super-resolution mode. The proposed hardware consists of readily-available components and is driven by a novel splitting algorithm that computes the pixel states from a target high-resolution image. In effect, the display pixels present a compressed representation of the target image that is perceived as a single, high resolution image.

F. Heide, J. Gregson, G. Wetzstein, R. Raskar, W. Heidrich "Compressive multi-mode superresolution display", OSA Optics Express (Journal)

A Switchable Light Field Camera Architecture with Angle Sensitive Pixels and Dictionary-based Sparse Coding

We propose a flexible light field camera architecture that is at the convergence of optics, sensor electronics, and applied mathematics. Through the co-design of a sensor that comprises tailored Angle Sensitive Pixels and advanced reconstruction algorithms, we show that, unlike today's light field cameras, our system can use the same measurements captured in a single sensor image to recover either a high-resolution 2D image, a low-resolution 4D light field using fast, linear processing, or a high-resolution light field using sparsity-constrained optimization.

M. Hirsch, S. Sivaramakrishnan, S. Jayasuriya, A. Wang, A. Molnar, R. Raskar, G. Wetzstein "A Switchable Light Field Camera Architecture with Angle Sensitive Pixels and Dictionary-based Sparse Coding", IEEE International Conference on Computational Photography 2014, Best Paper Award!

Wide-Field-of-View Compressive Light-Field Display Using a Multilayered Architecture and Viewer Tracking

In this paper, we discuss a simple extension to existing compressive multilayer light field displays that greatly extends their field of view and depth of field. Rather than optimizing these displays to create a moderately narrow field of view at the center of the display, we constrain optimization to create narrow view cones that are directed to the viewer’s eyes, allowing the available display bandwidth to be utilized more efficiently. These narrow view cones follow the viewer, creating a wide apparent field of view. Imagery is also recalculated for the viewer’s exact position, creating a greater depth of field. The view cones can be scaled to match the positional error and latency of the tracking system. Using more efficient optimization and commodity tracking hardware and software, we demonstrate a real-time, glasses-free 3D display that offers a 110 × 45 degree field of view.

A. Maimone, R. Chen, H. Fuchs, R. Raskar, G. Wetzstein "Wide-Field-of-View Compressive Light-Field Display Using a Multilayered Architecture and Viewer Tracking", Society for Information Display (SID), Symposium Digest 2014

2013

Compressive Light Field Photography

Light field photography has gained significant research interest in the last two decades; today, commercial light field cameras are widely available. Nevertheless, most existing acquisition approaches either multiplex a low-resolution light field into a single 2D sensor image or require multiple photographs to be taken for acquiring a high-resolution light field. We propose a compressive light field camera architecture that allows for higher-resolution light fields to be recovered than previously possible from a single image. The proposed architecture comprises three key components: light field atoms as a sparse representation of natural light fields, an optical design that allows for capturing optimized 2D light field projections, and robust sparse reconstruction methods to recover a 4D light field from a single coded 2D projection. In addition, we demonstrate a variety of other applications for light field atoms and sparse coding, including 4D light field compression and denoising.
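The sparse reconstruction step can be sketched with ISTA (iterative shrinkage-thresholding), a standard ℓ1 solver that we substitute here for illustration; the paper's own solver may differ. Writing a coded 2D measurement as y = Φ D α, where Φ models the optical projection and D is a dictionary of light field atoms, ISTA estimates sparse coefficients α, and the light field patch is then D α. The names `ista`, `A` (standing for the product Φ D), `lam`, and `iters` are assumptions.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (elementwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(A, y, lam, iters=500):
    """Hypothetical sketch: minimize 0.5 * ||y - A @ alpha||^2 + lam * ||alpha||_1,
    where A stands for Phi @ D (projection times dictionary of atoms)."""
    L = np.linalg.norm(A, 2) ** 2  # Lipschitz constant of the data-term gradient
    alpha = np.zeros(A.shape[1])
    for _ in range(iters):
        grad = A.T @ (A @ alpha - y)
        alpha = soft_threshold(alpha - grad / L, lam / L)
    return alpha
```

With a step of 1/L the objective never increases, which makes ISTA a safe, if slow, baseline; accelerated variants such as FISTA use the same shrinkage step.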

Marwah, K., Wetzstein, G., Bando, K., Raskar, R. "Compressive Light Field Photography using Overcomplete Dictionaries and Optimized Projections". ACM Trans. Graph. (SIGGRAPH) 2013.

Adaptive Image Synthesis for Compressive Displays

Recent years have seen proposals for exciting new computational display technologies that are compressive in the sense that they generate high resolution images or light fields with relatively few display parameters. Image synthesis for these types of displays involves two major tasks: sampling and rendering high-dimensional target imagery, such as light fields or time-varying light fields, as well as optimizing the display parameters to provide a good approximation of the target content. In this paper, we introduce an adaptive optimization framework for compressive displays that generates high quality images and light fields using only a fraction of the total plenoptic samples. We demonstrate the framework for a large set of display technologies, including several types of auto-stereoscopic displays, high dynamic range displays, and high-resolution displays. We achieve significant performance gains, and in some cases are able to process data that would be infeasible with existing methods.

Heide, F., Wetzstein, G., Raskar, R., Heidrich, W. "Adaptive Image Synthesis for Compressive Displays". ACM Trans. Graph. (SIGGRAPH) 2013.

Plenoptic Multiplexing and Reconstruction

Photography has been striving to capture an ever increasing amount of visual information in a single image. Digital sensors, however, are limited to recording a small subset of the desired information at each pixel. A common approach to overcoming the limitations of sensing hardware is the optical multiplexing of high-dimensional data into a photograph. While this is a well-studied topic for imaging with color filter arrays, we develop a mathematical framework that generalizes multiplexed imaging to all dimensions of the plenoptic function. This framework unifies a wide variety of existing approaches to analyze and reconstruct multiplexed data in either the spatial or the frequency domain. We demonstrate many practical applications of our framework including high-quality light field reconstruction, the first comparative noise analysis of light field attenuation masks, and an analysis of aliasing in multiplexing applications.

Wetzstein, G., Ihrke, I., Heidrich, W. "On Plenoptic Multiplexing and Reconstruction". International Journal of Computer Vision (IJCV), Volume 101, Issue 2, Pages 384-400

Display adaptive 3D content remapping

Glasses-free automultiscopic displays are on the verge of becoming a standard technology in consumer products. These displays are capable of producing the illusion of 3D content without the need for any additional eyewear. However, due to limitations in angular resolution, they can only show a limited depth of field, which translates into blurred-out areas whenever an object extrudes beyond a certain depth. Moreover, the blurring is device-specific, due to the different constraints of each display. We introduce a novel display-adaptive light field retargeting method, to provide high-quality, blur-free viewing experiences of the same content on a variety of display types, ranging from hand-held devices to movie theaters. We pose the problem as an optimization, which aims at modifying the original light field so that the displayed content appears sharp while preserving the original perception of depth. In particular, we run the optimization on the central view and use warping to synthesize the rest of the light field. We validate our method using existing objective metrics for both image quality (blur) and perceived depth. The proposed framework can also be applied to retargeting disparities in stereoscopic image displays, supporting both dichotomous and non-dichotomous comfort zones.

Masia, B., Wetzstein, G., Aliaga, C., Raskar, R., Gutierrez, D. "Display adaptive 3D content remapping". Computers and Graphics, Volume 37, Issue 8, Pages 983-996

Coded Focal Stack Photography

We present coded focal stack photography as a computational photography paradigm that combines a focal sweep and a coded sensor readout with novel computational algorithms. We demonstrate various applications of coded focal stacks, including photography with programmable nonplanar focal surfaces and multiplexed focal stack acquisition. By leveraging sparse coding techniques, coded focal stacks can also be used to recover a full-resolution depth and all-in-focus (AIF) image from a single photograph. Coded focal stack photography is a significant step towards a computational camera architecture that facilitates high-resolution post-capture refocusing, flexible depth of field, and 3D imaging.

Lin, X., Suo, J., Wetzstein, G., Dai, Q., Raskar, R. "Coded Focal Stack Photography". International Conference on Computational Photography (ICCP) 2013.

High-rank Coded Aperture Projection for Extended Depth of Field

Projectors require large apertures to maximize light throughput. Unfortunately, this leads to shallow depths of field (DOF), hence blurry images, when projecting on non-planar surfaces, such as cultural heritage sites, curved screens, or when sharing visual information in everyday environments. We introduce high-rank coded aperture projectors: a new computational display technology that combines optical designs with computational processing to overcome depth of field limitations of conventional devices. In particular, we employ high-speed spatial light modulators (SLMs) on the image plane and in the aperture of modified projectors. The patterns displayed on these SLMs are computed with a new mathematical framework that uses high-rank light field factorizations and directly exploits the limited temporal resolution and contrast sensitivity of the human visual system. With an experimental prototype projector, we demonstrate significantly increased DOF as compared to conventional technology.

Ma, C., Suo, J., Dai, Q., Raskar, R., Wetzstein, G. "High-rank Coded Aperture Projection for Extended Depth of Field". International Conference on Computational Photography (ICCP) 2013.

2012

Tensor Displays: Compressive Light Field Synthesis using Multilayer Displays with Directional Backlighting

We introduce tensor displays: a family of compressive light field displays comprising all architectures employing a stack of time-multiplexed, light-attenuating layers illuminated by uniform or directional backlighting (i.e., any low-resolution light field emitter). We show that the light field emitted by an N-layer, M-frame tensor display can be represented by an Nth-order, rank-M tensor. Using this representation we introduce a unified optimization framework, based on nonnegative tensor factorization (NTF), encompassing all tensor display architectures. This framework is the first to allow joint multilayer, multiframe light field decompositions, significantly reducing artifacts observed with prior multilayer-only and multiframe-only decompositions; it is also the first optimization method for designs combining multiple layers with directional backlighting. We verify the benefits and limitations of tensor displays by constructing a reconfigurable prototype using modified LCD panels and a custom integral imaging backlight. Our efficient, GPU-based NTF implementation enables interactive applications. Through simulations and experiments we show that tensor displays reveal practical architectures with greater depths of field, wider fields of view, and thinner form factors, compared to prior automultiscopic displays.
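To make the tensor formulation concrete: for a three-layer, M-frame display, the rank-M, 3rd-order model described above approximates the emitted light field by a sum of M outer products, one per frame, of the three layers' transmittance patterns. The sketch below fits such a model to a dense 3-way tensor with multiplicative updates. It is an illustration under our own assumptions (a dense tensor, a hypothetical function `ntf3`), not the paper's GPU implementation; in a real display only (i, j, k) triples lying on a physical ray are observed, so the fit would have to be restricted to those entries, for example with a weight mask, and the factors scaled into [0, 1].

```python
import numpy as np

def ntf3(T, rank, iters=300, seed=0, eps=1e-12):
    """Hypothetical sketch of nonnegative CP factorization of a 3-way tensor
    T (I x J x K) as sum_m a_m (outer) b_m (outer) c_m. Column m of A, B, C
    plays the role of frame m on layers 1, 2, 3. Returns A, B, C and the
    reconstruction error after each sweep."""
    rng = np.random.default_rng(seed)
    A = rng.random((T.shape[0], rank))
    B = rng.random((T.shape[1], rank))
    C = rng.random((T.shape[2], rank))

    def recon(A, B, C):
        return np.einsum("im,jm,km->ijk", A, B, C)

    errors = [np.linalg.norm(T - recon(A, B, C))]
    for _ in range(iters):
        # Each update solves a nonnegative subproblem for one layer's factor
        # with the other two fixed; Gram products avoid forming the model.
        A *= np.einsum("ijk,jm,km->im", T, B, C) / (A @ ((B.T @ B) * (C.T @ C)) + eps)
        B *= np.einsum("ijk,im,km->jm", T, A, C) / (B @ ((A.T @ A) * (C.T @ C)) + eps)
        C *= np.einsum("ijk,im,jm->km", T, A, B) / (C @ ((A.T @ A) * (B.T @ B)) + eps)
        errors.append(np.linalg.norm(T - recon(A, B, C)))
    return A, B, C, errors
```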

Wetzstein, G., Lanman, D., Hirsch, M., Raskar, R. "Tensor Displays: Compressive Light Field Synthesis using Multilayer Displays with Directional Backlighting". ACM Trans. Graph. (SIGGRAPH) 2012.

ACM SIGGRAPH 2012 Emerging Technologies (demo)

Aspects of Compressive Light Field Display Design

We explore different aspects of compressive light field display design and fabrication, including real-time GPU implementation of nonnegative matrix and tensor factorizations, 4D frequency analysis of multilayer displays for depth of field analysis, and fabrication and calibration of LCD-based multilayer light field displays, in the following papers:

Wetzstein, G., Lanman, D., Hirsch, M., Raskar, R. "Real-time Image Generation for Compressive Light Field Displays". OSA Int. Symposium on Display Holography 2012.

Lanman, D., Wetzstein, G., Hirsch, M., Raskar, R. "Depth of Field Analysis for Multilayer Automultiscopic Displays". OSA Int. Symposium on Display Holography 2012.

Hirsch, M., Lanman, D., Wetzstein, G., Raskar, R. "Construction and Calibration of LCD-based Multi-Layer Light Field Displays". OSA Int. Symposium on Display Holography 2012.

Overview of Compressive Light Field Displays

The following articles discuss state-of-the-art approaches to light field display design. Through the co-design of display optics and computational processing, these computational and compressive light field displays overcome fundamental limitations of purely optical designs or image processing alone.

Wetzstein, G., Lanman, D., Hirsch, M., Heidrich, W., Raskar, R. "Compressive Light Field Displays". Computer Graphics & Applications, Volume 32, Number 5, 2012.

Lanman, D., Wetzstein, G., Hirsch, M., Heidrich, W., Raskar, R. "Applying Formal Optimization Methods to Multi-Layer Automultiscopic Displays". SPIE Stereoscopic Displays and Applications XXIII 2012.

Ultra-fast Lensless Computational Imaging through 5D Frequency Analysis of Time-resolved Light Transport

Light transport has been extensively analyzed in both the spatial and the frequency domain; the latter allows for intuitive interpretations of effects introduced by propagation in free space and optical elements as well as for optimal designs of computational cameras capturing specific visual information. We relax the common assumption that the speed of light is infinite and analyze free space propagation in the frequency domain considering spatial, temporal, and angular light variation. Using this analysis, we derive analytic expressions for cross-dimensional information transfer and show how this can be exploited for designing a new, time-resolved bare sensor imaging system.

Wu, D., Wetzstein, G., Barsi, C., Willwacher, T., O'Toole, M., Naik, N., Dai, Q., Kutulakos, K., Raskar, R. "Frequency Analysis of Transient Light Transport with Applications in Bare Sensor Imaging". European Conference on Computer Vision (ECCV) 2012.

Supplemental document including derivations of expressions in the paper and extended equations (ECCV 2012).

Computational Cellphone Microscopy

Within the last few years, cellphone subscriptions have spread widely and now cover even the remotest parts of the planet. Adequate access to healthcare, however, is not widely available, especially in developing countries. We propose a new approach to converting cellphones into low-cost scientific devices for microscopy. Cellphone microscopes have the potential to revolutionize health-related screening and analysis for a variety of applications, including blood and water tests. Our optical system is more flexible than previously proposed mobile microscopes and allows for wide field of view panoramic imaging, the acquisition of parallax, and coded background illumination, which optically enhances the contrast of transparent and refractive specimens.

Arpa, A., Wetzstein, G., Lanman, D., Raskar, R. "Single Lens Off-Chip Cellphone Microscopy". IEEE International Workshop on Projector-Camera Systems (PROCAMS) 2012. Best Paper Honorable Mention!

Movie: Technology (~11MB)

2011

Computational Plenoptic Image Acquisition and Display

In my thesis, I explore a number of approaches to joint optical multiplexing and computational reconstruction of the dimensions of the plenoptic function. For this purpose, a new mathematical theory is introduced, providing a crucial step toward the "ultimate" computational camera. The combined design of optical light modulation and computational processing is not only useful for photography; displays benefit from similar ideas. Within this scope, three different approaches to computational displays are explored in my thesis: glasses-free 3D displays for entertainment and scientific visualization, optical see-through displays enhancing the capabilities of the human visual system, and computational probes, i.e., displays designed for computer vision applications rather than for direct view. The thesis contains many additional experiments and derivations compared to the published papers.

The thesis contains many additional experiments and derivations, as compared to the published papers.

Wetzstein, G. "Computational Plenoptic Image Acquisition and Display". PhD Thesis, University of British Columbia 2011.

Alain Fournier PhD Dissertation Award!

Polarization Fields: Dynamic Light Field Display using Multi-Layer LCDs

We introduce polarization field displays as an optically-efficient design for dynamic light field display using multi-layered LCDs. Such displays consist of a stacked set of liquid crystal panels with a single pair of crossed linear polarizers. Each layer is modeled as a spatially-controllable polarization rotator, as opposed to a conventional spatial light modulator that directly attenuates light. Color display is achieved using field sequential color illumination with monochromatic LCDs, mitigating severe attenuation and moiré occurring with layered color filter arrays. We demonstrate such displays can be controlled, at interactive refresh rates, by adopting the SART algorithm to tomographically solve for the optimal spatially-varying polarization state rotations applied by each layer. We validate our design by constructing a prototype using modified off-the-shelf panels. We demonstrate interactive display using a GPU-based SART implementation supporting both polarization-based and attenuation-based architectures. Experiments characterize the accuracy of our image formation model, verifying polarization field displays achieve increased brightness, higher resolution, and extended depth of field, as compared to existing automultiscopic display methods for dual-layer and multi-layer LCDs.
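In a polarization field, the rotations applied by the layers add up along each ray, so solving for per-layer rotations becomes a linear system A x = b: each row of A marks the layer pixels one ray crosses, and b holds per-ray target rotations (assuming the standard crossed-polarizer relation I = sin²(θ), a target intensity I maps to θ = arcsin(√I)). A minimal SART sketch for such a system, with optional clamping to a physically achievable range, could look like this; the function name and parameters are hypothetical and the GPU details are not modeled.

```python
import numpy as np

def sart(A, b, iters=50, relax=1.0, lo=None, hi=None):
    """Hypothetical SART sketch for A @ x ~= b with a nonnegative system
    matrix A (rays x layer pixels), optionally clamping x to [lo, hi]."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    row_sums = A.sum(axis=1)
    col_sums = A.sum(axis=0)
    row_sums[row_sums == 0] = 1.0  # avoid division by zero for empty rays
    col_sums[col_sums == 0] = 1.0  # and for pixels that no ray touches
    x = np.zeros(A.shape[1])
    for _ in range(iters):
        # Normalize each ray's residual by its length, back-project it,
        # then normalize by how many rays touch each unknown.
        x = x + relax * (A.T @ ((b - A @ x) / row_sums)) / col_sums
        if lo is not None or hi is not None:
            x = np.clip(x, lo, hi)  # physically realizable range
    return x
```

All rays are updated simultaneously from one residual, which is what makes SART a natural fit for GPU implementation.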

Lanman, D., Wetzstein, G., Hirsch, M., Heidrich, W., Raskar, R. "Polarization Fields: Dynamic Light Field Display using Multi-Layer LCDs". ACM Trans. Graph. (SIGGRAPH Asia) 2011.

Layered 3D: Tomographic Image Synthesis for Attenuation-based Light Field and High Dynamic Range Displays

We develop tomographic techniques for image synthesis on displays composed of compact volumes of light-attenuating material. Such volumetric attenuators recreate a 4D light field or high-contrast 2D image when illuminated by a uniform backlight. Since arbitrary oblique views may be inconsistent with any single attenuator, iterative tomographic reconstruction minimizes the difference between the emitted and target light fields, subject to physical constraints on attenuation. As multi-layer generalizations of conventional parallax barriers, such displays are shown, both by theory and experiment, to exceed the performance of existing dual-layer architectures. For 3D display, spatial resolution, depth of field, and brightness are increased, compared to parallax barriers. For a plane at a fixed depth, our optimization also allows optimal construction of high dynamic range displays, confirming existing heuristics and providing the first extension to multiple, disjoint layers. We conclude by demonstrating the benefits and limitations of attenuation-based light field displays using an inexpensive fabrication method: separating multiple printed transparencies with acrylic sheets.
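Tomography applies here because attenuation is multiplicative: a ray crossing layers with transmittances t_1 through t_N leaves with intensity proportional to their product, so in the log domain the per-layer absorbances α = −log t simply add along the ray. The flatland sketch below (hypothetical names; a simplified geometry in which a ray shifts by a fixed integer number of pixels per layer) builds the resulting projection matrix and evaluates the forward model. Finding nonnegative absorbances (so that t ≤ 1) for a target light field is then a constrained linear inverse problem, which is not shown.

```python
import numpy as np

def layered_projection_matrix(n_pixels, n_layers, slopes):
    """Hypothetical flatland projection matrix for stacked attenuators.

    A ray enters layer 0 at pixel x and shifts by `slope` pixels per layer.
    Row r has a 1 for every layer pixel that ray r crosses; rays are ordered
    by slope first, then by entry pixel x.
    """
    P = np.zeros((len(slopes) * n_pixels, n_layers * n_pixels))
    for s_idx, slope in enumerate(slopes):
        for x in range(n_pixels):
            for layer in range(n_layers):
                p = x + slope * layer
                if 0 <= p < n_pixels:  # rays leaving the stack skip this layer
                    P[s_idx * n_pixels + x, layer * n_pixels + p] = 1.0
    return P

def emitted_light_field(P, transmittance):
    """Forward model: product of transmittances along each ray, computed as
    exp(-sum of absorbances). transmittance has shape (n_layers, n_pixels)."""
    absorbance = -np.log(transmittance.ravel())
    return np.exp(-P @ absorbance)
```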

Wetzstein, G., Lanman, D., Heidrich, W., Raskar, R. "Layered 3D: Tomographic Image Synthesis for Attenuation-based Light Field and High Dynamic Range Displays". ACM Trans. Graph. (SIGGRAPH) 2011. Cover Feature!

Refractive Shape from Light Field Distortion

Acquiring transparent, refractive objects is challenging as these kinds of objects can only be observed by analyzing the distortion of reference background patterns. We present a new, single image approach to reconstructing thin transparent surfaces, such as thin solids or surfaces of fluids. Our method is based on observing the distortion of light field background illumination. Light field probes have the potential to encode up to four dimensions in varying colors and intensities: spatial and angular variation on the probe surface; commonly employed reference patterns are only two-dimensional by coding either position or angle on the probe. We show that the additional information can be used to reconstruct refractive surface normals and a sparse set of control points from a single photograph.
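Once both the direction of a ray leaving the background and the direction in which the camera observes it are known, a surface normal follows from Snell's law, assuming a single refraction at a thin surface with known refractive indices n1 and n2. In vector form, n1·d_in − n2·d_out is parallel to the normal. The sketch below (hypothetical helpers; it does not model the paper's probe decoding or its use of control points) implements that relation together with a forward refraction for checking it.

```python
import numpy as np

def refract(d, n, n1, n2):
    """Refract unit direction d at a surface with unit normal n (pointing
    toward the incident side), passing from index n1 into index n2."""
    eta = n1 / n2
    cos_i = -np.dot(n, d)
    sin2_t = eta**2 * (1.0 - cos_i**2)
    if sin2_t > 1.0:
        raise ValueError("total internal reflection")
    cos_t = np.sqrt(1.0 - sin2_t)
    return eta * d + (eta * cos_i - cos_t) * n

def normal_from_rays(d_in, d_out, n1, n2):
    """Hypothetical sketch: recover the unit surface normal from incident and
    refracted directions via the vector form of Snell's law."""
    d_in = np.asarray(d_in, dtype=float)
    d_out = np.asarray(d_out, dtype=float)
    v = n1 * d_in - n2 * d_out  # parallel to the normal
    v = v / np.linalg.norm(v)
    # Orient the normal toward the incident side (against d_in).
    return -v if np.dot(v, d_in) > 0 else v
```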

Wetzstein, G., Roodnick, D., Heidrich, W., Raskar, R. "Refractive Shape from Light Field Distortion". IEEE International Conference on Computer Vision (ICCV) 2011.

Hand-Held Schlieren Photography with Light Field Probes

We introduce a new approach to capturing refraction in transparent media, which we call Light Field Background Oriented Schlieren Photography (LFBOS). By optically coding the locations and directions of light rays emerging from a light field probe, we can observe and measure changes of the refractive index field between the probe and a camera or an observer. Rather than using complicated and expensive optical setups as in traditional Schlieren photography, we employ commodity hardware; our prototype consists of a camera and a lenslet array. By carefully encoding the color and intensity variations of a 4D probe instead of a diffuse 2D background, we avoid expensive computational processing of the captured data, which is necessary for Background Oriented Schlieren imaging (BOS). We analyze the benefits and limitations of our approach and discuss application scenarios.

Wetzstein, G., Raskar, R., Heidrich, W. "Hand-Held Schlieren Photography with Light Field Probes". IEEE International Conference on Computational Photography (ICCP) 2011. Best Paper Award!

Computational Plenoptic Imaging

The plenoptic function is a ray-based model for light that includes the color spectrum as well as spatial, temporal, and directional variation. Although digital light sensors have greatly evolved in recent years, one fundamental limitation remains: all standard CCD and CMOS sensors integrate over the dimensions of the plenoptic function as they convert photons into electrons; in the process, all visual information is irreversibly lost, except for a two-dimensional, spatially-varying subset: the common photograph. In this state of the art report, we review approaches that optically encode the dimensions of the plenoptic function transcending those captured by traditional photography and reconstruct the recorded information computationally.

Wetzstein, G., Ihrke, I., Lanman, D., Heidrich, W. "Computational Plenoptic Imaging". Computer Graphics Forum 2011, Volume 30, Issue 8, pp. 2397–2426, Survey Paper (updated version of the Eurographics 2011 STAR).

Wetzstein, G., Ihrke, I., Lanman, D., Heidrich, W. "State of the Art in Computational Plenoptic Imaging". Eurographics (EG) STAR 2011.

Wetzstein, G., Ihrke, I., Gukov, A., Heidrich, W. "Towards a Database of High-dimensional Plenoptic Images". IEEE International Conference on Computational Photography (ICCP Poster) 2011.

2010

Coded Aperture Projection

Coding a projector’s aperture plane with adaptive patterns, together with inverse filtering, allows the depth of field of projected imagery to be increased. We present two prototypes and corresponding algorithms for static and programmable apertures. We also explain how these patterns can be computed at interactive rates by taking into account the image content and limitations of the human visual system. Applications such as projector defocus compensation, high-quality projector de-pixelation, and increased temporal contrast of projected video sequences can be supported. Coded apertures are a step towards next-generation auto-iris projector lenses.
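The inverse filtering step can be illustrated with a regularized (Wiener-style) inverse filter, which we swap in as a generic stand-in rather than the paper's algorithm: given a known, spatially invariant defocus kernel, the image is precompensated in the Fourier domain so that, after optical blurring, it approximates the target. The function name and the regularization weight `snr_reg` are assumptions, and the kernel is assumed to be stored with its center at index (0, 0), e.g. via `np.fft.ifftshift`. One common motivation for coding an aperture fits this picture: an open aperture yields a blur kernel whose spectrum has near-zeros that no inverse filter can recover, while a suitable code can keep the spectrum better conditioned.

```python
import numpy as np

def wiener_prefilter(image, psf, snr_reg=1e-2):
    """Hypothetical sketch: precompensate a known defocus PSF so that
    (prefiltered image) convolved with the PSF approximates `image`.
    Assumes circular convolution and a PSF centered at index (0, 0)."""
    K = np.fft.fft2(psf, s=image.shape)  # zero-pad the kernel to image size
    F = np.fft.fft2(image)
    # Regularized inverse: behaves like 1/K where |K| is large and
    # suppresses frequencies where the kernel carries little energy.
    G = F * np.conj(K) / (np.abs(K) ** 2 + snr_reg)
    # A projector cannot emit negative light or exceed full brightness.
    return np.clip(np.real(np.fft.ifft2(G)), 0.0, 1.0)
```

The final clipping is one reason pure inverse filtering is limited in practice: high-contrast corrections are often not displayable.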

Grosse, M., Wetzstein, G., Grundhoefer, A., Bimber, O. "Coded Aperture Projection". ACM Transactions on Graphics (Journal) 29:3, June 2010. Presented at SIGGRAPH 2010.

Movie: Technology (~75MB)

Grosse, M., Wetzstein, G., Grundhoefer, A., Bimber, O. "Adaptive Coded Aperture Projection". SIGGRAPH 2009 Talk

Sensor Saturation in Fourier Multiplexed Imaging

Optically multiplexed image acquisition techniques have become increasingly popular for encoding different exposures, color channels, light-fields, and other properties of light onto two-dimensional image sensors. Recently, Fourier-based multiplexing and reconstruction approaches have been introduced in order to achieve a superior light transmission of the employed modulators and better signal-to-noise characteristics of the reconstructed data. We show in this paper that Fourier-based reconstruction approaches suffer from severe artifacts in the case of sensor saturation, i.e., when the dynamic range of the scene exceeds the capabilities of the image sensor. We analyze the problem, and propose a novel combined optical light modulation and computational reconstruction method that not only suppresses such artifacts, but also allows us to recover a wider dynamic range than existing image-space multiplexing approaches.

Wetzstein, G., Ihrke, I., Heidrich, W. "Sensor Saturation in Fourier Multiplexed Imaging".

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2010.
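The saturation problem described above can be reproduced with a toy 1D example. This sketch assumes two smooth channels, one at baseband and one modulated onto a hypothetical cosine carrier, and demultiplexes them by band-pass filtering in the Fourier domain. Without clipping the channels are recovered to round-off; clipping the sensor values spreads energy across the spectrum and corrupts the reconstruction. It only illustrates the failure mode and does not implement the paper's analysis or its proposed remedy.

```python
import numpy as np

N, F0, HALF_BW = 256, 64, 8  # samples, carrier frequency (cycles per N samples), band half-width
x = np.arange(N)
a = 0.5 + 0.1 * np.cos(2 * np.pi * 2 * x / N)     # channel 1, stays at baseband
b = 0.3 + 0.05 * np.cos(2 * np.pi * 3 * x / N)    # channel 2, modulated onto the carrier
encoded = a + b * np.cos(2 * np.pi * F0 * x / N)  # hypothetical multiplexed sensor signal


def lowpass(sig):
    """Keep only the integer frequencies k with |k| <= HALF_BW."""
    k = np.fft.fftfreq(sig.size, d=1.0 / sig.size)
    spectrum = np.fft.fft(sig)
    spectrum[np.abs(k) > HALF_BW] = 0
    return np.fft.ifft(spectrum)


def demultiplex(s):
    a_hat = lowpass(s).real
    # Shift the +F0 sideband to DC, isolate it, and undo the factor 1/2 of the cosine.
    b_hat = 2 * lowpass(s * np.exp(-2j * np.pi * F0 * x / N)).real
    return a_hat, b_hat


def max_error(s):
    a_hat, b_hat = demultiplex(s)
    return max(np.abs(a_hat - a).max(), np.abs(b_hat - b).max())


print(max_error(encoded))                   # round-off level: both channels recovered
print(max_error(np.minimum(encoded, 0.7)))  # sensor saturates at 0.7: reconstruction errors
```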

A Theory of Plenoptic Multiplexing

Multiplexing is a common technique for encoding high-dimensional image data into a single two-dimensional image. Examples of spatial multiplexing include Bayer patterns to capture color channels and integral images to encode light fields. In the Fourier domain, optical heterodyning has been used to acquire light fields. In this paper, we develop a general theory of multiplexing the dimensions of the plenoptic function onto an image sensor. Our theory enables a principled comparison of plenoptic multiplexing schemes, including noise analysis, as well as the development of a generic reconstruction algorithm. The framework also aids in the identification and optimization of novel multiplexed imaging applications.

Ihrke, I., Wetzstein, G., Heidrich, W. "A Theory of Plenoptic Multiplexing".

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2010 (with oral presentation).
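One way to make the idea of multiplexing as a linear encoding concrete is the Bayer example from the abstract: each sensor pixel records a weighted sum of channels, and a small matrix describes one periodic tile. The sketch below assumes the signal is constant within each tile and reconstructs it by per-tile least squares with a pseudo-inverse. It is a simple instance of that linear view, not the paper's generic reconstruction algorithm; the same tile matrix could, for example, be used to compare schemes by how strongly its pseudo-inverse amplifies noise.

```python
import numpy as np

# Bayer RGGB tile as a linear multiplexing mask: mask_tile[row, col, channel].
BAYER_RGGB = np.zeros((2, 2, 3))
BAYER_RGGB[0, 0, 0] = 1  # R
BAYER_RGGB[0, 1, 1] = 1  # G
BAYER_RGGB[1, 0, 1] = 1  # G
BAYER_RGGB[1, 1, 2] = 1  # B


def multiplex(img, mask_tile):
    """Sensor value = sum over channels of (mask weight * channel value)."""
    H, W, _ = img.shape
    ty, tx, _ = mask_tile.shape
    mask = np.tile(mask_tile, (H // ty, W // tx, 1))
    return (mask * img).sum(axis=2)


def demultiplex(sensor, mask_tile):
    """Per-tile least squares, assuming the signal is constant within each tile."""
    ty, tx, C = mask_tile.shape
    H, W = sensor.shape
    A = mask_tile.reshape(ty * tx, C)  # one row per pixel of the tile
    A_pinv = np.linalg.pinv(A)         # shape C x (ty * tx)
    tiles = (sensor.reshape(H // ty, ty, W // tx, tx)
                   .transpose(0, 2, 1, 3)
                   .reshape(H // ty, W // tx, ty * tx))
    return tiles @ A_pinv.T            # shape (H / ty, W / tx, C)


img = np.ones((4, 6, 3)) * np.array([0.2, 0.5, 0.9])
rgb = demultiplex(multiplex(img, BAYER_RGGB), BAYER_RGGB)
print(rgb.shape)  # (2, 3, 3)
```

For the Bayer tile the pseudo-inverse simply copies red and blue and averages the two green samples.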

Optical Image Processing Using Light Modulation Displays

We propose to enhance the capabilities of the human visual system by performing optical image processing directly on an observed scene. Unlike previous work, which either additively superimposes imagery on a scene or completely replaces scene imagery with a manipulated version, we perform all manipulation by using a light modulation display to spatially filter incoming light. We demonstrate a number of perceptually motivated algorithms, including contrast enhancement and reduction, object highlighting for preattentive emphasis, color saturation, desaturation, and de-metamerization, as well as visual enhancement for the color blind. A camera observing the scene guides the algorithms for on-the-fly processing, enabling dynamic application scenarios such as monocular scopes, eyeglasses, and windshields.

Wetzstein, G., Luebke, D., Heidrich, W. "Optical Image Processing Using Light Modulation Displays".

Computer Graphics Forum (Journal) 2010, Volume 29, Issue 6, pages 1934-1944.
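Because a light modulation display can only attenuate incoming light, a filter has to be expressed as a per-pixel transmittance between 0 and 1. The following minimal sketch computes such a transmittance for contrast enhancement, assuming the camera image is a perfectly registered, linear estimate of the scene luminance at the display and that the target is a hypothetical power curve with `gamma >= 1` (which never exceeds the input, so it is realizable by attenuation alone). It is not the paper's algorithm.

```python
import numpy as np


def contrast_transmittance(scene, gamma, eps=1e-6):
    """Per-pixel transmittance t in [0, 1] such that scene * t follows
    L_max * (scene / L_max) ** gamma.

    For gamma >= 1 the target never exceeds the scene luminance, so an
    attenuating display can realize it; values are clipped to [0, 1] anyway.
    """
    scene = np.asarray(scene, dtype=float)
    L_max = max(scene.max(), eps)
    target = L_max * (scene / L_max) ** gamma
    t = target / np.maximum(scene, eps)
    return np.clip(t, 0.0, 1.0)


scene = np.array([0.1, 0.5, 1.0])  # luminance seen by the camera
t = contrast_transmittance(scene, gamma=2.0)
print(t, scene * t)
```

In this example the bright-to-dark ratio seen through the display grows from 10:1 to 100:1, at the cost of overall brightness.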

Adobe DNG reader for Matlab

A simple Adobe DNG loader for Matlab.

The loader reads RAW color filter array (CFA) data and metadata (such as the arrangement of the CFA filters) from a DNG file.

The loader is based on libTIFF. A reference of TIFF tags can be found here, and for more information on creating, compiling, and linking Matlab executables (such as the DNG reader), refer to Peter Carbonetto's tutorial.

The reader is helpful for loading RAW CFA data into Matlab to prototype demosaicking algorithms or other processing on the RAW sensor data. Adobe has a DNG converter for Windows that converts camera-specific RAW files, for instance .NEF for Nikon or .CR2 for Canon, to .DNG while preserving the RAW data and most of the header information. Dave Coffin's dcraw also supports DNG files.

MatlabDNG.tar.gz
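Once the RAW mosaic and its CFA arrangement have been loaded, a typical first step when prototyping demosaicking is to separate the color samples. The sketch below is written in Python with NumPy rather than Matlab and does not use the loader; it assumes a 2x2 Bayer tile described by a hypothetical pattern string such as "RGGB" and even image dimensions. It averages the two green samples of each tile, which gives a half-resolution baseline rather than a real demosaicking algorithm.

```python
import numpy as np


def split_cfa(raw, pattern="RGGB"):
    """Split a 2x2-periodic Bayer mosaic into a half-resolution RGB image.

    pattern lists the filter colors of the 2x2 tile in row-major order;
    the two green samples of each tile are averaged.
    """
    offsets = [(0, 0), (0, 1), (1, 0), (1, 1)]
    planes = {"R": [], "G": [], "B": []}
    for color, (dy, dx) in zip(pattern.upper(), offsets):
        planes[color].append(raw[dy::2, dx::2].astype(float))
    return np.stack([np.mean(planes[c], axis=0) for c in "RGB"], axis=-1)


raw = np.arange(16).reshape(4, 4)  # stand-in for RAW sensor values
rgb = split_cfa(raw, "RGGB")
print(rgb.shape)  # (2, 2, 3)
```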

2008 and earlier

The Visual Computing of Projector-Camera Systems

This article focuses on real-time image correction techniques that enable projector-camera systems to display images onto screens that are not optimized for projection, such as geometrically complex, colored, and textured surfaces. It reviews hardware-accelerated methods such as pixel-precise geometric warping, radiometric compensation, multi-focal projection, and the correction of general light modulation effects. Online and offline calibration as well as invisible coding methods are explained. Novel approaches to super-resolution, high dynamic range, and high-speed projection are discussed. These techniques open up a variety of new applications for projection displays, some of which are also presented in this report.

Bimber, O., Iwai, D., Wetzstein, G., and Grundhoefer, A., "The Visual Computing of Projector-Camera Systems".

In Computer Graphics Forum (Journal), Volume 27, Number 8, pp. 2219-2245, 2008

Bimber, O., Iwai, D., Wetzstein, G., and Grundhoefer, A., "The Visual Computing of Projector-Camera Systems".

EuroGraphics (STAR), 2007
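For the simplest case of a planar surface, geometric warping reduces to mapping every output pixel through a 3x3 homography. The sketch below assumes that case, a homography `H` already obtained from calibration that maps output (projector) pixels to source-image pixels, and nearest-neighbor sampling; `warp_homography` is a hypothetical helper. Pixel-precise warping onto complex surfaces, as reviewed in the article, would generally need a per-pixel mapping instead of a single matrix.

```python
import numpy as np


def warp_homography(src, H, out_shape):
    """Inverse warping: each output pixel (x, y) samples src at H @ (x, y, 1)."""
    h, w = out_shape
    ys, xs = np.mgrid[0:h, 0:w]
    pts = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])  # 3 x N homogeneous coords
    sx, sy, sw = H @ pts
    sx = np.rint(sx / sw).astype(int)  # nearest-neighbor source column
    sy = np.rint(sy / sw).astype(int)  # nearest-neighbor source row
    valid = (sx >= 0) & (sx < src.shape[1]) & (sy >= 0) & (sy < src.shape[0])
    out = np.zeros((h * w,) + src.shape[2:], dtype=src.dtype)
    out[valid] = src[sy[valid], sx[valid]]  # pixels mapping outside src stay black
    return out.reshape((h, w) + src.shape[2:])


src = np.arange(12).reshape(3, 4)
H = np.array([[1.0, 0.0, 1.0],   # sample one pixel to the right
              [0.0, 1.0, 0.0],
              [0.0, 0.0, 1.0]])
out = warp_homography(src, H, (3, 4))
print(out[0])  # first row becomes 1, 2, 3 and a black pixel
```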

Radiometric Compensation through Inverse Light Transport

Radiometric compensation techniques allow seamless projections onto complex everyday surfaces. Implemented with projector-camera systems, they support the presentation of visual content in situations where projection-optimized screens are not available or not desired, as in museums, historic sites, airplane cabins, or stage performances. We propose a novel approach that employs the full light transport between a projector and a camera to account for many illumination effects, such as interreflections, refractions, and defocus. Precomputing the inverse light transport, combined with an efficient implementation on the GPU, makes real-time compensation of captured local and global light modulations possible.

Wetzstein, G. and Bimber, O., "Radiometric Compensation through Inverse Light Transport".

Pacific Graphics, 2007

presentation slides

Wetzstein, G. and Bimber, O. "Radiometric Compensation of Global Illumination Effects with Projector-Camera Systems".

ACM SIGGRAPH'06 (Poster), 2006

Movie: Technology (~37MB)
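The light transport formulation above can be sketched with a linear model: the camera image is c = T p + e, where T is the light transport matrix (direct and global effects), p the projector image, and e the ambient contribution. Compensation then asks for p such that T p + e matches a desired appearance. This minimal sketch assumes a tiny, hypothetical T and uses NumPy's pseudo-inverse computed once as a stand-in for the precomputed inverse; it does not reproduce the paper's inversion scheme or its GPU implementation, and clipping after a least-squares solve is not the optimal constrained solution.

```python
import numpy as np

rng = np.random.default_rng(0)
n_cam, n_proj = 6, 4
# Hypothetical transport matrix: a strong direct term plus weak global
# (interreflection-like) coupling between every projector and camera pixel.
T = 0.05 * rng.random((n_cam, n_proj))
T[:n_proj, :n_proj] += 0.8 * np.eye(n_proj)
ambient = 0.02 * np.ones(n_cam)

T_pinv = np.linalg.pinv(T)  # computed once, reused for every frame


def compensate(target):
    """Least-squares projector image for T @ p + ambient = target, clipped to [0, 1]."""
    return np.clip(T_pinv @ (target - ambient), 0.0, 1.0)


# A desired appearance that is reachable with in-range projector values.
p_true = np.array([0.2, 0.4, 0.6, 0.8])
target = T @ p_true + ambient
p = compensate(target)
print(np.allclose(T @ p + ambient, target))  # True
```

Per frame, only one matrix-vector product remains, which is what makes precomputing the inverse attractive for real-time use.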

Hector VR system

Hector is a script-based, distributed, platform-independent VR system written in Python, C, and C++. Modern VR systems embrace the idea of using scripts for rapid prototyping of applications; Hector goes one step further: the entire core of the VR system is written in Python to enable rapid prototyping not only of applications but of the VR system itself.

Design considerations include a component-based framework built from independent modules, distribution, easy exchangeability of components (e.g., the renderer), and scalability.

Computationally expensive parts of the framework, such as rendering and mathematical operations, are implemented in low-level programming languages such as C or C++ and wrapped, mostly with SWIG, to be usable from Python.

Hector modules include libraries for rendering [SGI OpenGL Performer, OpenSceneGraph, and OpenGL], audio [OpenAL], input devices [VRPN, haptic wheel for Linux], networking [SPREAD], physics [Open Dynamics Engine], and many more.

Two applications were created during the project: a physically based VR racer with haptic wheel input and a pool simulation.

Hector is a SourceForge project, and the source is available via CVS. For more information, please refer to www.sourceforge.net/projects/hector

Wetzstein, G., Goellner, C.M., Beck, S., Feiszig, F., Derkau, S., Springer, J., Froehlich, B., "HECTOR - Scripting-Based VR System Design".

ACM SIGGRAPH'07 (Poster), 2007
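The component-based design described above, in which a module such as the renderer can be exchanged, can be sketched as a small registry that maps a component kind and name to a factory. This is a hypothetical illustration of the pattern only: `ComponentRegistry`, `DebugRenderer`, and `NullRenderer` are invented names and are not Hector's actual API.

```python
from typing import Callable, Dict


class ComponentRegistry:
    """Maps (kind, name) to a factory so components can be swapped by name."""

    def __init__(self) -> None:
        self._factories: Dict[str, Dict[str, Callable[[], object]]] = {}

    def register(self, kind: str, name: str, factory: Callable[[], object]) -> None:
        self._factories.setdefault(kind, {})[name] = factory

    def create(self, kind: str, name: str) -> object:
        try:
            return self._factories[kind][name]()
        except KeyError:
            raise LookupError(f"no {kind!r} component named {name!r}") from None


class DebugRenderer:
    def draw(self, scene):
        return f"debug: {len(scene)} objects"


class NullRenderer:
    def draw(self, scene):
        return ""


registry = ComponentRegistry()
registry.register("renderer", "debug", DebugRenderer)
registry.register("renderer", "null", NullRenderer)

# The application only names the renderer it wants, e.g. from a config script.
renderer = registry.create("renderer", "debug")
print(renderer.draw(["sphere", "table"]))  # debug: 2 objects
```

Because the application never imports a concrete renderer class, swapping implementations only changes the name passed to `create`.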

Enabling View-Dependent Stereoscopic Projection in Real Environments

With this work we take a first step towards ad-hoc stereoscopic projection within real environments. We show how view-dependent image-based and geometric warping, radiometric compensation, and multi-focal projection enable view-dependent visualization on ordinary (geometrically complex, colored, and textured) surfaces within everyday environments. All of these techniques run at interactive rates and on a per-pixel basis for multiple interplaying projectors. Special display configurations for immersive or semi-immersive VR/AR applications that require permanent, artificial projection canvases might become unnecessary. Such an approach not only offers new possibilities for augmented and virtual reality but also allows the two technologies to be merged. This potentially lets some application domains, such as architecture, benefit from the conceptual overlap of AR and VR.

Bimber, O., Wetzstein, G., Emmerling, A., and Nitschke, C.

"Enabling View-Dependent Stereoscopic Projection in Real Environments".

International Symposium on Mixed and Augmented Reality (ISMAR'05), 2005

Movie: Technology (~40MB)

Movie: Head-tracked visualization inside an 11th century water reservoir (~7MB, DivX)

Siggraph'05 Emerging Technologies (Supported by A.R.T., more3D, Bauhaus-University Weimar)

Experiments inside an 11th century water reservoir in Peterborn/Erfurt (in cooperation with Bennert Group)

http://www.uni-weimar.de/medien/ar

HoloGraphics

In 2004 I joined the HoloGraphics research group at Bauhaus University. We investigate the possibility of integrating computer-generated graphics into holograms. Our goal is to combine the advantages of conventional holograms (e.g., extremely high visual quality and realism, support of all depth cues and of multiple observers at no computational cost, space efficiency, etc.) with the advantages of today's computer-graphics capabilities (e.g., interactivity, real-time rendering, simulation and animation, stereoscopic and autostereoscopic presentation, etc.).

http://www.holographics.de

Bimber, O., Zeidler, T., Grundhoefer, A., Wetzstein, G., Moehring, M., Knoedel, S., and Hahne, U.

"Interacting with Augmented Holograms".

In proceedings of SPIE Conference on Practical Holography XIX: Materials and Applications, January 2005

Puppets'n'Hands

In cooperation with the TV station ABS/CBN in Manila, Philippines, we are developing a modeler and a real-time rendering system for modelling and animating flexible puppets such as the ones used in ABS/CBN TV shows. The modeler implements a new, artist-friendly manipulation method based on hand and tool manipulation. The real-time rendering system gathers data from different sensors (data glove, sliders, 3D tracking systems) and maps them in real time to a physical simulation of multilayered elastic forces, which transmits movement to the virtual puppet in real time. High-level, real-time surface rendering then displays the resulting surfaces with special effects such as fur, cloth, hair, and all the materials that are used with real puppets in current TV productions.

Wuethrich, C. A., Augusto, J., Banisch, S., Wetzstein, G., Musialski, P., Hofmann, T.

"Real Time Simulation of Elastic Latex Hand Puppets".

International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision (WSCG'05), 2005
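The elastic simulation mentioned above can be illustrated with a generic damped mass-spring network. This is a minimal sketch under assumptions, not the paper's simulation: forces follow Hooke's law along each spring, integration uses semi-implicit Euler, and a "layer" is modeled only as a group of springs sharing a stiffness value. The `step` function and its `pinned` nodes (for example, nodes held by a tracked hand) are hypothetical.

```python
import numpy as np


def step(pos, vel, springs, rest, stiffness, damping, mass, dt, pinned):
    """One semi-implicit Euler step of a damped mass-spring network.

    Each spring (i, j) has its own rest length and stiffness, so a layer can
    be modeled as a group of springs that share a stiffness value.
    """
    force = np.zeros_like(pos)
    for (i, j), L0, k in zip(springs, rest, stiffness):
        d = pos[j] - pos[i]
        L = np.linalg.norm(d)
        if L > 0:
            f = k * (L - L0) * d / L  # Hooke's law along the spring direction
            force[i] += f
            force[j] -= f
    force -= damping * vel            # simple velocity damping
    vel = vel + dt * force / mass     # update velocity first
    vel[pinned] = 0.0                 # pinned nodes do not move
    pos = pos + dt * vel              # then position, using the new velocity
    return pos, vel


pos = np.array([[0.0, 0.0], [2.0, 0.0]])  # spring stretched to twice its rest length
vel = np.zeros_like(pos)
for _ in range(5000):
    pos, vel = step(pos, vel, springs=[(0, 1)], rest=[1.0], stiffness=[10.0],
                    damping=1.0, mass=1.0, dt=0.01, pinned=[0])
print(pos[1])  # settles near [1, 0]
```

Semi-implicit Euler is used here because it stays stable for stiff springs at small time steps while remaining as cheap as explicit Euler.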

The Virtual Aquarium

Internship at Fraunhofer Center for Research in Computer Graphics, Providence, RI, USA

Design and implementation of an easy-to-use workflow model for creating interactive virtual and augmented reality applications and an assistant teacher. To overcome the cumbersome process of creating high-quality applications for the Virtual Showcase, a workflow model for designers and artists was developed that uses standard modelling and animation tools such as 3D Studio Max or Maya in combination with the latest CgFX shader plugin. CgFX supports multipass programmable vertex and fragment shaders and generic, interactively adjustable parameters. The Virtual Aquarium, including different corals and swimming fish, was designed as a demo. The project was done in cooperation with the Mystic Aquarium in Connecticut, USA.

Wetzstein, G., Stephenson, P. "Towards a Workflow and Interaction Framework for Virtual Aquaria". IEEE VR Workshop VR for Public Consumption, Chicago, March 2004

Stephenson, P., Jungclaus, J., Branco, P., Horvatic, P., Wetzstein, G., Encarnacao, L. M.

"The Interactive Aquarium : Game-Based Interfaces and Wireless Technologies for Future-Generation Edutainment".

In TESI 2005 Conference Proceedings CD-ROM : Training, Education & Simulation International. Maastricht, 2005

Movie [~25MB]

Consistent Illumination within Augmented Environments

Achieving consistent lighting between real and virtual environments is important for convincing augmented reality (AR) applications. A rich palette of algorithms and techniques has been developed that match illumination for video- or image-based augmented reality. However, very little work has been done in this area for optical see-through AR. We believe that the optical see-through concept is currently the most advanced technological approach to provide an acceptable level of realism and interactivity.

Methods have been developed that create consistent illumination between real and virtual components within an optical see-through environment, such as the Virtual Showcase. Combinations of video projectors and cameras are used to capture reflectance information from diffuse real objects and to illuminate them under new synthetic lighting conditions. For diffuse objects, the capturing process can also benefit from hardware acceleration, supporting dynamic update rates. To handle indirect lighting effects (like color bleeding), an offline radiosity procedure is outlined that consists of multiple rendering passes. For direct lighting effects (such as simple shading, shadows, and reflections), hardware-accelerated techniques are described that achieve interactive frame rates. The reflectance information is also used to solve a main problem of a previously introduced technique that creates consistent occlusion effects for multiple users within such environments.

Bimber, O., Grundhoefer, A., Wetzstein, G., and Knoedel, S.

"Consistent Illumination within Optical See-Through Augmented Environments".

In proceedings of International Symposium on Mixed and Augmented Reality (ISMAR'03), pp. 198-207, 2003

Movie [~16MB] DivXBundle
