Research Discussions

The following log contains entries starting several months prior to the first day of class, involving colleagues at Brown, Google and Stanford, invited speakers, collaborators, and technical consultants. Each entry contains a mix of technical notes, references and short tutorials on background topics that students may find useful during the course. Entries after the start of class include notes on class discussions, technical supplements and additional references. The entries are listed in reverse chronological order with a bibliography and footnotes at the end.

Class Discussions

Welcome to the 2019 class discussion list. Preparatory notes posted prior to the first day of classes are available here. Introductory lecture material for the first day of classes is available here, a sample of final project suggestions here and last year's calendar of invited talks here. Since the class content for this year builds on that of last year, you may find it useful to search the material from the 2018 class discussions available here. Several of the invited talks from 2018 are revisited this year and, in some cases, are supplemented with new reference material provided by the list moderator.

August 9, 2019

%%% Fri Aug 9 04:18:57 PDT 2019

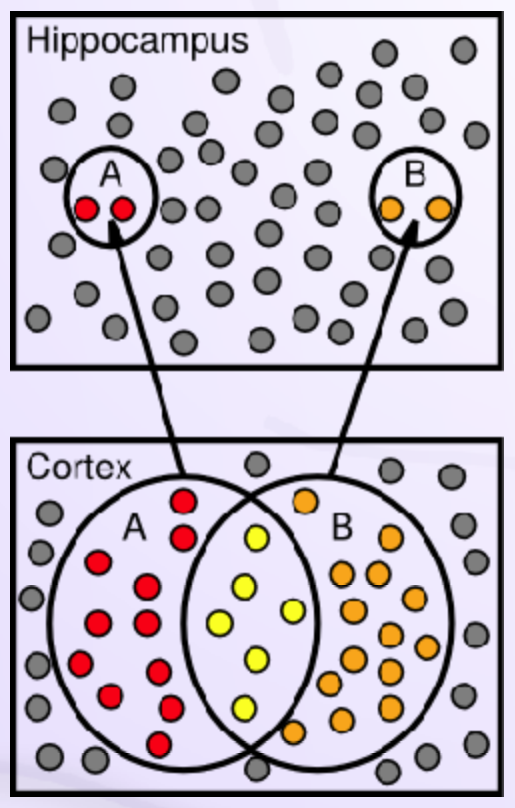

Here is a reading list focusing on the most up-to-date research relating to McClelland's Complementary Learning Systems Theory first introduced in the mid 90s, amended several times over the last twenty years, but remains remarkably close to its initial formulation. If you only have time to read only one paper, I recommend the first article listed below [221], as it is particularly well written, organized in a convenient tutorial format, reasonably comprehensive given the size of the related literature and particularly relevant to solving several key problems in training deep neural networks. The folder named Reference Papers includes PDF for many of the papers listed below:

I. Complementary Learning Systems Theory:

Kumaran et al [221] —

What Learning Systems do Intelligent Agents Need? Complementary Learning Systems Theory UpdatedSchapiro et al [332] —

Complementary learning systems within the hippocampus: A neural network modelling approach to reconciling episodic memory with statistical learningKumaran and Maguire [222] —

The Human Hippocampus: Cognitive Maps or Relational Memory?Kumaran and McClellandPR-12 [223] (REMERGE) — Generalization Through the Recurrent Interaction of Episodic Memories: A Model of the Hippocampal System

Kirkpatrick et al [215] —

Overcoming catastrophic forgetting in neural networks

II. Foundational and Related Earlier Papers:

McClelland et al [258] —

Why There Are Complementary Learning Systems in the Hippocampus and Neocortex: Insights From the Successes and Failures of Connectionist Models of Learning and MemoryMcClelland and Goddard [257] —

Considerations arising from a complementary learning systems perspective on hippocampus and neocortexO'Reilly et al [289] —

Complementary Learning SystemsHuang et al [193] (LEABRA) —

Assembling Old Tricks for New Tasks: A Neural Model of Instructional Learning and Control

August 5, 2019

%%% Mon Aug 5 03:25:36 PDT 2019

Papers on the Neural Correlates of Mathematical Thinking

Mathematical and Analytical Reasoning:

Amalric and Dehaene [12] see Fig 2

Menon [259] — see description of hippocampal role

Iuculano et al [203] — comprehensive overview

Imagination and Hypothetical Reasoning:

Hassabis and Maguire [175]— see Fig 1, Fig 3 and Fig 5

Abstraction and Hierarchical Reasoning:

Koechlin and Jubault [217]

Badre and D'Esposito [24] — see Fig 6

Badre [26] — see Fig 1 and Fig 2

Badre and Frank [25] — see [132] for computational model

The relevant figures from the above papers are available here (PDF).

Miscellaneous Loose Ends:

Robert Nesse [279] has written a very interesting book on the evolutionary basis for many psychological disorders. See this recent book review in the Wall Street Journal for a representive sample of its reception. His earlier work with George Williams [280] established him as one of the founders of the field of evolutionary medicine. Robert Sapolsky, Michael Gazzaniga and Carl Zimmer are among those extolling his contributions to neuropathology and clinical psychiatry. Richard Dawkins' interview with Nesse in 2013 focuses on evolutionary medicine, but the examples that Nesse uses in responding to Dawkins illustrate the principle guiding both books.

"An evolutionary perspective on dire mental diseases encourages new perspectives that shift attention away from the easy assumption that because they are influenced by genes, they are caused by defective genes. It calls attention to new traits, fitness landscapes, and control systems that may result in vulnerability. What such traits might be is a very good question. They are unlikely to be things as obvious as creativity or intelligence. Instead, they may be things such as rates of neuron growth in early development, rates of neuron pruning in adolescents, and rates of transmission in neural networks. On a higher level, attributing meaning to tiny gestures by others may be increasingly useful up to some peak beyond which it crashes into sustained paranoia. I am all too aware that these are mayor speculations and that the actual systems are likely to be complex in ways that make them hard to grasp. Nonetheless, investigating how selection shapes traits that maximize fitness but leave some individuals vulnerable offers opportunities to look for causes that are not underneath the streetlamps of population genetics and neuroscience." — From: Randolph M. Nesse. Good Reasons for Bad Feelings: Insights from the Frontier of Evolutionary Psychiatry. Page 261. 2019.

Substitute "genetic pathways" for "effectively operated neurotic chains" in the following quote from Norbert Wiener, and you'll have an approximate version of the above: "The superiority of the human brain to others ... is a reason why mental disorders are certainly most conspicuous and probably most common in man ... the longest chain of effectively operated neurotic chains, is likely to perform in a complicated type of behavior efficiently very close to the edge of an overload, [and] will give way in a serious and catastrophic way ... very possibly amounting to insanity." — Norbert Wiener. Cybernetics, or Control and Communication in the Animal and the Machine. Technology Press. 1948. Page 151.

July 21, 2019

%%% Sun Jul 21 04:43:14 PDT 2019

I've been struggling to deal with the problem of naïve physics as it relates to (a) the state-space representation for reinforcement learning and action selection, (b) hierarchical and compositional modeling and sample complexity, and (c) the role of analogy in transfer learning across multiple domains / environments. The naïve physics problem broke the back of logic-based approaches to building AI systems. In his ACM Turing Award lecture, Geoff Hinton explains why this failure happened in the context of the debate over symbolic and connectionist models and machine learning. I'll tell you some interesting anecdotes on Monday if you're interested.

The other loose end that I'm trying to tie up concerns catastrophic forgetting, memory consolidation and the problem of continuous training in which some parts of the network are being modified by gradient descent, some by Hebbian learning, others by targeted replay exploiting episodic memory and still others by some form of adaptive fast weights. I grouped these problems into two categories or general challenges that I refer to as grounded thinking (related to embodied cognition and modeling physical systems) and lifelong learning (related to transfer interference and catastrophic forgetting) where the former is an apt phrase for what I have in mind and the latter is borrowed from Thrun [366].

This is not the same as the symbol grounding problem which is the problem of how words (symbols) get their meanings. In particular, the notion of grounding is not tied to symbols, linguistics or formal logic. It owes more to Charles Sanders Peirce's theory of signs, than it does to Frege, Kripke or modern day logicians including Jerry Fodor, John McCarthy and Zenon Pylyshyn. Here are my recent thoughts concerning the importance of embodiment in the context of grounded thinking — see July 17; an example of how Barbara Tversky thinks about the importance of gestures; — see July 18; and several examples of biological learning strategies that operate in specialized subnetworks on different timescales — see July 19.

Miscellaneous Loose Ends: Please don't interpret my enthusiasm for the ideas in Tversky's book as a wholsale endorsement of her entire theory. The same goes for the popular writing of Andy Clark, Christine Kenneally or any of the other popular accounts that I've mentioned in these notes. None of these books are intended as scholarly works; they serve as outreach and education for the general public, and allow the writer to explore ideas in a more speculative and accessible format than would be possible in a peer-reviewed journal. They also provide a source of inspiration that can be invaluable to researchers in machine learning and AI, as long as they don't get carried away.

I've contemplated the idea that natural language could serve as the language of thought [128], perhaps even supplanting other means of internal neural communication1. Tversky's book provided a new perspective allowing me to better appreciate the importance of the body — or any suitably-capable cognitive apparatus serving as a tightly-coupled interface between an agent and its environment / underlying dynamical system — in enabling cognition and grounding language. Natural language complements our innate means of communicating with one another and sharing knowledge and is indispensable from a cultural and technological standpoint.

July 19, 2019

%%% Fri Jul 19 04:44:06 PDT 2019

This entry considers several neural network training methods that address the problem of catastrophic forgetting and lifelong learning. Yoon et al [405] present a neural-network architecture for lifelong learning that addresses the several key challenges2 They compare their architecture — that that they call Dynamically Expandable Networks (DEN) — with Elastic Weight Consolidation Kirkpatricket al [215] and Progressive Networks Rusu et al [322]. In contrasting their approach with the other two, they note that "since we retrain the network at each task t such that each new task utilizes and changes only the relevant part of the previous trained network, while still allowing to expand the network capacity when necessary. In this way, each task t will use a different subnetwork from the previous tasks, while still sharing a considerable part of the subnetwork with them." While only the Kirkpatricket al paper makes any claims regarding biologically plausibility, this trio of papers is worth taking a look at.

Another way that the brain appears to support learning new memories on top of existing ones is through neurogenesis: see Bergman et al 2015 Adult Neurogenesis in Humans and Kempermann et al 2015 Neurogenesis in the Adult Hippocampus. The dentate gyrus is one of the few circuits in humans that is generally agreed to support neurogenesis3. As you may recall from Randy O'Reilly's talk in class, the dentate gyrus is believed to support some form of pattern separation [347]. As another example of how neuroscience can contribute the development of AI systems, Brad Aimone and his colleagues at Sandia National Labs explored adding new neurons to deep learning networks during training, inspired by how neurogenesis in the dentate gyrus of the hippocampus helps learn new memories while retaining previous memories intact. His simulation studies described in a 2009 Neuron paper [1] and summarized in a recent podcast [00:22:46], suggest a somewhat more complicated role for the dentate gyrus that involves both pattern separation and time-dependent pattern integration4.

You have probably heard that sleep plays a critical role in learning new skills. Matthew Walker is a sleep researcher at UC Berkeley and his lab is responsible for a number of important results relating to sleep and learning. In a recent talk at Google moderated by Matt Brittin, Walker said the following:

So, in the studies with rats, what we've done is had them run around a maze with electrodes in their brains and you can pick up this kind of electrical signature of learning as it is running around the maze, and let's say that you just make each one of those brain cells have a tone to it and so as the rat is running around a maze, you just hear this set of brain cell firing as the rat's brain is encoding the maze [bup, bup, bup, bup, bump, bup, bup, bup, bup, bump, ...] and then, what was fascinating in these studies, is that when you let the rats sleep, what you heard was the same sequence of tones replayed [bup, bup, bup, bup, bump, ...], it wasn't at that speed. It was 20 times more quickly [Walker repeats the same sequence of tones, up, bup, bup, bup, bump, ..., but much more quickly] and so what we see is that the brain is actually essentially replaying those memories almost as though what it's doing, firstly is scoring the memory trace or etching the memory trace more powerfully into the brain so that when you wake up the next day you can better remember the things that you learned before and we know that sleep is wonderful for doing that.That actually happens during deep non-rapid eye movement sleep what happens during REM sleep is something more interesting there the brain almost becomes chaotic and random, and that what we think is happening during REM sleep is that during deep sleep you take the information you've learned and you save it — you hold onto it, then it's during REM sleep that we say, based on the information that we've learned today, how does it interrelate with everything that we've previously learned, how do we figure out which connections we should build and which we should let go of and it seems as though dream sleep is a form of informational alchemy, that you start at the site — it's almost like memory pinball — you begin bouncing around the attic of all of your memories and saying should this be a connection, should this connection, ..., but REM sleep is almost like a Google search gone wrong that it's during REM sleep that you input your search term and it immediately takes you to page 20 which is about some bizarre thing that you think, hang on a second, is there really such a strange tangential link? It's during dream sleep that we test out the most bizarre, strange, associative connections, and that's the reason that you wake up the next morning, often having divine solutions to previously impenetrable problems. That's what dream sleep seems to be about as well. — see the video on YouTube at [00:35:00]

You've probably heard some version of this before. There's more detail in Walker's recent book [384] and on the website for his lab at UC Berkeley. I've included this here along with several relevant papers from Walkers Lab [305, 385, 351, 383], not for what it tells us about sleep in particular, but rather what it tells us about learning and memory consolidation, and perhaps carries with it some insights into catastrophic forgetting and training networks in which different subnetworks are trained at different rates or using different learning methods and local objective functions.

Miscellaneous Loose Ends: You can find an extended excerpt from Barbara Tversky's book [375] in the footnote here5. The excerpt features a number of studies that look at whether subjects rely on gestures in solving problems involving structural and dynamical reasoning and whether their problem solving performance benefits from the use gestures.

July 17, 2019

%%% Wed Jul 17 04:03:56 PDT 2019

This entry attempts to shed light on several issues relating to the programmer's apprentice and related applications, beginning with the idea of embodiment as an essential interface with the agent's environment. I skimmed Barbara Tversky's book [375] over the weekend — thanks to Gene Lewis for the suggestion — and listened to Michael Shermer interview Tversky on this podcast. The interview was too high level to be useful to me and the audio quality made it difficult to hear what she was saying, but I found her perspective as well as the examples and the studies she described in the book worth attention. Yesterday I started reading it more carefully during lunch as I wanted see how her theory relates to other work on embodied cognition starting with Kenneth Craik [78], Varela, Thompson and Rosch [377] and more recently Andy Clark [72] — all of which are more philosophical but interesting nonetheless.

The book covers a lot of ground, explaining as it does how the movement of our bodies influences almost every aspect of experience from our most grounded (physical) activities to the most abstract (metaphysical) flights of fancy. Her main thesis is that human thought is embodied cognition. Language takes a backseat despite its impact on human culture. Tversky is right about the role of gestures and signaling in humans and many other animals. The work of Lieberman [241, 239] and more recently Terrence Deacon [84] underscores this connection, emphasizing that language is evolutionarily pretty late — estimates vary wildly anywhere from 50,000 years ago to as early as the first appearance of the human genus more than 2 million years ago — leaving little time for natural selection to make dramatic changes. Andy Clark — whom Tversky quotes in the prologue of her chapter — has a particularly insightful take on this that I mentioned in the class discussion notes.

I recommend that you read, listen to or watch Andy Clark's Edge Talk on "Perception As Controlled Hallucination: Predictive Processing and the Nature of Conscious Experience". If you only have a few minutes, listen to the segment from 00:12:00 to 00:20:00 or read the first part of the conversation. The audio samples — begin listening at: 13:00 in the video — provide a compelling example of how prediction, priors and controlled hallucination conspire to construct our reality and guide experience.

If you've read the books I just mentioned you might think there is nothing new in Tversky's book. To get something out of it you have to deal with the fact that it is aimed at a general audience and there is a lot of relevant previous work that Tversky relies on to make her points. She writes well and she skilfully weaves the thread of her ideas into a larger narrative that integrates what she borrows from previous work, explains what she rejects or reinterprets and highlights what she brings new to the table. I understand why she didn't use mathematics to explain many of the basic concepts, but metaphor can be a clumsy tool for characterizing some of the phenomena she discussed. For the most part, you can interpret her prose as being consistent with the mathematics of neural networks. Any pattern of activation in the brain can be thought of as a thought vector, the set of all such vectors constitutes a Hilbert space and the transformations she alludes to later in the book are called either projections or transformations depending on what branch of mathematics you're coming from.

Everything inside the brain is abstract. Nothing about the real world has its exact counterpart in the brain including the features encoded in primary sensory cortex. Activations of networks in the multi-modal association areas are examples of composites that represent complex entities, trajectories within the corresponding vector spaces represent system dynamics, collocations in space-time represent relationships and together these correspond to the sort of phenomena that can be modeled using variants of interaction networks [32, 31, 409] and graph networks [402, 326, 7, 205]. It is interesting to look carefully at the DeepMind implementation of graph nets and the work of Wang et al [390] in analyzing the dynamics of running programs for program repair — see the examples shown in Figure 5, for their use of GRUs as components in a larger architecture [69].

Reading Tversky's book with an open mind is the worth effort. It sparked connections to my earlier thinking about relational reasoning and analogy as they relate to hierarchical and compositional modeling, as well as revisiting recent work in code synthesis as it relates to the programmer's apprentice as an agent embedded in the "code world" which I'll expand on in a minute. I particularly liked Tversky's treatment of the role of attention in how our behavior is coupled with movement and the exploration and exploitation of our environment — see Hudson and Manning [196] for an interesting take on this. Tversky notes that gestures include pointing which is the most basic form of reference, followed by iconic images and finally symbols all of which emerged prior to language.

There is no official book of gestures; there is no need, since gestures are a natural extension of our bodies — the design of which we share with our fellow humans along with our experience of the natural work in which we evolved. Tversky makes the case that mirror neurons are an important evolutionary development facilitating transfer learning and the rapid spread of knowledge and culture. Her treatment of perspectives is worth reading — Geoff Hinton in his ACM Turing Award lecture made the case that computer vision has failed to leverage frames of reference as the basis for learning relationships between objects that we perceive in our environment. There is much else in Tversky's book to warrant reading it cover to cover, but the goal here is not to slavishly emulate how humans think, but rather to gain insight into how AI systems intended to serve as experts in specialized domain might be architecturally organized so as to facilitate reasoning in their area of expertise. If you are interested in how the hippocampus represents abstract thinking, take look at this footnote6.

Having read Tversky, it is a useful exercise to think of the apprentice as an embodied agent with a body that corresponds to an IDE (Integrated Development Environment) and an environment that corresponds to a conventional computer, a small network of workstations or a large network of linked servers. In the following, we consider the simple case of a single computer. There is an important sense in which a computer provides a rich environment to learn in and — like our physical environment — is governed by a few fundamental principles whose emerging properties the agent can discover by exploration.

Programs represented as source code listings are static objects that have a great deal of inherent structure and latent dynamics. Think of source code listings as the DNA specifying algorithmic genotypes, the compiler as the reproductive machinery responsible for producing phenotypes (bodies) corresponding to running programs, the computer hardware as the environment in which running programs live and its peripherals - I/O devices - the means by which programs perceive, alter and are altered by the greater world beyond the confines of their (process thread instances) manifestations. An alternative analogy is to think of malware instances as the parasitic phenotype, computers as hosts and the internet as the world in which the hosts live — my imagination immediately seized upon Westworld but I was able to fend off the tempting meme.

Running programs are dynamic objects that manifest their behavior primarily though changes to memory including RAM, caches, ALU registers, call stacks, process-scheduling queues and input-output devices, just to name a few of the ways that running code can alter the state of its physical environment. The apprentice is just a program that lives in an environment that includes other running programs, that can write and run its own programs, observe their behavior as well as its own, and intervene to modify their own behavior to suit their purposes. The apprentice's "guild master" programmer is just another stream of data — actually multiple streams corresponding to different sensory modalities including voice and video, and its observations and interventions are analogous to the physical sensory and motor activities of a human moving through its environment.

Despite the fact that writing and running programs seems so much more difficult than playing Atari games — or Starcraft for that matter, "code world" has a lot of advantages in comparison with the simulated worlds of OpenAI Gym and DeepMind Open Source Environments. The set of rich environments is as diverse as there are different applications, different languages, different abstractions, different levels of complexity as in Karel and DrScheme. The distance between environments can be relatively small as in the case of different dialects of Lisp and variants for writing regular expressions. Or it could be large depending on the application, e.g., interactive graphics in C++ versus formatting scripts written in bash.

As an exercise, think about how our understanding of the dynamics of the world around us might emerge, as a child goes through the early stages of development, from helpless infant to curious toddler and on into later childhood. Think about how the infant brain is gradually assembled, neurons and synapses are over-produced and then pruned in late childhood and early adolescence. How all of this happens against the backdrop of the child learning about the environment, becoming familiar with its own body, creating the diverse maps that represent and facilitate our situational awareness, and how the dynamics of the body interact with the dynamics of the environment in which it lives.

What would the earliest maps look like? They probably would be quite primitive early on and yet the foundations must have been laid relatively early, exploiting pathways whereby sensory information enters the brain in order to construct the basic groundwork upon which to lay down the traces for a more nuanced representation that can accommodate fast growth of the growing child. Besides a naÏve physics representation of the physical environment in which action is played out, might there also be the beginning of the simplest mental models corresponding to its primary caregivers. Apparently a sense of self emerges rather late within the early developmental window that characterizes the first 3 to 4 years [277, 274, 174, 83].

Keep in mind that the brain is still very much a work in progress in this early stage. As an analogy, I imagine a child playing in a sand pile in the midst of a construction site where a building is being erected around the child and construction workers are scurrying about carrying tools and building materials. The world is being gradually revealed to a brain that is constantly preparing itself to take into account yet more complex aspects of the environment in which it behaves. How would it go about doing this? What neural structures and cognitive biases are available to bootstrap this process? What affordances does the brain, body and environment have to offer to make the job easier or even simple?

Now think about the extent to which the apprentice will have to construct a complicated edifice encapsulating what amounts to a huge amount of information even when restricted to the task of writing programs. For example, consider the seemingly simple case of the assistant predicting the consequence of evaluating an assignment, e.g., X = X + Y x 3 or a relatively simple conditional statement, if P then A else B. How many years of education were required to gain enough knowledge to perform this relatively simple task and how might we accelerate arriving in this enlightened state? Perhaps not with the same level of skill as an engineer, but more along the lines of a child pecking away at calculator.

The task of learning how to perform operations that were difficult for us in grade school might be facilitated by the assistant performing the equivalent of a baby grasping, waving its arms and rocking back and forth in its crib. Remember the toys often attached to baby cribs that look something like an abacus with brightly colored wooden beads that slide back and forth on metal rods? It's important that the beads are different colors and make a satisfying clicking noise when they collide with one another. The colors and sound attract the infant while the different ways one can arrange the beads engage the toddler. Perhaps such exploratory play lays the groundwork for learning simple math operations.

Now might be a good opportunity to review the material on hierarchical and compositional modeling here and perhaps skim the Wikipedia pages about J.J. Gibson, Jean Piaget, and Lev Vygotsky and then read the "Neural Programmer-Interpreters" paper by Scott Reed and Nando de Freitas [311] and "Learning Aware Models" by Amos et al [13]7.

Miscellaneous Loose Ends: I've placed a copy of my bibliography references in BibTex format here for your use as a convenient reference. There are over 5,000 references most with abstracts and primarily concerning topics in neuroscience, machine learning and computer science. I use it primarily as a memory palace for technical books and papers that have proved useful and are likely to remain relevant to my research.

July 12, 2019

%%% Fri Jul 12 05:56:38 PDT 2019

Some of you met with me on July 2nd to discuss the possibility of writing an arXiv paper loosely based around the content of this year's course. The plan we discussed involved organizing the paper around a list of major challenges standing in the way of developing sophisticated AI systems like the programmer's apprentice. A running theme of the paper would be how neuroscience has led the way to important algorithmic and architectural innovations. An amended version of the slides I went over in our discussion is available here. The amendment was to add a slide at the end on how insights from fMRI studies have inspired hierarchical and compositional models to support action selection across multiple domains.

At the end of the meeting I asked for volunteers willing to take the lead on one of the six challenges, and said that whether or not the paper happens depends on whether there are enough of students willing to put substantial effort into contributing to such a paper. I know you are busy this summer and so this may not be a good time for you to make such a commitment, but it's not as though you'll have a lot of free time once classes start. In any case, the response to my request for contributing authors yielded no takers. For the July 2nd meeting, I selected those whose projects and interests were most closely aligned with the paper as I envisioned it, reasoning that, if there were no serious volunteers from that subset, then the paper wouldn't get done by the end of August.

So rather than burden you with more work this summer, I've decided to write the first draft of the paper by myself. This will probably take me the rest of the Summer, but I can guarantee it would take substantially longer if there were more contributing authors even if those authors were really committed to helping out. See Brooks's Law from The Mythical Man-Month if you want to understand my rationale. Depending how it goes, we can revisit including additional authors in the Fall. As an added benefit for prospective co-authors, using the first draft as an extended outline, it will be much easier for you to figure out whether and how you might contribute to a second draft. In the meantime, enjoy the rest of the summer.

July 11, 2019

%%% Thu Jul 11 02:11:54 PDT 2019

After the lecture that I gave last week concerning some of the major challenges to progress in building systems like the programmer's apprentice, I realized that, while it addressed the problem of how to organize procedural (subroutine) memory in a manner that is both hierarchical and compositional, it did not make a compelling proposal for generating a rich enough state representation to precisely identify the different contexts in which to deploy particular subroutines. What was needed was a state space representation rich enough to capture the essential characteristics of situations in terms of factors that directly speak to the dynamics of acting in difference contexts.

For example, altering a piece of code designed for one purpose to serve another requires that you understand the consequences of the changes you might need to make in order to evaluate different alterations. Specifically, the state representation needs to be rich enough to identify the dynamics governing the state in which you're acting. The dynamics of Tetris is different from the dynamics of Pac Man. Both of these games have many variants with different layouts and characters but essentially the same rules and physics engines. Experienced players can easily determine whether a game is a variant of one or the other of these classic games and apply their general knowledge to the specific instance at hand.

Making such distinctions requires that an agent ignore the superficial features of the game at hand and focus on what counts, namely how do the relevant pieces of the game move and what control does the player have in terms of intervening in the action so as to earn points. In some variants, the physics might depend on the color or size of an object, in which case those characteristics are relevant to understanding the underlying dynamics and the player's ability to influence the state of the game. Some players are familiar with dozens if not hundreds of games many of which exhibit Tetris-like dynamics in some parts of the game, Pac-Man-like dynamics in other parts and perhaps a combination of the two in still other parts. The term analogy is often used to describe the relationships between such games.

In the two weeks since the first lecture, I've been following in the intellectual footsteps of Hamrick, Battaglia, Botvinick, Hassabis and others trying to gain some purchase on what's missing from the model I'm proposing. This slide — laid out in the same style as those in the first lecture — includes a theoretical claim that addresses the missing pieces alluded to earlier, pointers to studies pertaining to the relevant neural correlates and references to recent work on learning relational models suggesting how we might go about developing systems that support the necessary functionality. In the coming weeks, I will flesh out a neural-network architecture design that implements this functionality, and develop an outline for the promised white paper summarizing the complete architecture.

July 9, 2019

%%% Tue Jul 9 04:55:38 PDT 2019

In developing hierarchical and compositional models to support action selection across multiple domains, general method to differentiate between different contexts for acting that primarily depend on activity in the parietal and temporal cortex corresponding to the association areas that produce abstract representations incorporating sensory data across all modalities. The problem is that such representations — at least in the case for standard type of network architectures, e.g., stacked convolutional neural networks with recurrent connections and attentional layers, used for vision or speech — do little to separate out the features of different environments that are most relevant to action selection8.

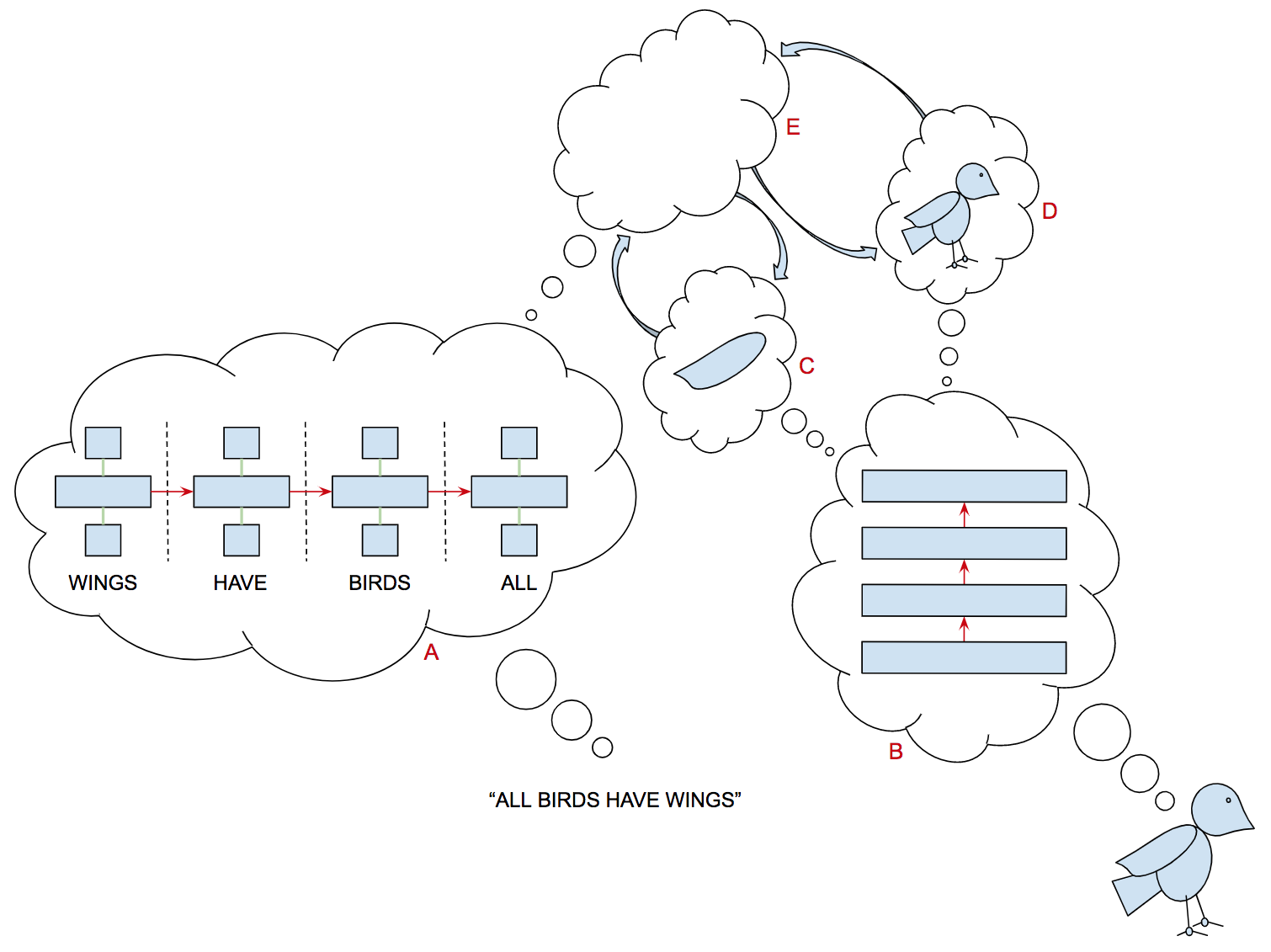

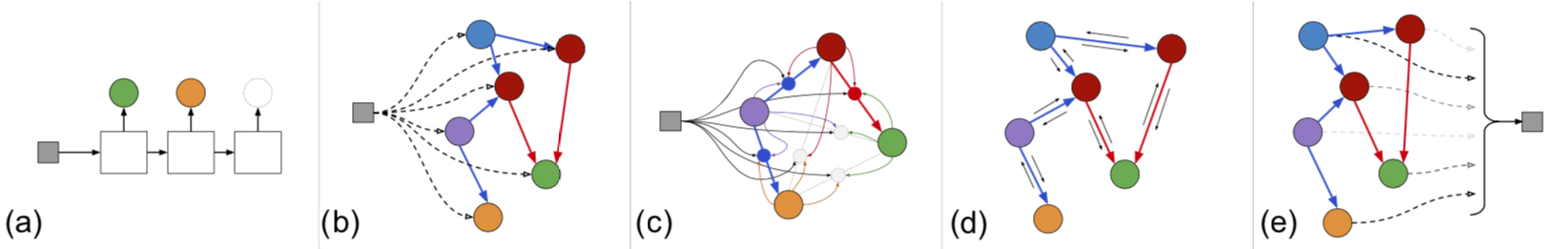

In our discussions in class, Jessica Hamrick and Peter Battaglia emphasized the importance of rich relational models that capture the distinctive entities, relationships between entities and co-variances among those relationships that characterize different environments and assist in prediction and action selection [170, 32, 171, 326, 409, 327, 392, 33, 31, 236]. These models — encompassing the ontological, relational and dynamical properties particular to specific environments — appear to be exactly the sort of representations one needs in order to differentiate contexts for the purpose of selecting specialized strategies (subroutines) for reasoning and acting.

There are a number of theories hypothesizing that analogy is pervasive in human reasoning and may even constitute the core of human understanding — Douglas Hofstadter's theories being the mostly widely cited9. For our purposes, an analogy is just a compelling model mapping from one domain to another — one that aligns entities, relations and dynamics in such a way as to facilitate borrowing strategies from one domain in order to apply them in another. We are constantly creating, refining and evaluating such models based on how well they help us make predictions and plan for the future. Over time we construct a rich repertoire of models that we can draw upon in different circumstances10.

It would interesting to see if we can tease apart the functionality as described above in terms of a basic capability tied more closely to direct sensing of the environment and a more sophisticated level of reasoning that operates on top of this basic capability allowing for more abstract thinking. In particular, I'm interested in the neural-network architecture question of how imagination-based planning and relational modeling as implemented in graph-nets-like component networks might fit in a larger architecture that aspires to solving problems in a wide variety of domains. Such a division of labor between a basic — common across a wide range of species — and a more sophisticated capability — found in humans but perhaps not other animals — makes sense from an architectural and possibly evolutionary perspective, if not from a strictly-observed anatomical criterion.

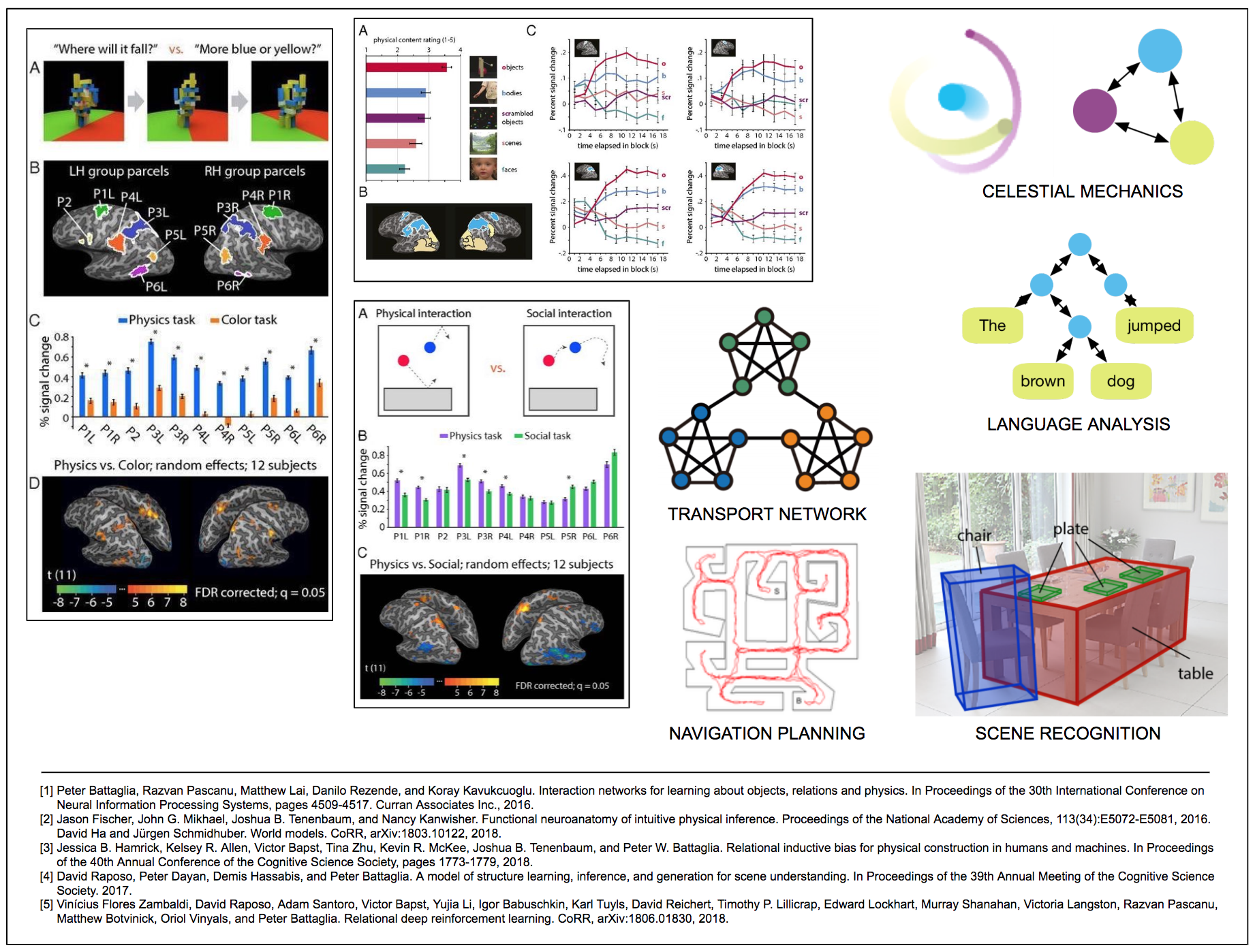

|

Several studies offer speculation along similar lines [308, 309, 78]. Recent fMRI studies from the labs of Nancy Kanwisher and David Dilks attempt to localize activation related to the use of such models [212, 126]. Their results indicating activity in the parietal and frontal cortices when subjects are watching or predicting the unfolding of physical events agrees with the hypothesis that learning and deploying such models has its basis in the sensory cortex and abstract association areas in particular; that this basic capability is likely conserved across mammals and perhaps birds, cetaceans and cephalopods as well; and that this activity is unconscious and effortless. Whereas many of the more sophisticated applications of this basic core are situated in the frontal cortex; are less well distributed across the animal kingdom; and some are found only in human primates.

Still, it could be the case that such intuitive physical inference — what is often referred to as naïve physics reasoning in the AI literature — is carried out by strictly the same domain-general cortical regions that contribute to a wide variety of tasks, termed the multiple demand (MD) network11. Responses in the MD areas generally scale with task difficulty, and this network is thought to provide a flexible problem-solving framework that contributes to general intelligence. To test whether the physics-responsive areas identified in the first three experiments are the same as the MD network — see the attached slide, the authors separately localized the MD network in the same twelve subjects who participated in the first two experiments, thereby providing support for there being two separate networks [126].

July 3, 2019

%%% Wed Jul 3 02:43:15 PDT 2019

Here are my slides from yesterday complete with post hoc presenter notes that I dictated this morning. The transcription is likely include errors and omissions so please don't disseminate. The full lecture and discussion was two hours and so my notes constitute a substantially abbreviated version of the talk. As promised, I've supplied related content for the challenges discussed in class.

Each challenge is paired with a link to an earlier entry in the class discussion list providing an introduction to one facet of the related issues. In most cases, the entry selected is one among many to be found in the class notes. You might also find it useful to search in the introductory material, e.g., the section on accelerating language learning. Here's the annotated list of technical challenges:

Item 1: catastrophic forgetting — June 27, 2019 Thinking Beyond Hippocampal Place Cells

Item 2: sample complexity — May 31, 2019 Complex Environments Varied and Numerous

Item 3: hierarchical and compositional — June 25, 2019 Hierarchy, Abstraction, Compositionality

Item 4: relational and analogical reasoning — July 9, 2019 Relational Models, Planning Contexts

Item 5: modeling other minds — July 29, 2019 Inner Speech, Language as Thinking

Item 6: language and communication — June 17, 2019 Why No Dedicated Language Areas

June 29, 2019

%%% Sat Jun 29 03:08:45 PDT 2019

Here's the current plan for the course summary document — this plan is provisional and we'll be discussing the content and focus of the proposed paper at length in our meeting tomorrow. We will meet tomorrow = Tuesday, July 2 from 2-4 PM in Gates 358. The room is larger (seats 26) than I thought we would find on such short notice and so if there is someone else on your team who is in town and free during that period, feel free to invite them. Please each of you invite no more than one person, if we decide to go forward I will arrange for suitable room, invite everyone who handed in a project and schedule the next meeting with more advance notice.

This class was larger than in the past with a total of 35 students taking the class for credit and receiving a final grade. I don't expect everyone who took the course this year to participate in writing the final summary document. However, I will proceed for the time being as if everyone in the class is committed to contributing and plan accordingly.

The provisional plan is to update the content in this year's introductory notes to account for what we learned and also speak to the remaining challenges that stand in the way of implementing a fully-functional programmer's apprentice.

For each of the original challenges plus any additional ones we decide to include, the plan is to integrate brief mention of each challenge in the primary text and then write a more detailed analysis in an appendix. This strategy is similar to the one we used in writing the summary paper for the 2013 class.

I will send out a calendar invitation containing a Hangout link. If someone is familiar with Zoom and would like to help out that would be most appreciated, since I will preoccupied with getting my notes and slides together for the discussion. If you invite someone, you can share the hangout link.

Miscellaneous Loose Ends If time permits and one of you is willing to invest some effort in doing some of the background research, I would like to say something about natural language processing and narrow-domain dialogue management in the class summary paper. While we didn't talk about it in class, I had several interesting conversations with students last quarter. Here are few observations that we might unpack in the proposed arXiv paper. Each entry includes a footnote that lists one or more issues / perspectives that may be useful to include in a corresponding appendix, but I am open to considering other options.

It is useful to think of language as natural selection's way of enabling human beings to share thoughts12.

Inner speech is self-directed dialogue for learning how to speak, rehearse and evaluate what to say14.

Generating and understanding speech is a probabilistic process of action selection and interpretation15.

Language is hierarchical, compositional and, importantly, the direct product of goal-directed planning16.

Acquiring linguistic facility requires both physical grounding and developmentally-staged learning17.

Here are a few ideas to think about in developing a technology to bootstrap the programmer's apprentice;

synthetic data — create ablation training examples by strategically modifying working software;

motor mimicry — create an invertible IDE in analogy with the role of mirror neurons in primates;

scripted base — employ a scripting language like python and hierarchical network to generate data;

developmental — recapitulate child development by building language facility from the bottom up;

curriculum — leverage multiple objective functions and combine intrinsic and extrinsic rewards;

I highly recommend that you read, listen to or watch Andy Clark's Edge Talk on "Perception As Controlled Hallucination: Predictive Processing and the Nature of Conscious Experience". If you only have a few minutes, listen to the segment from 00:12:00 to 00:20:00 or read the first part of the conversation18. The audio samples — begin listening at: 13:00 in the video provide a compelling example of how prediction, priors and controlled hallucination conspire to construct our reality and guide experience.

June 27, 2019

%%% Thu Jun 27 03:14:09 PDT 2019

In class discussions, we talked about place and grid cells in the hippocampus, opining that the hippocampus is much more general than this usage implies. Dmitriy Aronov a postdoc working in David Tank's lab at Princeton conducted some interesting experiments using rats in a virtual environment and obtained recordings of hippocampal cells similar to those from rats running mazes in a physical environment. Continuing his collaboration with Tank after moving to Columbia University, Aranov trained rats to traverse an auditory rather than a physical space. The animals used a joystick to move through a sort of sound maze — a defined sequence of frequencies. When the rat moved the joystick, the frequency increased and kept increasing for as long as the animal deflected the joystick:

|

The researchers discovered a set of cells that act very much like place cells [17]. Instead of firing when the animal is in a specific location, these 'sound cells' fire when the animal hears a specific tone. "[i]t has the classic pattern of the hippocampal place cell," Aronov says. This Simons Institute article cites related work by Beth Buffalo and her collaborators at the University of Washington and Eva Pastalkova, now at the Howard Hughes Medical Institute's Janelia Research Campus. Their findings show that the same neural circuitry can map space during visual or physical exploration, which hints at the representational flexibility of entorhinal circuits — Tank and Aronov mention that their sound experiments were inspired in part by Buffalo's research [320].

Most studies of navigation in the hippocampus and entorhinal cortex focus on specific types of cells — grid cells, place cells, head-direction cells and others. However, these cells make up only a small fraction of the neuron population in those brain regions. Lisa Giocomo, a neuroscientist at Stanford University, is developing new ways to study the role of the remaining cells. Giocomo points out that this type of unbiased approach will be important for gaining a complete view of the function of the entorhinal circuit. "It is hard to fully understand what role the entorhinal cortex is playing in behavior if we don't even know what over half of the neurons are encoding," she says. "This approach allows us to reveal what the majority of neurons are encoding without requiring predefined assumptions for what tuning should look like." SOURCE

This is just a sample of current papers that are redefining and broadening the concepts of place and grid cells. It would be interesting to explore what these new perspectives suggest in terms of building neural network models of the hippocampal-entorhinal-cortex complex. In a recent paper in Current Biology [42], Andrej Bicanski and Neil Burgess propose that "grid cells support visual recognition memory, by encoding translation vectors between salient stimulus features. They provide an explicit neural mechanism for the role of directed saccades in hypothesis-driven, constructive perception and recognition, and of the hippocampal formation in relational visual memory." A concensus is building that operates on a relatively general and functionally diverse set of abstract patterns, covering a wide range of behavioral and cognitive requirements. SOURCE

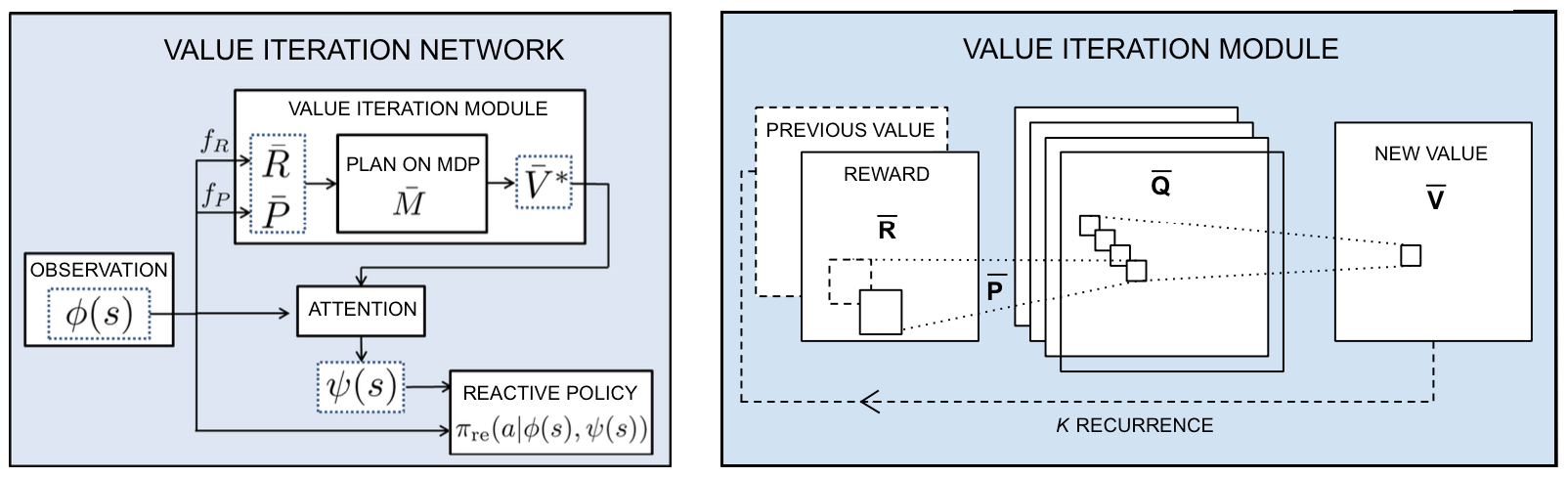

On a related note, a group of researchers at DeepMind have developed a theory of hippocampal function that recasts navigation as part of the more general problem of computing plans that maximise future reward. In a recent paper [350] in Nature, the authors write that "[o]ur insights were derived from reinforcement learning, the subdiscipline of AI research that focuses on systems that learn by trial and error. The key computational idea we drew on is that to estimate future reward, an agent must first estimate how much immediate reward it expects to receive in each state, and then weight this expected reward by how often it expects to visit that state in the future. By summing up this weighted reward across all possible states, the agent obtains an estimate of future reward [...] we argue that entorhinal grid cells encode a low-dimensionality basis set for the predictive representation, useful for suppressing noise in predictions and extracting multiscale structure for hierarchical planning." SOURCE

June 25, 2019

%%% Tue Jun 25 02:51:29 PDT 2019

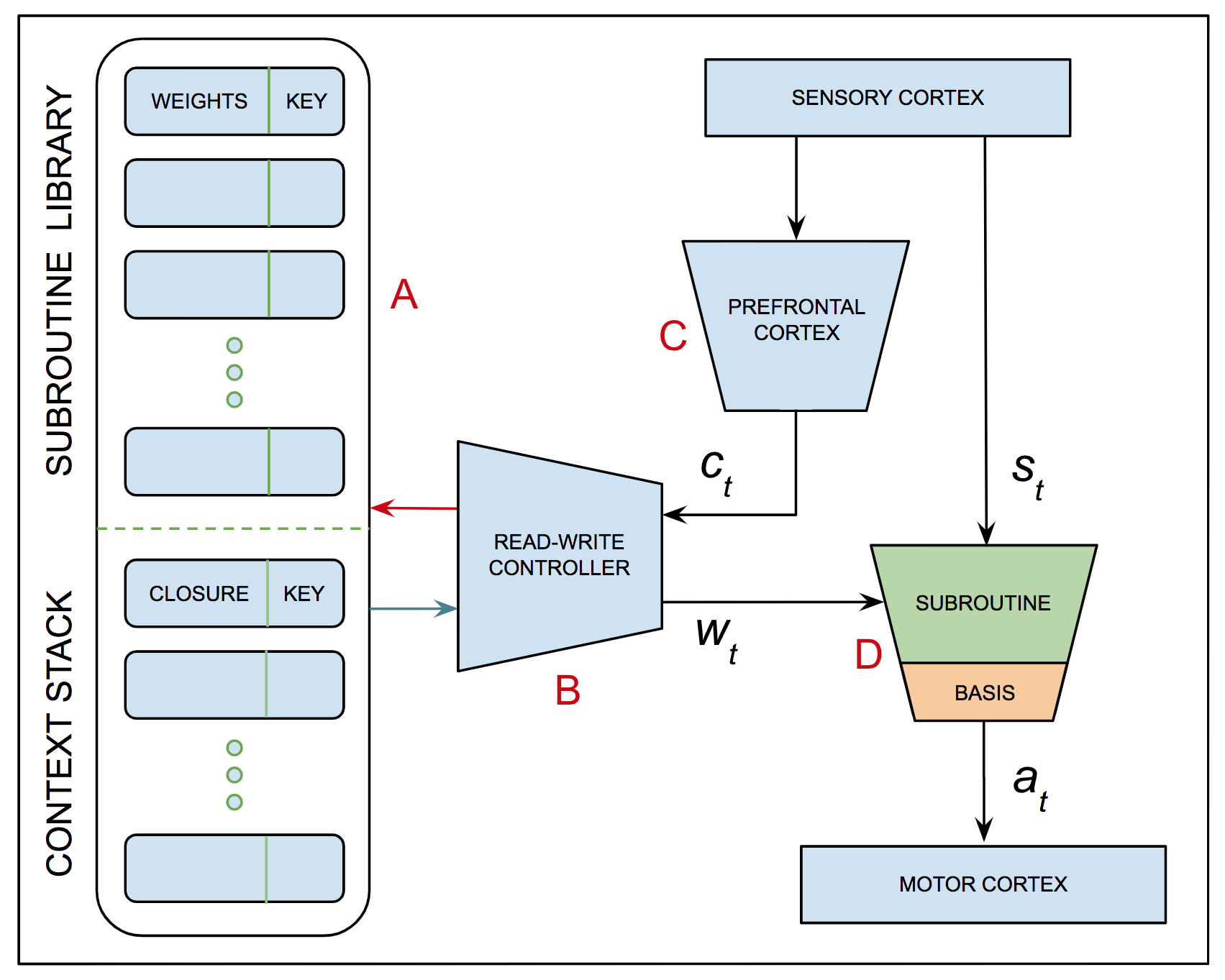

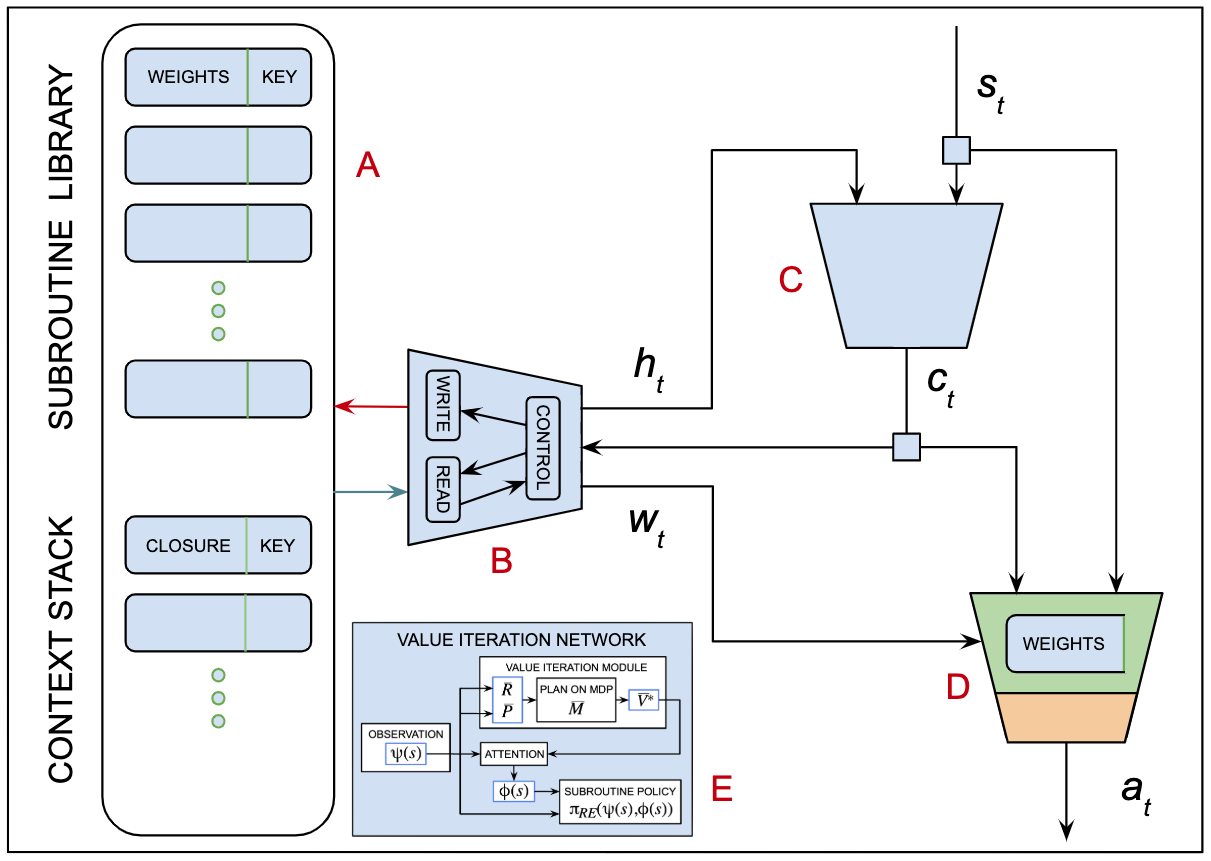

I've continued to develop ideas on how to support hierarchy and compositionality within a reinforcement-learning framework. I've found several papers suggesting that something like my context-based approach to representing abstract subroutines coupled with the method of augmenting the neural state vector / current pattern of activations in working memory is on the right track. The evidence is in the form of fMRI studies that show patterns of activity in the PFC consistent with such a theory, and biologically-plausible implementations of the approach that demonstrate how such a strategy improves on flat, indecomposable approaches.

The basic idea is simple to understand. The PFC employs neural circuits that alter and enhance working memory to create an augmented context that encodes information about the current state of ongoing planning. This context serves as the input to action-selection circuits of the basal ganglia and thalamus. Relevant circuits in the PFC implement a meta-controller that learns to construct this augmented context and supports abstract actions whose primary consequences correspond these working-memory enhancements. Absent in the papers I've read is an account of how the brain avoids catastrophic forgetting [150], nor is there any discussion of the depth or degree of integration of the hypothesized biological solution.

The proposal outlined earlier, deals with catastrophic forgetting and supports arbitrary depth by taking advantage of the properties of a dynamic external memory implemented as a differentiable neural computer [154]. It was not meant as a biologically plausible model, rather as a hybrid, biologically inspired but practically designed to circumvent the computational limitations of human brains. The earlier proposal also provides a link between biologically-plausible action selection and conventional-computing program emulation albeit with the benefits and limitations of differentiable models.

What follows is a bibliographical reference catalog including papers most relevant to the above discussion. For evidence relating to the existence and neural correlates of the suggested model of how the human brain handles hierarchy and compositionality in planning and action selection see Badre and Frank [25] and earlier work by Koechlin et al [218], Badre [26], Badre and D'Esposito [24] and Dehaene and Changeux [92]. For work specifically on hierarchical reinforcement from a cognitive neuroscience perspective see Botvinick [56] and Botvinick and Barto [54].

For biologically-plausible simulations of how this sort of hierarchical inference might be implemented and demonstrating it improving on behavior generated by a flat / unaugmented models, see Reynolds and O'Reilly [313]19, Frank and Badre [132] and Rasmussen et al [310]. For early influential attempts to develop hierarchical reinforcement learning see Kaelbling [210] — macro-operators for Watkin's Q-learning algorithm, Sutton et al [358] — temporal abstractions using options, Hauskrecht et al [176]20 — temporal abstraction using macro-actions, and Dietterich [109] — the MAXQ value function decomposition. For more recent approaches see Sahni et al [325] — skill networks, Rosenbaum et al [319] — routing networks and Silver et al [340] — policy networks.

For your convenience, I've uploaded PDF documents for all of the papers cited above to my Stanford class website. You can find them here — if challenged for access, type student for user and urwelcome for password. It should be easy to map from the bibliography below to the file names corresponding to the cited papers.

References:

June 17, 2019

%%% Sat Jun 15 04:39:30 PDT 2019

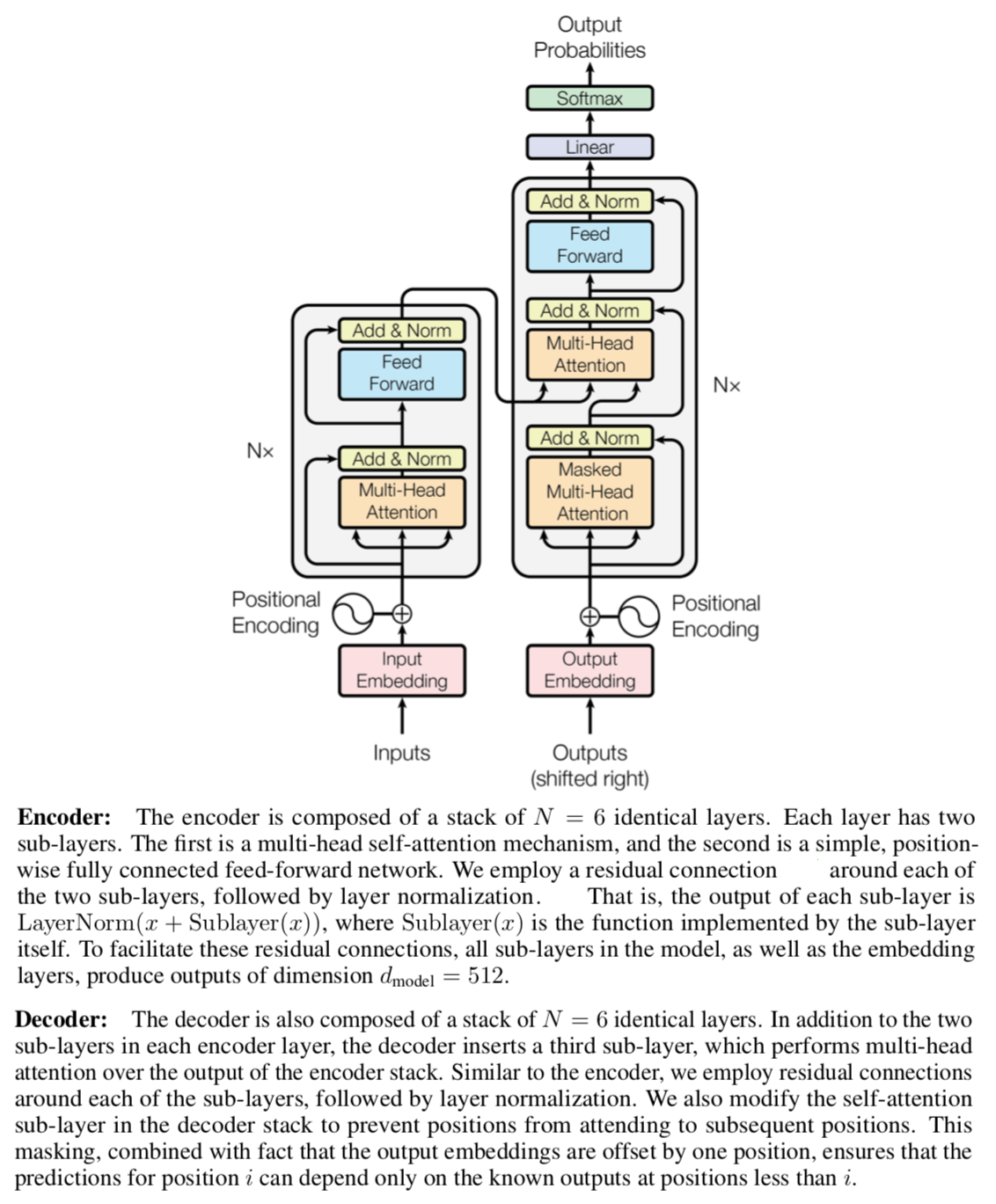

I've been reading your final project reports for a few days now. Highlighting various paragraphs and adding my comments in the margins, and what I notice is that there are two kinds of commentary I tend to make. One has to do with problems you've encountered in training, often in the form of perplexing cases in which an algorithm that you're confident should be an improvement on the state-of-the-art turns out not to be nearly as good as the competing algorithm — that is it appears to be given the experiments you were able to run in the relatively short time allowed for completing your class project. The second has to do with the complexity of the networks that you're using and the reasons you have for selecting particular technologies like variational auto encoders, generative adversarial networks, convolutional networks and different flavors of Long-Short-Term Memory, Gated Recurrent Unit Neural Networks, or whatever happens to be the neural network architecture du jour.

On the one hand, these are the sort of comments you routinely hear in conversations with Google software engineers concerning what architectures work and what ones are difficult to train and what sort of alchemy one has to invoke in trying to eke out a little more performance from a given architecture. On the other hand, this makes it blatantly clear that we don't have the tools that engineers need in order to solve these common problems with any confidence of a successful outcome. There are wizards among us who can iterate quickly, generate synthetic data to expand existing data sets and experiment with different types of models — often dozens of variants in hundreds of different combinations, all run in parallel — and then absorb all this knowledge and apply it to the problem at hand. They are however as rare as hen's teeth and even these adepts routinely fail on the harder problems, especially those involving novel data sets or new architectures.

When I read your tales of woe, I can commiserate with you, but I can't tell you how to avoid such problems in the future, and I can't suggest any particular tools that would help you solve the specific problems you describe in your project reports. I started to collect a list of the major issues standing between us and developing human level AI, or for that matter less ambitious technologies capable of mastering even a few of the everyday skills we humans take for granted. In thinking about how we might summarize what we've learned this quarter, it occurs to me that in terms of a useful paper that we might collaborate on together, perhaps the most valuable thing we could do is draw upon what we've learned concerning how to leverage ideas from cognitive neuroscience and explain in some detail why effectively exploiting those ideas is nontrivial — expanding on the list below — and suggesting some possible avenues for how we might correct this.

Here is a preliminary list of major technical issues standing in the way of progress:

Item 1: catastrophic forgetting — clearly we need to be able to learn across multiple time scales, constantly switching between different types of problems, simultaneously exercising different skills and requiring different approaches to learning in different parts of large neural network architectures, especially those with lots of recurrent connections. For example, in some theoretical models of episodic memory in the hippocampal-entorhinal complex, there are least three different types of learning occurring all at once in multiple nested recurrent loops. Imagine continuous training in which some parts of the network are being modified by gradient descent, some by Hebbian learning, others by targeted replay exploiting episodic memory and still others by some form of adaptive fast weights. [See Mattar & Daw and Hinton & Plaut]

Item 2: sample complexity — also related to environmental (Kolmogorov) complexity and the problem of transfer learning — the ability to learn new skills and apply them in novel situations seems so natural to us that we will have to make an effort to appreciate just how hard these challenges are likely to be as we tackle increasingly more complicated applications. Many of the skills we have been contemplating for the programmer's apprentice are likely to require some form of curriculum learning or applying what we know about developmental psychology in order to efficiently learn those skills simultaneously in the midst of everyday activities, e.g., "What does this case statement do?", "How do I set a break point in the debugger?", or "Can I substitute an integer for a float in this expression?" [See Kulkarni]

Developmental psychologists believe that most animals learn what they need to by applying strategies that are baked into their genome allowing them to quickly acquire critical survival skills. Instincts that guide and shape rapid learning may also serve to restrict what an animal can learn. It seems likely our built-in instincts are substantially less powerful but also less restrictive. Instead we rely on an extended developmental period in which we essentially learn how to learn, a skill that separates us from most other animals. The challenge is to design artificial systems that learn how to learn by exposure to the right sort of feedback and a diverse set environments in which to experiment. [See Zador]

Item 3: hierarchical and compositional modeling — these two characteristics are common in combinatorial / symbolic architectures. Connectionist models are inherently hierarchical and convolutional models are the epitome of compositional architectures. So far, however, developing hierarchies of composable subroutines that exhibit the sort of compactness and modularity we find in conventional (symbolic) computing libraries has eluded us. While there has been a recent trend to consider ways in which connectionist models might handle graphs and trees, it may be that the way human brains handle hierarchical structure is very different from the tree structured models that dominate our current ways of thinking about the problem.

Item 4: relational and analogical reasoning — while an important focus for earlier work in cognitive modeling and linguistic inference, e.g., Douglas Hofstadter's Gödel, Escher and Bach and the work of Dedre Genter and Keith Holyoak comes to mind, it is only recently that the rising tide of deep learning has begun to look at this seriously. As a means of facilitating transfer learning between superficially different domains these methods provide a powerful way to adapt and reuse hard-won procedural and declarative knowledge. Here again we may find ourselves somewhat misled by the specific emphases in some of the older work in cognitive science. Perhaps this is a case where identifying and analyzing the neural correlates of analogical reasoning might yield substantial dividends.

Item 5: reasoning about other minds — conventionally referred to as theory of mind modeling, this area of study is I believe muddied by a misguided belief that this sort of reasoning is intrinsically deep and conceptually hard to get a handle on. Contrary to this view, researchers like Michael Graziano and Matt Botvinick focus on what they consider to be a core antecedent to such reasoning in our ability to construct and apply models of our own bodies, essentially determining the boundaries of the semipermeable, mental, physical and social membranes that separate "us from them" and dealing with the ambiguity that results when the boundaries between organisms are mutable and depend upon context.

From these body centric models and relating to what is often referred to as embodied cognition, it is easier to imagine the emergence of a sense of "self" and its separation from "other". If you don't get too caught up in trying to encompass what it means to have a mind, it is relatively straightforward to think about why having some fundamental sense of self would arise as a natural consequence of evolution, as would an inclination to group together with closely related kin to improve our chances of reproductive success. Maintaining social and personal boundaries is important for survival despite the accompanying socially-divisive and deeply-seated distinction between "us" and "them".

From a personal sense of self and the realization that we are able to reason about other things and other animals including humans, it is not a huge step to infer — or at least accommodate from a purely pragmatic perspective — that other inanimate objects around us might also be able to reason about their environment and protective of their persistent selves. Again making this explicit as a theory of mind is less fraught with difficulty than the philosophical conundrums that occupy those of a more academic leaning. Here again Graziano, Botvinick, Rabinowitz and others with a more practical bent seem to have no trouble grasping this. The practical problem of how one integrates such thinking in a general strategy for planning and problem solving and a better understanding of how one might learn such models or build upon some instinctual or developmental seed of inspiration is an open and fascinating technical and engineering challenge. [See Rabinowitz]

Item 6: language and communication — despite decades of academic study in the fields of computational linguistics, natural language processing and developmental psychology, we appear to be at a loss for developing theories that can be used to formulate robust, practical language learning. Delving into the background literature is not for the faint of heart, and the acrimonious debates that played out within and between the various communities with a stake in this endeavor are difficult to undestand for those of us who weren't raised in the one of the major factions that characterize these disciplines.

You could of course go back to Chomsky, Jackendoff, Lieberman, Fodor, Pinker, Pylyshyn, Savage-Rumbaugh, etc., but I believe there is a more fundamental level that starts with primitive signaling and builds in easy-to-learn stages to the subtle instrument of human social interaction that is the basis for culture and civilization as we know it. I'm an advocate of starting out with basic signaling and semiotic primitives and following the developmental arc apparent in the interaction between parents and their children and depends, not on the child possessing an instinct for learning language, but rather on the child's parents possessing an instinct for teaching their children how to learn. [See Deacon]

Editor: That was long and discursive, but I expect you're used to that by now. If I was sure about what I was trying to convey, I would have simply written the paper, but I'm not sure and I am reasonably confident that no one else has cause to believe they have the definitive answers either. Hence my reaching out to you, inviting you to participate and puzzle out some of the issues listed above. Not solve the problems so much as articulate them clearly and motivate them convincingly. If this sounds like something you'd like to contribute to, I'll create a forum where we can discuss this with the goal of writing a white paper for arXiv.

References:

%%% Mon Jun 17 05:41:24 PDT 2019

Here is a trick question. What do the neural circuits in Wernicke's area do? Broca's area? Basal ganglia? What if circuits in your reptile ancestor's brain did X and millions of years later homologous circuits in your brain were demonstrated to do Y? What if basal ganglia in Homo sapiens "ran substantially faster and utilized more parallel connections" than basal ganglia in Homo erectus?

Daniel Everett [121] in Chapter 6 of How Language Began: The Story of Humanity’s Greatest Invention makes the case that —

Neither the brain nor the vocal apparatus evolved exclusively for language. They have, however, undergone a great deal of microevolution to better support human language. It is often claimed that there are language specific areas of the brain such as Wernicke’s area or Broca’s area. There are not. On the other hand, in spite of the lack of dedicated language regions in the brain, several researchers have shown the importance of the subcortical region known as the basal ganglia to language. The basal ganglia are a group of brain tissues that appear to function as a unit and are associated with a variety of general functions such as voluntary motor control, procedural learning (routines or habits), eye movements and emotional function. This area is strongly connected to the cortex and thalamus, along with other brain areas. These areas are implicated in speech and throughout language. Philip Lieberman refers to the disparate parts of the brain that produce language as the functional language system [19]. From Chapter 6 How the Brain makes Language Possible [121].

To a large extent, Everett's thesis is borrowed from Philip Lieberman who has spent a good deal of his professional life gathering evidence to support these claims. Lieberman's paper On the Nature and Evolution of the Neural Bases of Human Language [20] is well worth reading if you haven't the time to read one of his longer treatises such as Human language and our reptilian brain: The subcortical bases for speech, syntax and thought [19] or Toward an Evolutionary Biology of Language [239]. If you haven't even the time to read [20], you can read or skim the following notes, to understand the basic ideas.

%%% Sun Jun 16 06:23:47 PDT 2019

One thing you learn from reading evolutionary neurobiologists is that — possibly without exception — no part of the brain does just one thing. By that they don't mean that a given part of the brain might have once done X and now does Y, but rather that if it ever did X and now does Y that it most certainly still does X. This is especially relevant in understanding the origins of language since it is such a late addition to our repertoire of cognitive capabilities. In particular, the identification of Wernicke's area and Broca's areas as language areas — a notion that persists to this day despite an overwhelming amount of evidence to the contrary — is misleading; the real question for us is what role do these two areas play in the human brain that natural selection exploited this role in the evolution of language.

I've been reading Philip Lieberman [241, 240, 239, 19] and Daniel Everett [121] focusing on their accounts of how language evolved in human beings, and the first thing you learn from them is that while a fully capable language of the sort we now have involves relatively complex syntax, much of it relies upon features that were present in our — and some other animal's — use of signs, signals and referential behavior. For example, consider the way in which the various components of phrases — whether spoken or signed — are combined in ways that avoid ambiguity and encourage specific interpretations, and similarly in the composition of two or more phrases in generating more complicated interlocutory constructions.

I was particularly taken by a comment made by Daniel Everett in which he described the function of the basal ganglia as primarily having to do with the selection and deployment of both individual actions and routinely repeated sequences of activity corresponding to what we've been calling subroutines or simply routines, including activity of a purely cognitive sort. Everett suggests that it is useful to think about such activity is consisting of simply thoughts, and that the role of the basal ganglia is essentially to transform one thought into another — just as one action leads to another depending upon the particular context in which they occur. I should say — and Everett gives credit where credit is due — that Everett is channeling Lieberman here, but Everett also provides a good deal of original commentary and insight based on his decades of fieldwork as an anthropologist and linguist.

From this viewpoint, the basal ganglia is just machinery for transforming thoughts and the thoughts are no more than patterns of neural activity in the brain. This way of thinking is particularly agreeable to those of us who think of the weight matrices that specify how one layer of a neural network depends on another are transformations from one vector space to another, but it is also useful as a thought experiment in thinking about how language evolved. It seems a bit contrived to think of our experience of the moment as a point or vector in high-dimensional Hilbert space. Surely this physical space that surrounds us is more complicated than a single point. Indeed a single point in isolation conveys little information. However, a point embedded in a space populated by millions of such vectors conveys a good deal of information assuming that the points and the relationships between them as encoded in the transformations that relate one point to another are grounded in — that is to say they can be reliably mapped onto — the physical environment experience through our senses.

Relevant to our earlier comments about Wernicke's area and Broca's area, given that damage to the former often results in deficits relating to the understanding of language and damage to the latter often results in deficits relating to the generation of language, what might that tell us about the general function of these two areas. Considering that neither area is anatomically or cytoarchitecturally coherent, to avoid confusion in the sequel we refer to them generically as regions. Everett and Lieberman take a step backward and ask the question how are these regions wired together and to what other parts of the brain such as the basal ganglia and the reward centers that comprise the cortico-basal ganglia-thalamo-cortical (CBGTC) loop.

Lieberman and McCarthy2013 [241] write that "[t]he neural bases of human language are not domain-specific — in other words, they are not devoted to language alone. Mutations on the FOXP2 transcriptional gene shared by humans, Neanderthals, and at least one other archaic species enhanced synaptic plasticity in cortical–basal ganglia circuits that are implicated in motor behavior, cognitive flexibility, language, and associative learning. A selective sweep occurred about 200,000 years ago on a unique human version of this gene. Other transcriptional genes appear to be implicated in enhancing cortical–basal ganglia and other neural circuits.

The basal ganglia are implicated in motor control, aspects of cognition, attention and several other aspects of human behavior. Therefore, in conjunction with the evolved form of the FOXP2 which allows for better control of the vocal apparatus and mental processing of the kind used in modern human's language, the evolution of connections between the basal ganglia in the larger human cerebral cortex are essential to support human speech (or sign language). The FOXP2 gene, though it is not a gene for language, has important consequences for human cognition and control of the muscles used in speech.

This gene seems to have evolved in humans since the time of Homo erectus. [...] FOXP2 also elongates neurons and makes cognition faster and more effective. [...] Such a FOXP2 difference could have resulted in a lack of parallel processing of language by Homo erectus, another reason they would have thought more slowly. [...] FOXP2 in modern humans also increases in length and synaptic plasticity of the basal ganglia, aiding motor learning and performance of complex tasks." See Pages 117-118 in [121].

Everett writes that another reason that the basal ganglia are important is that their role in language illustrates the importance of the theory of micro-genetics. This theory claims that human thinking engages the entire brain, beginning with the oldest parts of the brain first or as put in a recent study: "The implication of micro genetic theory is that cognitive processes such as language comprehension remain integrally linked to more elementary brain functions, such as motivation and emotion [...] linguistic and nonlinguistic functions should be tightly integrated, particularly as they reflect common pathways of processing [372]." See Pages 135-136 in [121].

Christine Kenneally [213] channeling Lieberman writes "[i]t is clear from this evidence, according to Lieberman, that the basal ganglia are crucial in regulating speech and language, making the motor system one of the starting points for our ability not only to coordinate the larynx and lips in talking, but to use abstract syntax to create meaningful and more complicated expressions. [...] One of the important functions of the basal ganglia is their ability to interrupt certain motor or thought sequences and switch to a different motor or thought sequence. Climbers on Everest become increasingly inflexible in their thinking as they ascend the mountain — stories about bad decision-making in adverse circumstances abound. Accordingly, Lieberman's climbers showed basic trouble with their thinking. [...]

Basal ganglia motor control is something we have in common with many, many animals. Millions of years ago, an animal that had basal ganglia and a motor system existed, and this creature is the ancestor of many different species alive today, including us when we deploy syntax, Lieberman argued, we are using the neural bases for a system that evolved a long time ago for reasons other than stringing words together. [...] Chimpanzees, obviously, have basal ganglia. Birds have basal ganglia. So do rats. When rats carry out genetically programmed sequences of grooming steps, they are using the basal ganglia. If their basal ganglia are damaged, then their separate grooming moves are left intact, but their ability to execute a sequence of them is disrupted." In backhanded homage to Chomsky, Lieberman calls their grooming pattern UGG for universal grooming grammar.

Recognizing these changes helps us to recognize that human language and speech are part of the continuum seen in several other species. It is not that there is any special gene for language or an unbridgeable gap that appeared suddenly to provide humans with language and speech. Rather, what the evolutionary record shows is that the language gap was formed over millions of years by baby steps. Homo erectus is evidence that apes could talk if they had brains large enough. Humans are those apes. See Pages 193-194 in [121].

Miscellaneous Loose Ends: Note Tony Zador's paper [26] on how animals rely on highly structured brain connectivity to learn rapidly: "Here we argue that much of an animal's behavioral repertoire is not the result of clever learning algorithms — supervised or unsupervised — but arises instead from behavior programs already present at birth. These programs arise through evolution, are encoded in the genome, and emerge as a consequence of wiring up the brain. Specifically, animals are born with highly structured brain connectivity, which enables them learn very rapidly. Recognizing the importance of the highly structured connectivity suggests a path toward building ANNs capable of rapid learning." Check out this interview with Zador about 01:18:00 into the podcast. You might also find Nathaniel Daw's interview and lecture on rational planning using prioritized experience replay as it relates sharp wave ripples and hippocampal replay.

If you missed it here is a link to my earlier compilation of papers on hierarchical and compositional models relating to multi-policy reinforcement learning. Related is the work of Ramachandran and Le [307] and Rosenbaum et al [19] on routing networks — Consider a model with a single large network or supernetwork with numerous subnetworks that implement experts and a second smaller network or router that learns to route examples through the supernetwork. Given that the router is characterized as a neural network, it must be trained. Rosenbaum et al [19] use reinforcement learning to train the router and Ramachandran and Le [307] use the noisy top-k gating technique of Shazeer et al [336] that enables the learning of the router directly by gradient descent.

That's all for CS379C this year. I wordsmithed the list of technical challenges that I circulated earlier and made it the penultimate entry in this class discussion. If you want to share it or circulate the ideas more broadly, please use this link to do so. I'm just about finished going through the final project reports for the second time and all the remaining grades will be posted by noon on Tuesday — the registrar's deadline for all students — at the very latest.

June 11, 2019

%%% Tue Jun 11 04:48:44 PDT 2019

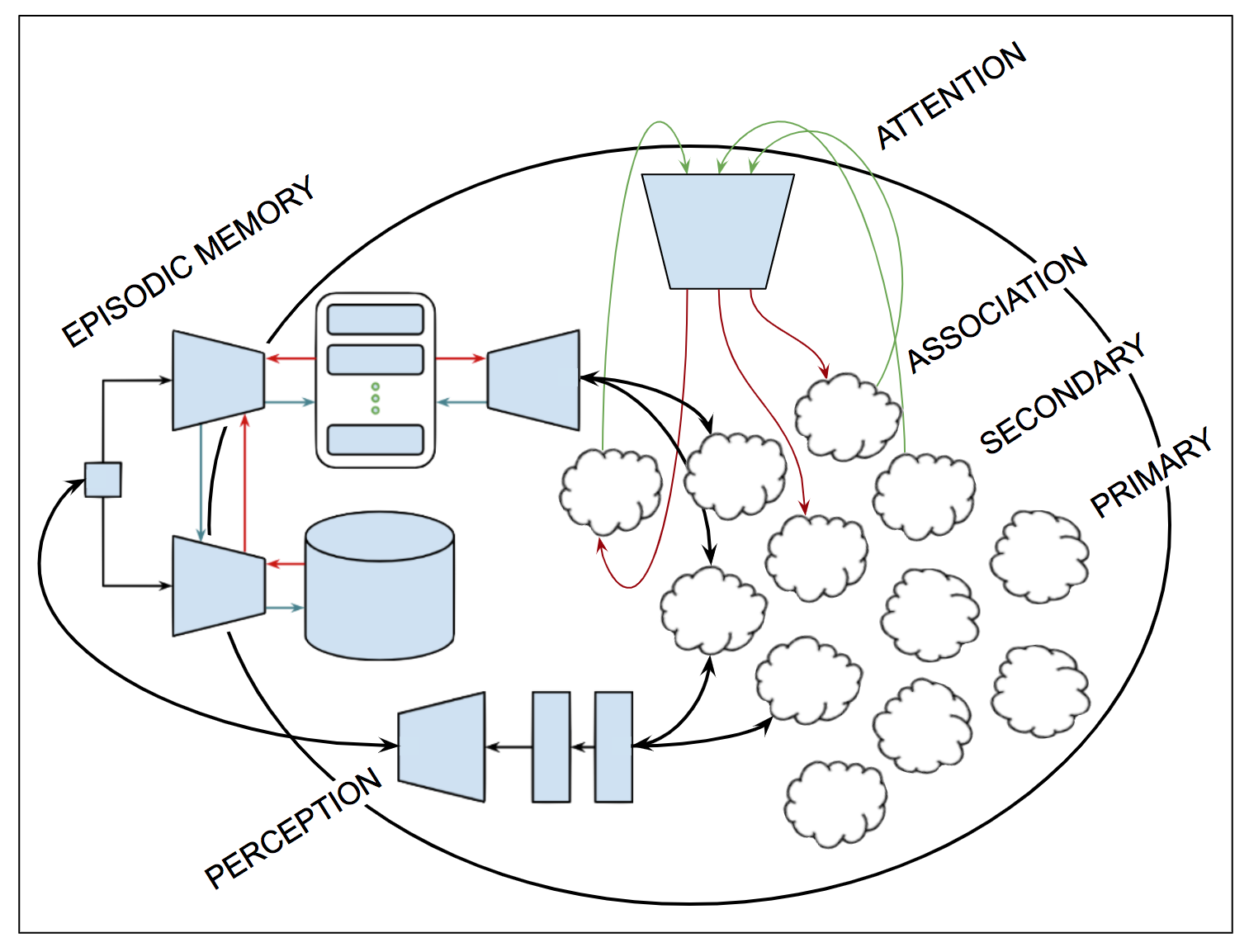

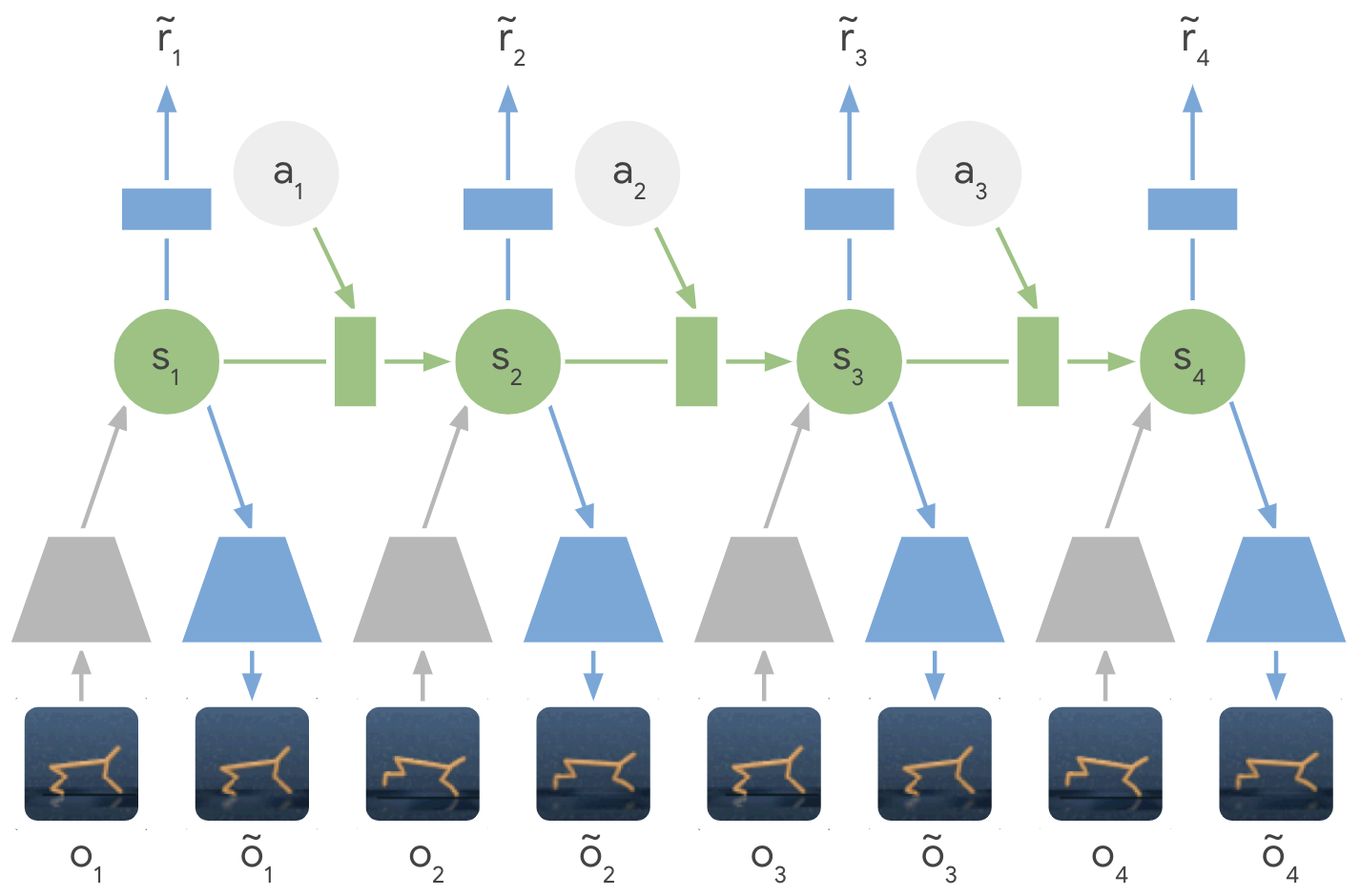

Since all of you were working hard on completing your final projects, I decided to cash out my current thinking about action selection and compositional modeling. In previous entries we've considered several aspects of the problem — some we've addressed, some we've ignored and still others we've briefly explored and then put on the back burner. This entry attempts to combine several of the ideas we've talked about in a single architecture, tracing sensory input to motor output with an emphasis on the role of the neural state vector in selecting what to do next and how libraries of subroutines provide a means for exploiting compositionality and leveraging hierarchical planning. I wrote and then destroyed a few thousand words before giving up on prose and set about trying to visualize what I have in mind. The result is summarized in Figure 78. If nothing else, I am better prepared to empathize with your struggles to take the ideas you explored in your project proposals and convert them into working code, experimental results and useful insights.

| |