Stanford Molecular Imaging Scholars (SMIS) Program Quarterly Seminar

Zoom meeting: https://stanford.zoom.us/j/99117388314?pwd=R29OSjlTdUt0a3pLaG5Zc1BFNTJIUT09

Password: 922183

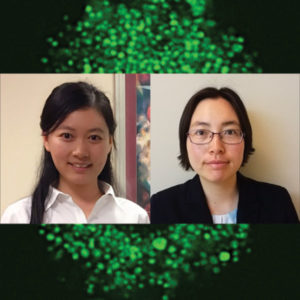

Guolan Lu, PhD

Mentors: Eben Rosenthal, MD; Garry Nolan, PhD

“Co-administered Antibody Improves the Penetration of Antibody-Dye Conjugates into Human Cancers: Implications for Antibody-Drug Conjugates”

Dianna Jeong, PhD

Mentors: Craig Levin, PhD; Shan Wang, PhD

“Novel Detection Approaches for Achieving Ultra-Fast Time Resolution for PET”

Location & Timing

August 5, 2020

8:30am-4:30pm

Livestream: details to come

This event is free and open to all!

Registration and Event details

Overview

Advances in machine learning and artificial intelligence are now reaching all areas of medicine, and they hold the potential to transform healthcare and open up a world of promise for everyone. Sponsored by the Stanford Center for Artificial Intelligence in Medicine and Imaging, the 2020 AIMI Symposium is a virtual conference convening experts from Stanford and beyond to advance the field of AI in medicine and imaging. The conference will cover a survey of the latest machine learning approaches, many use cases in depth, metrics unique to healthcare, important challenges and pitfalls, and best practices for designing, building, and evaluating machine learning in healthcare applications.

Our goal is to make the best science accessible to a broad audience of academic, clinical, and industry attendees. Through the AIMI Symposium we hope to address gaps and barriers in the field and catalyze more evidence-based solutions to improve health for all.

Judy Gichoya, MD

Assistant Professor

Emory University School of Medicine

Measuring Learning Gains in Man-Machine Assemblage When Augmenting Radiology Work with Artificial Intelligence

Abstract

The work setting of the future presents an opportunity for human-technology partnerships, where a harmonious connection between human and technology produces unprecedented productivity gains. A conundrum at this human-technology frontier remains: will humans be augmented by technology, or will technology be augmented by humans? We present our work, which overcomes the conundrum of human and machine as separate entities and instead treats them as an assemblage. As groundwork for the harmonious human-technology connection, this assemblage needs to learn to fit synergistically. This learning is called assemblage learning, and it will be important for Artificial Intelligence (AI) applications in health care, where diagnostic and treatment decisions augmented by AI will have a direct and significant impact on patient care and outcomes. We describe how learning can be shared between assemblages, such that collective swarms of connected assemblages can be created. Our work aims to demonstrate a symbiotic learning assemblage, such that the envisioned productivity gains from AI can be achieved without loss of human jobs.

Specifically, we are evaluating the following research questions: Q1: How can assemblages be developed such that human-technology partnerships produce a “good fit” for visually based, cognition-oriented tasks in radiology? Q2: What level of training should pre-exist in the individual human (radiologist) and the independent machine learning model for human-technology partnerships to thrive? Q3: In which aspects, and to what extent, does an assemblage learning approach lead to reduced errors, improved accuracy, faster turn-around times, reduced fatigue, improved self-efficacy, and resilience?

Zoom: https://stanford.zoom.us/j/93580829522?pwd=ZVAxTCtEdkEzMWxjSEQwdlp0eThlUT09

Ge Wang, PhD

Clark & Crossan Endowed Chair Professor

Director of the Biomedical Imaging Center

Rensselaer Polytechnic Institute

Troy, New York

Abstract:

AI-based tomography is an important application and a new frontier of machine learning. AI, especially deep learning, has been widely used in computer vision and image analysis, which deal with existing images, improve them, and produce features. Since 2016, deep learning techniques have been actively researched for tomography in the context of medicine. Tomographic reconstruction produces images of multi-dimensional structures from externally measured “encoded” data in the form of various transforms (integrals, harmonics, and so on). In this presentation, we provide a general background, highlight representative results, and discuss key issues that need to be addressed in this emerging field.

About:

The AI-based X-ray Imaging System (AXIS) lab is led by Dr. Ge Wang, affiliated with the Department of Biomedical Engineering at Rensselaer Polytechnic Institute and the Center for Biotechnology and Interdisciplinary Studies in the Biomedical Imaging Center. The AXIS lab focuses on innovation and translation of x-ray computed tomography, optical molecular tomography, multi-scale and multi-modality imaging, and AI/machine learning for image reconstruction and analysis, and has been continuously well funded by federal agencies and leading companies. The AXIS group collaborates with Stanford, Harvard, Cornell, MSK, UTSW, Yale, GE, Hologic, and others to develop theories, methods, software, systems, applications, and workflows.

Mixed Reality for Surgical Guidance will take place on Thursday, April 1st from 9:00 – 10:30 am PDT.

The event will start with a one-hour panel discussion featuring Dr. Bruce Daniel of Stanford Radiology and the Stanford IMMERS Lab; Christoffer Hamilton of Brainlab, a surgical software and hardware leader in Germany; and Dr. Thomas Grégory of Orthopedic Surgery at the Université Sorbonne Paris Nord.

This panel will be moderated by Dr. Christoph Leuze of Stanford University and the Stanford Medical Mixed Reality (SMMR) program.

Immediately following the panel discussion, you are also invited to a 30-minute interactive session with the panelists where questions and ideas can be explored in real time.

Register here: https://stanford.zoom.us/meeting/register/tJcqf-GrqToiHNKL4D-5haRLowQylIwMEAve

Join us for a panel on Behavioral XR on Thursday, June 3rd from 9:00 – 10:30 am PDT.

The event will start with a one-hour panel discussion featuring Dr. Elizabeth McMahon, a psychologist with a private practice in California; Sarah Hill of Healium, a company developing XR apps for mental fitness based in Missouri; Christian Angern of Sympatient, a company developing VR for anxiety therapy based in Germany; and Marguerite Manteau-Rao of Penumbra, a medical device company based in California.

This panel will be moderated by Dr. Walter Greenleaf of Stanford’s Virtual Human Interaction Lab (VHIL) and Dr. Christoph Leuze of the Stanford Medical Mixed Reality (SMMR) program.

Immediately following the panel discussion, you are also invited to a 30-minute interactive session with the panelists where questions and ideas can be explored in real time.

Register here to save your place now! After registering, you will receive a confirmation email containing information about joining the meeting.

Please visit this page to subscribe to our events mailing list.

Sponsored by Stanford Medical Mixed Reality (SMMR)

Radiology Department-Wide Research Meeting

• Research Announcements

• Mirabela Rusu, PhD – Learning MRI Signatures of Aggressive Prostate Cancer: Bridging the Gap between Digital Pathologists and Digital Radiologists

• Akshay Chaudhari, PhD – Data-Efficient Machine Learning for Medical Imaging

Location: Zoom – Details can be found here: https://radresearch.stanford.edu

Meetings will be the 3rd Friday of each month.

Hosted by: Kawin Setsompop, PhD

Sponsored by: the Department of Radiology

Stanford AIMI Director Curt Langlotz and Co-Directors Matt Lungren and Nigam Shah invite you to join us on August 3 for the 2021 Stanford Center for Artificial Intelligence in Medicine and Imaging (AIMI) Symposium. The virtual symposium will focus on the latest, best research on the role of AI in diagnostic excellence across medicine, current areas of impact, fairness and societal impact, and translation and clinical implementation. The program includes talks, interactive panel discussions, and breakout sessions. Registration is free and open to all.

Also, the 2nd Annual BiOethics, the Law, and Data-sharing: AI in Radiology (BOLD-AIR) Summit will be held on August 4, in conjunction with the AIMI Symposium. The summit will convene a broad range of speakers in bioethics, law, regulation, industry groups, and patient safety and data privacy, to address the latest ethical, regulatory, and legal challenges regarding AI in radiology.

Regina Barzilay, PhD

School of Engineering Distinguished Professor for AI and Health

Electrical Engineering and Computer Science Department

AI Faculty Lead at Jameel Clinic for Machine Learning in Health

Computer Science and Artificial Intelligence Lab

Massachusetts Institute of Technology

Abstract:

In this talk, I will present methods for assessing future cancer risk from medical images. The discussion will explore alternative ways to formulate the risk assessment task and focus on algorithmic issues in developing such models. I will also discuss our experience in translating these algorithms into clinical practice in hospitals around the world.

Keynote:

Self-Supervision for Learning from the Bottom Up

Why do self-supervised learning? A common answer is: “because data labeling is expensive.” In this talk, I will argue that there are other, perhaps more fundamental reasons for working on self-supervision. First, it should allow us to get away from the tyranny of top-down semantic categorization and force meaningful associations to emerge naturally from the raw sensor data in a bottom-up fashion. Second, it should allow us to ditch fixed datasets and enable continuous, online learning, which is a much more natural setting for real-world agents. Third, and most intriguingly, there is hope that it might be possible to force a self-supervised task curriculum to emerge from first principles, even in the absence of a pre-defined downstream task or goal, similar to evolution. In this talk, I will touch upon these themes to argue that, far from running its course, research in self-supervised learning is only just beginning.