This document serves as an appendix to the class discussion list from CS379C taught in the Spring of 2020. Due to the disruption caused by the SARS-CoV-2 outbreak and ensuing pandemic, it was decided that students would not be required to produce a final project including working code. In the last few classes, we discussed possible coding projects that would demonstrate some of the more ambitious ideas we explored during the quarter, and, as an exercise, fleshed out a project related to what we had been calling the SIR task borrowing from O'Reilly and Frank [98] described in this appendix.

The entries in this appendix are listed in reverse chronological order, in keeping with the format of the class discussion lists. The oldest entry summarizes our progress immediately following the end of classes. If you arrived at this page by clicking on a link in some other document, you might want to refer to the last status update on the project recorded in this document which is available here. The most recent – and also final – post in this document is here and speaks less to the project and more to our preliminary thinking about topics for the 2021 class.

December 31, 2020

%%% Thu Dec 31 09:34:32 PST 2020

The following is a redacted and annotated transcription – text set off in [square brackets] is likely a transcription error – of Chapter 14 from the audiobook version of [123] which is itself largely adapted from the earlier treatment in [122]. If you are interested in following up, the earlier book, Figments of Reality, though uneven and intellectually overreaching in some parts, has much to recommend it as a source of interesting viewpoints and thought provoking theories. I mostly agree with Melanie Mitchell's review in the New Scientist [86]. It may seem an odd reference for a scientist / engineer to draw insight from, but the general topic and main premise are highly relevant to our discussion of [1], and so please bear with me:

Now look at a baby in a pram throwing its rattle out onto the pavement for mummy or child-minder or indeed passers-by to retrieve we probably think that the child is not coordinated enough yet to keep its rattle within reach we think lost property, then we see mummy give the rattle back to the child to be rewarded with a smile and we think, no it's more subtle there is a baby teaching its mother to fetch just as we adults do with dogs now we think object.The baby smile is itself part of a complex reciprocal system of rewards that were set up long ago in evolution. We watch babies copy the smiles of parents, but no, it can't be copying because even blind babies smile, anyway copying would be immensely difficult from anywhere on the retina, the undeveloped brain must [sort out a face] with a smile then work out which of its own muscles to work to produce that effect without a mirror.

No! It's a pre-wired reflex; babies reflexively react to cooing sounds and to pre-wired recognition of smiles and upwardly curved lines on a piece of paper works just as well. Smile [icon] rewards the adult who then tries hard to keep the baby doing it. The complex interactions proceed changing both participants progressively.

They can be analyzed more easily in unusual situations such as cited children with signing parents, perhaps deaf or dumb but occasionally as part of a psychological experiment. For example, in 2001 a team of Canadian researchers headed by Laura-Ann Petito studied three children about six months old, all with perfect hearing, but born to deaf parents [102, 103].

The parents cooed over the babies in sign language and the babies began to babble sign language? That is make a variety of random gestures with their hands in return. The parents used an unusual and very rhythmic form of sign language quite unlike anything they would use to adults.

Similarly adults speak to babies in a rhythmic sing-song voice and between the ages of about six months and a year the babies' babel takes on properties of the parents' specific language. They are rewiring and tuning their sense organs - in this case the cochlea, to hear that language [best]. Some scientists think that babbling sounds is just random opening and closing of the jaw, but others are convinced it is an essential stage in the learning of language.

The use of special rhythms by parents and the spontaneous babbling with hand movements when the parents are deaf indicate that the second theory is closer to the mark. Petito suggests that the use of rhythm is an ancient evolutionary trick exploiting the natural sensitivities of the young child.

As the child grows its complex interaction with surrounding humans comes to produce wholy unexpected results, what we call emergent behavior meaning that [it is not apparent in the behavior of the individual component systems as they perform independently, but only in the situation in which two or more systems interact]. We call the process a complicity. The interaction of an actor with an audience can build up a wholly new and unexpected relationship.

The evolutionary interaction of blood sucking insects with vertebrates paved the way for protozoan blood parasites that cause diseases like malaria and sleeping sickness. A car and driver behaves differently from either [one] alone, and car and driver and alcohol is even less predictable. Similarly human development is a progressive interaction between the child's intelligence and the cultures extelligence a complicity1.

This complicity progresses from simple vocabulary learning to the syntax of little sentences and the semantics of fulfilling the child's needs and wants and the parents' expectations. The beginning of storytelling then becomes an early threshold into worlds that [] chimpanzees know not of. The stories that all human cultures use to mold the expectations and behavior of the growing child use iconic figures, there are always some animals and then status figures of the culture, e.g., princesses, wizards, giants and mermaids. These stories sit in all our minds contributing to [our acting out our thinking about and predicting] what will happen next as caveman or cameraman.

We learn to expect outcomes of particular kinds frequently expressed in ritual words and "they all lived happily ever after" or "so it all ended in tears". as G. K. Chesterton pointed out, fairy tales are certainly not as modern detractors of the fantasy genre believe set in a world where anything can happen be existed in a world with rules, "don't stray from the path", "don't open the blue door", "you must be home before midnight" and so on in a world where anything could happen you couldn't [have stored words at all].

The stories that have been used in England over the centuries have changed in complicity with the changing culture making the culture change and responding to those changes like a river changing its path across a wide floodplain that it has itself built the Grimm brothers and Hans Christian Anderson were but the last of a long series with Charles Perrault's accumulating the mother goose tails around 1690, there were many collections before that especially some interesting Italian groupings and retellings for adults [40].

The great advantage we all get from this programming is very clear; it trains us to do 'what if' experiments in our minds using the rules that we picked up from the stories just as we picked up syntax by hearing our parents talking these stories of the future enable us to set ourselves in an extended imagined present just as our vision is an extended picture reaching much further out in all directions than the tiny central part to which we're paying attention.

These abilities enable each of us to see ourselves as being set in a nexus of space and time our here and now form only the starting place for our seeing ourselves in other places at other times this ability has been called time-binding and has been seen as miraculous but it seems to us but it is the culmination for now of an entirely natural progression that starts from interpreting and enlarging vision or hearing and from making sense in general the extelligence uses this faculty and how.

[Each telling] improves it for each of us so that we can use metaphor to navigate our thoughts. Pooh bear getting stuck and unable to exit with dignity because he ate too much honey is precisely the kind of parable that we carry with us to guide our actions as metaphor from day to day. [TLD: Ask yourself, do humans learn language in order to read, learn and understand stories, or do we learn, listen, and seek to understand stories in order to learn language. Expressed in another way, is human language essentially a technology for creating, adapting, revising and utilizing stories. Think about the implications of this insight for building intelligent artifacts including not just individual, independent humanoid AI agents, but also collections of AI agents that learn by sharing stories among themselves and with us, not only accelerating their learning skills but also our incorporating our ethos into their values and aspirations.]

Thought Experiments: As an exercise, think about how a teacher might use stories and puzzles to teach students algorithmic thinking. Since logic is essential for understanding, designing and verifying programs, include exercises employing logic to analyze program properties. The following is sketchy and could be improved to reach a broader audience, but it illustrates the main features I have in mind for this exercise:

Imagine a row of soldiers standing in a column or a row of children sitting in desks in a classroom, and imagine the participants – soldiers or students – are told to compare their height with the height of the person directly behind them, and change positions – desks in the row or places in the column – if they are taller than this person.

The goal is to arrange the participants so that every student has an unobstructed view of the blackboard in the front of the room, or the soldiers an unobstructed view of their drill sergeant. Is it inevitable that this could always happen? For sake of this discussion, you can assume that if the participants are ordered by their height then every participant has an unobstructed view of what is ahead.

As you will see, it gets a bit complicated if everyone did this at the same time, and so imagine that this done one person at a time starting from the front of the row / column and working backward to the last person. Imagine two adjacent persons standing in line, they turn to face one another and one of them uses her hands to indicate the distance between the top of her head and the top of her partner's head. Note that because the participants change position, the "next" person in the line is poorly defined. Why is this a problem? Give an example of how such ambiguities might arise and suggest a way of resolving it.

What would happen if you carried out this process repeatedly? Would there come a time when repeating the process yet again would result in no changes in the order of the participants? If you think not, provide an example of an ordering and demonstrate how this might happen. Consider the case in which two adjacent participants disagree about which one of them is tallest, and they alternate between being the one to make the final determination. How might you resolve this so that any two participants will always agree on which one is tallest?

If you think repeating the process indefinitely will always result in a state in which repeating it one more time will result in no changes, construct an argument proving that this is so. One way to prove this statement is to assume that the statement is false and demonstrate that this leads to a logical contradiction, i.e., an absurd conclusion. This is called proof by reductio absurdum. Look up Peano's Axioms and prove that the set of natural numbers is infinite by reductio absurdum.

Another strategy is to demonstrate it works for a row consisting of two participants – the so-called base case, and then demonstrate that, if it works for a row consisting of N participants, then it works for a row consisting of N + 1 participants – this is referred to as the inductive step in a proof by induction. See if you can prove by induction that repeating the process described above will always terminate in a state such that every participant has an unobstructed view of what is ahead.

Miscellaneous Loose Ends: Students not familiar with some of the recent innovations in designing objective functions for reinforcement learning might find this note of some interest2.

December 23, 2020

%%% Wed Dec 23 05:04:14 PST 2020

In Sunday's group meeting I volunteered to provide a sample of papers and references relating to the alignment of neural circuits implicated in processing semantic information and those responsible for facilitating the production and understanding of language by means of speaking, writing and signing in the case of production, and listening, reading and observing in the case of understanding – language is more than simply a spoken medium for communication.

I recommend the following survey / review papers by Gregory Hickock and David Poeppel as a starter [105, 54, 55]. If you are ambitious, you might sample the reference book by Hickok and Small [53], which I recommend reservedly on the basis of the relatively few papers I've read in the almost 2,000 page compendium – ask me if you would like access to any of these resources:

@book{HickokandSmall2015neurolanguage,

title = {Neurobiology of Language},

author = {Hickok, G. and Small, S.L.},

publisher = {Elsevier},

year = {2015},

abstract = {Neurobiology of Language explores the study of language, a field that has seen tremendous progress in the last two decades. Key to this progress is the accelerating trend toward integration of neurobiological approaches with the more established understanding of language within cognitive psychology, computer science, and linguistics. This volume serves as the definitive reference on the neurobiology of language, bringing these various advances together into a single volume of 100 concise entries. The organization includes sections on the field's major subfields, with each section covering both empirical data and theoretical perspectives. "Foundational" neurobiological coverage is also provided, including neuroanatomy, neurophysiology, genetics, linguistic, and psycholinguistic data, and models.}

}

My lecture notes for CS379C on May 5 and the follow-up discussion and refined model on May 17 focus primarily on an exercise to come up with a simple model of inner speech inspired by the 2013 book by Fernyhough [41] and related journal articles [64, 5, 4]. In particular, Fernyhough and McCarthy-Jones [42] provide an interesting analysis of the various pathologies relating to inner speech that draws upon the literature on developmental psychology and neuroscience to postulate possible accounts of the origin of these maladies:

@incollection{FernyhoughandMcCarthy-Jones2013hallucination,

author = {Fernyhough, Charles and McCarthy-Jones, Simon},

title = {Thinking Aloud about Mental Voices},

booktitle = {Hallucination: Philosophy and Psychology},

editor = {Fiona Macpherson and Dimitris Platchias},

publisher = {The MIT Press},

year = {2013},

abstract = {It is commonly believed that auditory verbal hallucinations (AVHs) come from a misattribution of inner speech to an external agency. This chapter analyzes whether a developmental view of inner speech can deal with some of the problems associated with inner-speech theories. The chapter examines neurophysiological and phenomenological evidence relevant to the issue and looks up some key issues for future research.}

}

The conventional view of speech as primarily localized in Broca's and Wernicke's areas in the frontal and temporal lobes has yielded to a more nuanced distributed model that engages a diverse collection of cortical areas – see here, but if you want to understand how language and meaning are structurally and computationally related in the brain, you'll need to study the multi-modal sensory-motor association areas in the temporal and parietal lobes, the dual "what-versus-where" streams of the auditory and visual cortex, and the reciprocal connections related to Fuster's hierarchy and the action-perception cycle.

As a start you might take a look at the relatively recent work revealing insights about functional anatomy of language, where by function we include the relationship between the production of language by speech and signing and the interpretation of linguistic artifacts. See Huth et al [61] for an fMRI study out of Jack Gallant's group at UC Berkeley resulting in a semantic map of the cerebral cortex identifying putative semantic domains3.

@article{HuthetalNATURE-16,

author = {Huth, Alexander G. and de Heer, Wendy A. and Griffiths, Thomas L. and Theunissen, Fr\`{e}d\`{e}ric E. and Gallant, Jack L.},

title = {Natural speech reveals the semantic maps that tile human cerebral cortex},

journal = {Nature},

publisher = {Nature Publishing Group},

volume = {532},

issue = {7600},

year = {2016},

pages = {453-458},

abstract = {The meaning of language is represented in regions of the cerebral cortex collectively known as the 'semantic system'. However, little of the semantic system has been mapped comprehensively, and the semantic selectivity of most regions is unknown. Here we systematically map semantic selectivity across the cortex using voxel-wise modelling of functional MRI (fMRI) data collected while subjects listened to hours of narrative stories. We show that the semantic system is organized into intricate patterns that seem to be consistent across individuals. We then use a novel generative model to create a detailed semantic atlas. Our results suggest that most areas within the semantic system represent information about specific semantic domains, or groups of related concepts, and our atlas shows which domains are represented in each area. This study demonstrates that data-driven methods--commonplace in studies of human neuroanatomy and functional connectivity--provide a powerful and efficient means for mapping functional representations in the brain.},

}

December 19, 2020

%%% Sat Dec 19 04:53:41 PST 2020

Thanks to Chaofei for pointing out the Abramson et al [1] paper from DeepMind4. It is definitely relevant to our discussion last Sunday, and, in particular, to the debate between Gene, Yash and I on the best strategy to bootstrap learning in the model we're working on. The motivation spelled out in the first few pages of the paper reminds me of my early thinking about the programmer's apprentice and in particular the collateral benefits of having the apprentice interact with the programmer on the target task – using simple language, but also pointing, and other non-linguistic signals, and the importance of grounding language in target task, in our case, of interpreting and writing programs.

The DeepMind paper reinforces this intuition and makes the crucial connection between goals and rewards in the spectrum of imitative learning from the simplest, most primitive goals to complex plans that compose those primitives to achieve complex tasks. Relevant to our discussion last Sunday, infants learn how to point, compare, swap, order, combine, select, discard, attend and achieve other simple goals without supervision in their first few months postnally. Later, in the process of acquiring language, they learn to associate words and gestures with these simple goals, generalize them to apply to different contexts, and combine them to form plans for solving more complicated problems.

The DeepMind authors underscore the importance of language in learning how to solve problems and acquire knowledge. By attaching a word to performing a comparison or swapping two items, children acquire a linguistic affordance that serves as the locus for acquiring and applying knowledge concerning its use [82]. Yash and I were advocating we bootstrap learning by having the system learn to imitate, replicate and experiment with these primitive goals in different contexts as prologue to learning how to apply them to solving more complicated problems in the same way that baby chicks mirror the sounds that their parents make in preparation for learning species-specific alarm calls and intricate mating songs – see the discussion here.

The process whereby children acquire these primitives goes by different names in different disciplines, e.g., imitation learning, behavioral cloning, mirroring, motor babbling, etc. Whereas language is the key to generalizing and extending these primitives in humans, there are likely non-linguistic solutions to this problem, including, for example, simple pidgin proto languages, signing and pantomiming that can serve in this capacity. As for a mechanism, there is evidence that mirror neurons facilitate such learning in macaques and humans, as well as song birds and very likely in other species in which we have observed sophisticated behavioral imitation and the rapid sharing of novel tool use [144].

It is a misconception to think that what infants learn in their first few weeks is fundamentally simple when compared to learning how to transfer the contents of working memory between registers so as to provide the foundation for comparing, ordering, combining, sequencing, etc. The motor skills a baby acquires in its first few months require the integration of neural activity across multiple scales and include learning how to control circuits in the motor cortex, brain stem and spinal cord, as well as mechanosensory neurons located within muscles, tendons and joints. Learning such skills in chordates is facilitated by an inductive bias implicit in the manner in which bones, muscles and ligaments constrain movement to carry out useful motion.

This early learning depends on the accuracy and specificity of an action-perception cycle that, among other features, serves to align and subsequently maintain the alignment of our movements with the proprioceptive sense of the body. In the case of our model, proprioceptive awareness corresponds to accurately aligning the selection of registers and transfer of register contents with the spatiotemporal layout of working memory. In our proposed bootstrapping strategy, motor babbling amounts to training the system to reliably perform register transfers by imitating the output of the register-machine compiler in generating microcode instructions for representative code fragments.

Josh is not listed among the authors of Abramson et al [1], but Greg is one of the two corresponding authors, and his influence is apparent throughout. In a previous message, I called attention to the section in Merel et al [83] on inter-regional control communications, targeting the role of the coding space and related coding schemes in terms of facilitating communication between layers in hierarchical and modular learning systems. If you haven't read the section in [83] on ethological motor learning and imitation, see the excerpt in the footnote at the tend of this sentence as it anticipates the discussion in Abramson et al [1]6.

The authors take some time in describing the challenges involved in enlisting human agents to train artificial agents to produce human-like responses, concluding that "before we collect and learn from human evaluations, we argue for building an intelligent behavioral prior: namely, a model that produces human-like responses in a variety of interactive contexts", and so then turn to "imitation learning to achieve this, which directly leverages the information content of intelligent human behavior to train a policy."

The input space of the agent is multi-modal but steps are taken to reduce the complexity of the joint state space by substantially constraining each modality. The visual input to the agent is low resolution "[a]gents perceive the environment visually using RGB pixel input at resolution of 96 × 72," and artificial affordances facilitate performing visuomotor actions, e.g., "[w]hen an object can be grasped by the manipulator, a bounding box outlines the object." Language input and output is simplified by using a restricted vocabulary, "[w]e process and produce language at the level of whole words, using a vocabulary consisting of the approximately 550 most common words in the human data distribution."

The underlying Markov process is assumed to be partially observable, "[a]t any time t in an episode, the policy distribution is conditioned on the preceding perceptual observations, which we denote (o0, o1, …, ot) for 0 ≤ t, and the policy is autoregressive, "[t]hat is, the agent samples one action component first, then conditions the distribution over the second action component on the choice of the first, and so on." Their choice of GAIL (Generative Adversarial Imitation Learning) as the basic building block for their multi-modal transformer (MMT) architecture makes sense given the characteristics of their objective and the underlying dynamics [56, 143, 51].

The authors quite reasonably restrict the content of the dialogue between student and teacher in order "to produce a data distribution representing certain words, skills, concepts, and interaction types in desirable proportions" by using a controlled data collection methodology based on events called language games in homage to Ludwig Wittgenstein [148]. It is worth a moment to reflect on this approach, given that the point of the our exercise here is to separate the laudable high aspirations of Abramson et al [1] from the modest, yet noteworthy preliminary steps they chronicle in the paper, and see if we can learn from their experience.

While the problem addressed by Abramson et al is different in both scope and target skill from our work, there are some lessons we can apply to the problem we are working on. In our model, the environment corresponds to that part of the model separate from the basic agent architecture. This environment is accessible directly through the input stream and actionable through the output stream. We have access to unlimited ground truth through the DRM compiler, so that the size and distributional characteristics of samples are completely under the control of the curriculum learning system.

Apart from the superficial differences, what sets their approach from ours comes down to the power of language to efficiently convey skills between agents sharing the same language and general development trajectory. It would be worth talking about this during Sunday's meeting as I think it might resolve – or at least refocus – some of the issues that came up last Sunday regarding how to train the lowest level in our hierarchical model and what we expect to learn in higher levels. As preparation for such a discussion, see here for a brief discussion of the naïve physics of swapping registers.

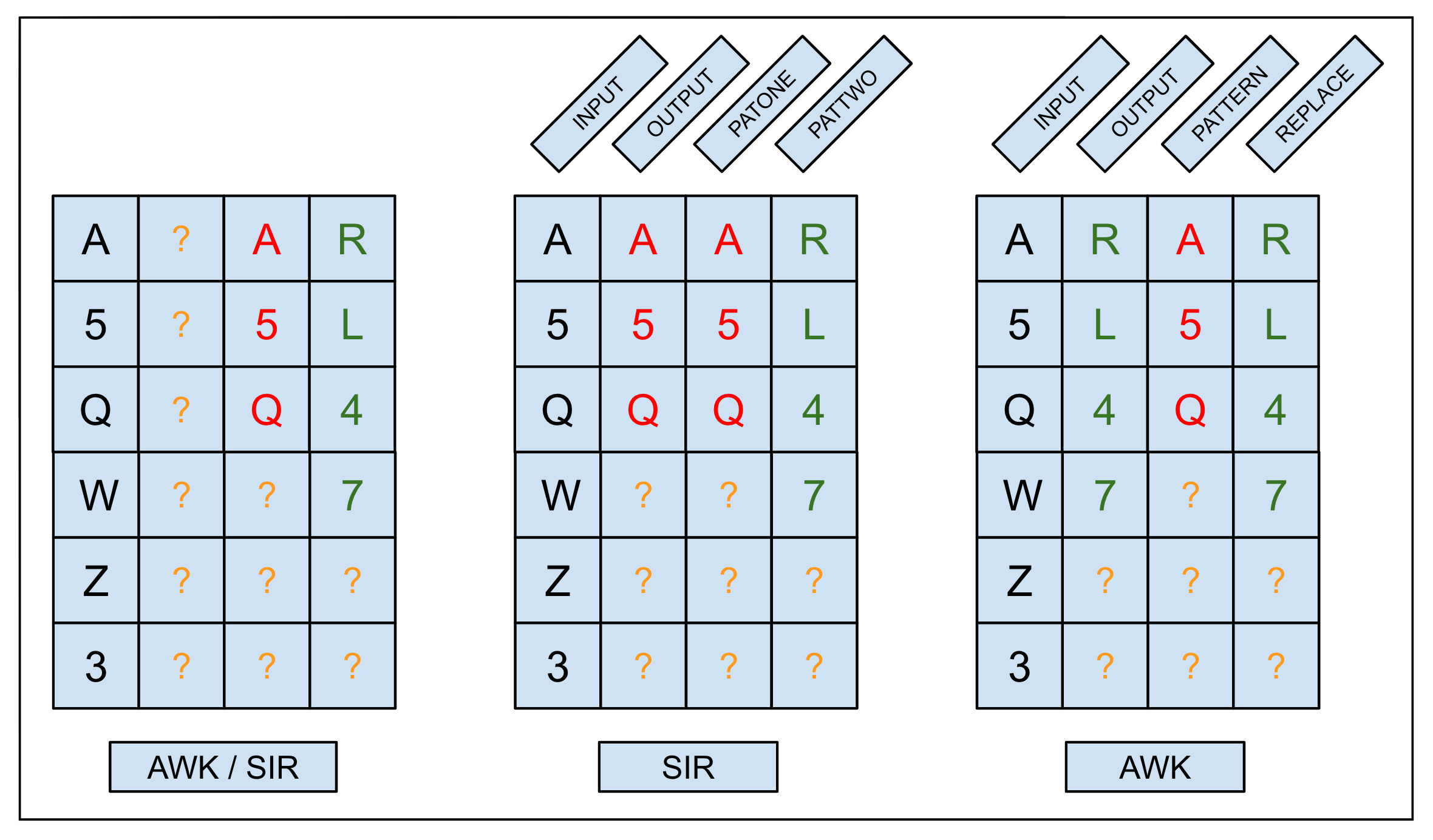

Miscellaneous Loose Ends: Figure 1 lists the implementations of two simple sorting algorithms that run on the current instantiation of the virtual register-machine – there is a subtle caveat regarding this claim that we will address shortly. Both algorithms are variants of bubblesort and, while they are neither space- nor time-efficient, efficiency is not the point of this exercise. This is primarily an exercise in understanding the difficulty of learning different algorithmic strategies:

The algorithm on the left consists of two recursive procedures: an inner loop that scans through the input sequence reversing consecutive pairs not in ascending order, and an outer loop that manages the use of the two register blocks in working memory. The algorithm uses the two register blocks that we employ for two-pattern SIR and AWK substitution tasks, by swapping back and forth between the two blocks until no additional changes are required. In each iteration, the inner loop reads from one register block and writes to the other.

| |

| Figure 1: Two variants of bubblesort. The one on the left works by reading from one register block and writing to the other, and alternating between — or swapping — the two register blocks. The one on the right reads from and writes to the same register block and only uses two blocks of the other register block for temporary storage in swapping the contents of two register blocks. The algorithm on the right is slightly more space efficient, requiring only O(n + 1) rather than O(2n) space. | |

|

|

An alternative would be to learn now to swap the contents of two registers, an operation that requires the use of another register for temporarily storing the contents of one register so as not to overwrite the only copy of the contents of the other. By swapping the contents of two registers, we can modify the algorithm to require O(n + 1) rather than O(2n) space. The algorithm on the right implements this strategy. It is interesting to think about which of the two algorithms would be easier to learn.

What does it mean to swap the contents of two registers? It's not enough to describe the state just prior to and immediately following the swap. If you want to understand the concept of a swap well enough to apply it in a novel situation, correct a flawed application of the concept or explain it to someone else, then you have to understand the concept of temporary storage, the potential destructive consequences of assigning a value to a variable that already has a value, and the general notion of protecting information necessary to complete a given task.

The programmer's apprentice would need to know this sort of thing, and while the programmer could, in principle, teach the apprentice, the problem is that there is good deal of other practical knowledge the apprentice would have to master before the programmer could even explain the relevant concepts. It is not clear to me whether or how the agent described in Abramson et al [1] would acquire this knowledge; my conjecture is that the answer is probably "yes, in principle", but that it may require more extensive preparatory training than the current corpus of language games supports.

December 5, 2020

%%% Sat Dec 5 04:49:23 PST 2020

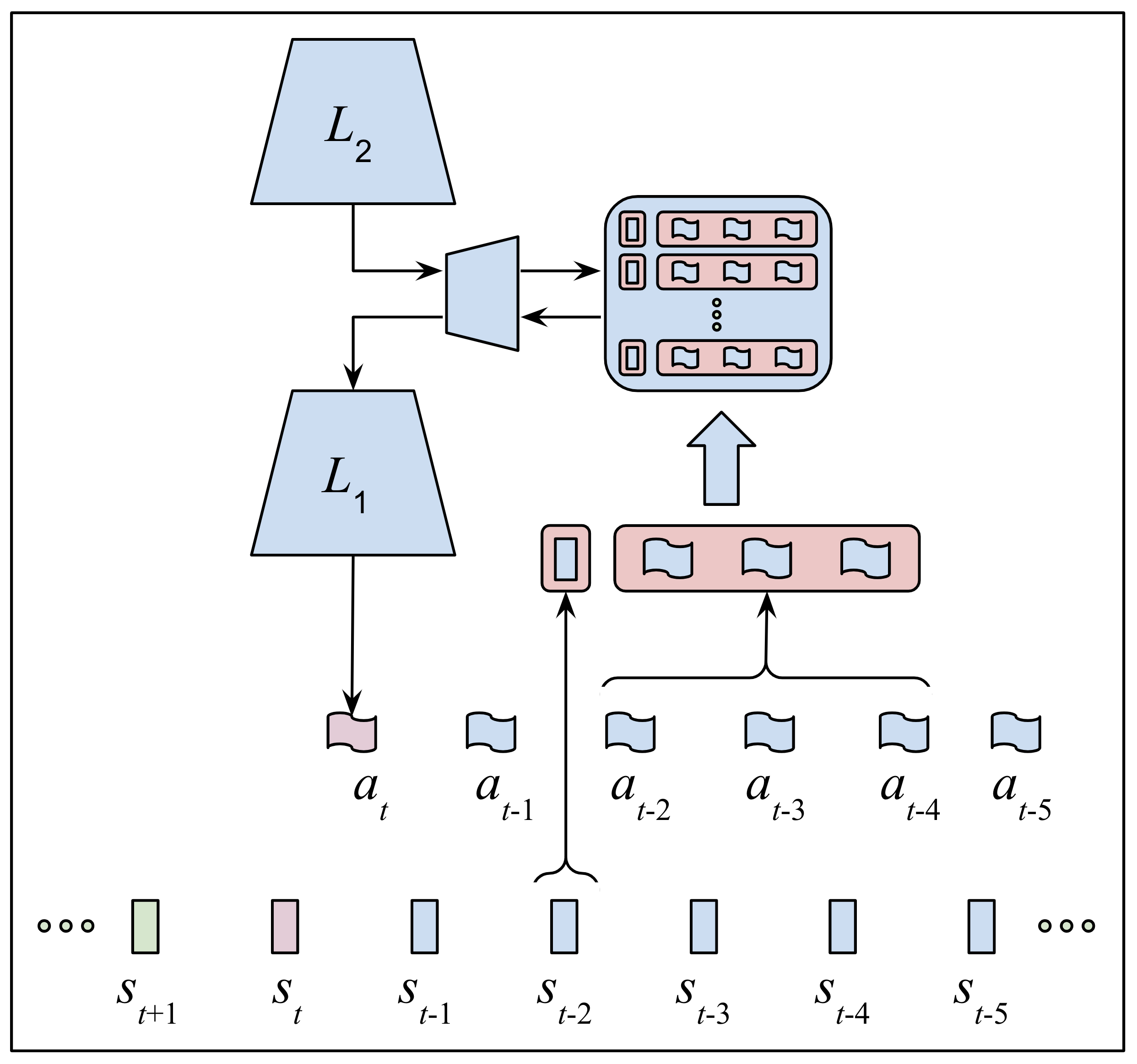

The most interesting takeaway from last Sunday's meeting is that Yash made a compelling case that most if not all of the functionality packed into this figure could be integrated into existing circuits in the model shown in the earlier figure. Yash's reasoning and the discussion that followed delved into how CNN layers in a transformer stack could learn complex compositional representations and how different flavors of experience-replay, e.g., prioritized, hindsight, etc. could be used to address the problems anticipated in learning the models we are proposing.

In the process of this discussion, I realized that the separate DNC-based, hippocampal-inspired complementary-learning-system that I envisioned was motivated by my desire to highlight functions as separate components in a single architecture and mitigate problems associated with attempting to learn sharply-defined ("symbol-like") features rather than embrace the distributed nature of neural computation in humans and the radical end-to-end-trained connectionism espoused in the PDP books and current machine learning practice. I haven't completely shed my biases, but I can appreciate the simplicity and elegance of the model Yash sketched in our discussion.

November 27, 2020

%%% Fri Nov 27 04:23:17 PST 2020

This entry is the latest installment in a series of exercises intended to step back and summarize the architecture as a whole and highlight specific innovations we have introduced to address the challenges of learning how to interpret and ultimately write programs designed to solve specific tasks. The BibTeX records including all of the abstracts are provided for your convenience in this footnote7.

A Differentiable Register Machine (DRM) is a type memory network [126] related to the Differentiable Neural Computer [50] (DNC) and its predecessor, the Neural Turing Machine [49], but emulating a class of automata called Register Machines featuring a Turing Complete instruction set consisting entirely of operations that transfer the contents of one register to another.

Working memory consisting of a collection of registers all the same size and each one capable of containing a single one-hot vector representing an alphanumeric character or special codon, e.g., an end-of-sequence marker. This memory is highly structured spatially in terms of its layout and patterns of access, and temporally in terms of how it changes over time as transfers between registers corresponding to DRM microcode instructions are executed altering the contents of working memory in the process of emulating programs.

The rest of the neural network architecture employs the DRM similarly to how a novice programmer might use an integrated development environment (IDE) in the process of learning how to interpret programs written in a high-level functional programming language such as Scheme, a modern dialect of Lisp that we employed to writer a compiler for the DRM we use to train the model. We’ll refer to this neural network as a Teachable Executive [Control|Coder] [Homunculus|Hierarchy] (TECH) – see Figure 2 for a relatively current sketch of the evolving network architecture.

| |

| Figure 2: The above graphic was part of a slide presentation for a talk I gave in Jay McClelland's regularly scheduled lab meeting on November 10, 2020. The centrally-situated, primarily black line-drawing of the neural network architecture is a slightly modified rendering of the architecture at the time of the talk generated by Yash Savani. The smaller, inset graphics surrounding the architecture drawing are borrowed from the three papers referenced in the inset bibliography. These three papers along with Merel et al [83] substantially influenced our design choices. | |

|

|

For explanatory purposes, you can think of the TECH neural network architecture as having the basic layout of a Transformer architecture of the sort you might use for machine translation or some other NLP task, but with four important differences:

A decoder that exploits the spatial and temporal structure inherent in the time series of DRM registers as they change over time in the process of executing DRM microcode instructions.

Specifically, this decoder is implemented by the attentional layers in the decoder of a conventional Transformer stack [111], aided by positional encodings and relative positioning constraints specific to the architecture of working memory that capture syntactic structural relationships between registers and their contents, providing guidance in the form of the softmax output highlighting content relevant to action selection in the downstream Monte Carlo Tree Search [141] (MCTS) component – not shown in Figure 2.

An encoder that compensates for the partially observable characteristics of the DRM time series by learning to incorporate task-relevant information about the past into its current summary of the evolving state of the DRM and its predictions of future states and their associated rewards for the purpose of reinforcement learning.

Specifically, this encoder is implemented as a variational autoencoder (VAE) and roughly corresponds to the encoder in a conventional Transformer stack. This particular class of VAE [132] employs a discrete latent representation to exploit the semantic spatiotemporal relationships implicit in the time series of register contents necessary to unambiguously identify register transfers in the lowest level in the hierarchy and subroutine selection in the higher levels.

A hierarchy of procedural abstractions that reduce the combinatorics of generating sequences of actions and that roughly correspond to the abstractions employed in software engineering to create modular artifacts that facilitate code reuse and simplify the development of complex software systems – see the May 19th entry in this log for Tishby and Polani's analysis of hierarchies of sensing-acting systems [127].

A more sophisticated method than the traditional beam search [68] for searching for the next action to perform analogous to choosing the next word in the case of machine translation or document summarization [119, 141], combined with learning a discrete latent representation that generates discrete codes – see the November 4th entry in this log for more technical detail regarding the class of vector-quantization variational autoencoders (VQ-VAE) [132].

Aside from the design of the differentiable register multiplexer – DRM switchboard – used to select and transfer information between registers, the only remaining major architectural component concerns the network responsible for compiling L1 primitives in order to generate the library of subroutines that will serve as L2 primitives. We imagine a two tiered system that includes a long-term consolidation memory containing program traces that have been adapted and indexed to apply to a wider range of situations than those observed during L1 training.

|

The above graphic depicts the contents of consolidation memory as a collection of computation trees constructed from multiple trace fragments using branch points identified in program execution traces to define the beginning and end of candidate subroutines. In addition to the consolidation memory, there is a smaller, shorter-term memory system that serves as a staging area and buffer for subsequent consolidation, rehearsal and the application of reinforcement learning strategies such as prioritized experience replay [91, 79, 113] and its extensions and refinements including hindsight experience replay [95, 9].

The resulting architecture will be challenging to train and likely require several different training strategies including both unsupervised methods, for example, imitation learning and a cognitive version of what is referred to as motor babbling in robotics, and supervised methods, and several methods for using Monte Carlo tree search to accelerate reinforcement learning [104, 134, 119, 141]. The remainder of this entry concerns our current plans for how to compile subroutines.

The following discussion represents a proposal for solving the three problems described in the November 19th entry of the appendix log. It addresses all three of the problems but the proposed solutions to these problems are interwoven and so I will lay out the proposal and hopefully it will be convincing that the proposed approach deals with the three problems.

In thinking about how Level I subroutines might have to be padded with sequences of individual register transfers in order to bridge the gap – or interpolate – between subroutines, I thought more about the problem of segmenting sequences of register transfers into reusable subsequences that can be combined and used as subroutines, and it occurred to me that this is already done implicitly by the compiler in the process of interpreting programs as sequences of procedure calls. The compiler-generated segmentations do not leave gaps and consistently produce the same sequence of subsequences as output given the same input / initial conditions, thereby providing a reliable source for training samples.

This approach to generating training data is especially powerful in the case of programs written in a traditional functional programming language in which iteration is carried out by recursive calls to the same procedure. We could, of course, disentangle traditional iterative constructs such as FOR and WHILE loops and treat the blocks of code within these constructs as reusable components but I predict that would be complicated and in any case unnecessary if we rely solely upon recursion.

Importantly, by constructing subroutines at the level of procedure calls, we finesse the problem of interpolating between subroutines by eliminating the gap altogether. In principle, we could build a more fine-grained set of reusable components by compiling a collection of subroutines that includes every expression occurring within procedure. If this strategy is successful it would eliminate altogether the need for run-time interpolation. It probably would not, however, eliminate the need for error correction in cases where the errors requiring correction are due to faults introduced in the process of compiling subroutines.

I have come up with several strategies for packaging, storing and retrieving subroutines, and so far they all have too many moving parts and involve sharing responsibility – and therefore coordination – across several architectural components. The strategy proposed here is a significant departure from all of the earlier ones, in that it packs all of the required functionality within a single architectural component and no doubt has its inspiration – and possibly its very origin – from one or more of the many papers about neural coding that I've read in the last three or four weeks.

We'll start with some assumptions and treat them for the remainder of this document as being valid – we can discuss this on Sunday if any of them seem particularly murky or improbable to you. The basic concepts build upon the Kumaran et al [73] paper on complementary learning systems and two papers about episodic memory and the hippocampus, one by Samuel Gershman and Nathaniel Daw [48] and the other by Dharshan Kumaran and Jay McClelland [74] both of the latter revisiting the role of the hippocampus focusing on integrative features of the hippocampal complex that have come to light in the past few years. In keeping with our approach all along in gleaning insight from cognitive neuroscience, we borrow the insights we deem useful and ignore most of the biological details in translating those insights into code.

Instead of thinking of the hippocampus as a stable repository for storing episodic memories, think of it as a dynamic sketchpad for storing and refining potentially useful procedural knowledge that is constantly in flux as useless or superseded knowledge is culled out, new information integrated with older, out-of-date information, and procedures in the form of plans or specialized courses of action are altered in their scope, optimized and corrected in light of new experience. All of this machinery is built upon a high capacity memory system that can retain memories indefinitely while at the same time offering the read and write flexibility of working memory.

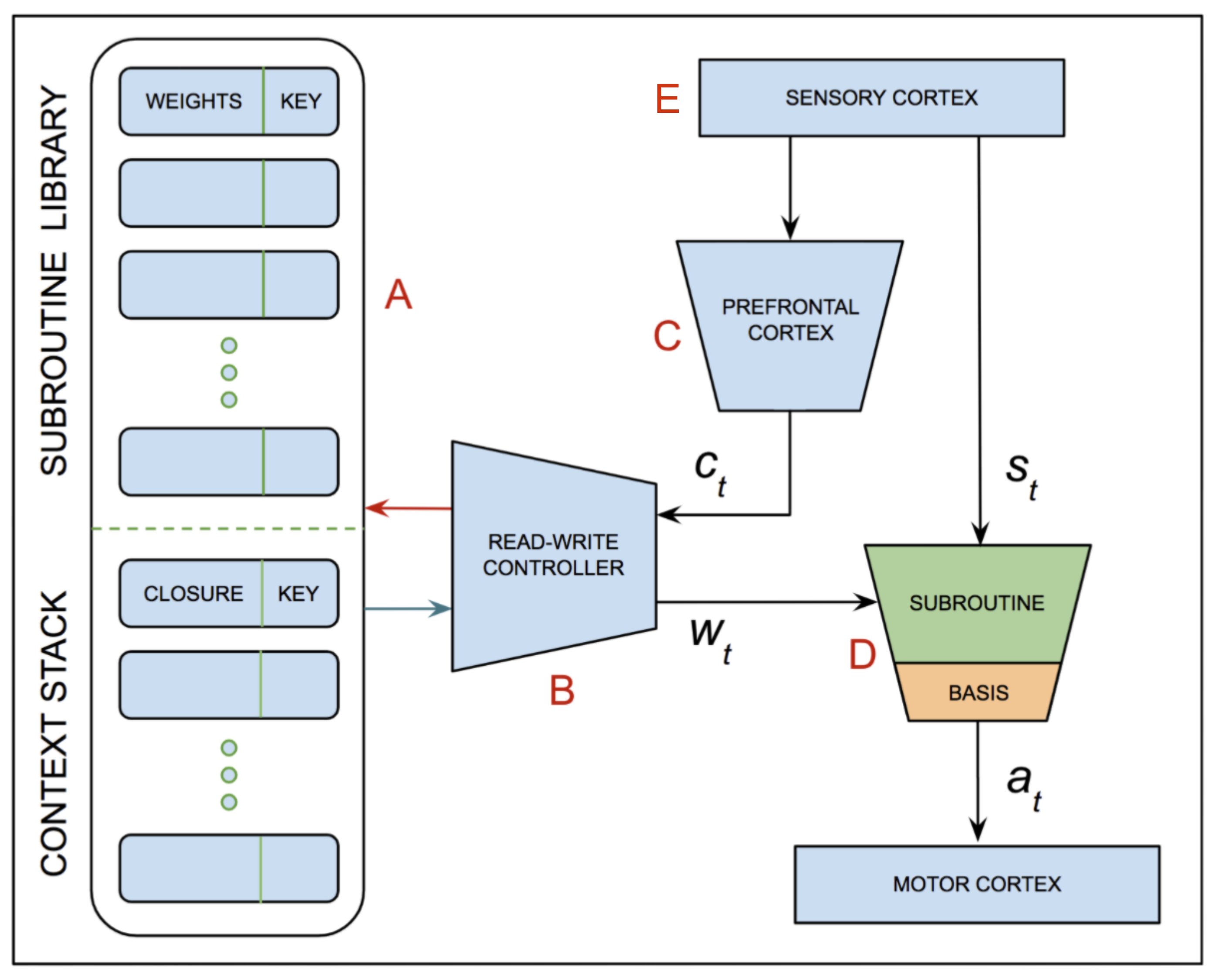

| |

| Figure 3: The above graphic was adapted from a model that we – Chaofei Fan, Gene Lewis, Meg Sano and I – explored last year and was featured in the October 25 posting to the 2020 class discussion list. The model has two features that are relevant to our discussion here. Network A takes as input a representation of the current state, and generates a representation of the context for action selection. Network K is an embedding network that takes as input a sequence of states corresponding to recent activity and generates as output a unique key associated with a subspace of the full state space that includes the current state. Network K plays the role of the coding space in Merel et al [83]. The networks C, D, E and F are controllers for two differentiable neural computer (DNC) peripherals that provide storage and access for short-term and long-term memory respectively. The model operates in two modes. In each cycle during the online mode, the C controller loads the selected expert network into location M where it is fed the output of A and produces the input to B. In this mode, the short-term memory is used to record activity traces that are subsequently used in the offline mode to update the networks stored in long-term memory using experience replay. The inset box labeled (a) illustrates the concept of trace consolidation in terms of compiling subroutines as computation trees. See here for an argument suggesting that the functionality provided by the components shown here could be integrated into existing circuits in the model shown in Figure 2. | |

|

|

We imagine a two tiered system that includes a less capacious, shorter-term memory system that serves as staging area and buffer for consolidation, rehearsal and various reinforcement learning strategies such as prioritized experience rapid replay[91, 79, 113]. In addition to the shorter-term staging area we have the larger, longer-term consolidation area which undergoes continuous changes in accounting for new information – see Figure 3 for an earlier related model, and the earlier explanation of trace consolidation in terms of compiling subroutines as computation trees.

November 19, 2020

%%% Thu Nov 19 03:54:13 PST 2020

The previous entry focused on using the transformer attentional layers to generate a representation of the evolving state of a running program so as to uniquely specify the next instruction in the program. We argued that it was not enough to create a representation that is unique only within the context of a particular program since primitives at one level in the hierarchy are compiled into subroutines at the next level and therefore likely to be employed in programs to solve tasks not encountered in training the subordinate level. We also demonstrated how the register machine compiler can used to visualize the evolving state of a running program.

In this entry, we provide an example of two programs that exhibit identical working memory content, but require different sequences of instructions in order to correctly carry out their specified functions. It seems plausible, but we have no proof as yet, that this problem can be resolved by assigning a unique signature to each program, adding a register that at all times holds the signature of the currently running program. As for recognizing and subsequently deploying a subroutine compiled at one level to learn a new program to solve a novel task in the superordinate, we discuss the role of the coding-space interface between superordinate and subordinate levels in the hierarchy.

| |

| Figure 4: An example of how state vectors constructed from identical register contents can crop up in performing either an AWK substitution task or a two-pattern SIR task, and produce different results. The panel on the left represents the contents of working memory giving rise to the ambiguous state vector. The next step for both programs is to compare the input stream against the pattern in the third column labeled PATONE in the SIR task and PATTERN in the AWK task. As shown in the middle panel, if the match succeeds, the SIR program writes to the output buffer the sequence of characters in the third column corresponding to the first pattern specified in the SIR task. As shown in the right panel, if the match succeeds, the AWK program writes to the output buffer the sequence of characters in the fourth column corresponding to the replacement pattern specified in the AWK task. If the match fails, the SIR program attempts to match second pattern, and if the AWK program fails, then it proceeds to the next character in the input buffer. | |

|

|

Recall that we originally assumed for simplicity that branch points would determine the boundaries of subroutines, but obviously expressions that determine branching conditions appear frequently within programs and those conditions don't always result in calling some other program. As a consequence, it seems likely that we will have to compile subroutines that include internal branches and then deal with consequences, if any, in situations when the calling program finishes and control returns to the caller. The remainder of this entry is devoted to exploring this issue in more detail.

The simple sequence of loading the contents of two (source) registers into the input registers (operands) of the comparator subroutine and transferring the resulting output, interpreted as a Boolean value, to a specified (sink) register includes no branches and is likely to be one of most frequently executed Level II subroutines often serving as the basis for conditional branches. Copying a sequence of characters from one register block to another. Comparing sequences in two register blocks, and swapping the contents of two registers are other prime candidates for packaging as subroutines.

Packaging might entail, in the case of comparing two sequences, providing the first register in each of the two blocks containing the sequences to be compared and a register in which to store the Boolean output reporting whether or not the two sequences are equal. Universally requiring standard representations and standard locations for temporarily storing Boolean values, e.g., 0 and 1, empty registers, e.g., ?, the start and end of sequences and subexpressions, e.g., [ and ], etc., will considerably simplify the job of compiling and packaging subroutines.

There are several problems at the interface between Level I and Level II that we have lumped into what Merel et al called the coding space. Apparently, Merel et al [83] train their Level I and coding-space related networks independently of Level II which in their case is characterized as one instance of a class of interchangeable modules that employ the same Level I motor primitives. Merel et al admit the shortcomings of the arrangement described in their paper, and briefly discuss the broader issues citing the work of Karl Friston [44] and Todorov, Ghahramani and Jordon [129, 130]. See this footnote8 for the relevant excerpt from [83].

What follows is a list of the major problems relating to our model in an attempt to address the issues raised in the papers referenced in the previous paragraph. Note that in the following we refer to Level I and Level II but same issues and problems arise in the other levels of the hierarchy:

Problem 1 – Level II needs a subroutine compiled from Level I primitives in order to construct (learn) a program to solve a new task in a class that was not included in training Level I. The central issues related to this problem concern (a) how Level II knows about this subroutine, (b) how it infers that the subroutine applies in the current circumstances, and (c) how the subroutine is initially packaged and subsequently adapted to apply to problems it has never before encountered.

Problem 2 – Level II has arrived at state s and ultimately wants to achieve state s′; it may not be possible to reach s′ by executing a single Level I subroutine and so making progress toward achieving s' will require search9. The central issues center around which of the two levels are responsible for initiating, subsequently guiding and ultimately selecting and returning a promising Level I subroutine or admitting failure. More generally, it raises the issue of whether Level I and its related coding-space network play any active role following the initial Level I training and compilation of subroutines.

Problem 3 – Suppose that Level II can't find a subroutine (generated by Level I) to bridge the gap between current state s and the goal state s′. Either there isn't a subroutine that extends the search from s or there exist such subroutines but none of them appear to make progress toward s′. How do you find a subroutine that applies in the current state and given such a subroutine how do you determine if it makes progress to the goal state? [The use of the term "goal" in this context may or may not be useful. Keep in mind that the objective is to learn how to emulate programs that solve tasks, so perhaps it makes more sense to say "instances of problems that can be solved by particular programs."]

There will likely be circumstances when some component of the overall system will have to search for and possibly adapt subroutines. If Level II can't find a suitable subroutine starting from the current state using some form of table lookup, Level II or Level I will have to search for a subroutine that is reachable from the current state and, whether Level II or Level I performs the search and selects the subroutine, Level I will have to interpolate between the current state and the initial state of the selected subroutine. Interpolation in our case is more complicated than in Merel et al [83] and will almost certainly entail some amount of search – see Pierrot et al [104] for an extension of the neural programmer-interpreter model [110] emphasizing the role of search in neural code synthesis.

November 17, 2020

%%% Tue Nov 17 03:19:03:14 PST 2020

I've been playing with data generated by the compiler and thinking about some of the assumptions we've made and their consequences with respect to our early thoughts about what needs to happen at the next level in the hierarchy. I'm being purposely long winded to be clear and hopefully reveal to myself the hidden biases and assumptions in my thinking.

In the following, "instructions" refer to what we've been calling "register-machine microcode instructions". Each instruction transfers the contents of one particular source – or read – register to one particular sink – or write – register, overwriting the contents of the latter in the process. The Level I primitives are register transfers, i.e., microcode instructions.

To avoid constantly having to repeat the phrase "executive homunculus", I'll call it the Teachable Executive [Coder | Control] Homunculus and refer to it affectionately as TECH. We could slip in a word beginning with "A" and call it TEACH. but I prefer the simpler TECH. We start with the question, "What tasks is a trained Level I TECH capable of performing?

It seems we should be able to configure the TECH working memory with a "novel" instance of a program / task that TECH has been trained on and have it solve the task. By novel I mean we train TECH on a set of programs drawn from several classes, for example, SIRtasks with one or two same-length patterns, and test it on SIRtasks with two patterns of differing length.

One problem with this is there are AWK tasks whose initial working memory representation is identical to a SIR task. We could show TECH what we intend it to do by providing input / output examples, but even this could be ambiguous if we are interested in distinguishing between different algorithms solving the same problem, e.g., insertion sort versus bubble sort.

I suggest we finesse this problem for now by adding a register containing a unique program code, e.g., AWK3745, and perhaps later consider some means for directly communicating with TECH using the input and output registers. This is too ambitious to consider at this stage, but it suggests a project for students interested in human-computer interaction.

Level I learns to emulate programs and, in order to do so, it has to learn how to map state vectors representing the contents of working memory to specific register transfers that change the contents of working memory thereby executing the next microcode instruction and producing the next state vector as a side effect.

The subroutines that will serve as Level II primitives are compiled from sequences of Level I primitives / microcode instructions. Assuming that we could map the state of a running program to a unique state vector, we could substitute those unique state vectors for their corresponding register transfers.

Given such a map, we could use state vectors as keys to retrieve the source and sink registers necessary to execute the next instruction. This assumes that the contents of working memory are sufficiently distinct to unambiguously identify any program state. That assumption may be warranted with respect to programs used to train Level I, but it is almost certainly not warranted in the case of the larger class of programs used to train Level II.

In fact, the assumption probably doesn't hold with respect to Level I. To make a simple analogy, if you want to predict the path of a flying projectile, it's not enough to know its current position, you also need to know its mass and the forces acting upon it. Similarly, to predict the next state vector you need a model of the underlying dynamics, i.e., the dynamics of the program making changes to the contents of working memory over time10.

This may seem every bit as hard as learning the program, since understanding the dynamics would seem to entail being able to emulate the program. However the previous observation confuses the difference between predicting and changing the future; accurately predicting the future may help in planning to achieve your goals, but it is neither necessary nor sufficient. Transformers suggest a possible solution to our problem.

In language processing applications such as machine translation, transformers employ encoder layers to create embeddings that represent the current context for selecting the next word, and attentional layers that measure the relevance of the words – as well as short phrases (n-grams) – appearing within that context. Positional encoding and relative positioning are important capabilities contributing to the success of transformers that will likely to prove important in our application.

In particular, positional encoding provides a framework for enabling TECH to exploit the topological structure of working memory and the functional relationships between registers and register blocks. While we typically display the layout of working memory as a 2-D grid of registers, many procedures that operate on working memory involve iterating over the linear structure of the register blocks corresponding to patterns and I/O buffers, suggesting a simple tree structure corresponding to a star topology. String comparison and copying operations involve iterating over two registers simultaneously exhibiting functional relationships between buffers that differ from one program to the next. Here [118, 75, 67, 31] are some recent papers exploiting different topologies using different positional encoding strategies.

Miscellaneous Loose Ends: To summarize, this entry challenges our earlier assumption that the compressed current contents of working memory are sufficient to serve as an instruction counter for identifying the next instruction in a previously ingested program. As an alternative, we propose a representation of program state as selective embedding of the last n working memory snapshots — selective with respect to emphasizing features that serve to predict / identify the next instruction in the emulation of both familiar and novel programs11.

Notice the division of labor between the method suggested above to represent the state of a running program for indexing the next step in that program by accounting for the underlying (register-transfer) dynamics, and the method for accessing registers in performing register transfers using VQ-VAE – see the previous entry in this log. Recall that we originally assumed for simplicity that branch points would determine the boundaries of subroutines, but obviously expressions that determine branching conditions appear frequently within programs and those conditions don't always result in calling some other program. As a consequence, it seems likely that we will have to compile subroutines that include internal branches and then deal with consequences, if any, in situations when the calling program returns and control returns to the caller.

November 7, 2020

%%% Sat Nov 7 04:08:02 PST 2020

The material discussed in this entry has been around for some time; however, the brief summary provided here is to make sure that students taking CS379C are aware of the basic technologies and their application. In addition to excerpts from the van den Oord et al VQ-VAE paper [132], I've included mention of research from Bengio et al [15] on the problem of propagating gradients through non-linearities, which is important in learning discrete latent representations as in the case of register addressing.

In terms of training the model, the register-machine compiler now produces a program trace consisting of a sequence of state-action pairs each one consisting of a state vector listing the contents of all working-memory registers followed by the register-machine microcode instruction (register transfer) that immediately preceded the instruction – see this footnote12 for sample output. The full trace is available here.

Van den Oord et al [132] introduce VQ-VAE as a method for employing discrete latent variables in a neural-network setting made possible with a new way of training inspired by vector quantization13 (VQ). The posterior and prior distributions are categorical, and the samples drawn from these distributions index an embedding table. These embeddings are then used as input into the decoder network." VQ is the basis for k-means clustering and related to autoregressive models in time-series analysis14.

The Bengio et al paper [15] explores four possible methods for estimating gradients of latent variables, proving some interesting properties about these methods and comparing them using a simple model of conditional computation in which "sparse stochastic units form a distributed representation of gating units that can turn off in combinatorially many ways large chunks of the computation performed in the rest of the neural network. In this case, it is important that the gating units produce an actual 0 most of the time. The resulting sparsity can potentially be exploited to greatly reduce the computational cost of large deep networks for which conditional computation would be useful."

Register transfers constitute a form of conditional computation. Van den Oord et al use an estimator proposed by Geoff Hinton that Bengio et al describe as "simply back-propagating through the hard threshold function (1 if the argument is positive, 0 otherwise) as if it had been the identity function. We call it the straight-through estimator. A possible variant investigated here multiplies the gradient on hi by the derivative of the sigmoid. Better results were actually obtained without multiplying by the derivative of the sigmoid."

Van den Oord et al apply this "straight-through gradient estimation for mapping from ze(x) to zq(x). The embeddings ei receive no gradients from the reconstruction loss, log p(z|zq(x)), therefore, in order to learn the embedding space, we use one of the simplest dictionary learning algorithms, vector quantization (VQ). The VQ objective uses the L2 error to move the embedding vectors ei towards the encoder outputs ze(x) as shown in the second term of Equation 3 in [132]." If you want to know more, this online tutorial does a credible job of explaining the original VQ-VAE paper, and, if you are not already familiar with k-means clustering, you can review the basic algorithms here.

November 3, 2020

%%% Tue Nov 3 05:02:58 PST 2020

The encoder / Bayesian predictor / VAE model plus ELBO training models featured in Merel et al [84, 83] and van den Oord et al [132] that we've been talking about address one of the central goals articulated in the MERLIN paper [145]: "building integrated AI agents that act in partially observed environments by learning to process high-dimensional sensory streams, compress and store them, and recall events with less dependence on task reward." Wayne et al [145] draw inspiration – see Page 3 in [145] – from three sources that have their roots in psychology and neuroscience: predictive sensory coding from Rao and Ballard [109], the hippocampal representation theory of Gluck and Myers [121], and the related, temporal context model from Howard et al [58, 57] and successor representation introduced by Peter Dayan [21]15.

I draw your attention to the last two items (italicized) in the previous paragraph as they address several of issues we discussed on Sunday, but before I get to that I want to clear up – or at least shed some light on – the different roles of the variational encoder and the transformer attentional model in the architecture that Yash described on Sunday. First, here is an excerpt (annotated with my italics) from the MERLIN paper that underscores the primary insights that we are channeling in the design of our model:

MERLIN optimizes its representations and learns to store information in memory based on a different principle, that of unsupervised prediction. MERLIN has two basic components: a memory-based predictor (MBP) and a policy [characterized as memory-dependent policies later in the paper]. The MBP is responsible for compressing observations into low-dimensional state representations z, which we call state variables, and storing them in memory. The state variables in memory in turn are used by the MBP to make predictions guided by past observations. This is the key thesis driving our development: an agent’s perceptual system should produce compressed representations of the environment; predictive modeling is a good way to build those representations; and the agent’s memory should then store them directly. The policy can primarily be the downstream recipient of those state variables and memory contents. [...] Recent discussions have proposed such predictive modeling is intertwined with hippocampal memory, allowing prediction of events using previously stored observations, for example, of previously visited landmarks during navigation or the reward value of previously consumed food.

Wayne et al [145] also highlight another feature that MERLIN put to good use and that Yash incorporated in the model architecture he showed us Sunday, namely that the MERLIN model "restrict(s) attention to memory-dependent policies, where the distribution depends on the entire sequence of past observations". And so the short answer to the questions we were wrestling with on Sunday, is that the VQ-VAE model remembers relevant information not explicitly encoded in the current state – this is the role of the memory-based predictor in MERLIN, and the transformer attentional model and softmax layers point to – or as I like to say, they highlight – specific information relevant to action selection in support of memory-dependent policies. You might say that the VQ-VAE sets the general context (compressed state vector) for acting while the transformer attentional machinery focuses on specific actionable cues, e.g., the output of the comparator or the likely source and sink for the next register transfer.

For what it's worth, I skimmed the papers [58, 57, 21] referenced concerning the temporal context model of Howard and the successor representation of Dayan and didn't find them particularly relevant to the problem of error correction as we are thinking about it in the context of the register machine. In searching the literature, I stumbled across a number of papers on predictive coding characterized as being employed to "generate predictions of sensory input that are compared to actual sensory input to identify prediction errors that are then used to update and revise the mental model." However, there are a lot of papers on the topic and almost as many alternative interpretations of what error prediction entails.

It might help to come up with a more precise characterization of what we mean by error correction and what we want to accomplish by using it. Most continuous-state controllers incorporate error correction as a means of correcting small errors that crop up naturally as a consequence of imprecision in visual tracking, servo motors, etc, errors that generally can't be anticipated but can be relatively easily corrected. It's this sort of error that Merel et al [83] address in their paper – that these errors can be so easily accommodated attests to forgiving nature of their target application. When our agent chooses the wrong source or sink register in selecting the next register transfer, it needn't be a complete disaster. Obviously, the cost of such an error will depend on the objective function, but it seems reasonable in evaluating performance on a SIRtask to assign partial credit for failing to match one instance of the target pattern in the input sequence, especially if the agent correctly matches most of the other instances.

Note that it is one thing to be able to predict the next state and quite another to figure what went wrong in attempting to achieve that state and how to correct it. The VAE is responsible for the former and the policy and attentional model are responsible for the latter. However, if we take the stance that the policy is trained to take advantage of the advice provided by the attentional model, then it would seem that the blame primarily rests on shoulders of the attention model. This apportionment of responsibility, if strictly adhered to, could serve to simplify training the model. If, as in Merel et al, the weights of the network implementing motor primitives are fixed prior to training the superordinate levels in the executive stack, then it would seem necessary to delegate the responsibility for recognizing and correcting the inevitable errors that likely to crop up when higher levels in the executive hierarchy deploy subroutines composed of motion primitives.

Miscellaneous Loose Ends: I've started enhancing the register-machine compiler to facilitate developing training protocols. The compiler already automates some parts of creating training examples, and there are some aspects of how the compiler runs, that you might want to know if you are developing training models. As a start here is an annotated sequence of compiler instructions along with descriptions of how they are interpreted by the virtual register machine:

INITIALIZE STATE: Load the BUFFIN register block and add any overflow to STAGED-INPUTS, the staging queue used by the OUTPUT register; NOTE: In most cases, initialization is accomplished by running LOAD-REGISTER-MACHINE for each training and evaluation step.

READ INPUT: At this point the NEW-CONTENT-SIGNAL should be #f, return the CURRENT-CONTENT.

WRITE INPUT CONTENT: Set the NEW-CONTENT-SIGNAL to #t, set CURRENT-CONTENT to the supplied CONTENT argument.

WRITE INPUT CONTENT: The NEW-CONTENT-SIGNAL should be #t, set CURRENT-CONTENT to the supplied CONTENT overwriting the old content; NOTE: This shouldn't happen, we assume that the last written content will be READ before the next WRITE.

READ OUTPUT: return the content of the OUTPUT register, no other action is taken.

WRITE OUTPUT CONTENT: Update the BUFFOUT register block, update the OUTPUT register, if STAGED-INPUTS is empty send BUFFIN the empty register codon, if not send the first item in the STAGED-INPUTS; NOTE: This advances the BUFFIN register block by adding to its LIFO queue. Note that what was the oldest content in the input buffer is no longer available to the AGENT. If this content is likely to be needed, the AGENT must store a copy in working memory in order to be prepared for that contingency.

READ INPUT: Set NEW-CONTENT-SIGNAL to #f, updates BUFFIN register block, returns the CURRENT-CONTENT.

READ INPUT: NEW-CONTENT-SIGNAL should be #f, return the CURRENT-CONTENT.

October 26, 2020

%%% Mon Oct 26 14:49:19 PDT 2020

This entry recaps some of the discussion in yesterday's SAIRG meeting and provides a summary for those who couldn't join us. Prior to the meeting Yash sent around a revised perspective on his earlier executive homunculus missive and much of the meeting yesterday revolved around issues raised in that document. This footnote includes Yash's revised perspective with my comments interspersed, as well as the promised brief summary of the meeting16. The main text of this entry focuses on one set of issues that are particularly relevant to training our model. I've included Yash's overview of issues and challenges followed by my attempt to address those issues using register-machine compiler discussed in the previous entry.

YASH: In order to make sure that the model is able to generalize, it is necessary that the model be trained using a curriculum or a game. Unlike other models such as the DNC or NTM, this curriculum exists at the global workspace state level. We use a compiler and code generator that creates an optimal program to solve the current stage of the curriculum. This optimal program is then compared to the actual execution of the executive homunculus. For the earliest stages of the curriculum, where the model is only required to perform simple actions like moving or copying registers, the compiler can be used as a direct supervision signal to train the executive model. As the stages progress, the optimal programs can be used to verify the outputs from the executive model and provide a training signal that is more diffuse. In this way, the executive homunculus can be gradually trained to become more general. Initially, it is trained to perform simple actions whose rewards are dense. As it gains fluency with simple actions, the tasks become more complicated, and the reward signals become more sparse. The goal of the later stages is to train the executive model to compose the low-level operations it learned in the earlier stages to execute more involved tasks. At all stages of the curriculum, the compiler and code generator can be used to provide a training signal for the model. Note we directly train on the global workspace state here because the sparsity of the I/O reward signal would necessitate far too many trials before the model learns to perform any meaningful action. Even once it has learned to execute a task, it is likely that the final execution would be based on specialized operations that are not modular enough to generalize the execution. Instead of learning a general set of skills that are applicable to a variety of cerebral tasks, the model would have learned only to overfit to the current task. By using the curriculum and compiler as supervisors, we can ensure that the model builds up the tools needed to execute the tasks in a hierarchy.

TLD: To support curriculum training, compiler macros can be instrumented so as to generate training data for any task that can be characterized as a specific code fragment. Previous posts suggested that branch points serve to identify such fragments and the subroutines they could be used to train. In programs for solving a given task, branch points are signaled by conditional statements introduced by IF, COND and CASE, but also iterative constructs including FOR, WHILE, UNTIL, MAP and tail recursive functions. We've simplified this by allowing only IF statements and tail recursion, which are sufficient to implement all the others.

This strategy works at multiple levels in the hierarchy of subtasks. For example, in the two-pattern SIRtask there are three levels corresponding to three nested loops. The outermost loop (i) iterates over each item in the input to determine if it is the first character in a sequence matching one of the two patterns. Each character in the input sequence requires a (ii) loop over each pattern in the list of patterns to determine if the immediately-following characters match the pattern. Each pattern requires a loop (iii) over each character in the pattern to determine if the two sequences – one input and one pattern sequence – match where each sequence corresponds to a block of registers.

Finally, in each iteration of the innermost loop, the contents (characters) of two registers are compared and the resultant branching involves four cases determined by one or more loop exit conditions: (a) the pattern character is a stop (end-of-sequence) codon – output 1 and proceed to the next character in the input (return to loop i), (b) the two characters match – continue with the next pattern and input sequence characters (return to loop iii), (c) the two characters don't match and this is the first of the two patterns – continue with next pattern (return to loop ii), and (d) the two characters don't match and this is the second of the two patterns – output 0 and proceed to the next character in the input (return to loop i).

Miscellaneous Loose Ends: These three nested loops don't necessarily have to align with three different levels of abstraction. Recursion is the key innovation and it seems inherent in the way we learn just about anything. What about the challenges involved in maintaining a call stack of any depth? Registers serve as global variables [72] but they don't seem to serve particularly well in dealing with nested namespaces as is required in procedural closures. Items deep in the call stack would have to be allocated protected registers within working memory or depend on the variational autoencoder to learn to remember them along the lines of Wayne et al [145]. This footnote contains a redacted version of an email message that I sent to a friend in response to his question about McFadden's EMI field theory of consciousness17.

October 25, 2020

%%% Fri Sun 25 04:56:45 PDT 2020

There is now a working version of the register-machine compiler along with a set of macros that make it easy to write register-machine programs in Scheme (Lisp) that generate instructions to solve one- and two-pattern SIRtasks. In this case of the register machine, instructions correspond to register transfers. Here's what a Scheme program for solving two-pattern SIRtasks looks like:

(define (simple-sirt-inputRMC) (send BUFFIN registers))

(define (simple-sirtRMC pattern-list)

(or (empty? pattern-list)

(simple-sirt-auxRMC (simple-sirt-inputRMC)

(car pattern-list) (cdr pattern-list))))

(define (simple-sirt-auxRMC input pattern pattern-list)

(if (emptyRMC pattern)

(printRMC 'OUTPUT 1)

(if (equalRMC (car input) (car pattern))

(simple-sirt-auxRMC

(cdr input) (cdr pattern) pattern-list)

(if (empty? pattern-list)

(printRMC 'OUTPUT 0)

(simple-sirtRMC pattern-list)))))

In the above listing, emptyRMC, equalRMC and printRMC are implemented as Lisp macros and the other function names ending with RMC are simple Lisp procedures. On the surface, the three macros behave like the Scheme built-in functions empty?, equal? and printf, while at the same time producing side effects that generate the required register transfers:

(define-macro (equalRMC ARG1 ARG2)

#'(let ((input-one (send ARG1 read))

(input-two (send ARG2 read)) (flag (void)))

(send (operator-input-one COMPARE) write input-one)

(send (operator-input-two COMPARE) write input-two)

(set! flag (equal? input-one input-two))

(if flag

(send (operator-output COMPARE) write 1)

(send (operator-output COMPARE) write 0)) flag))

(define-macro (emptyRMC REGISTERS)

#'(or (empty? REGISTERS)

(send (car REGISTERS) empty?)))

(define-macro (printRMC FORMAT VALUE)

#'(begin (datalogRMC 'OUTPUT VALUE)

(if (not (equal? FORMAT 'OUTPUT))

(error "PRINTRMC macro expects FORMAT = OUTPUT")

(if (not (or (equal? VALUE 1) (equal? VALUE 0)))