The Methodology of Knockoffs

The variable selection problem

The very general problem addressed by the knockoff methodology is the following. Suppose that we can observe a response \(Y\) and \(p\) potential explanatory variables \(X=(X_1, \ldots, X_p)\). Given \(n\) samples \(\left(X_{i,1}, \ldots, X_{i,p}, Y_{i}\right)_{i=1}^{n}\), we would like to know which predictors are important for the response.

We assume that, conditionally on the predictors, the responses are independent and the conditional distribution of \(Y_{i}\) only depends on its corresponding vector of predictors \((X_{i,1},\ldots,X_{i,p})\). Formally, we write this as:

\[ \begin{align*} & Y_i | (X_{i,1},\ldots,X_{i,p}) \overset{\text{ind.}}{\sim} F_{Y|X} , & i=1,\ldots,n, \end{align*} \]

for some conditional distribution \(F_{Y|X}\).

The variable selection problem is motivated by the belief that, in many practical applications, \(F_{Y|X}\) actually only depends on a (small) subset \(\mathcal{S} \subset \{1, \ldots, p\}\) of the predictors, such that conditionally on \(\{X_j\}_{j \in \mathcal{S}}\), \(Y\) is independent of all other variables.

This definition is intuitive: informally, the other variables are not important because they provide no additional information about \(Y\). A minimal set \(\mathcal{S}\) with this property is usually known as a Markov blanket. Under very mild conditions on \(F_{Y|X}\), the Markov blanket can be shown to be unique, so the variable selection problem is cleanly defined.

In order to avoid any ambiguity in those pathological cases in which the Markov blanket is not unique, we will say that the \(j\)-th predictor is null if and only if \(Y\) is independent of \(X_j\), conditionally on all other predictors \(X_{-j} = \{X_1, \ldots, X_p\} \setminus \{X_j\}\). We denote the subset of null variables by \(\mathcal{H}_0 \subset \{1, \ldots, p\}\) and call the \(j\)-th variable relevant (or non-null) if \(j \notin \mathcal{H}_0\).
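The distinction between a null variable and an uncorrelated one can be illustrated with a small simulation. The sketch below is only an illustration under assumptions that are not part of the text above: the predictors are equicorrelated Gaussians, \(F_{Y|X}\) is a linear model with Gaussian noise, and the values of `n`, `p`, `rho` and `beta` are arbitrary. In this special case, a zero regression coefficient is equivalent to conditional independence, so the null variables are exactly those with a zero coefficient. Indices are 0-based in the code.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p = 5000, 5          # illustrative sample size and number of predictors

# Assumption: equicorrelated Gaussian predictors with pairwise correlation rho.
rho = 0.5
Sigma = np.full((p, p), rho) + (1 - rho) * np.eye(p)
X = rng.multivariate_normal(np.zeros(p), Sigma, size=n)

# Assumption: Y follows a linear model that depends only on predictors 0 and 1.
relevant = [0, 1]
beta = np.zeros(p)
beta[relevant] = 1.0
Y = X @ beta + rng.standard_normal(n)

# The null set H_0 is the complement of the relevant set in this model.
null_set = sorted(set(range(p)) - set(relevant))

# Marginal view: null predictors are still correlated with Y,
# because they are correlated with the relevant predictors.
marginal_corr = np.array([np.corrcoef(X[:, j], Y)[0, 1] for j in range(p)])

# Conditional view: least squares adjusts for all other predictors,
# so the estimated coefficients of the null predictors are close to zero.
ols_coef, *_ = np.linalg.lstsq(X, Y, rcond=None)

print("null set:", null_set)
print("marginal correlations:", np.round(marginal_corr, 2))
print("least squares coefficients:", np.round(ols_coef, 2))
```

The null predictors show a clear marginal correlation with `Y`, yet carry no additional information once the other predictors are accounted for, which is why the definition conditions on \(X_{-j}\).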

Our goal is to discover as many relevant variables as possible while keeping the false discovery rate (FDR) under control. For a selection rule that selects a subset \(\hat{S}\) of the predictors, the FDR is defined as

\[ \begin{align*} \text{FDR} = \mathbb{E} \left[ \frac{|\hat{S} \cap \mathcal{H}_0|}{\max(1,|\hat{S}|)} \right]. \end{align*} \]
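The quantity inside the expectation is the false discovery proportion of a single selection. As a minimal sketch, assuming the null set is known (as it would be only in a simulation), it can be computed directly from the formula above. The function names `false_discovery_proportion` and `estimate_fdr` are hypothetical helpers introduced here for illustration, not part of any knockoffs package.

```python
def false_discovery_proportion(selected, nulls):
    """Return |S_hat ∩ H_0| / max(1, |S_hat|) for one selection."""
    selected = set(selected)
    nulls = set(nulls)
    return len(selected & nulls) / max(1, len(selected))


def estimate_fdr(selections, nulls):
    """Average the false discovery proportion over repeated experiments.

    This is a Monte Carlo approximation of the expectation in the FDR
    definition; `selections` holds one selected set per simulated data set.
    """
    fdps = [false_discovery_proportion(s, nulls) for s in selections]
    return sum(fdps) / len(fdps)


# Illustrative inputs only: variables 2, 3 and 4 are null.
nulls = {2, 3, 4}
print(false_discovery_proportion({0, 1, 4}, nulls))  # one false discovery out of three
print(false_discovery_proportion(set(), nulls))      # empty selection gives 0 by convention
print(estimate_fdr([{0, 1}, {0, 4}, set()], nulls))
```

The `max(1, ·)` in the denominator ensures that an empty selection counts as zero false discoveries rather than producing a division by zero.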

An important application

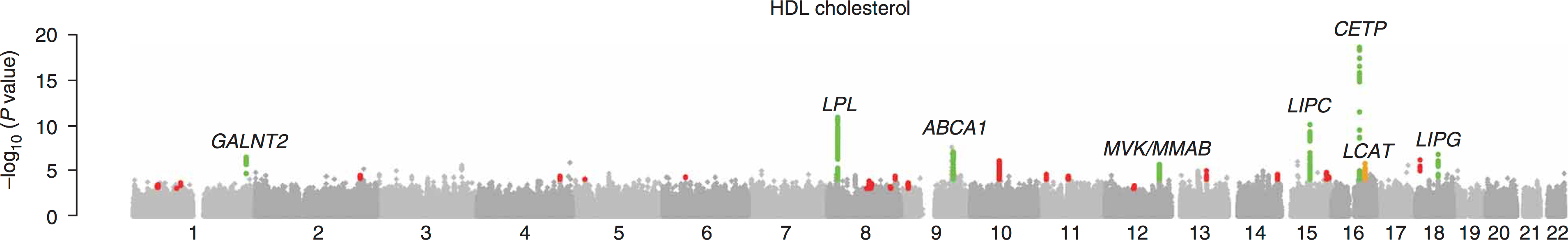

Controlled variable selection is particularly relevant in the context of statistical genetics. For instance, a genome-wide association study aims to find genetic variants that are associated with, or influence, a trait, choosing from a pool of hundreds of thousands to millions of single-nucleotide polymorphisms. The trait could be, for example, a cholesterol level or the presence of a major disease.
