Bio

I am a Ph.D. student at the Stanford Institute for Computational and Mathematical Engineering, working with Lexing Ying and Jose Blanchet. I also work closely with Jianfeng Lu and Tatsunori Hashimoto. My research is supported by a Stanford Interdisciplinary Graduate Fellowship.

I received a Bachelor of Science (Honor Track) from the School of Mathematical Sciences at Peking University in July 2019, majoring in Information and Computing Science and advised by Prof. Bin Dong. I also enjoyed working with Prof. Liwei Wang on theoretical machine learning. I was a visiting student at MIT CSAIL under the supervision of Prof. Justin Solomon during summer 2018. I was a research intern at MSRA (Microsoft Research Asia) working on Bayesian deep learning (mentor: David Wipf, Visual Computing Group). During the internship, I also worked closely with the Machine Learning Group at MSRA. I was a visiting scholar at the University of Tokyo and RIKEN AIP, working with Prof. Taiji Suzuki during summer 2019. I'll work at Microsoft Research with Di He and Greg Yang this summer.

Research interests: non-parametric estimation, optimal control, statistical learning theory, inverse problems.

My Research

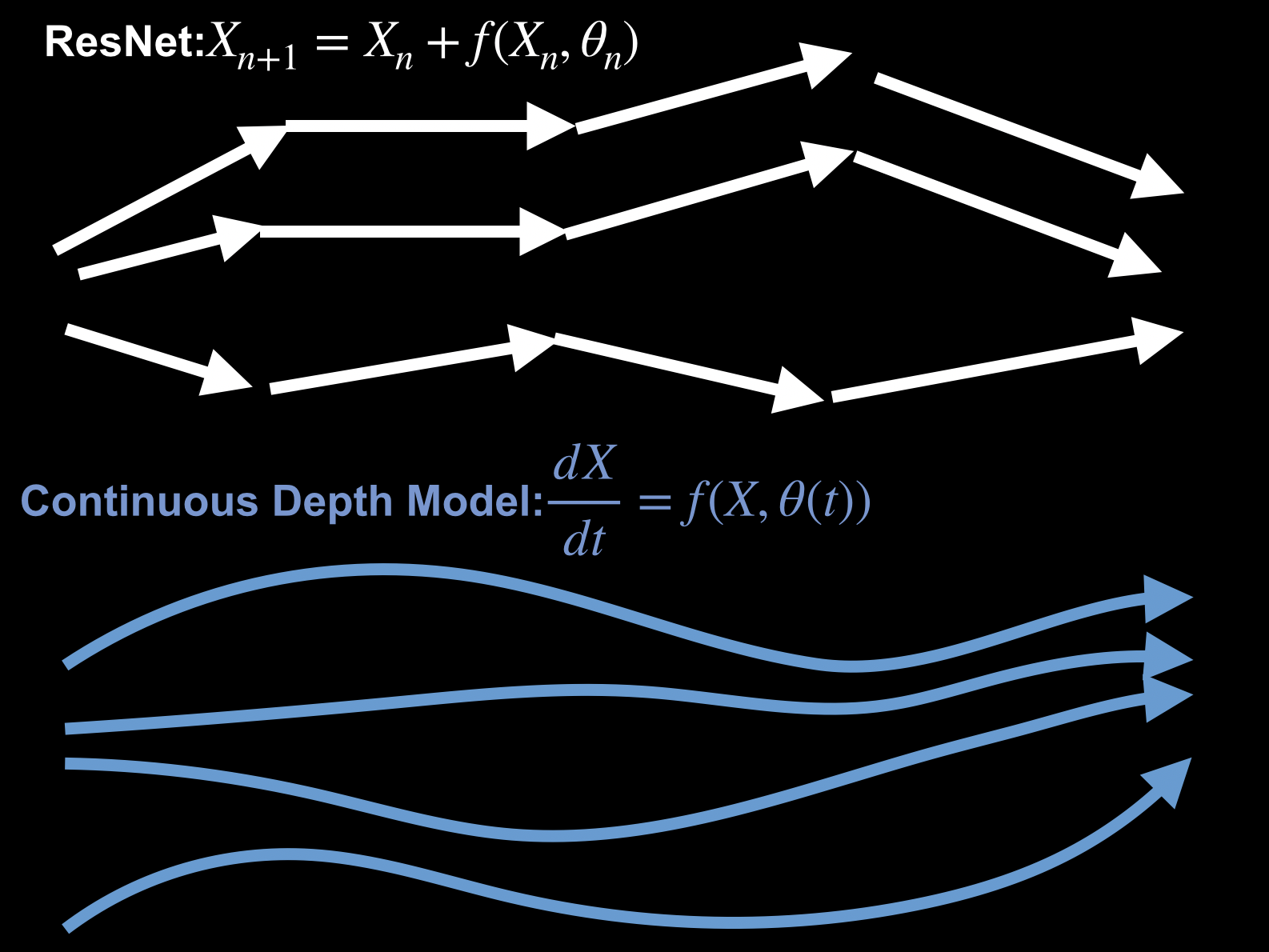

Continuous Depth Neural Network

My research bridges optimal control theory and deep neural networks, a viewpoint that fully exploits the structure of neural networks. Using this understanding, we can enforce physical constraints on neural networks, discover new physics, and build optimization algorithms and theory for deep neural networks.

Robust Machine Learning

My research aims to build algorithms and theory for designing machine learning systems that are robust to adversarial examples, data distribution shift, and confounding variables. Tools from semi-parametric estimation, control theory, and statistical machine learning theory are at the core of this research.

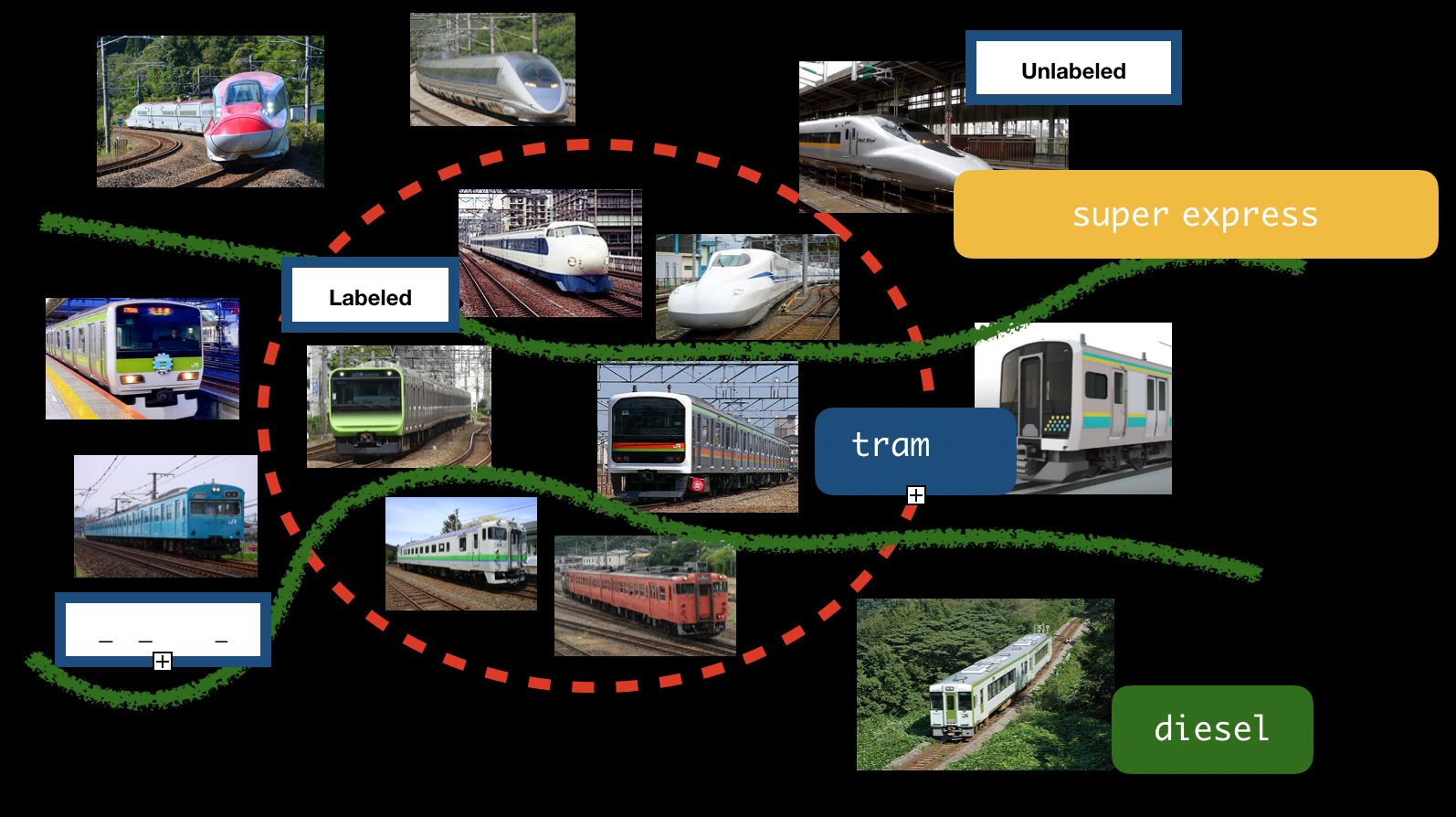

Weakly Supervised Machine Learning

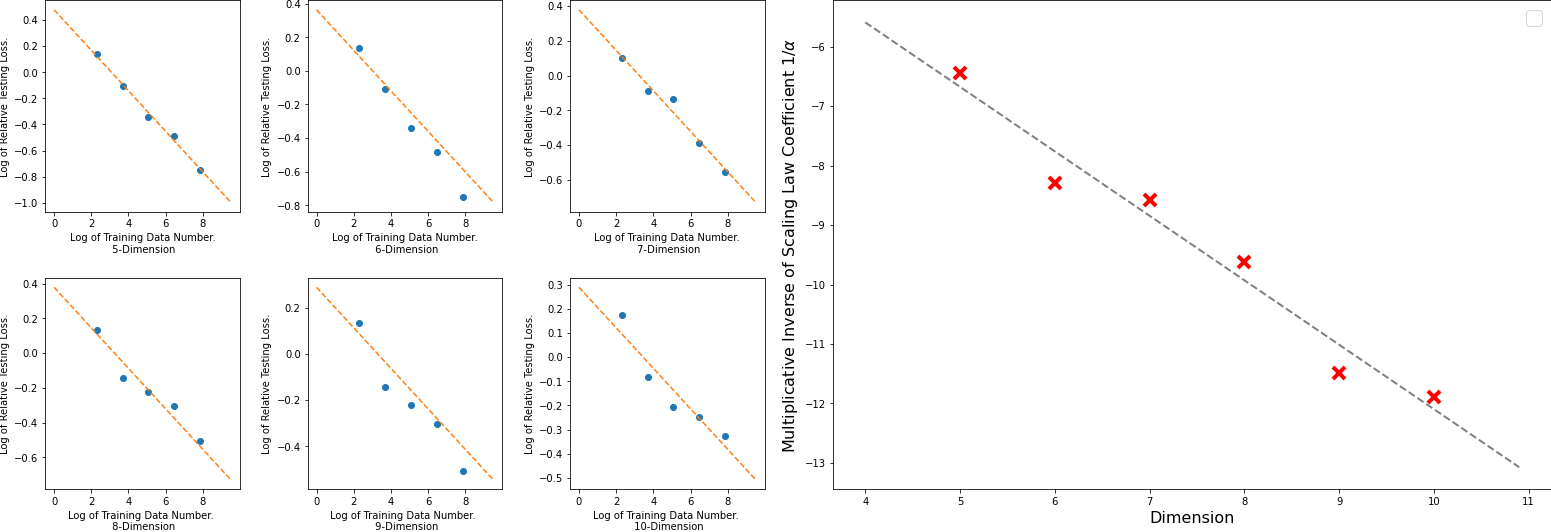

My research aims to understand the data efficiency of semi-supervised and self-supervised learning in high dimensions from a non-parametric estimation and numerical PDE point of view.

Learning in High Dimension

I'm interested in risk estimation for high-dimensional learning problems in the proportional asymptotic regime.

Dynamical System Modeling

I'm interested in physical law discovery and fairness in dynamical systems, using tools from machine learning and causal inference.

Inverse Problems

I'm also working on inverse problems and image processing, including

Research Highlight Video

How Continuous Depth Models Help Understand the Optimization of Deep Networks.

Based on the Mean-field ResNet paper published at ICML 2020 and the YOPO paper published at NeurIPS 2019.

Recent News

[2020/07/10] I will give a talk at the One World Seminar Series on the Mathematics of Machine Learning, as part of the thematic day on the training of continuous ResNets.

[2021/03/01] I'm hosting a seminar on empirical processes this quarter: link

[2021/05/17] I'm honored to receive a Stanford Interdisciplinary Graduate Fellowship (a three-year fellowship).

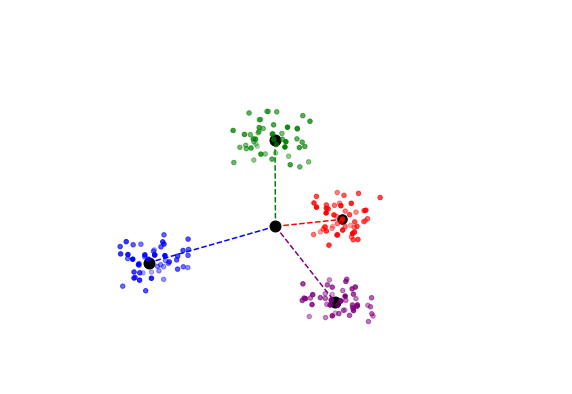

[2021/06/04] We have a new paper on the theory of Neural Collapse, available on request!

[2021/09/15] We have a new paper on the theory of Neural PDE Solvers, available on request!

Recent Highlight Publications

|

Yiping Lu*, Haoxuan Chen,

Jianfeng Lu,

Lexing Ying*,

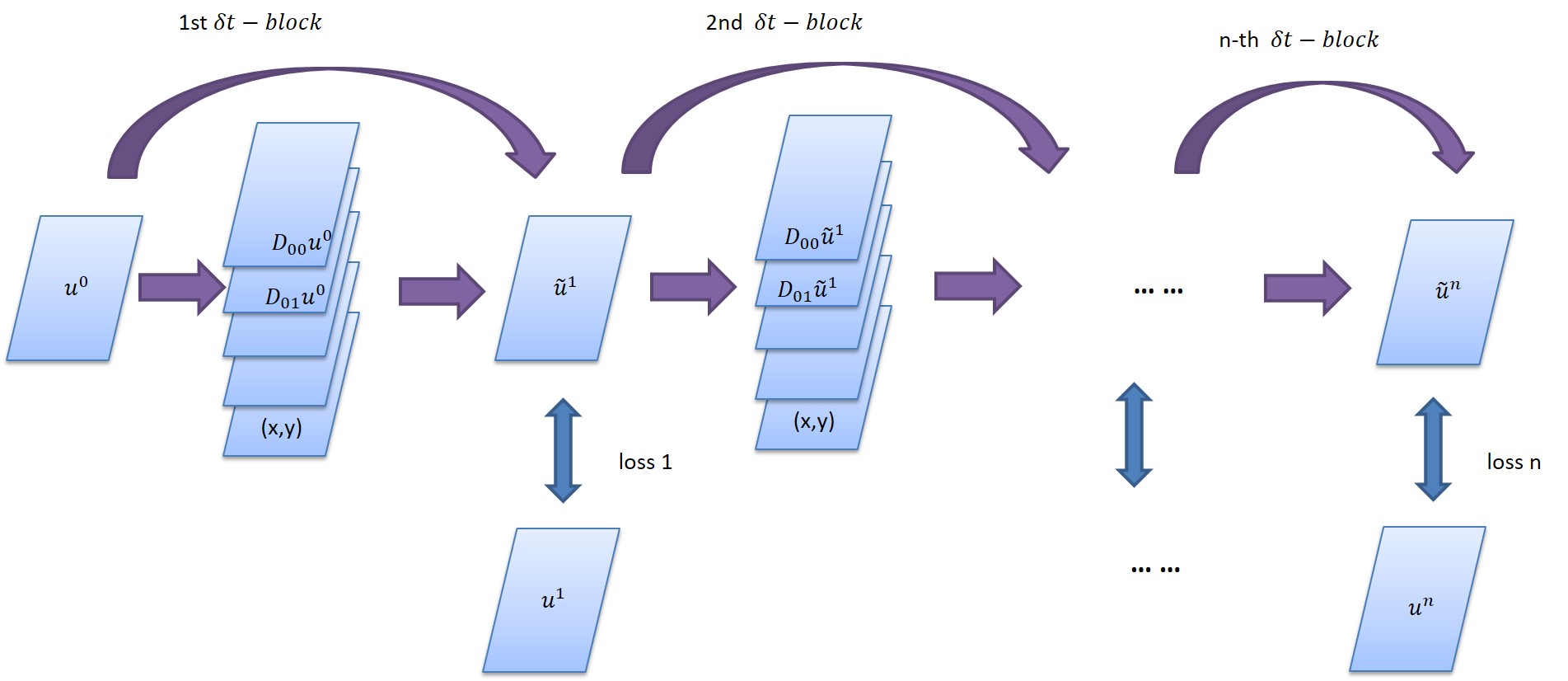

Jose Blanchet . Convergence Proof of Deep PDE Solvers (a different title),

In Submission 2021

[ paper] [ arXiv] (Available on request.)

|

|

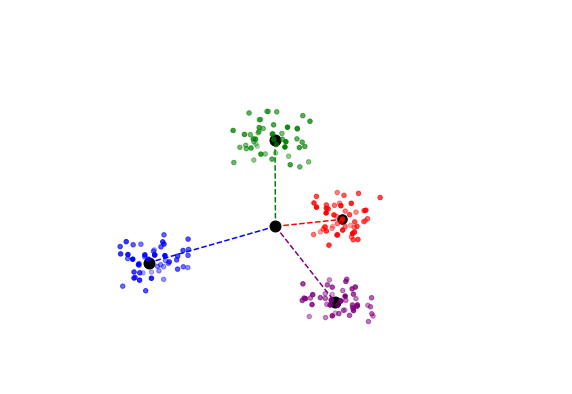

Wenlong Ji, Yiping Lu*, Yiliang Zhang, Zhun Deng, Weijie J Su. How Gradient Descent Separates Data with Neural Collapse: A Layer-Peeled Perspective.

Submitted

[paper] [arXiv] [slide] (Available on request.)

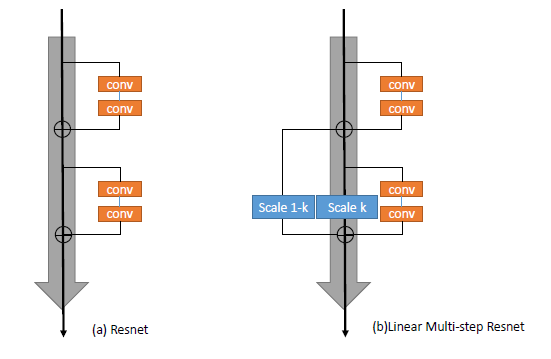

Yiping Lu*, Chao Ma, Yulong Lu, Jianfeng Lu, Lexing Ying. "A Mean-field Analysis of Deep ResNet and Beyond: Towards Provable Optimization Via Overparameterization From Depth"

Thirty-seventh International Conference on Machine Learning (ICML), 2020

Short version presented at ICLR 2020 Workshop on Integration of Deep Neural Models and Differential Equations. (Oral) [ paper] [ arXiv] [slide] [Video]


Publications

Continuous Depth Neural Network


Yiping Lu*, Chao Ma, Yulong Lu, Jianfeng Lu, Lexing Ying. "A Mean-field Analysis of Deep ResNet and Beyond: Towards Provable Optimization Via Overparameterization From Depth"

Thirty-seventh International Conference on Machine Learning (ICML), 2020

Short version presented at ICLR 2020 Workshop on Integration of Deep Neural Models and Differential Equations. (Oral) [ paper] [ arXiv] [slide] [Video]


Dinghuai Zhang*, Tianyuan Zhang*, Yiping Lu*, Zhanxing Zhu, Bin Dong. "You Only Propagate Once: Painless Adversarial Training Using Maximal Principle." (*equal contribution)

33rd Annual Conference on Neural Information Processing Systems (NeurIPS), 2019.

[paper] [arXiv] [slide] [Code] [poster]

Yiping Lu*, Zhuohan Li*, Di He, Zhiqing Sun, Bin Dong, Tao Qin, Liwei Wang, Tie-yan Liu. "Understanding and Improving Transformer From a Multi-Particle Dynamic System Point of View." (*equal contribution)

Submitted. arXiv preprint: 1906.02762

[paper] [arXiv] [slide] [Code]

Yiping Lu, Aoxiao Zhong, Quanzheng Li, Bin Dong. "Beyond Finite Layer Neural Networks: Bridging Deep Architectures and Numerical Differential Equations"

Thirty-fifth International Conference on Machine Learning (ICML), 2018

[paper] [arXiv] [project page] [slide] [bibtex] [Poster]

Learning Physical Laws

Yiping Lu*, Haoxuan Chen, Jianfeng Lu, Lexing Ying*, Jose Blanchet. Convergence Proof of Deep PDE Solvers (a different title).

In Submission

[paper] [arXiv] (Available on request.)

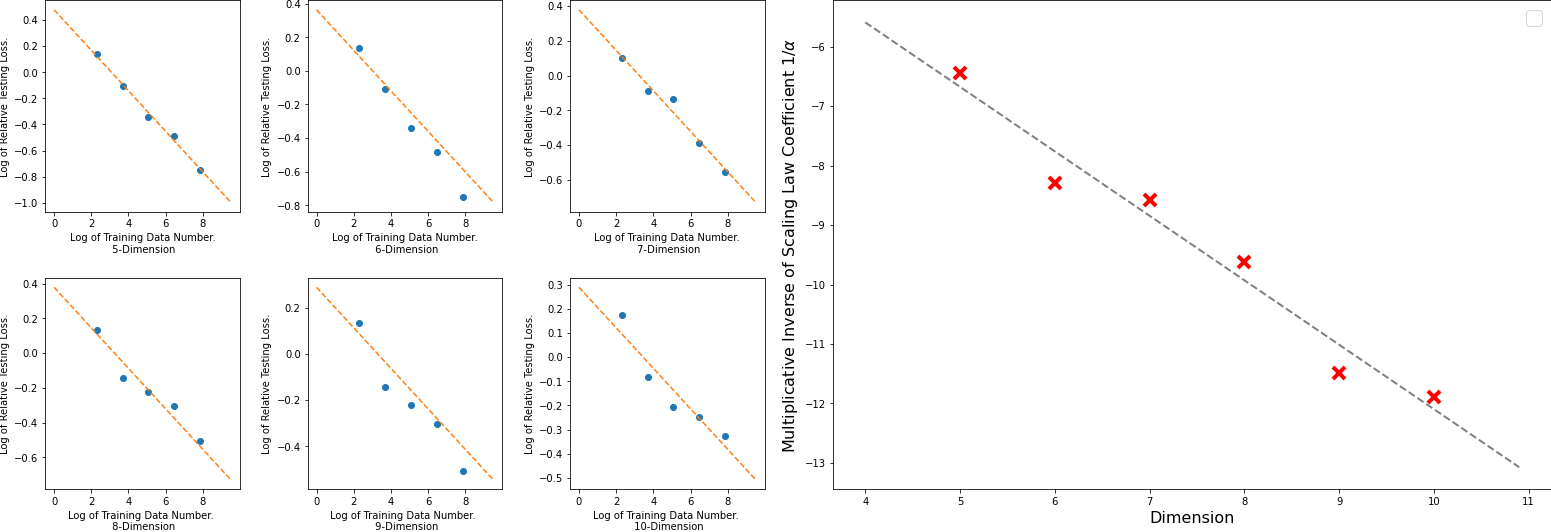

Zichao Long*, Yiping Lu*, Xianzhong Ma*, Bin Dong. "PDE-Net: Learning PDEs from Data" (*equal contribution)

Thirty-fifth International Conference on Machine Learning (ICML), 2018

[paper] [arXiv] [code] [Supplementary Materials] [bibtex]

Zichao Long, Yiping Lu, Bin Dong. "PDE-Net 2.0: Learning PDEs from Data with A Numeric-Symbolic Hybrid Deep Network"

Journal of Computational Physics, 399, 108925, 2019. (arXiv preprint: 1812.04426)

[paper] [arXiv] [code] [slide] [proceeding]

Image and Signal Processing

Bin Dong, Haochen Ju, Yiping Lu, Zuoqiang Shi. "CURE: Curvature Regularization For Missing Data Recovery."

SIAM Journal on Imaging Sciences, 13(4), 2169-2188, 2020

[paper] [arXiv] [Code] [slide] [project page]

Xiaoshuai Zhang*, Yiping Lu*, Jiaying Liu, Bin Dong. "Dynamically Unfolding Recurrent Restorer: A Moving Endpoint Control Method for Image Restoration" (*equal contribution)

Seventh International Conference on Learning Representations (ICLR), 2019

[paper] [arXiv] [code] [slide] [project page] [Open Review]

Inductive Bias Of Neural Network

Wenlong Ji, Yiping Lu*, Yiliang Zhang, Zhun Deng, Weijie J Su. How Gradient Descent Separates Data with Neural Collapse: A Layer-Peeled Perspective.

Submitted

[paper] [arXiv] [slide] (Available on request.)

Bin Dong, Jikai Hou, Yiping Lu*, Zhihua Zhang. "Distillation ≈ Early Stopping? Harvesting Dark Knowledge Utilizing Anisotropic Information Retrieval For Overparameterized Neural Network"

NeurIPS 2019 Workshop on ML with Guarantees. arXiv preprint: 1910.01255

Train

I'm a train fan (铁道迷, 鉄道オタク, 鉄道ファン), generally interested in the history of the railway systems built in Japan and China.


Beijing West--Xiong An 2020/12/27

I took the first high-speed train from Beijing to Xiong An.


Moonlight Nagara Gifu--Tokyo 2019/12/23

Moonlight Nagara is the last overnight seated train in Japan, and it was canceled due to COVID-19 during summer 2020.


Super Thunderbird #1 Kyoto--Kanazawa 2019/12/29

Thunderbird is actually the fastest route from Osaka and Kyoto to Kanazawa, using portions of the Tokaido and Hokuriku lines. If you choose a D seat on Thunderbird #1 during winter, you can enjoy the sunrise over Lake Biwa (Kosei Line).


Genbi Shinkansen Echigo Yuzawa--Niigata 2019/02/16

Genbi Shinkansen is the world's fastest art experience! It will be discontinued in 2020/12. Thanks, Genbi Shinkansen.


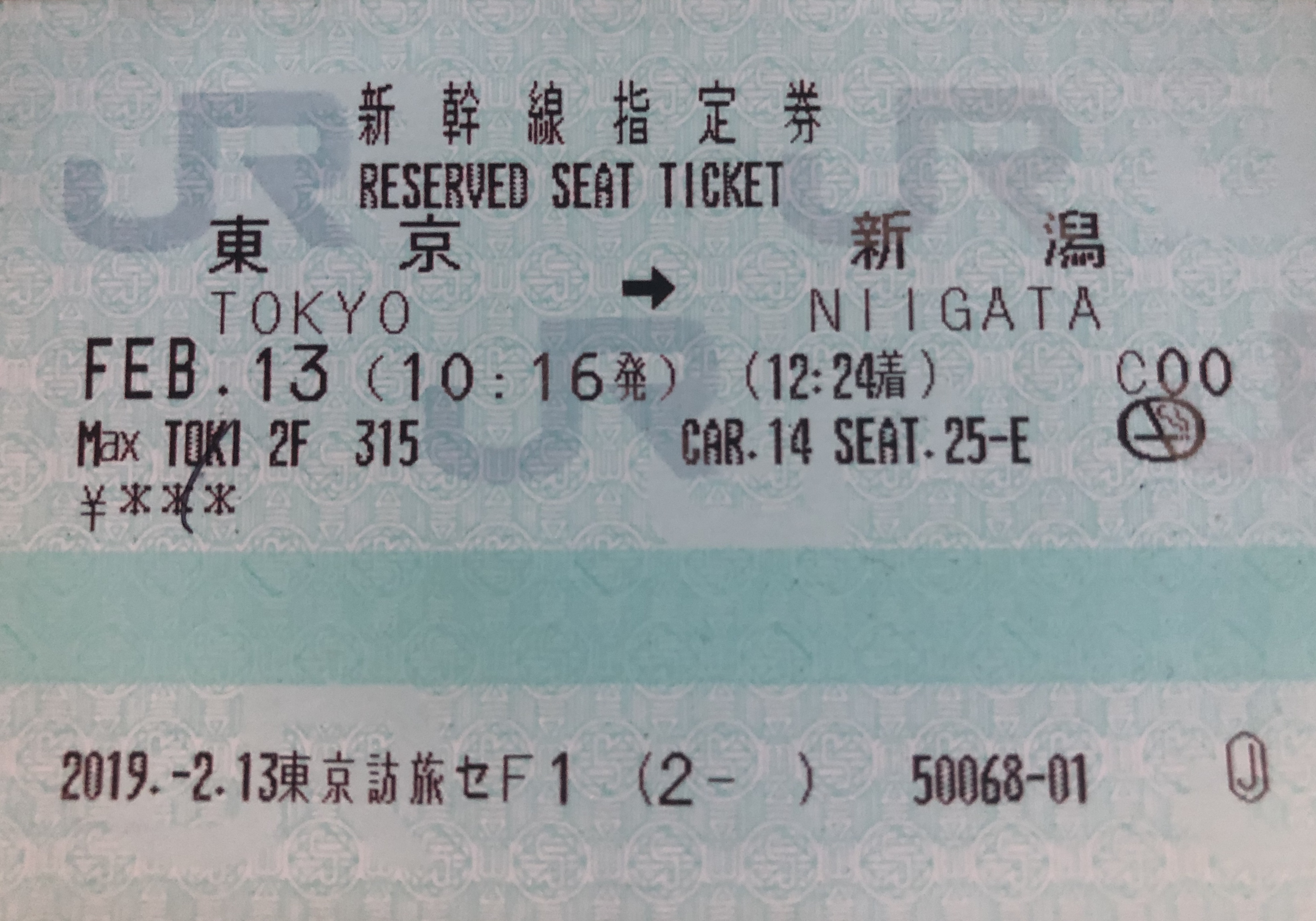

Max Toki #315 Tokyo--Niigata 2019/02/03

Max Toki is the last double-decker super express in Japan. Max Toki #315 is also a song by the Niigata idol group NGT48.

Idol

I love Japanese idol groups, especially STU48, Last Idol, =Love, and ≠ Me. I'm also a fan of AKB48 China Team SH (ShangHai).


≠ Me. First Live -- ≠ Me. 2019/12/30

I got a first-row center ticket for the ≠ Me first live. Lucky!! 大きいステージに連れて行く!! (I'll take you to a big stage!!)


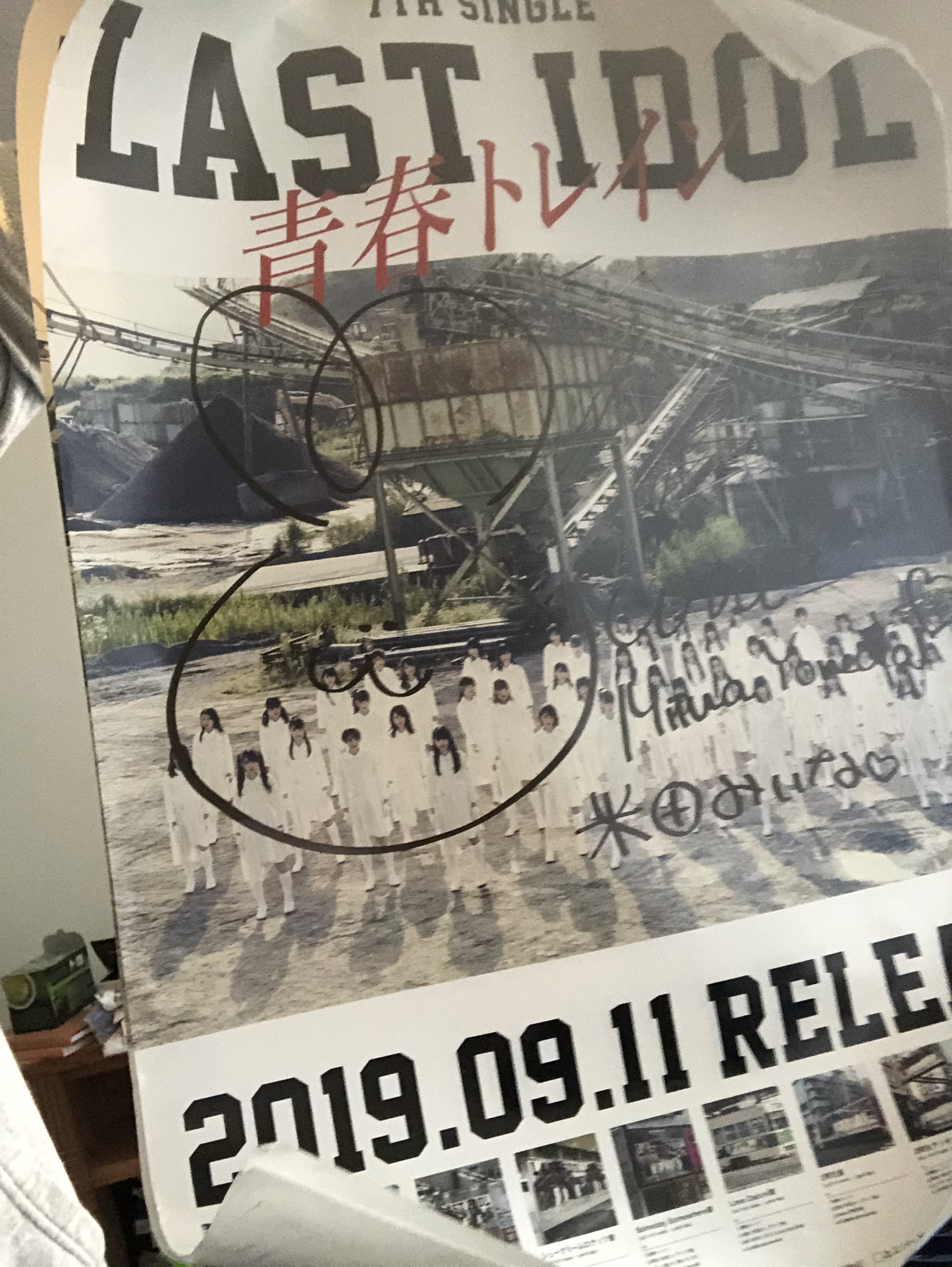

Seishun Train -- Last Idol 2019/09/05

Seishun Train is the 7th single of Last Idol. This is the CD cover in Shibuya. Photo credit: Denny Wu.

P.S. Luckily, I got a poster signed by Yoneda Miina.

Contact Me

Stanford, CA, US

Phone: +86 18001847803

Email: yplu@stanford.edu
