Basic approach

Supervised learning with a qualitative or categorical response.

Classification problems are at least as common as regression problems:

Medical diagnosis: Given the symptoms a patient shows, predict which of 3 conditions is causing them.

Online banking: Determine whether a transaction is fraudulent or not, on the basis of the IP address, client’s history, etc.

Web searching: Based on a user’s history, location, and the string of a web search, predict which link a person is likely to click.

Online advertising: Predict whether a user will click on an ad or not.

Bayes classifier

Suppose \(P(Y\mid X)\) is known. Then, given an input \(x_0\), we predict the response with the highest conditional probability:

\[\hat y_0 = \arg\max_{y} P(Y = y \mid X = x_0).\]

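The Bayes classifier minimizes the expected 0-1 loss, that is, the probability of misclassifying a new observation \((x_0, y_0)\) with prediction \(\hat y_0\):

\[E\left[\mathbf{1}(\hat y_0 \neq y_0)\right] = P(\hat y_0 \neq y_0).\]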

This minimum 0-1 loss (the best we can hope for) is the Bayes error rate.
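To make the Bayes classifier and the Bayes error rate concrete, here is a minimal sketch on a toy problem where the conditional distribution is assumed known. The marginal `p_x` and the table `p_y_given_x` are hypothetical numbers chosen for illustration; they do not come from any dataset.

```python
import numpy as np

# Hypothetical toy problem: X takes values 0, 1, 2 and Y takes values 0, 1.
p_x = np.array([0.5, 0.3, 0.2])        # assumed marginal P(X = x)
p_y_given_x = np.array([               # row x holds P(Y = 0 | x), P(Y = 1 | x)
    [0.9, 0.1],
    [0.4, 0.6],
    [0.2, 0.8],
])


def bayes_classifier(x0):
    """Return the class with the largest conditional probability at x0."""
    return int(np.argmax(p_y_given_x[x0]))


# At a given x, the Bayes classifier is wrong with probability
# 1 - max_y P(Y = y | x). Averaging over X gives the Bayes error rate.
bayes_error = float(np.sum(p_x * (1.0 - p_y_given_x.max(axis=1))))

predictions = [bayes_classifier(x0) for x0 in range(3)]
print(predictions)   # [0, 1, 1]
print(bayes_error)   # approximately 0.21 = 0.5*0.1 + 0.3*0.4 + 0.2*0.2
```

No classifier can achieve a lower expected 0-1 loss on this toy distribution than 0.21, because even the best guess at each \(x\) is wrong with probability \(1 - \max_y P(Y = y \mid X = x)\).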

Basic strategy: estimate \(P(Y\mid X)\)

If we have a good estimate \(\hat P(Y\mid X)\) of the conditional probability, we can use the plug-in classifier:

\[\hat y_0 = \arg\max_{y} \hat P(Y = y \mid X = x_0).\]
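As an illustration of this strategy, the sketch below estimates the conditional probabilities by empirical class frequencies and then predicts the most probable class. The frequency estimator, the helper name `estimate_conditional`, and the training data are all assumptions made for this example; the notes do not prescribe a particular estimator.

```python
import numpy as np


def estimate_conditional(x, y, n_x, n_y):
    """Estimate P(Y = k | X = j) by the fraction of class k among samples with X = j.

    Assumes every value j in 0..n_x-1 appears at least once in x.
    """
    counts = np.zeros((n_x, n_y))
    np.add.at(counts, (x, y), 1)       # counts[j, k] = number of i with x_i = j, y_i = k
    return counts / counts.sum(axis=1, keepdims=True)


# Hypothetical training data with a discrete predictor and a binary response.
x_train = np.array([0, 0, 0, 1, 1, 1, 1, 2, 2, 2])
y_train = np.array([0, 0, 1, 1, 1, 0, 1, 1, 1, 1])

p_hat = estimate_conditional(x_train, y_train, n_x=3, n_y=2)


def plug_in_classifier(x0):
    """Predict the class with the largest estimated conditional probability."""
    return int(np.argmax(p_hat[x0]))


print(p_hat)
print([plug_in_classifier(x0) for x0 in range(3)])   # [0, 1, 1]
```

The quality of the resulting classifier depends entirely on how close \(\hat P(Y\mid X)\) is to the true conditional probability.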

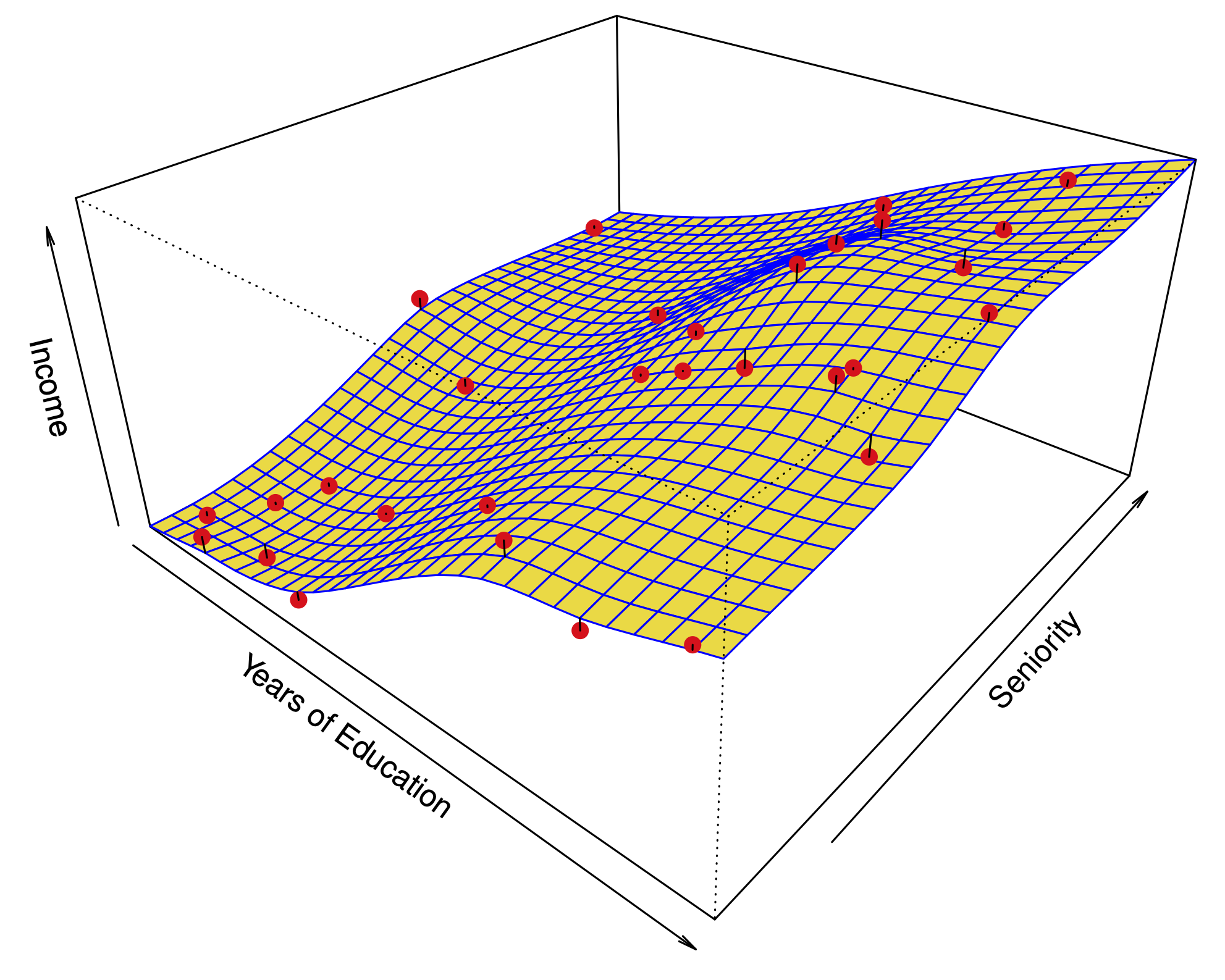

Suppose \(Y\) is a binary variable. Could we use a linear model?

Problems:

The fitted values can be \(<0\) or \(>1\), so they are not valid probabilities.

Difficult to extend to more than 2 categories.
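The first problem is easy to reproduce. The sketch below fits an ordinary least squares line to a binary response on a small synthetic dataset (the data are made up for illustration) and shows that the fitted "probabilities" leave the interval \([0, 1]\).

```python
import numpy as np

# Synthetic data: the response switches from 0 to 1 at x = 5.
x = np.arange(10, dtype=float)
y = (x >= 5).astype(float)             # 0, 0, 0, 0, 0, 1, 1, 1, 1, 1

# Least squares fit of y on an intercept and x.
design = np.column_stack([np.ones_like(x), x])
beta, *_ = np.linalg.lstsq(design, y, rcond=None)
fitted = design @ beta

print(beta)                            # intercept about -0.18, slope about 0.15
print(fitted.min(), fitted.max())      # about -0.18 and 1.18
```

The fitted value is negative at \(x = 0\) and exceeds 1 at \(x = 9\), so it cannot be read as an estimate of \(P(Y = 1 \mid X = x)\) there.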