Dimensionality reduction

Contents

Dimensionality reduction#

Regularization methods#

Variable selection:

Best subset selection

Forward and backward stepwise selection

Shrinkage

Ridge regression

The Lasso (a form of variable selection)

Dimensionality reduction:

Idea: Define a small set of \(M\) predictors which summarize the information in all \(p\) predictors.

Principal Component Regression#

The loadings \(\phi_{11}, \dots, \phi_{p1}\) for the first principal component define the directions of greatest variance in the space of variables.

princomp(USArrests, cor=TRUE)$loadings[,1] # cor=TRUE makes sure to scale variables

- Murder

- 0.535899474938155

- Assault

- 0.58318363490967

- UrbanPop

- 0.278190874619432

- Rape

- 0.543432091445682

Interpretation: The first principal component measures the overall rate of crime.

Principal Component Regression#

The scores \(z_{11}, \dots, z_{n1}\) for the first principal component define the deviation of the samples along this direction.

princomp(USArrests, cor=TRUE)$scores[,1] # cor=TRUE makes sure to scale variables

- Alabama

- 0.985565884503143

- Alaska

- 1.95013775033502

- Arizona

- 1.76316353972298

- Arkansas

- -0.141420289868356

- California

- 2.52398012651925

- Colorado

- 1.51456286110159

- Connecticut

- -1.35864745985436

- Delaware

- 0.0477093090600234

- Florida

- 3.01304227028722

- Georgia

- 1.63928304283591

- Hawaii

- -0.912657145897512

- Idaho

- -1.6397998521635

- Illinois

- 1.37891072442415

- Indiana

- -0.505461361080942

- Iowa

- -2.25364606966872

- Kansas

- -0.796881121217602

- Kentucky

- -0.750859074389295

- Louisiana

- 1.56481797641247

- Maine

- -2.39682948807447

- Maryland

- 1.7633693885314

- Massachusetts

- -0.486166287394261

- Michigan

- 2.10844115004568

- Minnesota

- -1.69268181440212

- Mississippi

- 0.996494459215249

- Missouri

- 0.696787329465988

- Montana

- -1.18545190586353

- Nebraska

- -1.26563654112436

- Nevada

- 2.87439453851442

- New Hampshire

- -2.3839154090396

- New Jersey

- 0.181566109647703

- New Mexico

- 1.9800237544635

- New York

- 1.68257737630865

- North Carolina

- 1.12337860637185

- North Dakota

- -2.99222561501786

- Ohio

- -0.225965422407926

- Oklahoma

- -0.311782855015387

- Oregon

- 0.0591220767886586

- Pennsylvania

- -0.888415824070836

- Rhode Island

- -0.863772063608301

- South Carolina

- 1.32072380381581

- South Dakota

- -1.98777483728934

- Tennessee

- 0.999741684462479

- Texas

- 1.35513820680531

- Utah

- -0.55056526221282

- Vermont

- -2.80141174000027

- Virginia

- -0.096334911236051

- Washington

- -0.216903378616043

- West Virginia

- -2.1085854076825

- Wisconsin

- -2.07971416891733

- Wyoming

- -0.629426663525205

Interpretation: The scores for the first principal component measure the overall rate of crime in each state.

Principal Component Regression#

Idea:

Replace the original predictors, \(X_1, X_2, \dots, X_p\), with the first \(M\) score vectors \(Z_1, Z_2, \dots, Z_M\).

Perform least squares regression, to obtain coefficients \(\theta_0,\theta_1,\dots,\theta_M\).

The model with these derived features is:

Equivalent to a linear regression onto \(X_1,\dots,X_p\), with coefficients: $\(\beta_j = \sum_{m=1}^M \theta_m \phi_{jm}\)$

This constraint in the form of \(\beta_j\) introduces bias, but it can lower the variance of the model.

Application to the Credit dataset#

A model with 11 components is equivalent to least-squares regression

Best CV error is achieved with 10 components (almost no dimensionality reduction)

The left panel shows the coefficients \(\beta_j\) estimated for each \(M\).

The coefficients shrink as we decrease \(M\)!

Relationship between PCR and Ridge regression#

Least squares regression: want to minimize

Score equation:

Solution:

Compute singular value decomposition: \(\mathbf{X} = U D^{1/2} V^T\), where \(D^{1/2} = \text{diag}(\sqrt{d_1},\dots,\sqrt{d_p})\).

Then

where \(D^{-1} = \text{diag}(1/d_1,1/d_2,\dots,1/d_p)\).

Relationship between PCR and Ridge regression#

Ridge regression: want to minimize

Score equation:

Solution:

Note $\((\mathbf{X}^T\mathbf{X}+ \lambda I)^{-1} = V D_\lambda ^{-1} V^T\)\( where \)D_\lambda ^{-1} = \text{diag}(1/(d_1+\lambda),1/(d_2+\lambda),\dots,1/(d_p+\lambda))$.

Relationship between PCR and Ridge regression#

Predictions of least squares regression:

Predictions of Ridge regression:

The projection of \(y\) onto a principal component is shrunk toward zero. The smaller the principal component, the larger the shrinkage.

Predictions of PCR:

The projections onto small principal components are shrunk to zero.

Principal Component Regression#

In each case \(n=50\), \(p=45\).

Left: response is a function of all the predictors (dense).

Right: response is a function of 2 predictors (sparse - good for Lasso).

Ridge and Lasso on dense dataset

The Lasso (—), Ridge (\(\cdots\)).

Optimal PCR seems at least comparable to optimal LASSO and ridge.

Ridge and Lasso on sparse dataset

The Lasso (—), Ridge (\(\cdots\)).

Lasso clearly better than PCR here.

Again, \(n=50\), \(p=45\) (as in ridge, LASSO examples)

The response is a function of the first 5 principal components.

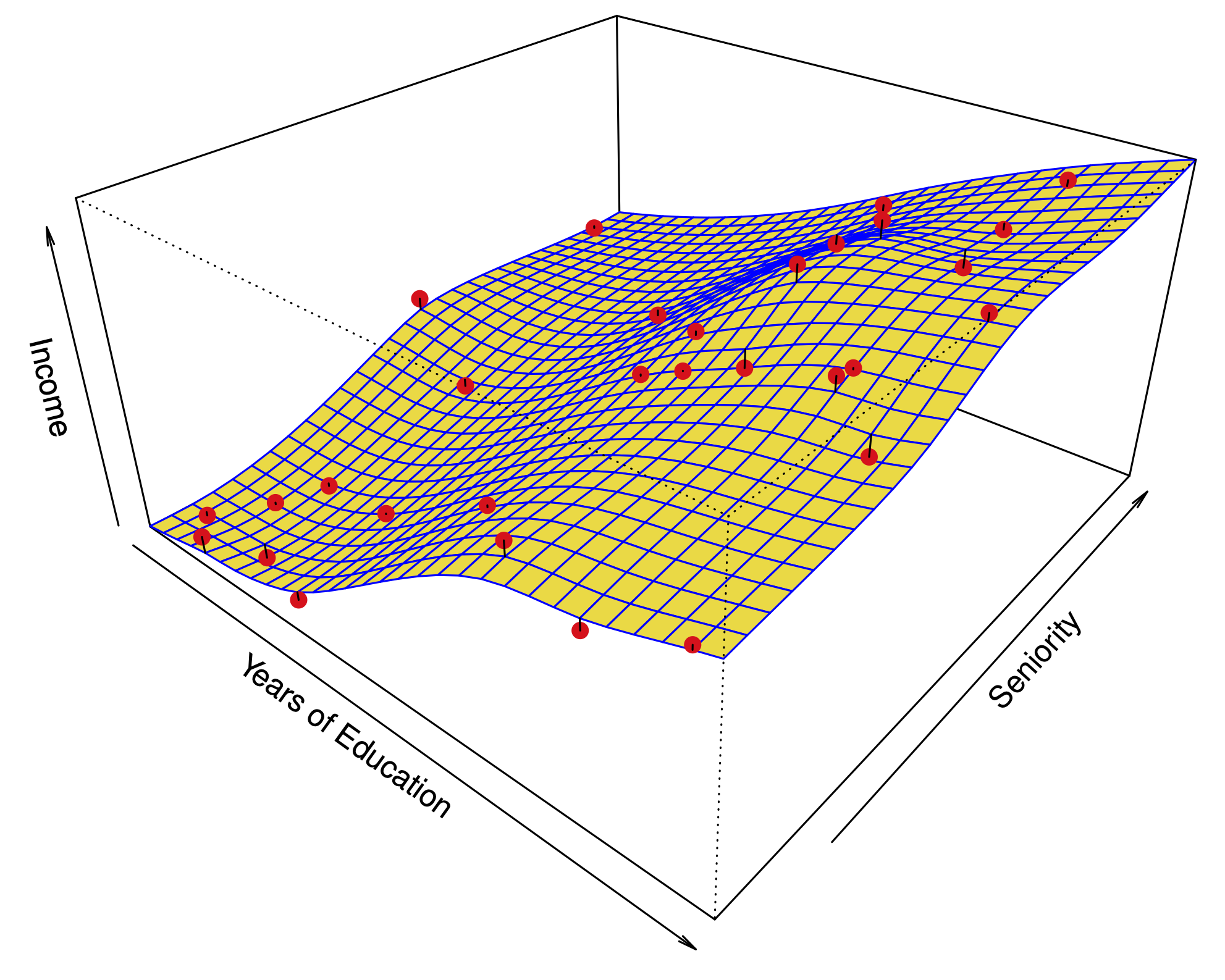

Partial least squares#

Principal components regression derives \(Z_1,\dots,Z_M\) using only the predictors \(X_1,\dots,X_p\).

In partial least squares, we use the response \(Y\) as well. (To be fair, best subsets and stepwise do as well.)

Algorithm#

Define \(Z_1 = \sum_{j=1}^p \phi_{j1} X_j\), where \(\phi_{j1}\) is the coefficient of a simple linear regression of \(Y\) onto \(X_j\).

Let \(X_j^{(2)}\) be the residual of regressing \(X_j\) onto \(Z_1\).

Define \(Z_2 = \sum_{j=1}^p \phi_{j2} X_j^{(2)}\), where \(\phi_{j2}\) is the coefficient of a simple linear regression of \(Y\) onto \(X_j^{(2)}\).

Let \(X_j^{(3)}\) be the residual of regressing \(X_j^{(2)}\) onto \(Z_2\).

Partial least squares#

At each step, we try to find the linear combination of predictors that is highly correlated to the response (the highest correlation is the least squares fit).

After each step, we transform the predictors such that they are \emph{uncorrelated} from the linear combination chosen.

Compared to PCR, partial least squares has less bias and more variance (a stronger tendency to overfit).