Shrinkage methods

A mainstay of modern statistics!

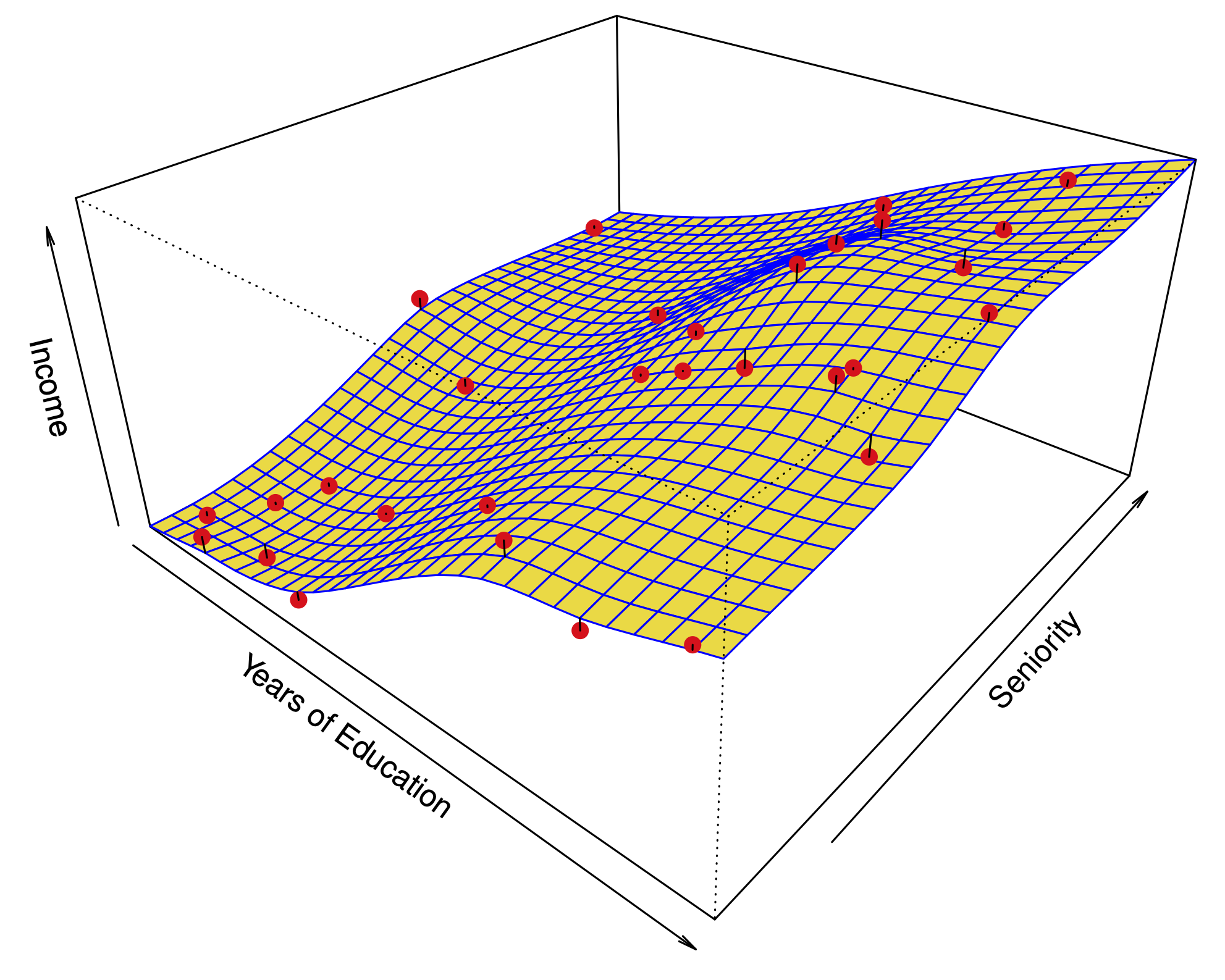

The idea is to perform a linear regression, while regularizing or shrinking the coefficients \(\hat \beta\) toward 0.

Why would shrunk coefficients be better?#

This introduces bias, but may significantly decrease the variance of the estimates. If the latter effect is larger, this would decrease the test error.

Extreme example: set \(\hat{\beta}\) to 0 – variance is 0!

There are Bayesian motivations to do this: priors concentrated near 0 tend to shrink the parameters’ posterior distribution.

Ridge regression#

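Ridge regression solves the following optimization: $\(\underset{\beta}{\text{minimize}} ~~\sum_{i=1}^n \left(y_i -\beta_0 -\sum_{j=1}^p \beta_j x_{i,j}\right)^2 + \lambda \sum_{j=1}^p \beta_j^2.\)$

The first term is the RSS of the model at \(\beta\).

The second term is the penalty: \(\lambda\) times the squared \(\ell_2\) norm of \(\beta\), or \(\|\beta\|_2^2.\) The intercept \(\beta_0\) is not penalized.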

The parameter \(\lambda\) is a tuning parameter. It modulates the importance of fit vs. shrinkage.

We find an estimate \(\hat\beta^R_\lambda\) for many values of \(\lambda\) and then choose \(\lambda\) by cross-validation. Fortunately, computing the estimates over the whole grid of \(\lambda\) values costs about as much as a single least-squares regression.
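A minimal sketch of computing ridge estimates over a grid of \(\lambda\) values, assuming the predictors and response have been centered (so the intercept drops out) and using the closed form \(\hat\beta^R_\lambda = (X^TX + \lambda I)^{-1}X^Ty\). The function name `ridge_path` and the simulated data are illustrative assumptions, not part of the notes.

```python
import numpy as np

def ridge_path(X, y, lambdas):
    """Ridge coefficients for each lambda, assuming X and y are centered."""
    p = X.shape[1]
    gram = X.T @ X          # computed once and reused for every lambda
    Xty = X.T @ y
    return np.array([np.linalg.solve(gram + lam * np.eye(p), Xty)
                     for lam in lambdas])

# Simulated data (hypothetical, for illustration only).
rng = np.random.default_rng(0)
n, p = 50, 5
X = rng.normal(size=(n, p))
X -= X.mean(axis=0)
y = X @ np.array([3.0, -2.0, 0.0, 0.0, 1.0]) + rng.normal(size=n)
y -= y.mean()

lambdas = [0.0, 1.0, 10.0, 100.0]
betas = ridge_path(X, y, lambdas)
norms = np.linalg.norm(betas, axis=1)
for lam, nrm in zip(lambdas, norms):
    print(f"lambda={lam:6.1f}  ||beta||_2={nrm:.3f}")
```

With \(\lambda = 0\) this reproduces least squares, and the norm of the coefficient vector shrinks as \(\lambda\) grows.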

Ridge regression#

In least-squares linear regression, scaling the variables has no effect on the fit of the model: $\(Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p + \epsilon.\)$

Multiplying \(X_1\) by \(c\) can be compensated by dividing \(\hat \beta_1\) by \(c\): after scaling we have the same RSS.

In ridge regression, this is not true.

In practice, what do we do?

Scale each variable such that it has sample variance 1 before running the regression.

This prevents penalizing some coefficients more than others.
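The effect of rescaling can be checked numerically. The sketch below is an illustration on simulated data; the helper names `ridge_fit` and `standardize` are assumptions. It multiplies \(X_1\) by 1000 and compares fitted values for least squares (\(\lambda = 0\)), for ridge on the raw variables, and for ridge after scaling each variable to sample variance 1.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Ridge fitted values with an unpenalized intercept (handled by centering)."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    beta = np.linalg.solve(Xc.T @ Xc + lam * np.eye(X.shape[1]), Xc.T @ yc)
    return y.mean() + Xc @ beta

def standardize(X):
    """Scale each column to sample variance 1."""
    return X / X.std(axis=0, ddof=1)

rng = np.random.default_rng(1)
X = rng.normal(size=(40, 3))
y = X @ np.array([1.0, 2.0, -1.0]) + rng.normal(size=40)

X_scaled = X.copy()
X_scaled[:, 0] *= 1000.0   # e.g. a change of units for X_1

# Do the fitted values agree before and after rescaling X_1?
ols_same = np.allclose(ridge_fit(X, y, 0.0), ridge_fit(X_scaled, y, 0.0))
ridge_same = np.allclose(ridge_fit(X, y, 5.0), ridge_fit(X_scaled, y, 5.0))
ridge_std_same = np.allclose(ridge_fit(standardize(X), y, 5.0),
                             ridge_fit(standardize(X_scaled), y, 5.0))
print("least squares unchanged:", ols_same)
print("ridge on raw variables unchanged:", ridge_same)
print("ridge on standardized variables unchanged:", ridge_std_same)
```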

Ridge regression of balance in the Credit dataset#

Ridge regression#

In a simulation study, we compute squared bias, variance, and test error as a function of \(\lambda\).

In practice, cross-validation yields an estimate of the test error, but the bias and variance cannot be observed.
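A small simulation of this kind might look as follows. It is a sketch under assumptions that are not in the notes: a fixed Gaussian design, a known true coefficient vector, and a single test point `x0`. Because the truth is known, squared bias and variance of the prediction at `x0` can be estimated by refitting on many simulated responses.

```python
import numpy as np

rng = np.random.default_rng(4)
n, p, sigma = 50, 45, 1.0
X = rng.normal(size=(n, p))          # design held fixed across replications
beta_true = rng.normal(size=p)       # hypothetical true coefficients
x0 = rng.normal(size=p)              # a single test point
f0 = x0 @ beta_true                  # true regression function at x0

lambdas = [0.0, 0.1, 1.0, 10.0, 100.0]
n_rep = 500
preds = np.empty((n_rep, len(lambdas)))
for r in range(n_rep):
    # Fresh noise each replication; the same y is used for every lambda.
    y = X @ beta_true + sigma * rng.normal(size=n)
    for k, lam in enumerate(lambdas):
        beta_hat = np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)
        preds[r, k] = x0 @ beta_hat

sq_bias = (preds.mean(axis=0) - f0) ** 2
variance = preds.var(axis=0)
test_mse = sq_bias + variance + sigma ** 2   # expected squared error at x0

for lam, b, v, m in zip(lambdas, sq_bias, variance, test_mse):
    print(f"lambda={lam:6.1f}  bias^2={b:8.3f}  var={v:8.3f}  test MSE={m:8.3f}")
```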

Selecting \(\lambda\) by cross-validation for ridge regression#
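One way to carry out the selection is sketched below, assuming 5-fold cross-validation, a log-spaced grid of \(\lambda\) values, and simulated data; the helper names `ridge_coef` and `cv_error` are hypothetical. Centering and scaling use training-fold statistics only, so the held-out fold does not leak into the fit.

```python
import numpy as np

def ridge_coef(X, y, lam):
    """Closed-form ridge coefficients for centered X and y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def cv_error(X, y, lam, k=5, seed=0):
    """Average held-out mean squared error of ridge over k folds."""
    n = len(y)
    # The same seed gives the same folds for every lambda.
    folds = np.array_split(np.random.default_rng(seed).permutation(n), k)
    errors = []
    for test_idx in folds:
        train = np.setdiff1d(np.arange(n), test_idx)
        mu = X[train].mean(axis=0)
        sd = X[train].std(axis=0, ddof=1)
        y_bar = y[train].mean()
        beta = ridge_coef((X[train] - mu) / sd, y[train] - y_bar, lam)
        pred = y_bar + ((X[test_idx] - mu) / sd) @ beta
        errors.append(np.mean((y[test_idx] - pred) ** 2))
    return float(np.mean(errors))

rng = np.random.default_rng(2)
n, p = 60, 30
X = rng.normal(size=(n, p))
y = X @ rng.normal(scale=0.3, size=p) + rng.normal(size=n)

lambdas = np.logspace(-2, 3, 20)
cv_errors = np.array([cv_error(X, y, lam) for lam in lambdas])
best_lambda = lambdas[np.argmin(cv_errors)]
print(f"lambda chosen by 5-fold CV: {best_lambda:.3g}")
```

After choosing \(\lambda\), the model would typically be refit on all of the data at that value.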

Lasso regression#

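Lasso regression solves the following optimization: $\(\underset{\beta}{\text{minimize}} ~~\sum_{i=1}^n \left(y_i -\beta_0 -\sum_{j=1}^p \beta_j x_{i,j}\right)^2 + \lambda \sum_{j=1}^p |\beta_j|.\)$

The first term is the RSS of the model at \(\beta\).

The second term is the penalty: \(\lambda\) times the \(\ell_1\) norm of \(\beta\), or \(\|\beta\|_1.\)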

The parameter \(\lambda\) is a tuning parameter. It modulates the importance of fit vs. shrinkage.

Why would we use the Lasso instead of Ridge regression?#

Ridge regression shrinks all the coefficients toward zero, but in general none of them is exactly zero.

The Lasso shrinks some of the coefficients all the way to zero.

A convex alternative (relaxation) to best subset selection (and its approximation stepwise selection)!
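The difference can be seen on simulated data. The sketch below fits the Lasso by cyclic coordinate descent, which is one standard algorithm and not necessarily the one used to produce the figures in these notes, and compares it with ridge at the same \(\lambda\). The data, the value \(\lambda = 80\), and the function names are assumptions made for illustration.

```python
import numpy as np

def soft_threshold(z, t):
    """Shrink z toward zero by t, setting it to zero if |z| <= t."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def lasso_cd(X, y, lam, n_iter=500):
    """Minimize RSS + lam * ||beta||_1 by cyclic coordinate descent.

    Assumes X and y are centered, so no intercept is needed.
    """
    p = X.shape[1]
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0)
    for _ in range(n_iter):
        for j in range(p):
            # Partial residual with the contribution of coordinate j removed.
            r_j = y - X @ beta + X[:, j] * beta[j]
            beta[j] = soft_threshold(X[:, j] @ r_j, lam / 2) / col_sq[j]
    return beta

def ridge(X, y, lam):
    """Closed-form ridge coefficients for centered X and y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

rng = np.random.default_rng(3)
n, p = 100, 8
X = rng.normal(size=(n, p))
X = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
true_beta = np.array([4.0, -3.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
y = X @ true_beta + rng.normal(size=n)
y = y - y.mean()

lam = 80.0
beta_lasso = lasso_cd(X, y, lam)
beta_ridge = ridge(X, y, lam)
print("lasso:", np.round(beta_lasso, 3))
print("ridge:", np.round(beta_ridge, 3))
print("exact zeros, lasso:", int(np.sum(beta_lasso == 0)),
      " ridge:", int(np.sum(beta_ridge == 0)))
```

The threshold is \(\lambda/2\) because the objective uses the RSS without a factor of \(1/2\).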

Ridge regression of balance in the Credit dataset#

A lot of pesky small coefficients throughout the regularization path

Lasso regression of balance in the Credit dataset#

Those coefficients are shrunk to zero

An alternative formulation for regularization#

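Ridge: for every \(\lambda\), there is an \(s\) such that \(\hat \beta^R_\lambda\) solves: $\(\underset{\beta}{\text{minimize}} ~~\left\{\sum_{i=1}^n \left(y_i -\beta_0 -\sum_{j=1}^p \beta_j x_{i,j}\right)^2 \right\} ~~~ \text{subject to}~~~ \sum_{j=1}^p \beta_j^2<s.\)$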

Lasso: for every \(\lambda\), there is an \(s\) such that \(\hat \beta^L_\lambda\) solves: $\(\underset{\beta}{\text{minimize}} ~~\left\{\sum_{i=1}^n \left(y_i -\beta_0 -\sum_{j=1}^p \beta_j x_{i,j}\right)^2 \right\} ~~~ \text{subject to}~~~ \sum_{j=1}^p |\beta_j|<s.\)$

Best subset: $\(\underset{\beta}{\text{minimize}} ~~\left\{\sum_{i=1}^n \left(y_i -\beta_0 -\sum_{j=1}^p \beta_j x_{i,j}\right)^2 \right\} ~~~ \text{s.t.}~~~ \sum_{j=1}^p \mathbf{1}(\beta_j\neq 0)<s.\)$

Visualizing Ridge and the Lasso with 2 predictors#

Diamond (Lasso): \(\sum_{j=1}^p |\beta_j|<s\). Circle (Ridge): \(\sum_{j=1}^p \beta_j^2<s\). Best subset allowing a single non-zero coefficient: the union of the axes.

When is the Lasso better than Ridge?#

Example 1: Most of the coefficients are non-zero.

Bias, variance, MSE

The Lasso (—), Ridge (\(\cdots\)).

The bias is about the same for both methods.

The variance of Ridge regression is smaller, and so is its MSE.

When is the Lasso better than Ridge?#

Example 2: Only 2 coefficients are non-zero.

Bias, variance, MSE

The Lasso (—), Ridge (\(\cdots\)).

The bias, variance, and MSE are lower for the Lasso.

Selecting \(\lambda\) by cross-validation for lasso regression#

A very special case: ridge#

Suppose \(n=p\), our matrix of predictors is \(\mathbf{X} = I\), and there is no intercept.

Then, the objective function in Ridge regression can be simplified: $\(\sum_{j=1}^p (y_j-\beta_j)^2 + \lambda\sum_{j=1}^p \beta_j^2\)$

We can minimize the terms that involve each \(\beta_j\) separately: $\((y_j-\beta_j)^2 + \lambda \beta_j^2.\)$

It is easy to show that $\(\hat \beta^R_j = \frac{y_j}{1+\lambda}.\)$

A very special case: lasso#

Similar story for the Lasso; the objective function is: $\(\sum_{j=1}^p (y_j-\beta_j)^2 + \lambda\sum_{j=1}^p |\beta_j|\)$

We can minimize the terms that involve each \(\beta_j\) separately: $\((y_j-\beta_j)^2 + \lambda |\beta_j|.\)$

It is easy to show that $\(\hat \beta^L_j = \begin{cases} y_j -\lambda/2 & \text{if }y_j>\lambda/2; \\ y_j +\lambda/2 & \text{if }y_j<-\lambda/2; \\ 0 & \text{if }|y_j|\leq\lambda/2. \end{cases} \)$

Lasso and Ridge coefficients as a function of \(y\)#
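The two coordinate-wise solutions for the case \(\mathbf{X} = I\) can be written as functions of \(y_j\) and checked against a brute-force minimization over a fine grid. This is a sketch; the function names `ridge_special` and `lasso_special`, the value \(\lambda = 1\), and the test values of \(y\) are chosen for illustration. Ridge rescales \(y_j\) by \(1/(1+\lambda)\), while the Lasso soft-thresholds it at \(\lambda/2\).

```python
import numpy as np

def ridge_special(y, lam):
    """Minimizer of (y - b)^2 + lam * b^2: proportional shrinkage."""
    return y / (1.0 + lam)

def lasso_special(y, lam):
    """Minimizer of (y - b)^2 + lam * |b|: soft-thresholding at lam / 2."""
    return np.sign(y) * np.maximum(np.abs(y) - lam / 2.0, 0.0)

lam = 1.0
grid = np.linspace(-5, 5, 200001)    # brute-force search grid for b
for y in [-2.0, -0.3, 0.0, 0.4, 1.5]:
    b_ridge = grid[np.argmin((y - grid) ** 2 + lam * grid ** 2)]
    b_lasso = grid[np.argmin((y - grid) ** 2 + lam * np.abs(grid))]
    print(f"y={y:5.1f}  ridge: formula={ridge_special(y, lam):7.4f} "
          f"grid={b_ridge:7.4f}  lasso: formula={lasso_special(y, lam):7.4f} "
          f"grid={b_lasso:7.4f}")
```

Plotted against \(y\), the ridge solution is a line through the origin with slope \(1/(1+\lambda)\), and the Lasso solution is flat at zero on \([-\lambda/2, \lambda/2]\) and parallel to the identity line outside it.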

Bayesian interpretation#

Ridge: \(\hat\beta^R\) is the posterior mean, with a Normal prior on \(\beta\).

Lasso: \(\hat\beta^L\) is the posterior mode, with a Laplace prior on \(\beta\).
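One way to see the connection is the following sketch, which makes assumptions not stated in the notes: Gaussian errors with known variance \(\sigma^2\), independent priors with density \(g\) on each \(\beta_j\), and a flat prior on \(\beta_0\). Up to an additive constant, the negative log posterior is $\(-\log p(\beta \mid y) = \frac{1}{2\sigma^2}\sum_{i=1}^n \left(y_i -\beta_0 -\sum_{j=1}^p \beta_j x_{i,j}\right)^2 - \sum_{j=1}^p \log g(\beta_j).\)$

With a Normal prior \(\beta_j \sim N(0, \tau^2)\), we have \(-\log g(\beta_j) = \beta_j^2/(2\tau^2)\) plus a constant. Multiplying through by \(2\sigma^2\), the posterior mode minimizes $\(\sum_{i=1}^n \left(y_i -\beta_0 -\sum_{j=1}^p \beta_j x_{i,j}\right)^2 + \frac{\sigma^2}{\tau^2}\sum_{j=1}^p \beta_j^2,\)$ which is the ridge objective with \(\lambda = \sigma^2/\tau^2\). The posterior is itself Normal, so its mode and mean coincide.

With a Laplace prior \(g(\beta_j) = \frac{1}{2b} e^{-|\beta_j|/b}\), we have \(-\log g(\beta_j) = |\beta_j|/b\) plus a constant, and the posterior mode minimizes $\(\sum_{i=1}^n \left(y_i -\beta_0 -\sum_{j=1}^p \beta_j x_{i,j}\right)^2 + \frac{2\sigma^2}{b}\sum_{j=1}^p |\beta_j|,\)$ which is the Lasso objective with \(\lambda = 2\sigma^2/b\). Here the posterior is not symmetric in general, so the Lasso estimate is the mode but not the mean.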