Boosting

Boosting#

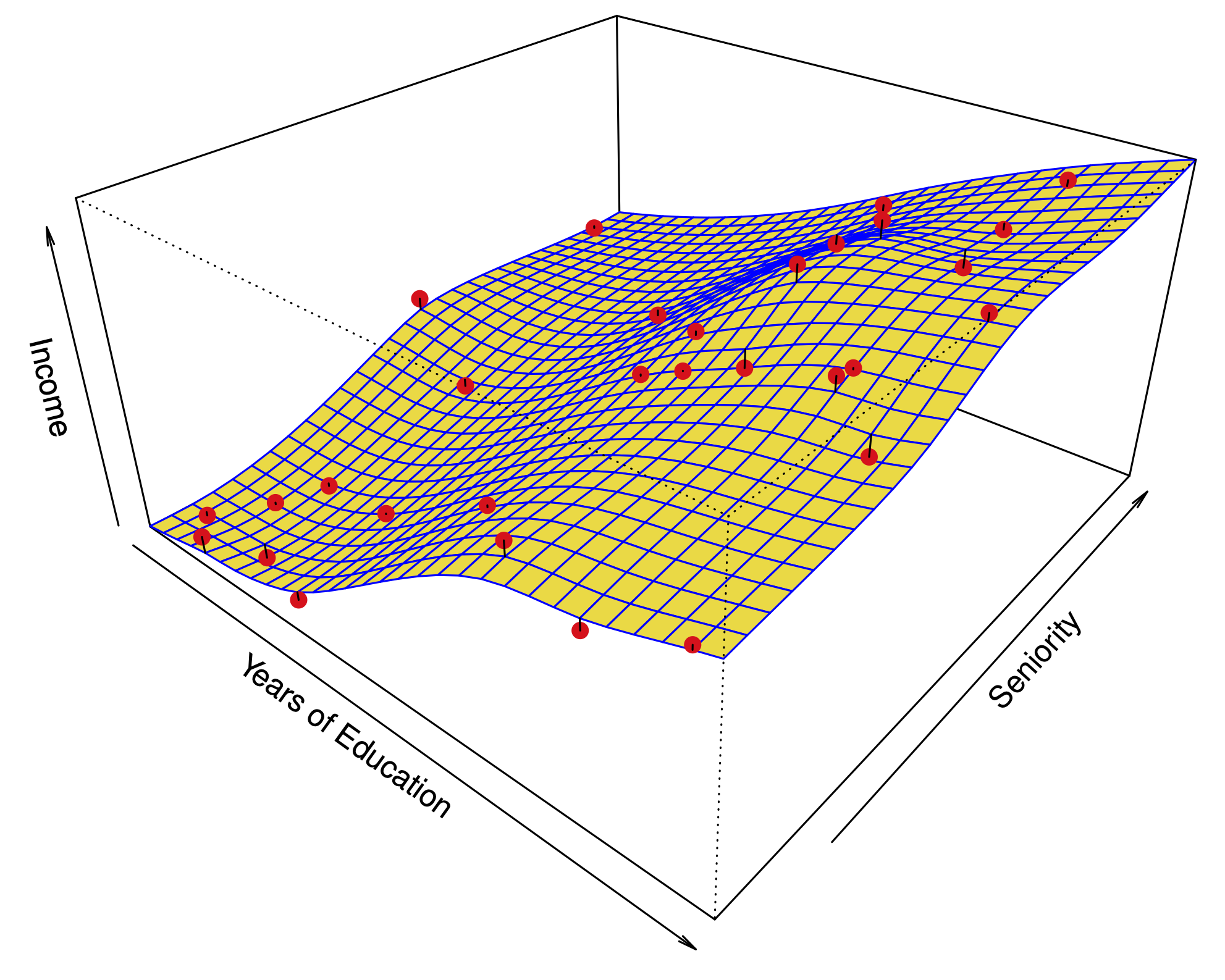

Boosting is another ensemble method, i.e. it combines a collection of learners.

Instead of randomizing each learner, each new learner is fit to the residuals left by the previous ones (not unlike backfitting).

Boosting regression trees#

1. Set \(\hat f(x) = 0\), and \(r_i=y_i\) for \(i=1,\dots,n\).

2. For \(b=1,\dots,B\), iterate:

   1. Fit a regression tree \(\hat f^b\) with \(d\) splits to the response \(r_1,\dots,r_n\).

   2. Update the prediction to:

      \[\hat f(x) \leftarrow \hat f(x) + \lambda \hat f^b(x).\]

   3. Update the residuals:

      \[ r_i \leftarrow r_i - \lambda \hat f^b(x_i).\]

3. Output the final model:

   \[\hat f(x) = \sum_{b=1}^B \lambda \hat f^b(x).\]
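The steps above can be sketched in plain Python. This is a minimal illustration only, assuming one-dimensional inputs and trees with a single split (\(d=1\), i.e. stumps). The function names `fit_stump`, `stump_predict`, `boost`, and `predict`, and the toy data, are hypothetical and not part of these notes.

```python
import math


def fit_stump(x, r):
    """Fit a one-split regression tree (d = 1) to residuals r on 1-D inputs x.

    Returns (threshold, left_mean, right_mean) minimizing squared error.
    """
    pairs = sorted(zip(x, r))
    n = len(pairs)
    total = sum(ri for _, ri in pairs)
    best, left_sum = None, 0.0
    for k in range(1, n):
        left_sum += pairs[k - 1][1]
        if pairs[k][0] == pairs[k - 1][0]:
            continue  # no valid split between equal x values
        right_sum = total - left_sum
        # Maximizing this score is equivalent to minimizing the RSS of the split.
        score = left_sum ** 2 / k + right_sum ** 2 / (n - k)
        if best is None or score > best[0]:
            threshold = (pairs[k - 1][0] + pairs[k][0]) / 2
            best = (score, threshold, left_sum / k, right_sum / (n - k))
    if best is None:  # all x equal: fall back to a constant fit
        return (float("inf"), total / n, total / n)
    return best[1:]


def stump_predict(stump, x0):
    threshold, left_mean, right_mean = stump
    return left_mean if x0 <= threshold else right_mean


def boost(x, y, B, lam):
    """Run B boosting steps; return the fitted stumps and the final residuals."""
    r = list(y)  # f_hat = 0 initially, so r_i = y_i
    stumps = []
    for _ in range(B):
        stump = fit_stump(x, r)  # fit f_hat^b to the current residuals
        stumps.append(stump)
        # r_i <- r_i - lam * f_hat^b(x_i)
        r = [ri - lam * stump_predict(stump, xi) for xi, ri in zip(x, r)]
    return stumps, r


def predict(stumps, lam, x0):
    """f_hat(x0) = sum over b of lam * f_hat^b(x0)."""
    return sum(lam * stump_predict(s, x0) for s in stumps)


# Toy data (an assumption for illustration): a smooth curve sampled on a grid.
x = [i / 20 for i in range(40)]
y = [math.sin(3 * xi) for xi in x]


def train_mse(B, lam=0.1):
    _, r = boost(x, y, B, lam)
    return sum(ri ** 2 for ri in r) / len(r)


mse_10, mse_200 = train_mse(10), train_mse(200)
```

Note that the residuals returned by `boost` are exactly \(y_i - \hat f(x_i)\) for the final model, so the training error can be read off them directly. Training error alone says nothing about overfitting.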

Boosting classification trees#

Boosting can also be done for classification, using an appropriately defined residual based on an offset in the log-odds.
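One common way to make this concrete is gradient boosting with the logistic loss. This is a sketch under assumptions and not necessarily the construction intended here. Take labels \(y_i \in \{0,1\}\) and let \(\hat f(x)\) model the log-odds, so that the fitted probability is

\[\hat p_i = \frac{1}{1+e^{-\hat f(x_i)}}.\]

The residual is then the negative gradient of the log-loss \(-[y_i \log \hat p_i + (1-y_i)\log(1-\hat p_i)]\) with respect to \(\hat f(x_i)\):

\[ r_i = y_i - \hat p_i.\]

Each step fits a tree \(\hat f^b\) to \(r_1,\dots,r_n\), updates \(\hat f(x) \leftarrow \hat f(x) + \lambda \hat f^b(x)\), and recomputes the \(r_i\) from the new \(\hat p_i\).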

Some intuition#

Boosting learns slowly.

Early trees capture the samples that are easiest to predict; these cases are then gradually down-weighted, and later trees move on to the harder samples.

The shrinkage parameter is \(\lambda=0.01\) in each case.

We can tune \(\lambda\), \(d\), and \(B\) by cross-validation.
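A cross-validation search over these parameters might look like the following sketch. It makes several assumptions: inputs are one-dimensional, \(d\) is held fixed at 1 (stumps) so only \(\lambda\) and \(B\) are searched, and the data are simulated. The names `fit_stump`, `val_mse_path`, and `cv_grid` are hypothetical. One boosting run up to `B_max` yields the validation error for every smaller \(B\), which keeps the search cheap.

```python
import math
import random


def fit_stump(x, r):
    """Best single split of 1-D x for squared error: (threshold, left_mean, right_mean)."""
    pairs = sorted(zip(x, r))
    n, total = len(pairs), sum(ri for _, ri in pairs)
    best, left_sum = None, 0.0
    for k in range(1, n):
        left_sum += pairs[k - 1][1]
        if pairs[k][0] == pairs[k - 1][0]:
            continue  # no valid split between equal x values
        right_sum = total - left_sum
        score = left_sum ** 2 / k + right_sum ** 2 / (n - k)
        if best is None or score > best[0]:
            best = (score, (pairs[k - 1][0] + pairs[k][0]) / 2,
                    left_sum / k, right_sum / (n - k))
    if best is None:  # all x equal: constant fit
        return (float("inf"), total / n, total / n)
    return best[1:]


def val_mse_path(x_tr, y_tr, x_va, y_va, B, lam):
    """Validation MSE after each of b = 1..B boosting steps."""
    r = list(y_tr)
    pred = [0.0] * len(x_va)
    path = []
    for _ in range(B):
        t, left, right = fit_stump(x_tr, r)
        r = [ri - lam * (left if xi <= t else right) for xi, ri in zip(x_tr, r)]
        pred = [p + lam * (left if xi <= t else right) for xi, p in zip(x_va, pred)]
        path.append(sum((p - yi) ** 2 for p, yi in zip(pred, y_va)) / len(y_va))
    return path


def cv_grid(x, y, lams, B_max, k=5, seed=0):
    """Return {(lam, B): mean k-fold validation MSE} for B = 1..B_max."""
    idx = list(range(len(x)))
    random.Random(seed).shuffle(idx)
    folds = [idx[j::k] for j in range(k)]
    scores = {}
    for lam in lams:
        paths = []
        for fold in folds:
            held = set(fold)
            tr = [i for i in idx if i not in held]
            paths.append(val_mse_path([x[i] for i in tr], [y[i] for i in tr],
                                      [x[i] for i in fold], [y[i] for i in fold],
                                      B_max, lam))
        for b in range(B_max):
            scores[(lam, b + 1)] = sum(p[b] for p in paths) / k
    return scores


# Simulated data (an assumption for illustration): noisy sine curve.
rng = random.Random(1)
x = [rng.uniform(0, 1) for _ in range(100)]
y = [math.sin(6 * xi) + rng.gauss(0, 0.3) for xi in x]

scores = cv_grid(x, y, lams=[0.01, 0.1, 0.5], B_max=200)
best_lam, best_B = min(scores, key=scores.get)
```

The pair `(best_lam, best_B)` is the grid point with the lowest mean validation error. Searching over \(d\) as well would require a tree fitter that supports more than one split.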