Bootstrap

Another resampling technique often seen in practice.

Cross-validation vs. the Bootstrap

Cross-validation: provides estimates of the (test) error

The Bootstrap: provides the (standard) error of estimates

Bootstrap

One of the most important techniques in all of Statistics.

Computer intensive method.

Popularized by Brad Efron \(\leftarrow\) Stanford pride!

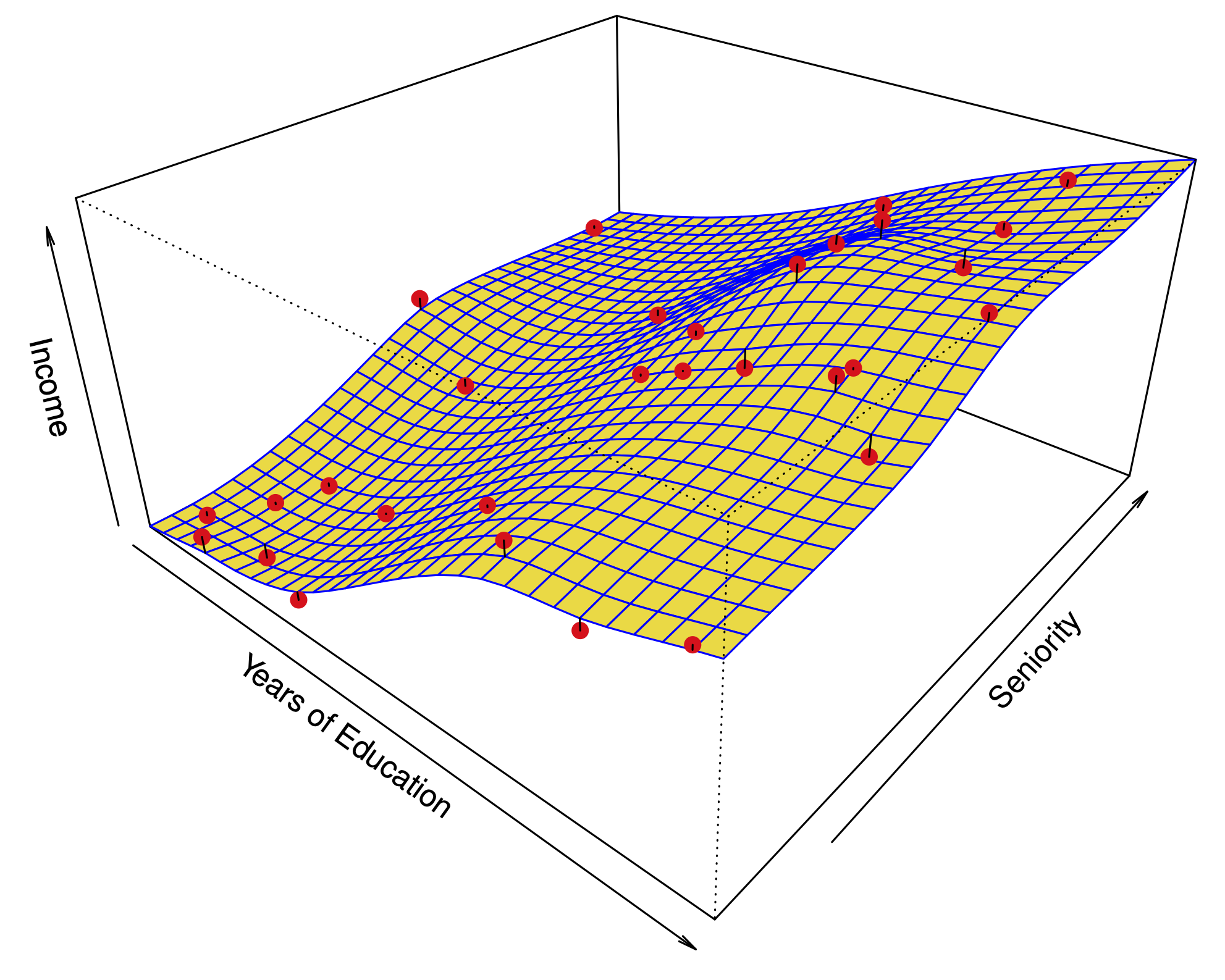

Standard errors in linear regression from a sample of size \(n\)

Classical way to compute Standard Errors

Example: Estimate the variance of a sample \(x_1,x_2,\dots,x_n\):

Unbiased estimate of \(\sigma^2\):

$$\hat \sigma^2 = \frac{1}{n-1}\sum_{i=1}^n (x_i-\overline x)^2.$$

What is the Standard Error of \(\hat \sigma^2\)?

Assume that \(x_1,\dots,x_n\) are normally distributed with common mean \(\mu\) and variance \(\sigma^2\).

Then \((n-1)\hat \sigma^2/\sigma^2\) has a \(\chi^2\) distribution with \(n-1\) degrees of freedom.

For large \(n\), \(\hat{\sigma}^2\) is approximately normally distributed around \(\sigma^2\).

The SD of this sampling distribution is the Standard Error.
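Under the normality assumption this standard error has a closed form. Since \(\operatorname{Var}(\chi^2_{n-1}) = 2(n-1)\), we get \(\operatorname{Var}(\hat\sigma^2) = \frac{\sigma^4}{(n-1)^2}\cdot 2(n-1) = \frac{2\sigma^4}{n-1}\), so \(\operatorname{SE}(\hat\sigma^2) = \sigma^2\sqrt{2/(n-1)}\), which is estimated by plugging in \(\hat\sigma^2\). The Python/NumPy sketch below computes this plug-in SE and checks the formula by simulation. The helper name `classical_se_of_variance` and the simulation settings (\(\sigma^2 = 4\), \(n = 50\)) are illustrative assumptions.

```python
import numpy as np

def classical_se_of_variance(x):
    """Plug-in standard error of the unbiased sample variance.

    Relies on the normality assumption: (n - 1) * s2 / sigma^2 ~ chi-squared(n - 1),
    so Var(s2) = 2 * sigma^4 / (n - 1); sigma^2 is replaced by its estimate s2.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    s2 = x.var(ddof=1)                    # divides by n - 1 (unbiased estimate)
    return s2 * np.sqrt(2.0 / (n - 1))

# Monte Carlo check, assuming normal data with sigma^2 = 4 and n = 50:
# the SD of many sample variances should match sigma^2 * sqrt(2 / (n - 1)).
rng = np.random.default_rng(0)
n, sigma2, reps = 50, 4.0, 20_000
sample_vars = rng.normal(0.0, np.sqrt(sigma2), size=(reps, n)).var(axis=1, ddof=1)
sim_se = sample_vars.std(ddof=1)
theo_se = sigma2 * np.sqrt(2.0 / (n - 1))
print(f"simulated SE {sim_se:.3f}, theoretical SE {theo_se:.3f}")

# In practice we only have one sample, so sigma^2 is unknown and we plug in s2.
one_sample = rng.normal(0.0, np.sqrt(sigma2), size=n)
print(f"plug-in SE from a single sample: {classical_se_of_variance(one_sample):.3f}")
```

Note that the closed form depends entirely on the normality assumption. If that assumption fails, the formula can be badly off.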

Limitations of the classical approach

This approach has served statisticians well for many years; however, what happens if:

The distributional assumption — for example, \(x_1,\dots,x_n\) being normal — breaks down?

The estimator does not have a simple form and its sampling distribution cannot be derived analytically?

The bootstrap can handle these departures from the usual assumptions!

Example: Investing in two assets

Suppose that \(X\) and \(Y\) are the returns of two assets.

These returns are observed every day: \((x_1,y_1),\dots,(x_n,y_n)\).

We have a fixed amount of money to invest, and we will invest a fraction \(\alpha\) in \(X\) and a fraction \((1-\alpha)\) in \(Y\).

Therefore, our return will be

$$\alpha X + (1-\alpha) Y.$$

Our goal will be to minimize the variance of our return as a function of \(\alpha\).

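One can show that the optimal \(\alpha\) is:

$$\alpha = \frac{\sigma_Y^2 - \sigma_{XY}}{\sigma_X^2 + \sigma_Y^2 - 2\sigma_{XY}},$$

where \(\sigma_X^2 = \operatorname{Var}(X)\), \(\sigma_Y^2 = \operatorname{Var}(Y)\), and \(\sigma_{XY} = \operatorname{Cov}(X,Y)\).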
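The optimal \(\alpha\) comes from minimizing the variance of the return. Write \(\sigma_X^2 = \operatorname{Var}(X)\), \(\sigma_Y^2 = \operatorname{Var}(Y)\), and \(\sigma_{XY} = \operatorname{Cov}(X,Y)\). Setting the derivative with respect to \(\alpha\) to zero gives:

$$
\begin{aligned}
\operatorname{Var}\big(\alpha X + (1-\alpha)Y\big) &= \alpha^2\sigma_X^2 + (1-\alpha)^2\sigma_Y^2 + 2\alpha(1-\alpha)\sigma_{XY},\\
\frac{d}{d\alpha}\operatorname{Var}\big(\alpha X + (1-\alpha)Y\big) &= 2\alpha\sigma_X^2 - 2(1-\alpha)\sigma_Y^2 + 2(1-2\alpha)\sigma_{XY} = 0\\
&\iff \alpha\,(\sigma_X^2 + \sigma_Y^2 - 2\sigma_{XY}) = \sigma_Y^2 - \sigma_{XY}.
\end{aligned}
$$

The second derivative, \(2(\sigma_X^2 + \sigma_Y^2 - 2\sigma_{XY}) = 2\operatorname{Var}(X - Y)\), is positive whenever \(X - Y\) is not constant. This confirms the stationary point is a minimum.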

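Proposal: use the plug-in estimate, replacing the variances \(\sigma_X^2, \sigma_Y^2\) and the covariance \(\sigma_{XY}\) by their sample estimates:

$$\widehat\alpha = \frac{\widehat\sigma_Y^2 - \widehat\sigma_{XY}}{\widehat\sigma_X^2 + \widehat\sigma_Y^2 - 2\widehat\sigma_{XY}}.$$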
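Here is a minimal Python/NumPy sketch of this plug-in estimate. The function name `alpha_hat` is hypothetical, and the four-day returns are made-up toy numbers. The function reads the sample variances and covariance from the \(2\times 2\) matrix returned by `np.cov`, then applies \(\widehat\alpha = (\widehat\sigma_Y^2 - \widehat\sigma_{XY})/(\widehat\sigma_X^2 + \widehat\sigma_Y^2 - 2\widehat\sigma_{XY})\).

```python
import numpy as np

def alpha_hat(x, y):
    """Plug-in estimate of the variance-minimizing fraction invested in X."""
    c = np.cov(x, y)  # 2x2 sample covariance matrix of (x, y), divides by n - 1
    var_x, var_y, cov_xy = c[0, 0], c[1, 1], c[0, 1]
    return (var_y - cov_xy) / (var_x + var_y - 2.0 * cov_xy)

# Toy returns over four days (purely illustrative). X and Y play symmetric
# roles here, so the estimate splits the money evenly.
x = [1.0, 2.0, 3.0, 4.0]
y = [2.0, 1.0, 4.0, 3.0]
print(alpha_hat(x, y))
```

Whether the variances divide by \(n\) or \(n-1\) does not matter here. The same factor appears in the numerator and denominator and cancels.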

Suppose we compute the estimate \(\widehat\alpha = 0.6\) using the samples \((x_1,y_1),\dots,(x_n,y_n)\).

How sure can we be of this value? (A somewhat vague question.)

If we had observed the returns on a different set of 100 days, would we get a wildly different \(\widehat \alpha\)? (A more precise question.)

Resampling the data from the true distribution

In this thought experiment, we know the actual joint distribution \(P(X,Y)\), so we can resample the \(n\) observations to our hearts’ content.

Computing the standard error of \(\widehat \alpha\)

We will use \(S\) samples to estimate the standard error of \(\widehat{\alpha}\).

For each sample \(s\), \(1 \leq s \leq S\), each consisting of \(n\) fresh observations, we compute a value of the estimate. This gives \(\widehat \alpha^{(1)},\widehat \alpha^{(2)},\dots,\widehat \alpha^{(S)}\).

The Standard Error of \(\widehat \alpha\) is approximated by the standard deviation of these values.
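A minimal Python/NumPy simulation of this thought experiment follows. For illustration only, it assumes bivariate normal returns with \(\operatorname{Var}(X) = 1\), \(\operatorname{Var}(Y) = 1.25\), and \(\operatorname{Cov}(X,Y) = 0.5\), which makes the true \(\alpha = 0.6\). It also assumes \(n = 100\) and \(S = 1000\). The helper `alpha_hat` is a hypothetical name for the plug-in estimate.

```python
import numpy as np

def alpha_hat(x, y):
    c = np.cov(x, y)  # sample variances on the diagonal, covariance off it
    return (c[1, 1] - c[0, 1]) / (c[0, 0] + c[1, 1] - 2.0 * c[0, 1])

# Assumed "true" distribution (illustrative): bivariate normal with
# Var(X) = 1, Var(Y) = 1.25, Cov(X, Y) = 0.5, so the true alpha is
# (1.25 - 0.5) / (1 + 1.25 - 2 * 0.5) = 0.6.
mean = np.zeros(2)
cov = np.array([[1.0, 0.5], [0.5, 1.25]])
n, S = 100, 1000
rng = np.random.default_rng(1)

alphas = np.empty(S)
for s in range(S):
    sample = rng.multivariate_normal(mean, cov, size=n)  # n fresh days from P(X, Y)
    alphas[s] = alpha_hat(sample[:, 0], sample[:, 1])

se_true = alphas.std(ddof=1)  # SD of the S estimates approximates SE(alpha_hat)
print(f"mean of estimates: {alphas.mean():.3f}, SE estimate: {se_true:.3f}")
```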

In reality, we only have \(n\) samples

However, these samples can be used to approximate the joint distribution of \(X\) and \(Y\).

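The Bootstrap: sample from the empirical distribution, which places probability \(1/n\) on each observed pair:

$$\widehat P(X,Y) = \frac{1}{n}\sum_{i=1}^n \delta_{(x_i,y_i)},$$

where \(\delta_{(x_i,y_i)}\) is a point mass at the \(i\)-th observation.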

Equivalently, resample the data by drawing \(n\) samples with replacement from the actual observations.

Why it works: variances computed under the empirical distribution are good approximations of variances computed under the true distribution (in many cases).
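Below is a minimal Python/NumPy sketch of the bootstrap standard error of \(\widehat\alpha\). It draws \(n\) row indices with replacement, so each \((x_i, y_i)\) pair stays together. The names `alpha_hat` and `bootstrap_se`, the number of resamples `B = 1000`, and the seeds are illustrative assumptions. The "observed" data are simulated from the same assumed bivariate normal (true \(\alpha = 0.6\)), because the original data are not available here.

```python
import numpy as np

def alpha_hat(x, y):
    c = np.cov(x, y)
    return (c[1, 1] - c[0, 1]) / (c[0, 0] + c[1, 1] - 2.0 * c[0, 1])

def bootstrap_se(x, y, B=1000, seed=0):
    """Bootstrap SE of alpha_hat: resample the n observed (x_i, y_i) pairs
    with replacement B times and take the SD of the B estimates."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    n = x.size
    rng = np.random.default_rng(seed)
    boot = np.empty(B)
    for b in range(B):
        idx = rng.integers(0, n, size=n)      # n indices drawn with replacement
        boot[b] = alpha_hat(x[idx], y[idx])   # same idx for x and y: pairs kept together
    return boot.std(ddof=1), boot

# One "observed" data set of n = 100 days (simulated for illustration).
rng = np.random.default_rng(2)
data = rng.multivariate_normal([0.0, 0.0], [[1.0, 0.5], [0.5, 1.25]], size=100)
x_obs, y_obs = data[:, 0], data[:, 1]

se_boot, boot_alphas = bootstrap_se(x_obs, y_obs)
print(f"alpha_hat = {alpha_hat(x_obs, y_obs):.3f}, bootstrap SE = {se_boot:.3f}")
```

Unlike the previous simulation, this uses only the \(n\) observed pairs and never touches the true distribution.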

A schematic of the Bootstrap

Comparing Bootstrap sampling to sampling from the true distribution

Left panel: the sampling distribution of \(\widehat{\alpha}\) over data sets drawn from the true distribution, centered (approximately) at the true \(\alpha\).

Middle panel: the bootstrap distribution of \(\widehat{\alpha}\) over resamples of the observed data, centered (approximately) at the observed \(\widehat{\alpha}\).
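The sketch below reproduces both panels numerically with Python/NumPy. The assumptions are the same illustrative ones: bivariate normal returns with true \(\alpha = 0.6\), \(n = 100\), and \(R = 1000\) draws. The helper `alpha_hat` is a hypothetical name, and the seed is arbitrary. The two centers should differ (true \(\alpha\) versus observed \(\widehat\alpha\)), while the two standard deviations are typically similar. That similarity is what makes the bootstrap SE useful.

```python
import numpy as np

def alpha_hat(x, y):
    c = np.cov(x, y)
    return (c[1, 1] - c[0, 1]) / (c[0, 0] + c[1, 1] - 2.0 * c[0, 1])

# Assumed true distribution (illustrative parameters, true alpha = 0.6).
mean, cov = np.zeros(2), np.array([[1.0, 0.5], [0.5, 1.25]])
n, R = 100, 1000
rng = np.random.default_rng(3)

# Left panel: draw a fresh data set of n days from the true distribution R times.
sampling = np.array([alpha_hat(*rng.multivariate_normal(mean, cov, size=n).T)
                     for _ in range(R)])

# Middle panel: a single observed data set, resampled with replacement R times.
obs = rng.multivariate_normal(mean, cov, size=n)
alpha_obs = alpha_hat(obs[:, 0], obs[:, 1])
bootstrap = np.array([alpha_hat(*obs[rng.integers(0, n, size=n)].T)
                      for _ in range(R)])

print(f"sampling:  center {sampling.mean():.3f} (true alpha 0.6), SD {sampling.std(ddof=1):.3f}")
print(f"bootstrap: center {bootstrap.mean():.3f} (observed {alpha_obs:.3f}), SD {bootstrap.std(ddof=1):.3f}")
```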