Multiple linear regression

Contents

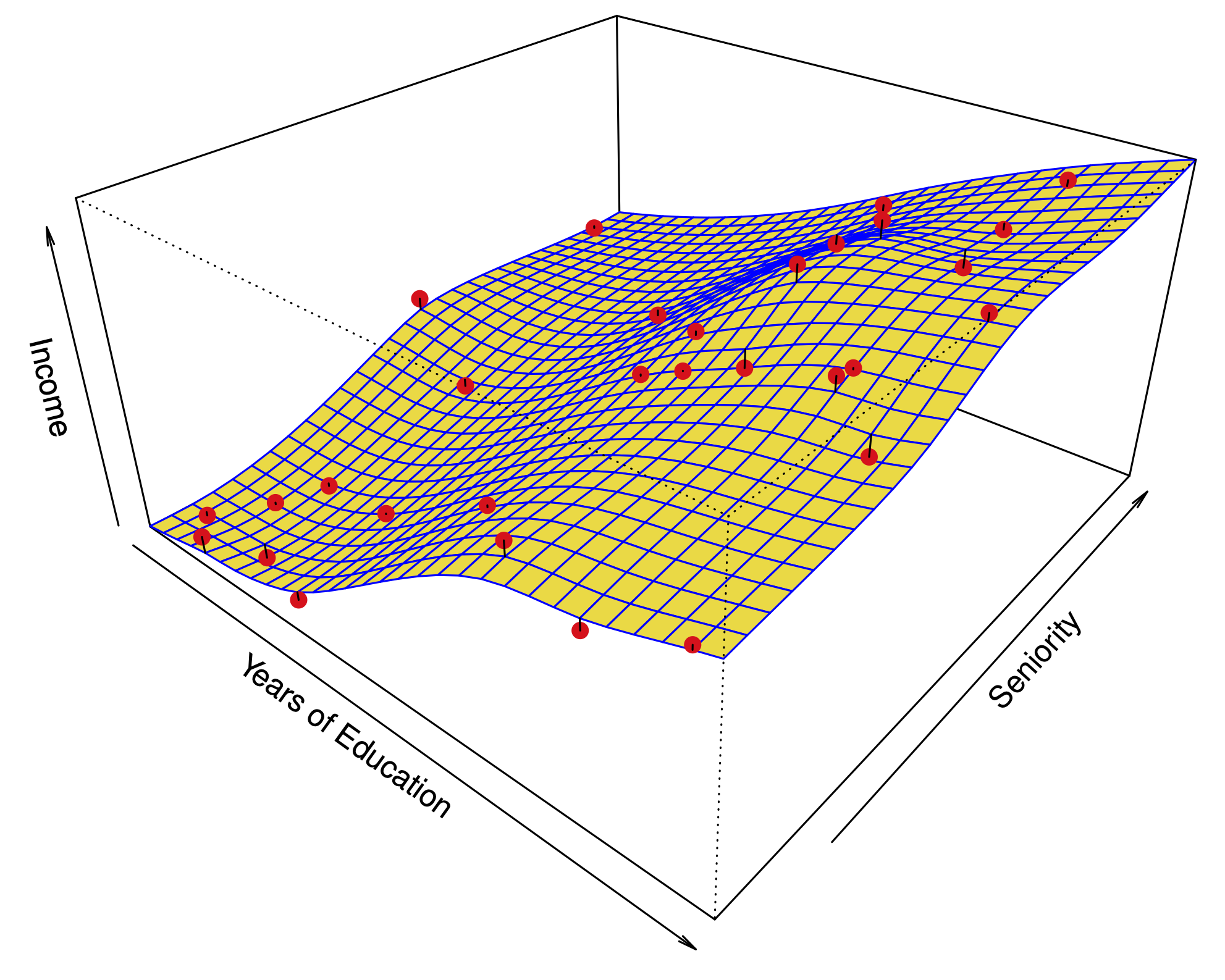

Multiple linear regression#

Fig. 11 Multiple linear regression#

Model#

Errors: \(\varepsilon_i \sim N(0,\sigma^2)\quad \text{i.i.d.}\)

Fit: the estimates \(\hat\beta_0\) and \(\hat\beta_1\) are chosen to minimize the residual sum of squares (RSS):

Matrix notation: with \(\beta=(\beta_0,\dots,\beta_p)\) and \({X}\) our usual data matrix with an extra column of ones on the left to account for the intercept, we can write

Multiple linear regression answers several questions#

Is at least one of the variables \(X_i\) useful for predicting the outcome \(Y\)?

Which subset of the predictors is most important?

How good is a linear model for these data?

Given a set of predictor values, what is a likely value for \(Y\), and how accurate is this prediction?

The estimates \(\hat\beta\)#

Our goal again is to minimize the RSS: $\( \begin{aligned} \text{RSS}(\beta) &= \sum_{i=1}^n (y_i -\hat y_i(\beta))^2 \\ & = \sum_{i=1}^n (y_i - \beta_0- \beta_1 x_{i,1}-\dots-\beta_p x_{i,p})^2 \\ &= \|Y-X\beta\|^2_2 \end{aligned} \)$

One can show that this is minimized by the vector \(\hat\beta\): $\(\hat\beta = ({X}^T{X})^{-1}{X}^T{y}.\)$

We usually write \(RSS=RSS(\hat{\beta})\) for the minimized RSS.

Which variables are important?#

Consider the hypothesis: \(H_0:\) the last \(q\) predictors have no relation with \(Y\).

Based on our model: \(H_0:\beta_{p-q+1}=\beta_{p-q+2}=\dots=\beta_p=0.\)

Let \(\text{RSS}_0\) be the minimized residual sum of squares for the model which excludes these variables.

The \(F\)-statistic is defined by: $\(F = \frac{(\text{RSS}_0-\text{RSS})/q}{\text{RSS}/(n-p-1)}.\)$

Under the null hypothesis (of our model), this has an \(F\)-distribution.

Example: If \(q=p\), we test whether any of the variables is important. $\(\text{RSS}_0 = \sum_{i=1}^n(y_i-\overline y)^2 \)$

library(MASS) # where Boston data is stored

M.Boston = lm(medv ~ ., data=Boston)

summary(M.Boston)

library(MASS) # where Boston data is stored

M0.Boston = lm(medv ~ . -indus - zn, data=Boston)

anova(M0.Boston, M.Boston)

| Res.Df | RSS | Df | Sum of Sq | F | Pr(>F) |

|---|---|---|---|---|---|

| <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> |

| 494 | 11336.29 | NA | NA | NA | NA |

| 492 | 11078.78 | 2 | 257.5076 | 5.717853 | 0.003509036 |

The \(t\)-statistic associated to the \(i\)th predictor is the square root of the \(F\)-statistic for the null hypothesis which sets only \(\beta_i=0\).

A low \(p\)-value indicates that the predictor is important.

Warning: If there are many predictors, even under the null hypothesis, some of the \(t\)-tests will have low p-values even when the model has no explanatory power.

How many variables are important?#

When we select a subset of the predictors, we have \(2^p\) choices.

A way to simplify the choice is to define a range of models with an increasing number of variables, then select the best.

Forward selection: Starting from a null model, include variables one at a time, minimizing the RSS at each step.

Backward selection: Starting from the full model, eliminate variables one at a time, choosing the one with the largest p-value at each step.

Mixed selection: Starting from some model, include variables one at a time, minimizing the RSS at each step. If the p-value for some variable goes beyond a threshold, eliminate that variable.

Choosing one model in the range produced is a form of tuning. This tuning can invalidate some of our methods like hypothesis tests and confidence intervals…

How good are the predictions?#

The function

predictin R outputs predictions and confidence intervals from a linear model:

library(ISLR)

Advertising = read.csv("https://www.statlearning.com/s/Advertising.csv", header=TRUE, row.names=1)

M.sales = lm(sales ~ TV, data=Advertising)

predict(M.sales, data.frame(TV=c(50,150,250)), interval='confidence', level=0.95)

| fit | lwr | upr |

|---|---|---|

| 9.409426 | 8.722696 | 10.09616 |

| 14.163090 | 13.708423 | 14.61776 |

| 18.916754 | 18.206189 | 19.62732 |

Prediction intervals reflect uncertainty on \(\hat\beta\) and the irreducible error \(\varepsilon\) as well.

predict(M.sales, data.frame(TV=c(50,150,250)), interval='prediction', level=0.95)

| fit | lwr | upr |

|---|---|---|

| 9.409426 | 2.946709 | 15.87214 |

| 14.163090 | 7.720898 | 20.60528 |

| 18.916754 | 12.451461 | 25.38205 |

These functions rely on our linear regression model $\( Y = X\beta + \epsilon. \)$

Dealing with categorical or qualitative predictors#

- Example: Credit dataset trying to predict $Y=$ `Balance`.

For each qualitative predictor, e.g.

Region:Choose a baseline category, e.g.

EastFor every other category, define a new predictor:

\(X_\text{South}\) is 1 if the person is from the

Southregion and 0 otherwise\(X_\text{West}\) is 1 if the person is from the

Westregion and 0 otherwise.

The model will be: $\(Y = \beta_0 + \beta_1 X_1 +\dots +\beta_7 X_7 + \color{Red}{\beta_\text{South}} X_\text{South} + \beta_\text{West} X_\text{West} +\varepsilon.\)$

The parameter \(\color{Red}{\beta_\text{South}}\) is the relative effect on

Balance(our \(Y\)) for being from the South compared to the baseline category (East).

The model fit and predictions are independent of the choice of the baseline category.

However, hypothesis tests derived from these variables are affected by the choice.

Solution: To check whether

regionis important, use an \(F\)-test for the hypothesis \(\beta_\text{South}=\beta_\text{West}=0\) by droppingRegionfrom the model. This does not depend on the coding.Note that there are other ways to encode qualitative predictors produce the same fit \(\hat f\), but the coefficients have different interpretations.

Recap#

So far, we have:

Defined Multiple Linear Regression

Discussed how to test the importance of variables.

Described one approach to choose a subset of variables.

Explained how to code qualitative variables.

Now, how do we evaluate model fit? Is the linear model any good? What can go wrong?

How good is the fit?#

To assess the fit, we focus on the residuals $\( e = Y - \hat{Y} \)$

The RSS always decreases as we add more variables.

The residual standard error (RSE) corrects this: $\(\text{RSE} = \sqrt{\frac{1}{n-p-1}\text{RSS}}.\)$

Fig. 12 Residuals#

Visualizing the residuals can reveal phenomena that are not accounted for by the model; eg. synergies or interactions:

Potential issues in linear regression#

Interactions between predictors

Non-linear relationships

Correlation of error terms

Non-constant variance of error (heteroskedasticity)

Outliers

High leverage points

Collinearity

Interactions between predictors#

Linear regression has an additive assumption: $\(\mathtt{sales} = \beta_0 + \beta_1\times\mathtt{tv}+ \beta_2\times\mathtt{radio}+\varepsilon\)$

i.e. An increase of 100 USD dollars in TV ads causes a fixed increase of \(100 \beta_2\) USD in sales on average, regardless of how much you spend on radio ads.

We saw that in Fig 3.5 above. If we visualize the fit and the observed points, we see they are not evenly scattered around the plane. This could be caused by an interaction.

One way to deal with this is to include multiplicative variables in the model:

The interaction variable

tv\(\cdot\)radiois high when bothtvandradioare high.

R makes it easy to include interaction variables in the model:

summary(lm(sales ~ TV + radio + radio:TV, data=Advertising))

M = lm(sales ~ TV + radio + radio:TV, data=Advertising)

predict(M, data.frame(TV=50, radio=40), interval='confidence')

Non-linearities#

Fig. 13 A nonlinear fit might be better here.#

Example: Auto dataset.

A scatterplot between a predictor and the response may reveal a non-linear relationship.

Solution: include polynomial terms in the model.

Could use other functions besides polynomials…

Fig. 14 Residuals for Auto data#

In 2 or 3 dimensions, this is easy to visualize. What do we do when we have too many predictors?

Correlation of error terms#

We assumed that the errors for each sample are independent:

What if this breaks down?

The main effect is that this invalidates any assertions about Standard Errors, confidence intervals, and hypothesis tests…

Example: Suppose that by accident, we duplicate the data (we use each sample twice). Then, the standard errors would be artificially smaller by a factor of \(\sqrt{2}\).

When could this happen in real life:

Time series: Each sample corresponds to a different point in time. The errors for samples that are close in time are correlated.

Spatial data: Each sample corresponds to a different location in space.

Grouped data: Imagine a study on predicting height from weight at birth. If some of the subjects in the study are in the same family, their shared environment could make them deviate from \(f(x)\) in similar ways.

Non-constant variance of error (heteroskedasticity)#

The variance of the error depends on some characteristics of the input features.

To diagnose this, we can plot residuals vs. fitted values:

If the trend in variance is relatively simple, we can transform the response using a logarithm, for example.

Outliers#

Outliers from a model are points with very high errors.

While they may not affect the fit, they might affect our assessment of model quality.

Possible solutions:#

If we believe an outlier is due to an error in data collection, we can remove it.

An outlier might be evidence of a missing predictor, or the need to specify a more complex model.

High leverage points#

Some samples with extreme inputs have an outsized effect on \(\hat \beta\).

This can be measured with the leverage statistic or self influence:

Studentized residuals#

The residual \(e_i = y_i - \hat y_i\) is an estimate for the noise \(\epsilon_i\).

The standard error of \(\hat \epsilon_i\) is \(\sigma \sqrt{1-h_{ii}}\).

A studentized residual is \(\hat \epsilon_i\) divided by its standard error (with appropriate estimate of \(\sigma\))

When model is correct, it follows a Student-t distribution with \(n-p-2\) degrees of freedom.

Collinearity#

Two predictors are collinear if one explains the other well:

Problem: The coefficients become unidentifiable.

Consider the extreme case of using two identical predictors

limit: $\( \begin{aligned} \mathtt{balance} &= \beta_0 + \beta_1\times\mathtt{limit} + \beta_2\times\mathtt{limit} + \epsilon \\ & = \beta_0 + (\beta_1+100)\times\mathtt{limit} + (\beta_2-100)\times\mathtt{limit} + \epsilon \end{aligned} \)$For every \((\beta_0,\beta_1,\beta_2)\) the fit at \((\beta_0,\beta_1,\beta_2)\) is just as good as at \((\beta_0,\beta_1+100,\beta_2-100)\).

If 2 variables are collinear, we can easily diagnose this using their correlation.

A group of \(q\) variables is multilinear if these variables “contain less information” than \(q\) independent variables.

Pairwise correlations may not reveal multilinear variables.

The Variance Inflation Factor (VIF) measures how predictable it is given the other variables, a proxy for how necessary a variable is:

Above, \(R^2_{X_j|X_{-j}}\) is the \(R^2\) statistic for Multiple Linear regression of the predictor \(X_j\) onto the remaining predictors.