Splines


Cubic splines#

Define a set of knots \(\xi_1< \xi_2 < \dots<\xi_K\).

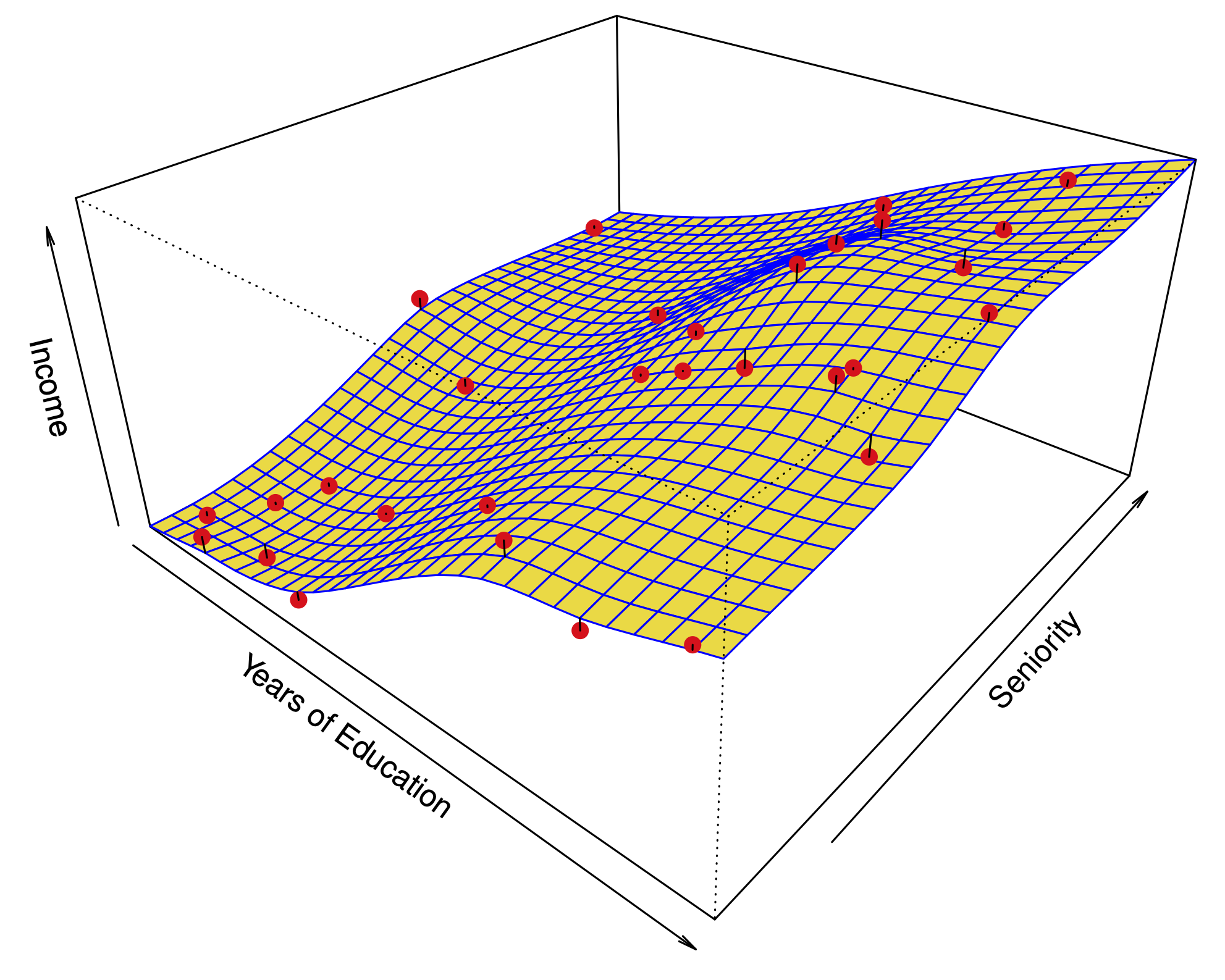

We want the function \(f\) in \(Y= f(X) + \epsilon\) to:

Be a cubic polynomial between every pair of knots \(\xi_i,\xi_{i+1}\).

Be continuous at each knot.

Have continuous first and second derivatives at each knot.

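It turns out that we can write \(f\) in terms of \(K+3\) basis functions:

\[ f(X) = \beta_0 + \beta_1 X + \beta_2 X^2 + \beta_3 X^3 + \beta_4 h(X,\xi_1) + \dots + \beta_{K+3} h(X,\xi_K). \]

Above,

\[ h(x,\xi) = \begin{cases} (x-\xi)^3 & \text{if } x > \xi, \\ 0 & \text{otherwise.} \end{cases} \]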
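As a minimal sketch of this representation, the code below builds a design matrix with columns \(1, X, X^2, X^3\) and one truncated cubic \(h(X,\xi_k)\) per knot, where \(h(x,\xi) = (x-\xi)^3\) for \(x>\xi\) and \(0\) otherwise, and then fits the coefficients by least squares. The function names, the simulated data and the knot positions are illustrative assumptions, not part of the notes.

```python
import numpy as np

def truncated_power_basis(x, knots):
    """Design matrix with columns 1, x, x^2, x^3 and (x - xi_k)_+^3 for each knot."""
    x = np.asarray(x, dtype=float)
    knots = np.asarray(knots, dtype=float)
    poly = np.column_stack([x**p for p in range(4)])
    # h(x, xi) = (x - xi)^3 if x > xi, else 0
    trunc = np.maximum(x[:, None] - knots[None, :], 0.0) ** 3
    return np.hstack([poly, trunc])

def fit_cubic_spline(x, y, knots):
    """Least-squares coefficients (intercept first) of the cubic spline."""
    B = truncated_power_basis(x, knots)
    beta, *_ = np.linalg.lstsq(B, y, rcond=None)
    return beta

# Simulated data (assumption: a smooth signal plus Gaussian noise).
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0.0, 1.0, 200))
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=x.size)

knots = np.quantile(x, [0.25, 0.5, 0.75])   # K = 3 knots
beta = fit_cubic_spline(x, y, knots)        # K + 3 basis coefficients plus an intercept
y_hat = truncated_power_basis(x, knots) @ beta
```

Because each truncated cubic has continuous first and second derivatives at its knot, any linear combination of these columns satisfies the three conditions listed above.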

Natural cubic splines#

A natural cubic spline is a cubic spline that is constrained to be linear, instead of cubic, for \(X<\xi_1\) and \(X>\xi_K\).

The predictions are more stable for extreme values of \(X\).
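One way to see the boundary constraint concretely is to build a natural cubic spline basis and check that it has no curvature beyond the outer knots. The sketch below uses a standard construction from truncated cubic functions, which the notes do not spell out; the function name `natural_cubic_basis` and the knot values are hypothetical.

```python
import numpy as np

def natural_cubic_basis(x, knots):
    """K basis functions of a natural cubic spline with K knots.

    N_1 = 1, N_2 = x, N_{k+2} = d_k - d_{K-1} for k = 1..K-2, where
    d_k(x) = [(x - xi_k)_+^3 - (x - xi_K)_+^3] / (xi_K - xi_k).
    """
    x = np.asarray(x, dtype=float)
    knots = np.asarray(knots, dtype=float)
    K = knots.size

    def d(k):  # k is a 0-based knot index
        num = np.maximum(x - knots[k], 0.0) ** 3 - np.maximum(x - knots[-1], 0.0) ** 3
        return num / (knots[-1] - knots[k])

    cols = [np.ones_like(x), x] + [d(k) - d(K - 2) for k in range(K - 2)]
    return np.column_stack(cols)

knots = np.array([0.2, 0.4, 0.6, 0.8])
# Equally spaced points beyond the last knot and before the first knot.
right = natural_cubic_basis(np.linspace(0.9, 1.5, 7), knots)
left = natural_cubic_basis(np.linspace(-0.5, 0.1, 7), knots)

# Second differences vanish for a linear function on an equally spaced grid.
max_curv_right = np.abs(np.diff(right, n=2, axis=0)).max()
max_curv_left = np.abs(np.diff(left, n=2, axis=0)).max()
```

Both `max_curv_right` and `max_curv_left` should be zero up to rounding error, which is the linearity constraint that stabilizes predictions at extreme values of \(X\). Note that the basis has \(K\) functions (including the constant), compared with \(K+4\) for an unconstrained cubic spline.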

Choosing the number and locations of knots#

The locations of the knots are typically quantiles of \(X\).

The number of knots, \(K\), is chosen by cross validation.
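The two rules above can be sketched as follows. This example places \(K\) knots at evenly spaced interior quantiles of \(X\) and compares candidate values of \(K\) by 5-fold cross validation. The quantile levels \(k/(K+1)\), the number of folds, the candidate list, the helper names and the simulated data are all assumptions made for illustration.

```python
import numpy as np

def spline_design(x, knots):
    """Cubic spline design matrix: 1, x, x^2, x^3 and (x - xi_k)_+^3 per knot."""
    x = np.asarray(x, dtype=float)
    knots = np.asarray(knots, dtype=float)
    poly = np.column_stack([x**p for p in range(4)])
    trunc = np.maximum(x[:, None] - knots[None, :], 0.0) ** 3
    return np.hstack([poly, trunc])

def quantile_knots(x, K):
    """K knots at the evenly spaced interior quantiles k / (K + 1) of x."""
    return np.quantile(x, np.arange(1, K + 1) / (K + 1))

def cv_mse(x, y, K, n_folds=5, seed=0):
    """Mean squared prediction error of a K-knot cubic spline under k-fold CV."""
    idx = np.random.default_rng(seed).permutation(x.size)
    sq_err = 0.0
    for test in np.array_split(idx, n_folds):
        train = np.setdiff1d(idx, test)
        knots = quantile_knots(x[train], K)   # knots use the training fold only
        beta, *_ = np.linalg.lstsq(spline_design(x[train], knots), y[train], rcond=None)
        sq_err += np.sum((y[test] - spline_design(x[test], knots) @ beta) ** 2)
    return sq_err / x.size

# Simulated data (assumption).
rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, 300)
y = np.sin(6 * x) + rng.normal(scale=0.3, size=x.size)

candidates = [1, 2, 3, 5, 8, 12]
scores = {K: cv_mse(x, y, K) for K in candidates}
best_K = min(scores, key=scores.get)
```

Recomputing the knots inside each fold keeps the held-out points from influencing the knot locations.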

Natural cubic splines vs. polynomial regression#

Splines can fit complex functions with few parameters.

Polynomials require high degree terms to be flexible.

High-degree polynomials can be unstable at the edges.

Smoothing splines#

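Find the function \(f\) which minimizes

\[ \sum_{i=1}^n (y_i - f(x_i))^2 + \lambda \int f''(x)^2 \, dx. \]

The first term is the RSS when using \(f\) to predict.

The second term is a penalty for the roughness of the function, weighted by the smoothing parameter \(\lambda \geq 0\).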

Facts#

The minimizer \(\hat f\) is a natural cubic spline, with knots at each sample point \(x_1,\dots,x_n\).

Obtaining \(\hat f\) is similar to a Ridge regression.

Advanced: deriving a smoothing spline#

Show that if you fix the values \(f(x_1),\dots,f(x_n)\), the roughness

\[ \int f''(x)^2 \, dx \]

is minimized by a natural cubic spline.
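A sketch of the standard argument, assuming the sample points are sorted and distinct and that every candidate function is twice differentiable on an interval \([a,b]\) containing them. Let \(g\) be the natural cubic spline interpolating the fixed values, let \(\tilde g\) be any other function taking the same values at \(x_1,\dots,x_n\), and set \(h = \tilde g - g\), so that \(h(x_i)=0\) for every \(i\). Integrating by parts,

\[ \int_a^b g''(t)\,h''(t)\,dt = \Big[g''(t)\,h'(t)\Big]_a^b - \int_a^b g'''(t)\,h'(t)\,dt = -\sum_{i=1}^{n-1} c_i \big(h(x_{i+1}) - h(x_i)\big) = 0, \]

because \(g''(a)=g''(b)=0\) (the spline is linear outside \([x_1,x_n]\)) and \(g'''\) equals a constant \(c_i\) on each interval \((x_i,x_{i+1})\) and vanishes outside \([x_1,x_n]\). Therefore

\[ \int_a^b \tilde g''(t)^2\,dt = \int_a^b g''(t)^2\,dt + \int_a^b h''(t)^2\,dt \;\geq\; \int_a^b g''(t)^2\,dt, \]

with equality only if \(h''=0\), that is, \(h\) is linear; since \(h\) vanishes at \(n \geq 2\) points, this forces \(h=0\).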

Deduce that the solution to the smoothing spline problem is a natural cubic spline, which can be written in terms of its basis functions.

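Letting \(\mathbf N\) be a matrix with \(\mathbf N(i,j) = N_j(x_i)\), where \(N_1,\dots,N_n\) are the basis functions, we can write the objective function:

\[ (y - \mathbf N\beta)^T (y - \mathbf N\beta) + \lambda \beta^T \Omega_{\mathbf N} \beta, \]

where \(\Omega_{\mathbf N}(i,j) = \int N_i''(t) N_j''(t) dt\).

By simple calculus, the coefficients \(\hat \beta\) which minimize

\[ (y - \mathbf N\beta)^T (y - \mathbf N\beta) + \lambda \beta^T \Omega_{\mathbf N} \beta \]

are \(\hat \beta = (\mathbf N^T \mathbf N + \lambda \Omega_{\mathbf N})^{-1} \mathbf N^T y\).

Note that the predicted values are a linear function of the observed values:

\[ \hat y = \mathbf N (\mathbf N^T \mathbf N + \lambda \Omega_{\mathbf N})^{-1} \mathbf N^T y = \mathbf S_\lambda y. \]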
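The closed form \(\hat \beta = (\mathbf N^T \mathbf N + \lambda \Omega_{\mathbf N})^{-1} \mathbf N^T y\) can be checked numerically on a small example. The sketch below assumes a particular natural cubic spline basis built from truncated cubics (the notes do not fix one), computes \(\Omega_{\mathbf N}\) with Simpson's rule, and forms the matrix \(\mathbf N (\mathbf N^T \mathbf N + \lambda \Omega_{\mathbf N})^{-1} \mathbf N^T\) that maps \(y\) to the fitted values. Function names, data and \(\lambda\) are illustrative, and this basis is only suitable for small \(n\) because it becomes ill-conditioned as knots are added.

```python
import numpy as np

def natural_basis(x, knots, deriv=0):
    """Natural cubic spline basis N_1..N_K at x (deriv=0) or its second derivative (deriv=2)."""
    x = np.asarray(x, dtype=float)
    K = knots.size

    def d(k):
        u, v = np.maximum(x - knots[k], 0.0), np.maximum(x - knots[-1], 0.0)
        num = u**3 - v**3 if deriv == 0 else 6.0 * (u - v)
        return num / (knots[-1] - knots[k])

    head = [np.ones_like(x), x] if deriv == 0 else [np.zeros_like(x)] * 2
    return np.column_stack(head + [d(k) - d(K - 2) for k in range(K - 2)])

def penalty_matrix(knots):
    """Omega(i, j) = integral of N_i'' N_j''. Simpson's rule is exact on each knot
    interval because the second derivatives are linear there."""
    a, b = knots[:-1], knots[1:]
    w = (b - a) / 6.0
    Da, Dm, Db = (natural_basis(t, knots, deriv=2) for t in (a, (a + b) / 2, b))
    return (Da.T * w) @ Da + 4.0 * (Dm.T * w) @ Dm + (Db.T * w) @ Db

# Small sorted sample with distinct points (assumption); knots sit at every x_i.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 10)
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=x.size)
lam = 1e-3

N = natural_basis(x, x)
A = N.T @ N + lam * penalty_matrix(x)
beta_hat = np.linalg.solve(A, N.T @ y)       # ridge-like normal equations
S = N @ np.linalg.solve(A, N.T)              # smoother matrix
y_hat = S @ y                                # fitted values, linear in y
```

The only difference from Ridge regression is that the penalty matrix is \(\Omega_{\mathbf N}\) rather than the identity, so constant and linear functions are not shrunk at all.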

Degrees of freedom#

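The degrees of freedom for a smoothing spline are:

\[ \text{Trace}(\mathbf S_\lambda) = \mathbf S_\lambda(1,1) + \mathbf S_\lambda(2,2) + \dots + \mathbf S_\lambda(n,n), \]

where \(\mathbf S_\lambda\) is the matrix that maps the observed values to the fitted values, \(\hat y = \mathbf S_\lambda y\).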
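To illustrate how the degrees of freedom vary with \(\lambda\), the sketch below takes them to be the trace of the matrix \(\mathbf S_\lambda\) that maps \(y\) to the fitted values. Instead of the basis form used in the notes, it uses an equivalent and better-conditioned expression, \(\mathbf S_\lambda = (\mathbf I + \lambda \mathbf K)^{-1}\) with \(\mathbf K = \mathbf Q \mathbf R^{-1} \mathbf Q^T\) built from the gaps between consecutive sample points (the Reinsch form). That substitution, the function names and the grid of \(\lambda\) values are assumptions of this example.

```python
import numpy as np

def roughness_matrix(x):
    """K = Q R^{-1} Q^T, so that g^T K g is the roughness of the natural cubic
    spline interpolating the values g at the sorted, distinct points x."""
    h = np.diff(x)
    n = x.size
    Q = np.zeros((n, n - 2))
    R = np.zeros((n - 2, n - 2))
    for j in range(n - 2):
        Q[j, j] = 1.0 / h[j]
        Q[j + 1, j] = -1.0 / h[j] - 1.0 / h[j + 1]
        Q[j + 2, j] = 1.0 / h[j + 1]
        R[j, j] = (h[j] + h[j + 1]) / 3.0
        if j + 1 < n - 2:
            R[j, j + 1] = R[j + 1, j] = h[j + 1] / 6.0
    K = Q @ np.linalg.solve(R, Q.T)
    return 0.5 * (K + K.T)   # remove rounding asymmetry

def degrees_of_freedom(x, lam):
    """Trace of S_lambda = (I + lam * K)^{-1}."""
    S = np.linalg.inv(np.eye(x.size) + lam * roughness_matrix(x))
    return np.trace(S)

x = np.linspace(0.0, 1.0, 20)
lams = [1e-9, 1e-6, 1e-4, 1e-2, 1e3]
dfs = [degrees_of_freedom(x, lam) for lam in lams]
```

The values in `dfs` decrease from nearly \(n\) (almost interpolation) toward 2 (a straight-line fit) as \(\lambda\) grows, since only constant and linear functions are unpenalized.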

Natural cubic splines vs. Smoothing splines#

| Natural cubic splines | Smoothing splines |
|---|---|
| Fix the number of knots \(K<n\) and their locations at quantiles of \(X\). | Put \(n\) knots at \(x_1,\dots,x_n\). |
| Find the natural cubic spline \(\hat f\) with these knots which minimizes the RSS: \(\sum_{i=1}^n (y_i - f(x_i) )^2\). | Find the fitted values \(\hat f(x_1),\dots,\hat f(x_n)\) through an algorithm similar to Ridge regression. |
| Choose \(K\) by cross validation. | Choose smoothing parameter \(\lambda\) by cross validation. |

Choosing the regularization parameter \(\lambda\)#

We typically choose \(\lambda\) through cross validation.

Fortunately, we can solve the problem for every value of \(\lambda\) at roughly the cost of diagonalizing a single \(n\times n\) matrix.
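One way to see why a single diagonalization suffices: if the fitted values can be written as \((\mathbf I + \lambda \mathbf K)^{-1} y\) for a symmetric matrix \(\mathbf K\) that does not depend on \(\lambda\), then with \(\mathbf K = \mathbf U \,\mathrm{diag}(d)\, \mathbf U^T\) the fit for any \(\lambda\) is \(\mathbf U \,\mathrm{diag}\big(1/(1+\lambda d_k)\big)\, \mathbf U^T y\). The sketch below assumes the Reinsch form \(\mathbf K = \mathbf Q \mathbf R^{-1} \mathbf Q^T\) for the smoothing spline, which is not the notation of the notes; names and data are illustrative.

```python
import numpy as np

def roughness_matrix(x):
    """K = Q R^{-1} Q^T for sorted, distinct x (natural cubic spline roughness)."""
    h = np.diff(x)
    n = x.size
    Q = np.zeros((n, n - 2))
    R = np.zeros((n - 2, n - 2))
    for j in range(n - 2):
        Q[j, j] = 1.0 / h[j]
        Q[j + 1, j] = -1.0 / h[j] - 1.0 / h[j + 1]
        Q[j + 2, j] = 1.0 / h[j + 1]
        R[j, j] = (h[j] + h[j + 1]) / 3.0
        if j + 1 < n - 2:
            R[j, j + 1] = R[j + 1, j] = h[j + 1] / 6.0
    K = Q @ np.linalg.solve(R, Q.T)
    return 0.5 * (K + K.T)   # remove rounding asymmetry

# Simulated data (assumption).
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 50)
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=x.size)

K = roughness_matrix(x)
d, U = np.linalg.eigh(K)     # one-time O(n^3) diagonalization
z = U.T @ y                  # data in the eigenbasis, computed once

def fitted_values(lam):
    """S_lambda y = U diag(1 / (1 + lam * d)) U^T y, an O(n^2) step per lambda."""
    return U @ (z / (1.0 + lam * d))

lams = [10.0 ** e for e in range(-8, 1)]
fits = [fitted_values(lam) for lam in lams]
```

The same eigenvalues give the degrees of freedom for every \(\lambda\) as \(\sum_k 1/(1+\lambda d_k)\), so a whole cross validation path costs little more than one fit.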

There is a shortcut for LOOCV:

\[ \begin{aligned} RSS_\text{loocv}(\lambda) &= \sum_{i=1}^n (y_i - \hat f_\lambda^{(-i)}(x_i))^2 \\ &= \sum_{i=1}^n \left[\frac{y_i-\hat f_\lambda(x_i)}{1-\mathbf S_\lambda(i,i)}\right]^2 \end{aligned} \]
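The shortcut can be checked against brute-force leave-one-out fits on a small example. The sketch below assumes a natural cubic spline basis built from truncated cubics with knots at every retained sample point, refits the smoothing spline \(n\) times with one point removed, and compares the result with the single-fit formula. Function names, data and \(\lambda\) are illustrative, and the basis is only suitable for small \(n\).

```python
import numpy as np

def natural_basis(x, knots, deriv=0):
    """Natural cubic spline basis at x (deriv=0) or its second derivative (deriv=2)."""
    x = np.asarray(x, dtype=float)
    K = knots.size

    def d(k):
        u, v = np.maximum(x - knots[k], 0.0), np.maximum(x - knots[-1], 0.0)
        num = u**3 - v**3 if deriv == 0 else 6.0 * (u - v)
        return num / (knots[-1] - knots[k])

    head = [np.ones_like(x), x] if deriv == 0 else [np.zeros_like(x)] * 2
    return np.column_stack(head + [d(k) - d(K - 2) for k in range(K - 2)])

def penalty_matrix(knots):
    """Omega(i, j) = integral of N_i'' N_j'', via Simpson's rule (exact here)."""
    a, b = knots[:-1], knots[1:]
    w = (b - a) / 6.0
    Da, Dm, Db = (natural_basis(t, knots, deriv=2) for t in (a, (a + b) / 2, b))
    return (Da.T * w) @ Da + 4.0 * (Dm.T * w) @ Dm + (Db.T * w) @ Db

def fit(x, y, lam):
    """Return the fitted smoothing spline as a callable, and the smoother matrix."""
    N = natural_basis(x, x)
    A = N.T @ N + lam * penalty_matrix(x)
    beta = np.linalg.solve(A, N.T @ y)
    S = N @ np.linalg.solve(A, N.T)
    return (lambda x0: natural_basis(x0, x) @ beta), S

# Small sorted sample with distinct points (assumption).
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 10)
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=x.size)
lam = 1e-3

# Shortcut: one fit on all the data.
_, S = fit(x, y, lam)
shortcut = np.sum(((y - S @ y) / (1.0 - np.diag(S))) ** 2)

# Brute force: refit n times, each time leaving out one observation.
brute = 0.0
for i in range(x.size):
    keep = np.arange(x.size) != i
    predict_i, _ = fit(x[keep], y[keep], lam)
    brute += (y[i] - predict_i(x[i:i + 1])[0]) ** 2
```

The two quantities `shortcut` and `brute` should agree up to rounding error, while the shortcut needs only the diagonal of \(\mathbf S_\lambda\) from a single fit.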