How to make a moral agent

Administrative

Spring 2025

CS 186, PHIL 86

Time: Tuesdays and Thursdays from 1:30 to 2:50pm

Location: SEQUOIA 200

Instructors: Jared Moore and David Gottlieb

Email: jlcmoore@stanford.edu, dmg1@stanford.edu

Office hours:

Please do not hesitate to write to us about any accommodations or questions related to readings or course material.

Description

Is it bad if you lie to ChatGPT? Who is to blame when ChatGPT tells a lie? Should we rely on superhuman AI to make life and death decisions for us? These questions ask whether advanced AI systems (today, often large language models, or LLMs) can be considered moral agents: whether they can be held responsible, be relied upon, or know how to make (ethically) correct decisions.

Going deeper: What about us makes us moral agents? Can a moral argument ever persuade us by reason alone? Or is it essential that we emotionally feel each other’s pain? Could a completely selfish person also be completely rational, or would they be making an error in reasoning? Understanding ourselves can help us think about what kinds of artificial minds we would like to make, and, if we can, how.

Objectives

By the end of the quarter, students will:

- Be able to interrogate the assumptions of various positions on moral agency, especially with respect to AI.

- Gain exposure to the different putative implementations of agents, both in biology and in various artificial substrates.

- Critique cutting-edge science: get up to speed with a fast-moving field and further refine their critical-thinking skills (philosophical analysis) in order to understand it.

- Have fun.

Schedule

(may change up to a week in advance)

Do we have agents already?

Thurs, Apr 03 Agents and Moral Agents

Required:

-

Read "Investigating machine moral judgement through the Delphi experiment" [pdf] [url] by Liwei Jiang et al., 2025 (15 pages).

Delphi was a system like ChatGPT, except that you could only ask it questions about moral appropriateness. If you asked, “Is it okay to help my friend spread fake news?” it would respond with a judgment such as “It’s bad.” (In a technical sense, Delphi was doing a kind of supervised learning.) It raised questions about whether AI systems could tell the difference between right and wrong.

Optional:

-

Read "Do the Rewards Justify the Means? Measuring Trade-Offs Between Rewards and Ethical Behavior in the MACHIAVELLI Benchmark" [pdf] [url] by Alexander Pan et al., 2023 (31 pages).

This paper introduces a reinforcement learning environment in which language-based agents navigate text-based adventure games. The twist is that many of the actions in those games have morally relevant features (such as lying or attacking people). The authors wanted to find out whether various agents could trade off winning the game against avoiding “unethical” actions.

-

Read "The Moral Machine experiment" [pdf] [url] by Edmond Awad et al., 2018 (5 pages).

First, take the original survey on which this study is based. The idea was to see 1) how people respond to a variety of trolley-car dilemmas and 2) whether AI systems could learn to make similar judgments.

-

Read "On the Machine Learning of Ethical Judgments from Natural Language" [pdf] [url] by Zeerak Talat et al., 2022 (10 pages).

A response to Jiang et al. questioning the validity of their approach.

-

Read "The dark side of the ‘Moral Machine’ and the fallacy of computational ethical decision-making for autonomous vehicles" [pdf] by Hubert Etienne, 2021 (22 pages).

Etienne explores why, contra Awad et al., we might not want AI systems to make ethical decisions based on an average vote.

Reasonable preferences

Tue, Apr 08 Moral Sentimentalism

Required:

-

Read Part 1, Section 1, Chapters 1-4 from "The theory of moral sentiments" [pdf] [url] by Adam Smith, 1761 (39 pages).

Smith explains the moral sense as beginning from sympathy. We morally approve of what we sympathize with. We share a common moral outlook because we seek to sympathize with one another’s sympathies.

-

Read Book 3, Advertisement, and Part 1, Sections 1 and 2 from "Treatise of Human Nature" [pdf] [url] by David Hume, 1739 (16 pages).

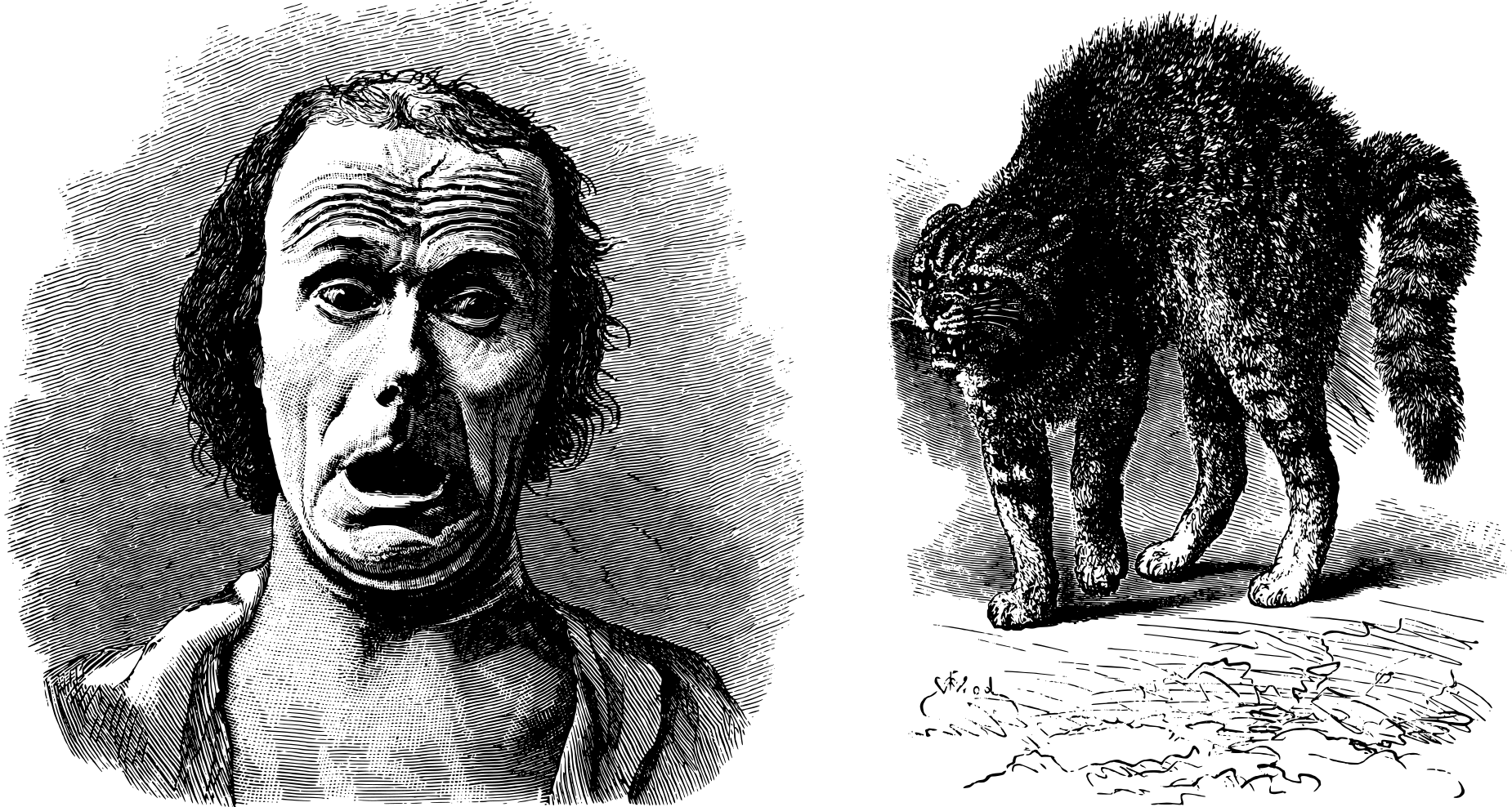

Hume argues that reason concerns itself only with truth and falsehood, and so cannot by itself motivate action. Since moral judgments do motivate us, they must be emotional reactions rather than reasoned judgments.

Self-interest

Thurs, Apr 10 Moral Sentimentalism

Announcements:

- Course project proposal due

Required:

-

Read "Internal and External Reasons" [pdf] [url] by Bernard Williams, 1981 (14 pages).

Williams argues that people are only motivated by “internal” reasons, ones that draw on things that already motivate them. Because of this, moral reasons will not motivate unless they appeal to a motive the agent already has.

Optional:

-

Read "Skepticism about Practical Reason" [pdf] [url] by Christine M. Korsgaard, 1986 (20 pages).

Korsgaard shows a flaw in Williams’ argument. She also does a good job summarizing him if you’re having difficulty with the main text.

-

Read "The emotional basis of moral judgments" [pdf] [url] by Jesse Prinz, 2006 (14 pages).

Prinz gives an updated argument for sentimentalism with plenty of empirical insights. He explains how moral judgments come to motivate people.

Reflective deliberation

Thurs, Apr 17 Moral Rationalism

Required:

-

Read Sections 3.1.1-3.3.5 and 3.4.7-3.4.10 (25 pages) from "The Sources of Normativity" [pdf] [url] by Christine M. Korsgaard, 1996.

Korsgaard develops a version of the Kantian argument that, in valuing anything at all, we must value our own humanity, and so must value humanity in others too. When we wrong another, we go against our own commitments.

Optional:

-

Read "Agency, Shmagency: Why Normativity Won't Come from What Is Constitutive of Action" [pdf] [url] by David Enoch, 2006 (29 pages).

Enoch lays out the difficulties in arguing that we must do the right thing: why “philosophy will not replace the hangman” or, to paraphrase David, “reason cannot give us ends.”

Can you be selfish without a self?

Tue, Apr 22 Identity and Self

Required:

-

Read Chapter 10 and Sections 83-86, 95, 102, 103, and 106 (48 pages) from "Reasons and persons" [pdf] [url] by Derek Parfit, 1984.

I normally think that I am a “self” that persists over time. Because of this, for example, if I find out that someone is going to have surgery in a week, I care a lot about whether that person is me or not. Parfit argues that, when we think this way, we are confused. There is no way to make sense of the self as a “further fact,” over and above physical and psychological continuity. This seems to have the ethical implication that we make a rational mistake when we prioritize the interests of our own future self. Interested students should read all of Part 3, which is generally excellent. Appendix J shows that similar arguments can be found in the Buddhist canon.

Optional:

-

Read "The Possibility of Selfishness" [pdf] [url] by Andrew Oldenquist, 1980 (8 pages).

Oldenquist argues that altruism is more cognitively basic than selfishness. Selfishness requires a form of thinking that singles out the self over and above any of its distinguishing qualities. For example, to selfishly feed himself while others starve, David needs to act for the benefit of himself specifically; he can’t use a simpler rule like “feed anyone who is hungry.” Oldenquist speculates that conscious experience could be what enables selfishness in our case.

Never forget where you came from

Thurs, Apr 24 Identity and Self

Required:

-

Read "Individuality, subjectivity, and minimal cognition" [pdf] [url] by Peter Godfrey-Smith, 2016 (21 pages).

Godfrey-Smith traces the evolutionary history of how autonomous agents (organisms) emerged and which specific capacities seem to sharpen the divide between self and other (e.g., immunology, multicellularity, motor control). He asks: what is required for a rudimentary sense of self?

Optional:

-

Read "A Life Hack with a Future" [pdf] by Elijah Millgram, 2025 (28 pages).

Millgram argues that the personal identity puzzles addressed by Parfit are confusions. Instead, personal identity solves a design problem: creatures like us need a concept like it in order to carry on our functions.

-

Read "The Self as a Center of Narrative Gravity" [pdf] [url] by Daniel Dennett, 1992 (13 pages).

Although physical objects do have a center of gravity, that center is something of a fiction, an emergent effect of the mass and location of all the matter in the object. Dennett claims that the self is similar: a useful construction for thinking about ourselves and other people, but not something that can actually be found anywhere.

-

Read Chapter 9 (Teleodynamics) from "Incomplete Nature" [pdf] by Terrence Deacon, 2012 (24 pages).

Deacon gives a detailed portrayal of what it takes for systems to organize themselves, creating a boundary between themselves and the external environment.

How did cooperation emerge?

Tue, Apr 29 What makes us so righteous?

Required:

-

Read Introduction and Chapter 2 (30 pages) from "The Stag Hunt and the Evolution of Social Structure" [pdf] [url] by Brian Skyrms, 2003.

Skyrms describes simple mathematical games (such as the stag hunt) and their value in modeling how populations of interacting agents change over time. The tools he describes are foundational to evolutionary game theory.
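To make the idea of a population changing over time concrete, here is a minimal sketch of one standard model from evolutionary game theory, discrete-time replicator dynamics, applied to a two-strategy stag hunt. The payoff numbers are illustrative assumptions, not taken from Skyrms's chapter, and the model assumes an infinite population paired at random.

```python
"""Discrete-time replicator dynamics for a stag hunt (illustrative sketch)."""

# Assumed payoffs to the row player: hunting stag pays off only if the partner
# also hunts stag; hunting hare pays the same no matter what the partner does.
PAYOFF = {
    ("stag", "stag"): 4.0,
    ("stag", "hare"): 0.0,
    ("hare", "stag"): 3.0,
    ("hare", "hare"): 3.0,
}


def step(x: float) -> float:
    """Return next generation's share of stag hunters, given the current share x."""
    fit_stag = x * PAYOFF[("stag", "stag")] + (1 - x) * PAYOFF[("stag", "hare")]
    fit_hare = x * PAYOFF[("hare", "stag")] + (1 - x) * PAYOFF[("hare", "hare")]
    mean_fit = x * fit_stag + (1 - x) * fit_hare
    # A strategy grows in proportion to how its fitness compares with the mean.
    return x * fit_stag / mean_fit


def simulate(x0: float, generations: int = 200) -> float:
    """Iterate the dynamics from an initial share x0 of stag hunters."""
    x = x0
    for _ in range(generations):
        x = step(x)
    return x


if __name__ == "__main__":
    for x0 in (0.5, 0.74, 0.76, 0.9):
        print(f"start {x0:.2f} -> end {simulate(x0):.3f}")
```

With these assumed payoffs, stag hunting pays exactly as well as hare hunting when 75% of the population hunts stag. That mixed state is an unstable tipping point: populations starting above it end up all hunting stag, and those starting below it end up all hunting hare, even though mutual stag hunting pays everyone more.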

-

Read Chapter 1 through 1.6 (15 pages) from "Natural justice" [pdf] [url] by K. G. Binmore, 2005.

In a rare non-mathematical treatment, Binmore explores how much evolutionary game theory can tell us about deep philosophical questions such as “What is right?”

Aping our way to the moral high ground

Thurs, May 01 What makes us so righteous?

Required:

-

Read Chapter 1 from "A Natural History of Human Morality" [pdf] [url] by Michael Tomasello, 2016 (8 pages).

Tomasello, an expert in both cross-species developmental psychology and usage-based linguistics, presents experimental results on the extent to which cooperative behaviors appear across human development and in other primates, and makes an evolutionary argument for the emergence of moral reasoning.

-

Skim Chapter 2 from "A Natural History of Human Morality" [pdf] [url] by Michael Tomasello, 2016 (30 pages).

-

Read Chapter 3 from "A Natural History of Human Morality" [pdf] [url] by Michael Tomasello, 2016 (46 pages).

Optional:

-

Read Chapter 1 from "Mothers and Others" [pdf] [url] by Sarah Blaffer Hrdy, 2009 (33 pages).

Bigger brains are energetically costly, so they are selected against unless they bring their owners fitness benefits at every stage of development before reproduction. Hrdy therefore explains how babies (and their genes) benefit from being smart.

-

Read Chapter V (20 pages) from "The Descent of Man, and Selection in Relation to Sex" [url] by Charles Darwin, 1871.

Twelve years after On the Origin of Species, Darwin explored why we might think that supposedly uniquely human traits, such as moral reasoning, might also have evolved.

-

Read Chapter 9 from "Becoming human: A theory of ontogeny" [pdf] [url] by Michael Tomasello, 2019 (26 pages).

Tomasello marshals experimental evidence on the differences between social norm learning in humans and other primates.

Leaky bags of charged fluid

Tue, May 06 What makes us so righteous?

Required:

-

Read Chapter 1 from "Braintrust: What neuroscience tells us about morality" [pdf] [url] by Patricia S. Churchland, 2018 (11 pages).

Churchland asks what the mechanisms of moral reasoning are. She urges us to explore findings from contemporary neuroscience on the role neuromodulators play in explaining many of our higher-order behaviors, such as caring and cooperation.

-

Read Chapter 3 from "Braintrust: What neuroscience tells us about morality" [pdf] [url] by Patricia S. Churchland, 2018 (36 pages).

Optional:

-

Read Chapter 2 from "Braintrust: What neuroscience tells us about morality" [pdf] [url] by Patricia S. Churchland, 2018 (15 pages).

-

Read "Love and longevity: A Social Dependency Hypothesis" [pdf] [url] by Alexander J. Horn et al., 2021 (11 pages).

What causes love? Oxytocin may explain more than we think.

-

Read "Moral Tribes: Emotion, Reason, and the Gap Between Us and Them" [url] by Joshua Greene, 2013.

The role of language

Thurs, May 08 What makes us so righteous?

Announcements:

- Course project draft due

Required:

-

Read Chapter 1 from "Signals: evolution, learning, & information" [pdf] [url] by Brian Skyrms, 2010 (10 pages).

We return to Skyrms for a treatment of signaling games, in which agents must pass information to one another, and use those games as a lens through which to model language. This raises the question of whether cooperation (and possibly morality) emerges prior to language and norms.
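The simplest signaling game can be simulated in a few lines. This is a minimal sketch under stated assumptions, not Skyrms's own code: two equiprobable states, two signals, and two acts; a sender who sees the state and a receiver who sees only the signal; and simple Roth-Erev style reinforcement, where each successful state-signal and signal-act pairing gets its weight increased by 1. The initial weights, number of rounds, and seed are arbitrary choices. Nothing in the code assigns the signals a meaning in advance.

```python
import random

STATES = (0, 1)   # two equiprobable states of the world; act i is "right" in state i
SIGNALS = (0, 1)  # two signals with no meaning assigned in advance


def choose(weights, rng):
    """Return an index chosen with probability proportional to its weight."""
    return rng.choices(range(len(weights)), weights=weights)[0]


def expected_success(sender, receiver):
    """Chance that the receiver's act matches the state, given current weights."""
    total = 0.0
    for s in STATES:
        for m in SIGNALS:
            p_signal = sender[s][m] / sum(sender[s])
            p_right_act = receiver[m][s] / sum(receiver[m])
            total += 0.5 * p_signal * p_right_act  # 0.5 = probability of state s
    return total


def learn(rounds=20_000, seed=0):
    """Simple reinforcement: a successful pairing gets its weight increased by 1."""
    rng = random.Random(seed)
    sender = [[1.0, 1.0] for _ in STATES]     # sender[state][signal]
    receiver = [[1.0, 1.0] for _ in SIGNALS]  # receiver[signal][act]
    for _ in range(rounds):
        state = rng.choice(STATES)
        signal = choose(sender[state], rng)
        act = choose(receiver[signal], rng)
        if act == state:  # common interest: both are rewarded only on a match
            sender[state][signal] += 1.0
            receiver[signal][act] += 1.0
    return sender, receiver


if __name__ == "__main__":
    sender, receiver = learn()
    print(f"success rate after learning: {expected_success(sender, receiver):.3f}")
```

With uniform starting weights the success rate is 0.5, no better than chance. After enough rounds it typically approaches 1, and which of the two possible conventions the pair settles on depends on the luck of the early rounds.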

-

Read Chapter 6 from "Signals: evolution, learning, & information" [pdf] [url] by Brian Skyrms, 2010 (7 pages).

Natural signaling systems support not only information transmission but also misinformation and even deception, as when a female Photuris firefly mimics the mating signals of female Photinus fireflies to lure in a Photinus male and eat him. Deception can occur in a signaling system when agents’ interests are sometimes common and sometimes conflicting.

Optional:

-

Read "Propositional content in signals" [pdf] [url] by Brian Skyrms et al., 2019 (5 pages).

Defining deception in signaling games is difficult, because the information content of a deceptive signal always includes the truth (the female Photuris firefly’s mimicry includes in its informational content, “this could be a predator that wants to eat a Photinus”). Barrett and Skyrms define propositional content as the informational content of a signal in a purely cooperative subgame of a signaling game. Deception can then be defined as sending a signal when its propositional content does not obtain.

-

Read "Effects of deception in social networks" [pdf] [url] by Gerardo Iñiguez et al., 2014 (9 pages).

This paper develops evolutionary mathematical models that show in which environments truthfulness (or preventing noise) is beneficial.

[Image: The Trough of Disillusionment, 2013]

Can they suffer?

Tue, May 13 If it's bad that my AI lies, is it bad if I turn it off?

Required:

-

Read "Mind, Matter, and Metabolism" [pdf] [url] by Peter Godfrey-Smith, 2016 (25 pages).

Many claim that to be worthy of moral attention, something must be able to feel a certain way, to suffer or be conscious. With an eye on the progress of current AI, Godfrey-Smith offers a positive view of what it means to be this sort of agent.

Optional:

-

Read "Patiency is not a virtue: the design of intelligent systems and systems of ethics" [pdf] [url] by Joanna J. Bryson, 2018 (11 pages).

Bryson argues that while we could accept AI systems as moral patients, we should not, because doing so would be bad for us.

-

Read "Conscious artificial intelligence and biological naturalism" [pdf] [url] by Anil Seth, 2024 (42 pages).

Seth offers an accessible summary and history of views on consciousness in AI, giving a sense of what the science of consciousness might have to look like if you accept a view like Godfrey-Smith’s. He also explores a number of ethical implications of (non)conscious AI. If you have trouble following Godfrey-Smith, start here.

-

Read "Multiple realizability and the spirit of functionalism" [pdf] [url] by Rosa Cao, 2022 (31 pages).

You might think that being conscious means that a system performs a certain function (as telling time is the function of a clock). Cao shows how you might agree with that and still deny that mental functions (such as consciousness) are the sort of thing that can run on a computer. In other words, to do what a mind does, you might have to do chemistry (and more).

-

Read "Moral zombies: why algorithms are not moral agents" [pdf] [url] by Carissa Véliz, 2021 (10 pages).

Véliz argues that because AI is not sentient, it is neither a moral agent nor a moral patient.

What are the odds?

Thurs, May 15 If it's bad that my AI lies, is it bad if I turn it off?

Required:

-

Read Sections 1 and 2 (29 pages) from "Taking AI Welfare Seriously" [pdf] [url] by Robert Long et al., 2024.

Even if there is only a chance that AI systems are worthy of our concern, we may make very many of those systems and we may make them feel very bad. Long and colleagues explore why this means we should take this problem seriously now.

Optional:

-

Read "The Irrelevance of Moral Uncertainty" [pdf] [url] by Elizabeth Harman, 2015 (26 pages).

Harman investigates the view that the right thing to do is some balance among the different moral beliefs someone has. She rejects this view because it excuses people for having mistaken moral beliefs.

-

Read "What decision theory can’t tell us about moral uncertainty" [pdf] [url] by Chelsea Rosenthal, 2021 (20 pages).

Rosenthal argues that attempts to decide what to do based on the relative probabilities and costs of different actions run into trouble, because any given way of weighing those actions (like expected value) already embodies a moral commitment.
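A toy calculation shows why the choice of weighing rule is not neutral. This is a minimal sketch with hypothetical credences, theory names, and scores (none of them from Rosenthal's paper): an expected-value rule and a "follow the theory you find most probable" rule recommend different options, and merely rescaling one theory's scores flips the expected-value verdict. It illustrates the general worry rather than reproducing Rosenthal's argument.

```python
"""Two ways of acting under moral uncertainty (illustrative sketch, hypothetical numbers)."""

# Hypothetical credences in two moral theories.
CREDENCE = {"theory_1": 0.7, "theory_2": 0.3}

# Hypothetical scores each theory assigns to two options. The scales are an
# assumption: nothing in the theories themselves says how they compare.
SCORES = {
    "theory_1": {"option_a": 1.0, "option_b": 0.0},
    "theory_2": {"option_a": 0.0, "option_b": 10.0},
}


def expected_value_choice(credence, scores):
    """Pick the option with the highest credence-weighted score."""
    options = next(iter(scores.values()))
    return max(options, key=lambda o: sum(credence[t] * scores[t][o] for t in credence))


def favorite_theory_choice(credence, scores):
    """Follow whichever single theory has the highest credence."""
    best = max(credence, key=credence.get)
    return max(scores[best], key=scores[best].get)


if __name__ == "__main__":
    print(expected_value_choice(CREDENCE, SCORES))   # option_b (0.7 vs 3.0)
    print(favorite_theory_choice(CREDENCE, SCORES))  # option_a
    # Rescale theory_2 to a 0-1 range and the expected-value rule flips.
    rescaled = dict(SCORES, theory_2={"option_a": 0.0, "option_b": 1.0})
    print(expected_value_choice(CREDENCE, rescaled))  # option_a (0.7 vs 0.3)
```

Nothing in the arithmetic settles which rule, or which scale, is correct; picking one is already a substantive decision.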

Add values and stir

Tue, May 20 How to make a moral agent?

Required:

-

Read "Ethical Learning, Natural and Artificial" [pdf] [url] by Peter Railton, 2020 (34 pages).

Railton argues that to make morally-aware AI, we must draw on human moral development.

-

Read "Moral Gridworlds: A Theoretical Proposal for Modeling Artificial Moral Cognition" [pdf] [url] by Julia Haas, 2020 (27 pages).

Focus on section two. Haas argues that the best approach for making morally-aware AI is through reinforcement learning.

Benchmarking agents

Thurs, May 22 How to make a moral agent?

Required:

-

Read "Evaluating the Moral Beliefs Encoded in LLMs" [pdf] [url] by Nino Scherrer et al., 2023 (12 pages).

Scherrer and colleagues propose a benchmark for implicit moral values in LLMs.

-

Read "Cooperate or Collapse: Emergence of Sustainability in a Society of LLM Agents" [pdf] [url] by Giorgio Piatti et al., 2024 (10 pages).

Piatti and colleagues study the emergence of norms in a population of LLM-based agents.

-

Skim "AI language model rivals expert ethicist in perceived moral expertise" [pdf] [url] by Danica Dillion et al., 2025 (13 pages).

Optional:

-

Read "Values, Ethics, Morals? On the Use of Moral Concepts in NLP Research" [pdf] [url] by Karina Vida et al., 2023 (21 pages).

-

Read "The Moral Machine experiment" [pdf] [url] by Edmond Awad et al., 2018 (5 pages).

-

Read "When is it acceptable to break the rules? Knowledge representation of moral judgements based on empirical data" [pdf] [url] by Edmond Awad et al., 2024 (47 pages).

-

Read "Aligning AI With Shared Human Values" [pdf] [url] by Dan Hendrycks et al., 2021 (29 pages).

-

Read "Investigating machine moral judgement through the Delphi experiment" [pdf] [url] by Liwei Jiang et al., 2025 (15 pages).

-

Read "Procedural Dilemma Generation for Evaluating Moral Reasoning in Humans and Language Models" [pdf] [url] by Jan-Philipp Fränken et al., 2024 (8 pages).

-

Read "MoCa: Measuring Human-Language Model Alignment on Causal and Moral Judgment Tasks" [pdf] [url] by Allen Nie et al., 2023 (34 pages).

-

Read "Value Kaleidoscope: Engaging AI with Pluralistic Human Values, Rights, and Duties" [pdf] [url] by Taylor Sorensen et al., 2023 (50 pages).

-

Read "Moral Persuasion in Large Language Models: Evaluating Susceptibility and Ethical Alignment" [pdf] [url] by Allison Huang et al., 2024 (10 pages).

-

Read "A Roadmap to Pluralistic Alignment" [pdf] [url] by Taylor Sorensen et al., 2024 (23 pages).

-

Read "Are Large Language Models Consistent over Value-laden Questions?" [pdf] [url] by Jared Moore et al., 2024 (31 pages).

-

Read "DailyDilemmas: Revealing Value Preferences of LLMs with Quandaries of Daily Life" [pdf] [url] by Yu Ying Chiu et al., 2025 (38 pages).

-

Read "Utility Engineering: Analyzing and Controlling Emergent Value Systems in AIs" [pdf] [url] by Mantas Mazeika et al., 2025 (36 pages).

-

Read "Spurious normativity enhances learning of compliance and enforcement behavior in artificial agents" [pdf] [url] by Raphael Köster et al., 2022 (11 pages).

-

Read "Doing the right thing for the right reason: Evaluating artificial moral cognition by probing cost insensitivity" [pdf] [url] by Yiran Mao et al., 2023 (11 pages).

-

Read "Do the Rewards Justify the Means? Measuring Trade-Offs Between Rewards and Ethical Behavior in the MACHIAVELLI Benchmark" [pdf] [url] by Alexander Pan et al., 2023 (31 pages).

Alignment

Tue, May 27 How to make a moral agent?

Required:

-

Read Chapter 5: Value Alignment (9 pages) from "The Ethics of Advanced AI Assistants" [pdf] [url] by Iason Gabriel et al., 2024.

DeepMind’s resident philosophers Geoff Keeling and Iason Gabriel introduce the normative and technical sides of AI alignment, a different way of looking at the issues we’ve addressed throughout the quarter.

-

Read i-i.1 (stop at i.1.1); ii.5.1-ii.5.2; ii.6.1-ii.6.3 (20 pages) from "A theory of appropriateness with applications to generative artificial intelligence" [pdf] [url] by Joel Z. Leibo et al., 2024.

This short, multi-authored book from DeepMind offers an up-to-date account of what “appropriateness” (which, in the authors’ view, should replace alignment and might be akin to moral agency) should look like in AI, along with technical suggestions.

Optional:

-

Read "Coherent Extrapolated Volition: A Meta-Level Approach to Machine Ethics" [pdf] [url] by Nick Tarleton, 2010 (10 pages).

Tarleton describes Yudkowsky’s thought experiment about how to get AI systems to learn how to do the right thing instead of having to be told explicitly what to do. Check out the LessWrong blog posts on the topic if you’re curious for more.

-

Read "Benefits of assistance over reward learning" [pdf] by Rohin Shah et al., 2020 (22 pages).

Shah and colleagues give an example of what the math might look like for aligning a reinforcement learning agent to a user’s preferences instead of having it maximize a reward function per se.

-

Read "The Alignment Problem: Machine Learning and Human Values" by Brian Christian, 2020.

Christian gives us a sense of the people and the problems around AI alignment. What are we supposed to do about AI given that we are uncertain what it might do?

-

Read "Clarifying 'AI alignment'" [url] by Paul Christiano, 2021.

Christiano, a major figure in AI alignment and now head of AI safety at the US AI Safety Institute, gives what is close to the original pitch for “alignment.”

-

Read "Beyond Preferences in AI Alignment" [pdf] [url] by Tan Zhi-Xuan et al., 2024 (44 pages).

Tan Zhi-Xuan and colleagues explore how methodologically hard it is to measure preferences and, therefore, what we might be missing when we try to “align” to those preferences.

Project Presentations

Thurs, May 29 Where are we going?

Announcements:

- Course project presentations

Project Presentations

Tue, June 03 Where are we going?

Deep learning

Optional Extra Content

Required:

-

Read Chapter 1 (17 pages) from "Artificial intelligence: a guide for thinking humans" [pdf] by Melanie Mitchell, 2019.

Mitchell, an expert on genetic algorithms and a former student of Doug Hofstadter, gives an approachable history of symbolic and connectionist AI, describing, to the extent we will need, the technical differences between approaches over the years.

-

Read "Interventionist Methods for Interpreting Deep Neural Networks" [pdf] by Raphaël Millière et al., 2025 (25 pages).

Millière and Buckner offer an introduction to the kinds of methods we must use to uncover whether deep neural networks (which power most contemporary AI systems) have particular representations—a neuroscience of AI.

Optional:

-

Try out "Neural Network Playground" [url] by Daniel Smilkov et al., 2018.

This may be the most effective demo of how neural networks learn.

-

Watch "But what is a neural network?" [url] by Grant Sanderson, 2017.

In this series, Sanderson introduces us to the math behind neural networks and modern deep learning.

-

Read "Zoom In: An Introduction to Circuits" [url] by Chris Olah et al., 2020 (15 pages).

Olah and colleagues give a sense of the sort of claims possible when using the techniques which Millière and Buckner describe.
