Chapter 1

Key distributions for categorical data: Poisson, Binomial, Multinomial.

Examples of exponential families.

Inference for sampling from such families.

Chapter 2

Contingency tables.

Sampling models: independent rows / columns; Poisson; case-control.

Odds ratios.
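Here is a minimal Python sketch of the sample odds ratio \(\hat\theta = n_{11}n_{22}/(n_{12}n_{21})\) for a \(2\times 2\) table. It also computes the usual Wald interval, built on the log scale with \(\text{SE}(\log\hat\theta) = \sqrt{\sum_{ij} 1/n_{ij}}\). The sketch assumes every cell count is positive and applies no continuity correction. The function name `odds_ratio_ci` is hypothetical.

```python
import math

def odds_ratio_ci(n11, n12, n21, n22, z=1.959963984540054):
    """Sample odds ratio of the 2x2 table [[n11, n12], [n21, n22]] and a
    Wald confidence interval (default 95%) computed for log(theta)."""
    theta = (n11 * n22) / (n12 * n21)
    # Large-sample standard error of log(theta): sqrt of summed reciprocal counts
    se = math.sqrt(1 / n11 + 1 / n12 + 1 / n21 + 1 / n22)
    log_theta = math.log(theta)
    # Exponentiate the endpoints so the interval is on the odds-ratio scale
    return theta, (math.exp(log_theta - z * se), math.exp(log_theta + z * se))


theta, (lo, hi) = odds_ratio_ci(20, 10, 10, 20)  # theta is 4.0
```

The interval is symmetric around \(\log\hat\theta\), not around \(\hat\theta\) itself.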

Conditional independence in three-way tables.

Chapter 3

Inference for two-way tables.

Tests of independence (Pearson / Fisher)

Residuals: Pearson and deviance.
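The following pure-Python sketch computes Pearson's \(X^2\) and the deviance (likelihood-ratio) statistic \(G^2\) for the independence model \(\hat\mu_{ij} = n_{i+}n_{+j}/n\). It also returns the cell-wise Pearson residuals \((n_{ij}-\hat\mu_{ij})/\sqrt{\hat\mu_{ij}}\) and the signed deviance residuals. The function name is hypothetical, and the sketch assumes every fitted count is positive. To get p-values, compare \(X^2\) or \(G^2\) with \(\chi^2\) on \((I-1)(J-1)\) degrees of freedom. Fisher's exact test is not covered.

```python
import math

def independence_tests(table):
    """Pearson X^2, deviance G^2, degrees of freedom, and Pearson / deviance
    residuals for a two-way table (a list of rows of counts) under independence."""
    n = sum(sum(row) for row in table)
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    x2 = g2 = 0.0
    pearson, deviance = [], []
    for i, row in enumerate(table):
        p_row, d_row = [], []
        for j, obs in enumerate(row):
            mu = row_tot[i] * col_tot[j] / n  # fitted count under independence
            x2 += (obs - mu) ** 2 / mu
            # Poisson deviance contribution; 0 * log(0) is taken to be 0
            dev = 2 * ((obs * math.log(obs / mu) if obs > 0 else 0.0) - (obs - mu))
            g2 += dev
            p_row.append((obs - mu) / math.sqrt(mu))
            d_row.append(math.copysign(math.sqrt(max(dev, 0.0)), obs - mu))
        pearson.append(p_row)
        deviance.append(d_row)
    df = (len(table) - 1) * (len(table[0]) - 1)
    return x2, g2, df, pearson, deviance


x2, g2, df, pearson, deviance = independence_tests([[10, 20], [30, 40]])
```

The squared Pearson residuals sum to \(X^2\), and the squared deviance residuals sum to \(G^2\). This is why both kinds of residual are read as per-cell contributions to lack of fit.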

Ordinal variables.

Bayesian models

Chapter 4

Generalized Linear Models

Basic definitions and examples: binary, counts

Likelihood / Score / Hessian (Information)

Deviance: likelihood ratio tests

Residuals

Algorithm: Fisher scoring / Newton-Raphson
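Below is a self-contained sketch of Fisher scoring for logistic regression. Each step solves \(I(\beta)\,\delta = U(\beta)\). Here \(U(\beta) = X^T(y - \pi)\) is the score and \(I(\beta) = X^T W X\), with \(W = \text{diag}(\pi_i(1-\pi_i))\), is the information. The logit link is canonical, so expected and observed information coincide, and Fisher scoring is the same as Newton-Raphson. The helper `solve` (plain Gaussian elimination) and both function names are hypothetical; real code would call a linear-algebra library instead.

```python
import math

def solve(A, b):
    """Solve the linear system A x = b by Gaussian elimination with partial pivoting."""
    p = len(b)
    M = [list(row) + [b_i] for row, b_i in zip(A, b)]  # augmented matrix
    for k in range(p):
        piv = max(range(k, p), key=lambda r: abs(M[r][k]))
        M[k], M[piv] = M[piv], M[k]
        for r in range(k + 1, p):
            f = M[r][k] / M[k][k]
            for c in range(k, p + 1):
                M[r][c] -= f * M[k][c]
    x = [0.0] * p
    for k in reversed(range(p)):
        x[k] = (M[k][p] - sum(M[k][c] * x[c] for c in range(k + 1, p))) / M[k][k]
    return x


def logistic_fisher_scoring(X, y, max_iter=25, tol=1e-10):
    """Logistic regression MLE by Fisher scoring (= Newton-Raphson for the logit link).
    X: list of covariate rows (include a leading 1 for an intercept); y: 0/1 responses."""
    p = len(X[0])
    beta = [0.0] * p
    for _ in range(max_iter):
        score = [0.0] * p                      # U(beta) = X'(y - pi)
        info = [[0.0] * p for _ in range(p)]   # I(beta) = X' W X
        for x_i, y_i in zip(X, y):
            eta = sum(b * x for b, x in zip(beta, x_i))
            pi = 1.0 / (1.0 + math.exp(-eta))
            w = pi * (1.0 - pi)                # Var(y_i), the Fisher weight
            for j in range(p):
                score[j] += x_i[j] * (y_i - pi)
                for k in range(p):
                    info[j][k] += w * x_i[j] * x_i[k]
        step = solve(info, score)              # scoring step: I(beta)^{-1} U(beta)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    return beta


# Binary covariate: P(y=1) is 0.25 when x = 0 and 0.75 when x = 1,
# so the MLE is intercept = log(1/3), slope = log(9) (the log odds ratio).
X = [[1, 0]] * 8 + [[1, 1]] * 8
y = [1, 1, 0, 0, 0, 0, 0, 0] + [1, 1, 1, 1, 1, 1, 0, 0]
beta_hat = logistic_fisher_scoring(X, y)
```

For a binary covariate, the fitted slope reproduces the sample log odds ratio of the corresponding \(2\times 2\) table.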

Chapter 5

Odds ratios

Retrospective studies

Inference

Inference under misspecification (briefly), e.g., the bootstrap

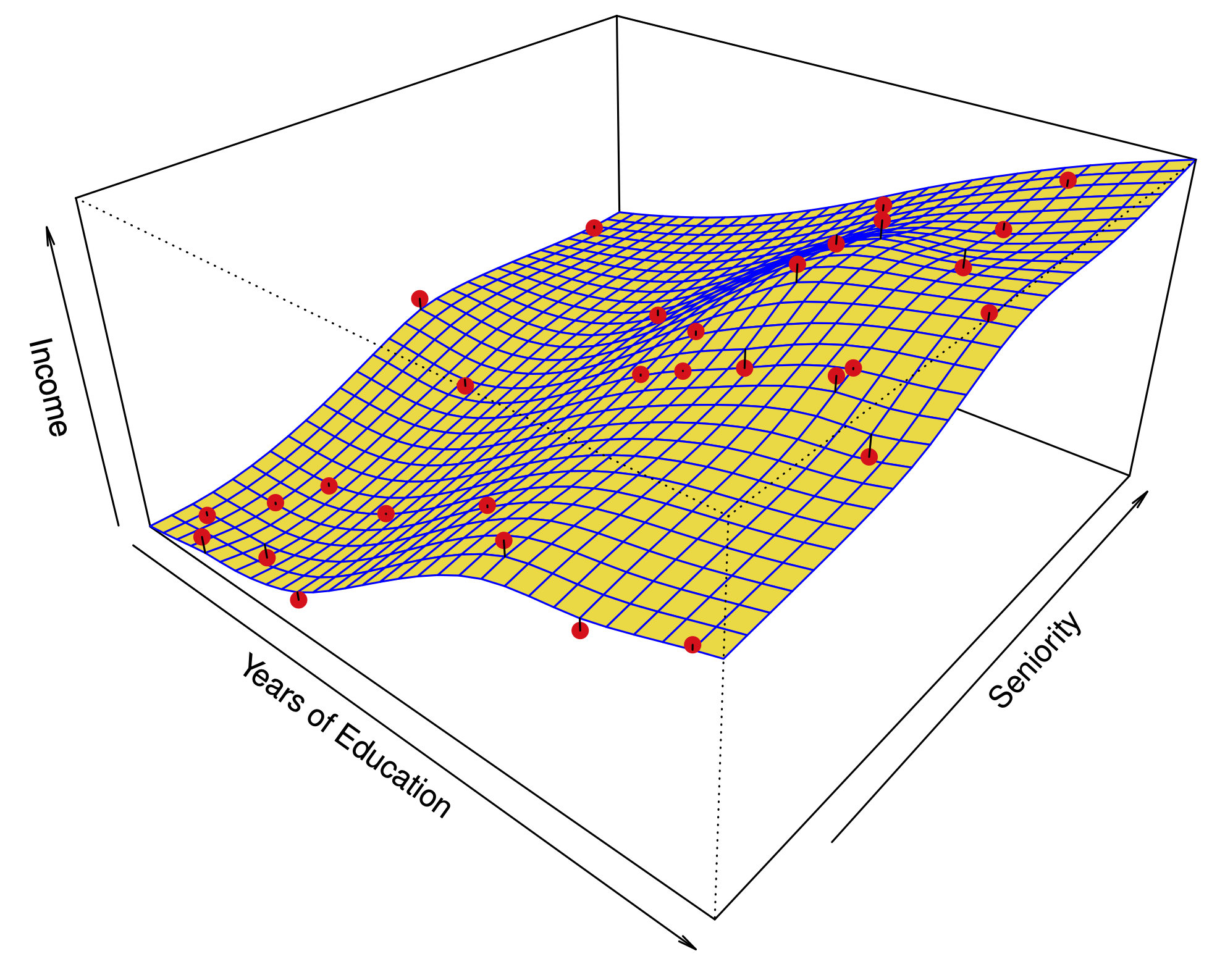

Multiple logistic regression

Chapter 6

Model selection and diagnostics (deviance / Pearson residuals) for logistic regression

Analogs of \(R^2\)

Mantel-Haenszel tests for stratified 2x2 tables
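This sketch computes the Cochran-Mantel-Haenszel statistic \(\big[\sum_k (n_{11k} - \hat\mu_{11k})\big]^2 / \sum_k \text{Var}(n_{11k})\). It uses the hypergeometric mean and variance of \(n_{11k}\) within each stratum and applies no continuity correction. It also returns the Mantel-Haenszel common odds ratio estimate \(\sum_k (n_{11k}n_{22k}/n_{++k}) / \sum_k (n_{12k}n_{21k}/n_{++k})\). The function name and the nested-list input layout are assumptions. The statistic is compared with \(\chi^2_1\).

```python
def mantel_haenszel(strata):
    """Cochran-Mantel-Haenszel statistic (no continuity correction) and the
    Mantel-Haenszel common odds ratio for K stratified 2x2 tables.
    strata: list of tables [[n11, n12], [n21, n22]], one per stratum."""
    num = den = 0.0   # pieces of the common odds ratio estimate
    dev = var = 0.0   # pieces of the CMH statistic
    for (n11, n12), (n21, n22) in strata:
        n = n11 + n12 + n21 + n22
        num += n11 * n22 / n
        den += n12 * n21 / n
        row1, row2 = n11 + n12, n21 + n22
        col1, col2 = n11 + n21, n12 + n22
        dev += n11 - row1 * col1 / n                            # n11k minus its null mean
        var += row1 * row2 * col1 * col2 / (n ** 2 * (n - 1))  # hypergeometric variance
    return dev ** 2 / var, num / den


# Two identical strata, each with sample odds ratio (10 * 10) / (5 * 5) = 4
stat, or_mh = mantel_haenszel([[[10, 5], [5, 10]], [[10, 5], [5, 10]]])
```

Summing over strata before squaring lets the test detect a conditional association that points the same way in every stratum.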

Power in logistic regression

Bayesian GLMs (touched on in Chapter 7 of Agresti)

Brief description of Metropolis-Hastings / Gibbs sampling
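Here is a minimal random-walk Metropolis sampler. It is the special case of Metropolis-Hastings with a symmetric proposal, so the Hastings ratio reduces to a ratio of target densities. This is a one-dimensional sketch. The function name, the Gaussian proposal, and the step size are illustrative choices. In a Bayesian GLM, `log_target` would be the log posterior of a coefficient up to a constant. Gibbs sampling is not shown.

```python
import math
import random

def random_walk_metropolis(log_target, x0, n_samples, step=1.0, seed=0):
    """Random-walk Metropolis for a 1-D target known up to a normalizing constant.
    The Gaussian proposal is symmetric, so the Hastings correction cancels."""
    rng = random.Random(seed)
    x, lp = x0, log_target(x0)
    samples = []
    for _ in range(n_samples):
        prop = x + rng.gauss(0.0, step)
        lp_prop = log_target(prop)
        # Accept with probability min(1, target(prop) / target(x)), on the log scale;
        # 1 - random() lies in (0, 1], so the log is always defined
        if math.log(1.0 - rng.random()) < lp_prop - lp:
            x, lp = prop, lp_prop
        samples.append(x)  # on rejection the current state is repeated
    return samples


# Target: standard normal log density up to a constant
draws = random_walk_metropolis(lambda x: -0.5 * x * x, x0=0.0, n_samples=20000, step=2.4)
```

Only differences of log densities enter the acceptance step, which is why the normalizing constant of a posterior is never needed.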

Simple implementations in Stan

Chapter 8

Multinomial regression

Multicategory logit

Cumulative logit

Latent variable representations (data augmentation / Bayesian)

Ordinal responses

Conditional independence in 3-way tables

Chapter 9

Loglinear models

Homogeneous association (interactions up to 2-way only)

Graphical models

Inference (deviance, Wald)

Connection to logistic regression

Lindsey’s method: density modeling as a GLM

Chapter 10

Model selection for loglinear models

Deviance tests

Residuals for diagnostics

Chapter 11

Matched pairs and square contingency tables

McNemar’s test
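This sketch implements McNemar's test for a \(2\times 2\) table of matched pairs: \(z = (n_{12} - n_{21})/\sqrt{n_{12} + n_{21}}\), with \(z^2\) compared against \(\chi^2_1\). Only the discordant pairs carry information about marginal homogeneity. The function name is hypothetical. The sketch applies no continuity correction and assumes \(n_{12} + n_{21} > 0\).

```python
import math

def mcnemar(table):
    """McNemar's test for matched pairs summarized as [[n11, n12], [n21, n22]].
    Under marginal homogeneity only the discordant counts n12, n21 matter."""
    n12, n21 = table[0][1], table[1][0]
    z = (n12 - n21) / math.sqrt(n12 + n21)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return z * z, p_value                        # z^2 is compared with chi^2_1


stat, p = mcnemar([[10, 15], [5, 20]])  # z = 10 / sqrt(20), so z^2 = 5
```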

Subject-specific models / conditional inference

Symmetry and quasi-symmetry (Bradley-Terry)

Quasi-independence (agreement between raters)

Regularization

For GLMs, we started with the LASSO, which minimizes \(L(\beta) + {\cal P}(\beta)\), with \(L\) the loss (negative log-likelihood) and penalty $$ {\cal P}(\beta) = \lambda \|\beta\|_1 = \lambda \sum_{j=1}^p |\beta_j|. $$

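KKT conditions: solving the LASSO yields a pair \((\hat{\beta}, \widehat{u}=-\nabla L(\hat{\beta}))\) with \(\widehat{u} \in \lambda \, \partial \|\hat{\beta}\|_1\), i.e. \(\widehat{u}_j = \lambda \, \text{sign}(\hat{\beta}_j)\) if \(\hat{\beta}_j \neq 0\) and \(|\widehat{u}_j| \leq \lambda\) if \(\hat{\beta}_j = 0\).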

Solving LASSO: coordinate descent

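Loosely analogous to Gibbs sampling: cycle through the coordinates, but instead of drawing \(\beta_j\) from its conditional distribution given the others, set \(\beta_j\) to the minimizer of the objective with the other coordinates held fixed (the conditional mode).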
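Here is a pure-Python sketch of cyclic coordinate descent for the LASSO. For simplicity it assumes the squared-error loss \(L(\beta) = \tfrac{1}{2}\|y - X\beta\|_2^2\) with no intercept. For a GLM, the same update is typically applied to a weighted quadratic approximation of the log-likelihood. Each coordinate update is exact. With the partial residual \(r_{(j)} = y - \sum_{k\ne j} x_k\beta_k\), the minimizer over \(\beta_j\) is \(S_\lambda(x_j^T r_{(j)})/\|x_j\|_2^2\). The function names are hypothetical.

```python
def soft_threshold(z, t):
    """S_t(z) = sign(z) * max(|z| - t, 0)."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_cd(X, y, lam, n_sweeps=200, tol=1e-10):
    """Cyclic coordinate descent for 0.5 * ||y - X beta||^2 + lam * ||beta||_1.
    X is a list of n rows with p columns (no intercept)."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    resid = list(y)  # r = y - X beta, kept up to date after every coordinate move
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) for j in range(p)]
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            # x_j' r_(j) where r_(j) = r + x_j * beta_j is the partial residual
            rho = sum(X[i][j] * resid[i] for i in range(n)) + col_sq[j] * beta[j]
            new = soft_threshold(rho, lam) / col_sq[j]  # exact minimizer in beta_j
            delta = new - beta[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= X[i][j] * delta
                beta[j] = new
            max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return beta


# Orthonormal design: the solution is S_lam(X'y) coordinatewise
beta_hat = lasso_cd([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], [3.0, -0.5, 1.5], lam=1.0)
```

With an orthonormal design, one sweep already gives \(\hat\beta = S_\lambda(X^Ty)\), here \((2, 0, 0.5)\). The second sweep changes nothing, so the loop stops.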

Solving LASSO: proximal gradient

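Iterate \(\beta^{(k+1)} = S_{t\lambda}\big(\beta^{(k)} - t \nabla L(\beta^{(k)})\big)\) with step size \(t\), where \(S_{\lambda}\) is the soft-thresholding operator \(S_{\lambda}(z)_j = \text{sign}(z_j)(|z_j| - \lambda)_+\) (the proximal operator of the \(\ell_1\) penalty).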

Updates all coordinates at once
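This sketch applies proximal gradient descent (ISTA) to an illustrative squared-error LASSO objective, \(L(\beta) = \tfrac{1}{2}\|y - X\beta\|_2^2\). Each iteration takes a gradient step of size \(t\) on \(L\) and then soft-thresholds every coordinate by \(t\lambda\). The step size is \(t = 1/\|X\|_F^2\). This is conservative but valid, because \(\|X\|_F^2\) bounds the largest eigenvalue of \(X^TX\), which is the Lipschitz constant of \(\nabla L\). The function names, the fixed iteration count, and the step-size rule are assumptions. Accelerated variants (FISTA) and line search are omitted.

```python
def soft_threshold(z, t):
    """S_t(z) = sign(z) * max(|z| - t, 0), the prox of t * |.|"""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_ista(X, y, lam, n_iter=5000):
    """Proximal gradient (ISTA) for 0.5 * ||y - X beta||^2 + lam * ||beta||_1."""
    n, p = len(X), len(X[0])
    # Step size 1/||X||_F^2; ||X||_F^2 bounds the Lipschitz constant of grad L
    t = 1.0 / sum(x * x for row in X for x in row)
    beta = [0.0] * p
    for _ in range(n_iter):
        resid = [y[i] - sum(X[i][j] * beta[j] for j in range(p)) for i in range(n)]
        grad = [-sum(X[i][j] * resid[i] for i in range(n)) for j in range(p)]  # grad L = -X'r
        # Gradient step on L, then the l1 prox applied to all coordinates at once
        beta = [soft_threshold(beta[j] - t * grad[j], t * lam) for j in range(p)]
    return beta


beta_hat = lasso_ista([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], [3.0, -0.5, 1.5], lam=1.0)
```

Unlike coordinate descent, each iteration costs one full gradient. This vectorizes well, but the fixed step can make convergence slow when \(X^TX\) is ill-conditioned.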

Survival analysis

Lifetime data, often right-censored.

Observations are \((O_i, \delta_i)\) with \(O_i=\min(T_i, C_i)\) and $$ \delta_i = \begin{cases} 1 & T_i \leq C_i \\ 0 & \text{otherwise.} \end{cases} $$

Goal: understand the distribution of \(T \mid X\) based on the observed response \((O, \delta)\).

Hazard

Basic object modeled in survival analysis.

Presuming \(T\) has a density \(f\), the hazard is \(h(t) = f(t)/S(t)\) and $$ 1 - F(t) = S(t) = \exp(-H(t)), \qquad H(t) = \int_{[0,t]} h(s) \; ds. $$

Estimates without features: Nelson-Aalen for the cumulative hazard \(H\), Kaplan-Meier for the survival function \(S\).
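Here is a minimal sketch of two estimators. The Kaplan-Meier product-limit estimator is \(\hat S(t) = \prod_{t_k \le t}(1 - d_k/n_k)\). The Nelson-Aalen estimator is \(\hat H(t) = \sum_{t_k \le t} d_k/n_k\). In both, \(d_k\) is the number of events at the \(k\)-th distinct event time and \(n_k\) is the number still at risk just before it. The sketch follows the usual convention that subjects censored at an event time are still at risk at that time. The function name is hypothetical.

```python
def kaplan_meier_nelson_aalen(times, events):
    """Return (t, S_hat(t), H_hat(t)) at each distinct event time.
    times: observed O_i = min(T_i, C_i); events: delta_i (1 = event, 0 = censored)."""
    data = sorted(zip(times, events))
    at_risk = len(data)
    surv, cumhaz = 1.0, 0.0
    out = []
    i = 0
    while i < len(data):
        t = data[i][0]
        d = c = 0
        while i < len(data) and data[i][0] == t:  # group tied observations at time t
            if data[i][1]:
                d += 1
            else:
                c += 1
            i += 1
        if d > 0:
            surv *= 1.0 - d / at_risk      # Kaplan-Meier factor
            cumhaz += d / at_risk          # Nelson-Aalen increment
            out.append((t, surv, cumhaz))
        at_risk -= d + c                   # events and censorings leave the risk set after t
    return out


est = kaplan_meier_nelson_aalen([1, 2, 2, 3, 4, 5], [1, 1, 0, 1, 0, 1])
```

Censored observations never produce a jump. They only shrink later risk sets, which is how censoring enters both estimators.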

Log-rank tests to compare populations
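This sketch implements the two-sample log-rank test. At each distinct event time, it compares group 1's observed events \(d_{1k}\) with their null expectation \(d_k n_{1k}/n_k\), and it sums the hypergeometric variances. The function name and the 0/1 coding of `groups` are assumptions. The statistic is referred to \(\chi^2_1\). The sketch assumes both groups contribute to the risk sets; otherwise the variance is zero.

```python
import math

def logrank(times, events, groups):
    """Two-sample log-rank test. times: observed O_i; events: delta_i;
    groups: 0/1 labels. Returns (chi-square statistic on 1 df, p-value)."""
    event_times = sorted({t for t, e in zip(times, events) if e})
    o_minus_e = var = 0.0
    for t in event_times:
        risk = [g for ti, g in zip(times, groups) if ti >= t]   # risk set at t
        n, n1 = len(risk), sum(risk)
        d = sum(1 for ti, e in zip(times, events) if ti == t and e)
        d1 = sum(1 for ti, e, g in zip(times, events, groups) if ti == t and e and g == 1)
        o_minus_e += d1 - d * n1 / n                             # observed minus expected
        if n > 1:
            var += d * (n1 / n) * (1 - n1 / n) * (n - d) / (n - 1)  # hypergeometric variance
    stat = o_minus_e ** 2 / var
    return stat, math.erfc(math.sqrt(stat / 2))                  # P(chi^2_1 > stat)


stat, p = logrank([1, 2, 3, 4], [1, 1, 1, 1], [1, 1, 0, 0])
```

This is the stratified-\(2\times 2\) idea again, with risk sets at successive event times playing the role of strata. Relabeling the groups leaves the statistic unchanged.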