Assignment 3#

You may discuss homework problems with other students, but you have to prepare the written assignments yourself.

Please combine all your answers, the computer code and the figures into one PDF file, and submit a copy to your folder on Gradescope.

Grading scheme: 10 points per question, total of 50.

Due date: 11:59 PM Friday May 30, 2025

Download RMarkdown#

Part A#

The sleepstudy dataset in lme4 provides a simple example of a mixed effects model. In this problem we’ll explore some aspects of fitting this model below:

library(lme4)

data(sleepstudy)

M = lmer(Reaction ~ Days + (1|Subject) + (0+Days|Subject), sleepstudy, subset=Days>=2)

M

Loading required package: Matrix

Linear mixed model fit by REML ['lmerMod']

Formula: Reaction ~ Days + (1 | Subject) + (0 + Days | Subject)

Data: sleepstudy

Subset: Days >= 2

REML criterion at convergence: 1404.626

Random effects:

Groups Name Std.Dev.

Subject (Intercept) 28.843

Subject.1 Days 6.285

Residual 25.747

Number of obs: 144, groups: Subject, 18

Fixed Effects:

(Intercept) Days

245.10 11.44

You can download it to python with

import statsmodels.api as sm

df = sm.datasets.get_rdataset(dataname='sleepstudy', package='lme4').data

Each of 18 subjects has 10 reaction times recorded, call these \(Y_{ij}, 1 \leq i \leq 18, 1 \leq j \leq 10\) and set \(D_{ij}\) the corresponding

days. The model specified by the above formula has a random intercept for Subject as well as a random slope for Days, the number of days of sleep

deprivation. The formula is specified so that these random effects are assumed independent. That is, the model fit is

with all groups of errors independent above.

Assume

Yhas theReactiontimes of each subject stacked together. Give a design matrixXdescribing the fixed effects of this model and a separate design matrixZdescribing the random effects so that we can make sense of

Above, \(\Gamma\) should be a concatenation of the \(\alpha_0\)’s and \(\alpha_D\)’s. What dimension is \(X\)? What dimension is \(Z\)?

Considering \(X\) and \(Z\) as fixed, non-random matrices, express \(\text{Cov}(Y)\) as a non-negative linear combination of three fixed matrices.

Write out the log-likelihood \(\ell\) of \(Y|X\) in terms of \(\theta=(\beta_0, \beta_D, \sigma^2_{\epsilon}, \sigma^2_0, \sigma^2_D)\).

Treating the \(\alpha_0\)’s and \(\alpha_D\)’s as latent variables, write out the complete log-likelihood \(\ell_c\) as well as its conditional expectation (given \(Y\)) at a specified value of \(\theta\).

Verify Fisher’s (also known as Louis’) identity (don’t worry too much about what is needed for interchange of expectation and differentiation):

Try to verify that \(\hat{\theta}\), the estimated values in

Mindeed satisfy \(\nabla \ell(\hat{\theta};Y)=0\).

Part B#

This problem will consider some aspects of Kernel PCA. Given a data matrix \(X \in \mathbb{R}^{n \times p}\), let \(K\) be a covariance kernel on \(\mathbb{R}^p\).

Describe how (with centering but no scaling) the PCA scores of \(X\) can be found from an eigen-decomposition of

Suppose you have an orthonormal basis \((\phi_i)_{1 \leq i \leq \text{dim}({\cal H})}\) of \({\cal H}\) the (assumed separable) reproducing kernel Hilbert space associated to \(K\). Verify that for every \(x,y \in \mathbb{R}^p\) the following holds

Let’s take our orthonormal basis and make a new (possibly very large) data matrix

with \(W_{ij} = \phi_j(X_i)\). Compute \(WW'_{n \times n}\). How does this matrix depend on your choice of basis? What is \((WW')_{ij}\)?

Using \(WW'\) and its centering

describe how to find the first \(m\) PCA scores \(Z_i \in \mathbb{R}^n, 1 \leq i \leq m\) (with centering but no scaling) for the data matrix \(W\).

Instead of a loading vector (which would depend on our basis), we might consider finding

with

being the sample variance of \(h(X)\). Show that \(\hat{h}_1\) takes on the expected form, i.e. there exists \(\hat{\alpha}^1 \in \mathbb{R}^n\) such that for every \(x \in \mathbb{R}^p\)

Having found \(\hat{h}_1\), we know that should have \(Z_{1,i} = \left(I_n - n^{-1} 1_n 1_n'\right)\hat{h}_1(X_i)\) for \(1 \leq i \leq n\). Check this. Can you find \(\hat{\alpha}^1\)?

Denoting the functions \(\hat{h}_j\) for \(1 \leq j \leq n-1\), can you find the others? Verify that

\(\langle \hat{h}_i, \hat{h}_j \rangle_{\cal H}=\delta_{ij}\) (for \(i,j\) less than the rank of the matrix in 4.).

(Hint: verify that that both the \(WW'\hat{\alpha}^j\)’s and \(Z_j\)’s are in the column space of

the matrix in 4.)

(Hint: verify that the \(\hat{\alpha}^j\)’s are centered, then check ESL’s suggested solutions indeed solve the problem in the sense that the centered fit yields \(Z_{1,j}\) and the \({\cal H}\) norm is 1. To verify they are centered, write candidate \(\alpha\) as \(R\gamma + b\) where \(R=I_n-n^{-1}1_n1_n'\) and the decomposition is “orthogonal” in \({\cal H}\).)

When using the linear kernel \(K(x,y)=x'y\), verify that \(\hat{h}_1(x)=V_1'x\) where \(V_1\) is the usual first loading vector in PCA.

Apply kernel PCA to the digits data from Assignment #2. Use a few different kernels and look at the 1st two principal components. Is kernel PCA any better than regular PCA (i.e. using a linear kernel) at separating the classes?

Part C#

Let \((X, Y)\) be a pair of random vectors on \(\mathbb{R}^p \times \{1, \dots, K\}\). Their joint law is parameterized by a mixed undirected graphical model. That is, its density proportional to

Show this density model is essentially the LDA (Linear Discriminant Analysis) model:

Express the parameters \(\mu_k, \pi, \Sigma\) in terms of \(\Theta\) above (the map may many to 1).

Modify the original joint density to get a model that yields QDA (Quadratic Discriminant Analysis). Keeping with the notation \(\Theta\) with a superscript indicating the interaction, which parameters have you added? (By interaction we mean \(\Theta^{XX}\) is paired with terms of the form \(xx'\) and \(\Theta^{XY}\) is paired with \(xy'\)).

The work of Hastie and Lee (2015) include penalties on \(\Theta\) to induce structure in the parameters. Using penalties or linear constraints, modify your solution to 2. to arrive at the Gaussian Naive Bayes estimator.

Impose constraints on your \(\Theta\) to arrive at Diagonal LDA (c.f. Section 18.2 of ESL). Using the group LASSO-like penalties suggested in Hastie and Lee (2015) would introduced certain sparsity patterns in \(\Theta\). Sketch how you could fit this model, using either a pseudo-likelihood or a more direct parameterization such as the LDA model in 1. above. Are these the same as the sparsity patterns in Nearest Shrunken Centroid (also in 18.2 of ESL)?

Part D#

Lindsey’s method is a method used for flexible exponential family modeling to model a density as

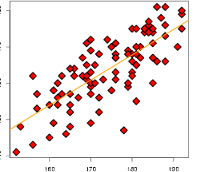

It is used, among other places, in Efron’s local FDR modeling, from which this figure is taken:

Modify the mixed graphical model of Part C so that within each

componentclass \(X\) is drawn from a user specified exponential family (i.e. a specification of a set of sufficient statistics \(t_i:\mathbb{R}^p \to \mathbb{R}\)). Write down a likelihood that fits separate models in each class.The fit for Lindsey’s method is based on a discretization of feature space and reduction to a Poisson GLM (see Section 2 of the Efron-Tibshirani paper cited above). Suppose we had 3 sufficient statistics

T_1,T_2andT_3and the carrier measure has been estimated at the prototype point for each element of the discretization and stored inmu_0. Write a stylized call toglmthat would fit this model. Assume the labels are stored asY.Mixture Discriminant Analysis (c.f. Section 12.7 of ESL is another method that fits a non-Gaussian density within each class. Unlike the proposal in 1., it often shares structure in the form of the covariance matrix. Modify your stylized call to

glmso thatT_1is shared across classes.