Assignment 2

You may discuss homework problems with other students, but you have to prepare the written assignments yourself.

Please combine all your answers, the computer code and the figures into one file, and submit a copy in your dropbox on Gradescope.

Due date: Apr 26, 2024, 11:59PM.

Grading scheme: 10 points per question, total of 40.

Building PDF

If you have not installed LaTeX on your computer, run the commands below (once is enough). After that, either the Quarto or the RMarkdown format should be sufficient to build directly to PDF.

install.packages('tinytex', repos='http://cloud.r-project.org')

tinytex::install_tinytex()

Question 1

In order to investigate the feasibility of starting a Sunday edition for a large metropolitan newspaper, information was obtained from a sample of 34 newspapers concerning their daily and Sunday circulation (in thousands). The data are in the file NewspapersData.txt.

Load the data into

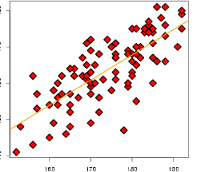

R(useread.tablerather thanread.csvand use a separator ‘\t’).Construct a scatter plot of Sunday circulation versus daily circulation. Does the plot suggest a linear relationship between daily and Sunday circulation? Do you think this is a plausible relationship?

Fit a regression line predicting Sunday circulation from daily circulation and add the regression line to the plot in 2. State the equation of the estimated regression line.

Obtain 95% confidence intervals for \(\beta_0\) and \(\beta_1\). Do this in two ways: using the function

confint, and using thesummaryof your fitted model. Check that the answers match.An industry veteran says: “I bet that if you increase daily circulation by 100 you’ll see an increase of more than 100 in Sunday circulation.” Test this (one-sided) hypothesis at level 5% using this data. State your hypothesis, test statistic and conclusion.

What proportion of the variability in Sunday circulation is accounted for by daily circulation?

Provide an interval estimate (based on 95% level) for the average Sunday circulation of newspapers with daily circulation of 500,000.

The particular newspaper that is considering a Sunday edition has a daily circulation of 500,000. Provide an interval estimate (based on 95% level) for the Sunday circulation of this paper. How does this interval differ from that given in 7.?
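The steps in this question map onto a small set of base R functions. The sketch below runs on simulated stand-in data rather than the real file, so its numbers mean nothing. The data frame name news, the column names Daily and Sunday, and the header = TRUE argument in the commented read.table call are assumptions about the file layout, not facts taken from NewspapersData.txt.

```r
# Simulated stand-in data (hypothetical). With the real file one would
# instead run something like:
#   news <- read.table("NewspapersData.txt", header = TRUE, sep = "\t")
# where header = TRUE and the column names Daily / Sunday are assumptions.
set.seed(1)
news <- data.frame(Daily = runif(34, 100, 1200))
news$Sunday <- 15 + 1.3 * news$Daily + rnorm(34, sd = 100)

# Scatter plot with the fitted least-squares line added
plot(Sunday ~ Daily, data = news)
fit <- lm(Sunday ~ Daily, data = news)
abline(fit)

# 95% confidence intervals, first with confint() ...
ci_confint <- confint(fit, level = 0.95)

# ... then by hand from the coefficient table in summary()
est <- coef(summary(fit))
tcrit <- qt(0.975, df = df.residual(fit))
ci_manual <- cbind(est[, "Estimate"] - tcrit * est[, "Std. Error"],
                   est[, "Estimate"] + tcrit * est[, "Std. Error"])

# One-sided t test of H0: beta1 <= 1 against Ha: beta1 > 1
t_stat <- (est["Daily", "Estimate"] - 1) / est["Daily", "Std. Error"]
p_one_sided <- pt(t_stat, df = df.residual(fit), lower.tail = FALSE)

# Interval for the mean response versus interval for a single new paper
# (circulation is recorded in thousands, so 500,000 is entered as 500)
new_paper <- data.frame(Daily = 500)
ci_mean <- predict(fit, new_paper, interval = "confidence", level = 0.95)
pi_new  <- predict(fit, new_paper, interval = "prediction", level = 0.95)
```

The two predict calls differ only in the interval argument. The "prediction" interval also accounts for the error of a single new observation, so it is always wider than the "confidence" interval at the same point.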

Question 2

The article by Tomasetti and Vogelstein attempts to explain why cancer incidence varies drastically across tissues (e.g. why one is much more likely to develop lung cancer rather than pelvic bone cancer). The authors show that a higher average lifetime risk for a cancer in a given tissue correlates with the rate of replication of stem cells in that tissue. The main inferential tool for their statistical analysis was a simple linear regression, which we will replicate here.

The dataset is available here. The dataset contains information about 31 tumour types. The Lscd (Lifetime stem cell divisions) column refers to the total number of stem cell divisions during the average lifetime, while Risk refers to the lifetime risk for cancer of that tissue type.

1. Fit a simple linear regression model to the data with log(Risk) as the dependent variable and log(Lscd) as the independent variable. Plot the estimated regression line.

2. Add upper and lower 95% prediction bands for the regression line on the plot, using predict. That is, produce one line for the upper limit of each interval over a sequence of values of Lscd, and one line for the lower limits of the intervals. Interpret these bands at a Lscd of \(10^{10}\).

3. Test whether the slope in this regression is equal to 0 at level \(\alpha=0.05\). State the null hypothesis, the alternative, the conclusion and the \(p\)-value.

4. Give a 95% confidence interval for the slope of the regression line. Interpret your interval.

5. Report the \(R^2\) and the adjusted \(R^2\) of the model, as well as an estimate of the variance of the errors in the model.

6. Provide an interpretation of the \(R^2\) you calculated above. According to a Reuters article “Plain old bad luck plays a major role in determining who gets cancer and who does not, according to researchers who found that two-thirds of cancer incidence of various types can be blamed on random mutations and not heredity or risky habits like smoking.” Is this interpretation of \(R^2\) correct?
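Prediction bands can be drawn by calling predict on a grid of predictor values and plotting the lwr and upr columns it returns. The following is a minimal sketch on simulated data: the data frame name cancer and the generating coefficients are made up for illustration, and only the column names Lscd and Risk come from the description above.

```r
# Simulated stand-in for the 31 tumour types (hypothetical values)
set.seed(2)
cancer <- data.frame(Lscd = 10^runif(31, 6, 12))
cancer$Risk <- exp(-17 + 0.5 * log(cancer$Lscd) + rnorm(31, sd = 1))

# Regression on the log-log scale and the fitted line
fit <- lm(log(Risk) ~ log(Lscd), data = cancer)
plot(log(Risk) ~ log(Lscd), data = cancer)
abline(fit)

# Grid of Lscd values; predict() applies log() itself via the model formula
grid <- data.frame(Lscd = 10^seq(6, 12, length.out = 100))
bands <- predict(fit, grid, interval = "prediction", level = 0.95)
lines(log(grid$Lscd), bands[, "lwr"], lty = 2)
lines(log(grid$Lscd), bands[, "upr"], lty = 2)

# Prediction interval at one value; the interval is for log(Risk),
# so exp() maps it back to the Risk scale
at_1e10 <- predict(fit, data.frame(Lscd = 1e10), interval = "prediction")
risk_interval <- exp(at_1e10)

# R^2, adjusted R^2 and the estimated error variance from summary()
s <- summary(fit)
fit_stats <- c(r2 = s$r.squared, adj_r2 = s$adj.r.squared, sigma2_hat = s$sigma^2)
```

Because the grid is supplied on the original Lscd scale, the same newdata frame works regardless of the transformation written in the formula.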

Question 3

Let \(Y\) and \(X\) denote variables in a simple linear regression of \(Y\) on \(X\) that you’ve run for part of your research in your honors thesis.

Suppose the model

\[
Y = \beta_0 + \beta_1 X + \epsilon
\]

satisfies the usual regression assumptions.

The table below is similar to the output of anova when passed a simple linear regression model.

Response: Y

          Df Sum Sq Mean Sq F value Pr(>F)
X          1     NA     492      NA     NA
Residuals 32     NA     119

1. Compute the missing values in the above table.

2. Test the null hypothesis \(H_0 : \beta_1 = 0\) at level \(\alpha = 0.05\) using the above table. Can you test the hypothesis \(H_0 : \beta_1 < 0\) using Table 1?

3. Compute the \(R^2\) for this simple linear regression.

4. If \(Y\) and \(X\) were reversed in the above regression, what would you expect \(R^2\) to be?
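The columns of an anova table are tied together by a few identities: each Sum Sq equals Df times Mean Sq, the F value is the ratio of the two mean squares, the p-value is an upper tail of the F distribution, and \(R^2\) is the regression sum of squares over the total. The sketch below checks these on simulated data (the variables x and y are hypothetical and unrelated to the table above), using 34 observations so that the residual degrees of freedom are also 32.

```r
# Simulated data, 34 observations so that residual Df = 32
set.seed(3)
x <- rnorm(34)
y <- 1 + 0.5 * x + rnorm(34)

tab <- anova(lm(y ~ x))
dfs <- tab[["Df"]]
ss  <- tab[["Sum Sq"]]
ms  <- tab[["Mean Sq"]]

# Sum Sq = Df * Mean Sq, row by row
ss_from_ms <- dfs * ms

# F = (regression mean square) / (residual mean square)
f_stat <- ms[1] / ms[2]

# p-value: upper tail of the F distribution with (1, 32) degrees of freedom
p_val <- pf(f_stat, dfs[1], dfs[2], lower.tail = FALSE)

# R^2 = SSR / (SSR + SSE)
r2 <- ss[1] / sum(ss)

# R^2 of the regression with the roles of x and y swapped
r2_reversed <- summary(lm(x ~ y))$r.squared
```

In simple linear regression \(R^2\) is the squared sample correlation between the two variables, which is why r2 and r2_reversed agree here.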

Question 4

Consider the Wage data we saw in Assignment 1.

1. Fit a linear model of wage on age. At a 5% level, is there evidence for a linear relationship between wage and age? Would you characterize the evidence as strong or weak?

2. What proportion of the variation in wage is explained by age? Is this a large amount of the total variation? Does this contradict your characterization of the evidence in 1.? Explain.

3. Based on the usual diagnostic plots as discussed in Chapter 8 of the textbook, does this model seem adequate?

4. Consider adding a quadratic term to the fit by using the formula wage ~ poly(age, 2) in fitting lm. Does this model explain appreciably more variation than your initial model?

5. Replot the diagnostics you used in 3. for this model. Have any of them changed appreciably?
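Comparing a linear and a quadratic fit follows the same pattern for any data set. Here is a rough sketch on simulated data: the data frame wage_df and its generating formula are invented for illustration, and with the real Wage data only the column names wage and age are assumed.

```r
# Simulated stand-in data with a curved relationship (hypothetical)
set.seed(4)
wage_df <- data.frame(age = sample(18:80, 500, replace = TRUE))
wage_df$wage <- 40 + 5 * wage_df$age - 0.05 * wage_df$age^2 +
  rnorm(500, sd = 30)

# Linear fit and fit with an added quadratic term
fit_lin  <- lm(wage ~ age, data = wage_df)
fit_quad <- lm(wage ~ poly(age, 2), data = wage_df)

# Proportion of variation explained by each model
r2 <- c(linear = summary(fit_lin)$r.squared,
        quadratic = summary(fit_quad)$r.squared)

# The default lm diagnostic plots, four per model, on a 2 x 2 layout
par(mfrow = c(2, 2))
plot(fit_lin)
plot(fit_quad)

# F test comparing the two nested models
cmp <- anova(fit_lin, fit_quad)
```

The linear model is nested in the quadratic one, so the quadratic \(R^2\) can never be smaller. Whether the increase is appreciable is a judgment about its size, which the F test in cmp supplements but does not replace.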