Multiple Linear Regression#

Download#

Outline#

Case studies:

A. Effect of light on meadowfoam flowering

B. Studying the brain sizes of mammals

Specifying the model.

Fitting the model: least squares.

Interpretation of the coefficients.

\(F\)-statistic revisited

Matrix approach to linear regression.

Investigating the design matrix

Case study A:#

url = 'https://raw.githubusercontent.com/StanfordStatistics/stats191-data/main/Sleuth3/light_flowering.csv'

flower = read.table(url, sep=',', header=TRUE)

head(flower)

| Flowers | Time | Intensity | |

|---|---|---|---|

| <dbl> | <int> | <int> | |

| 1 | 62.3 | 1 | 150 |

| 2 | 77.4 | 1 | 150 |

| 3 | 55.3 | 1 | 300 |

| 4 | 54.2 | 1 | 300 |

| 5 | 49.6 | 1 | 450 |

| 6 | 61.9 | 1 | 450 |

Researchers manipulate timing and intensity of light to investigate effect on number of flowers.

Case study B:#

url = 'https://raw.githubusercontent.com/StanfordStatistics/stats191-data/main/Sleuth3/brains.csv'

brains = read.csv(url, header=TRUE, row.names=1)

head(brains)

| Brain | Body | Gestation | Litter | |

|---|---|---|---|---|

| <dbl> | <dbl> | <int> | <dbl> | |

| Aardvark | 9.6 | 2.20 | 31 | 5.0 |

| Acouchis | 9.9 | 0.78 | 98 | 1.2 |

| African elephant | 4480.0 | 2800.00 | 655 | 1.0 |

| Agoutis | 20.3 | 2.80 | 104 | 1.3 |

| Axis deer | 219.0 | 89.00 | 218 | 1.0 |

| Badger | 53.0 | 6.00 | 60 | 2.2 |

How are litter size, gestation period associated to brain size in mammals?

A model for the brains data#

{height=400 fig-align=”center”}

{height=400 fig-align=”center”}

Figure depicts our model: to generate \(Y_i\):#

First fix \(X=(X_1,\dots,X_p)\), form the mean (\(\beta_0 + \sum_j \beta_j X_{j}\)), add an error \(\epsilon\)

A model for brains#

Multiple linear regression model#

brains.lm = lm(Brain ~ Body + Gestation + Litter, data=brains)

summary(brains.lm)$coef

summary(brains.lm)$fstatistic

| Estimate | Std. Error | t value | Pr(>|t|) | |

|---|---|---|---|---|

| (Intercept) | -225.2921328 | 83.05875218 | -2.712443 | 7.971598e-03 |

| Body | 0.9858781 | 0.09428263 | 10.456624 | 2.517636e-17 |

| Gestation | 1.8087434 | 0.35444885 | 5.102974 | 1.790007e-06 |

| Litter | 27.6486394 | 17.41429351 | 1.587698 | 1.157857e-01 |

- value

- 130.729924871479

- numdf

- 3

- dendf

- 92

Another model for brains#

simpler.lm = lm(Brain ~ Body + Litter, data=brains)

summary(simpler.lm)$coef

summary(simpler.lm)$fstatistic

| Estimate | Std. Error | t value | Pr(>|t|) | |

|---|---|---|---|---|

| (Intercept) | 145.028380 | 45.51865579 | 3.186131 | 1.963384e-03 |

| Body | 1.301621 | 0.08014619 | 16.240583 | 6.755835e-29 |

| Litter | -29.022067 | 15.11200472 | -1.920464 | 5.786311e-02 |

- value

- 144.23837946366

- numdf

- 2

- dendf

- 93

Fitting a multiple linear regression model#

Just as in simple linear regression, model is fit by minimizing

\[\begin{split}\begin{aligned} SSE(\beta_0, \dots, \beta_p) &= \sum_{i=1}^n\left(Y_i - \left(\beta_0 + \sum_{j=1}^p \beta_j X_{ij} \right) \right)^2 \\ &= \|Y - \widehat{Y}(\beta)\|^2 \end{aligned}\end{split}\]Minimizers: \(\widehat{\beta} = (\widehat{\beta}_0, \dots, \widehat{\beta}_p)\) are the “least squares estimates”: are also normally distributed as in simple linear regression.

Estimating \(\sigma^2\)#

As in simple regression

\[\widehat{\sigma}^2 = \frac{SSE}{n-p-1} \sim \sigma^2 \cdot \frac{\chi^2_{n-p-1}}{n-p-1}\]independent of \(\widehat{\beta}\).

Why \(\chi^2_{n-p-1}\)? Typically, the degrees of freedom in the estimate of \(\sigma^2\) is \(n-\# \text{number of parameters in regression function}\).

Interpretation of \(\beta_j\) in brains.lm#

Take \(\beta_1=\beta_{\tt Body}\) for example. This is the amount the average

Brainweight increases for one kg of increase inBody, keeping everything else constant.We refer to this as the effect of

Bodyallowing for or controlling for the other variables.

Example#

Let’s take

Beaked whaleand artificially add a kg to itsBodyand compute the predicted weight

case1 = brains['Beaked whale',]

case2 = case1

case2['Body'] = case1['Body'] + 1

coef(brains.lm)

predict(brains.lm, rbind(case1, case2))

- (Intercept)

- -225.292132761766

- Body

- 0.985878094591525

- Gestation

- 1.808743390259

- Litter

- 27.6486394142719

- Beaked whale

- 505.043355493964

- Beaked whale1

- 506.029233588555

Same example in simpler.lm#

To emphasize the parameters depend on the other variables, let’s redo in the

simpler.lmmodel

case1 = brains['Beaked whale',]

case2 = case1

case2['Body'] = case1['Body'] + 1

coef(simpler.lm)

predict(simpler.lm, rbind(case1, case2))

- (Intercept)

- 145.028380229758

- Body

- 1.30162092388685

- Litter

- -29.0220669146293

- Beaked whale

- 418.193890755138

- Beaked whale1

- 419.495511679024

\(R^2\) for multiple regression#

\(R^2\) is now called the multiple correlation coefficient of the model, or the coefficient of multiple determination.

The sums of squares and \(R^2\) are defined analogously to those in simple linear regression.

Computing \(R^2\) by hand#

Y = brains$Brain

SST = sum((Y - mean(Y))^2)

SSE = sum(resid(brains.lm)^2)

SSR = SST - SSE

1 - SSE/SST

summary(brains.lm)$r.squared

Adjusted \(R^2\)#

As we add more and more variables to the model – even random ones, \(R^2\) will increase to 1.

Adjusted \(R^2\) tries to take this into account by replacing sums of squares by mean squares

Computing \(R^2_a\) by hand#

n = length(Y)

MST = SST / (n - 1)

MSE = SSE / brains.lm$df.residual

MSR = SSR / (n - 1 - brains.lm$df.residual)

1 - MSE/MST

summary(brains.lm)$adj.r.squared

\(F\)-test in summary(brains.lm)#

Full model:

Reduced model:

\[\texttt{Brain}_i = \beta_0 + \varepsilon_i\]Statistic:

\[F=\frac{(SSE_R - SSE_F) / (df_R - df_F)}{SSE_F / df_F} = \frac{SSR/df(SSR)}{SSE/df(SSE)} = \frac{MSR}{MSE}.\]

Right triangle again#

{height=300 fig-align=”center”}

{height=300 fig-align=”center”}

Sides of the triangle: \(df_R-df_F=3\), \(df_F=92\)

Hypotenuse: \(df_R=95\)

summary(brains.lm)$fstatistic

- value

- 130.729924871479

- numdf

- 3

- dendf

- 92

Matrix formulation#

\({X}\) is called the design matrix of the model

\({\varepsilon} \sim N(0, \sigma^2 I_{n \times n})\) is multivariate normal

\(SSE\) in matrix form#

Design matrix#

The design matrix is the \(n \times (p+1)\) matrix with entries

n = nrow(brains)

X = with(brains, cbind(rep(1,n), Body, Gestation, Litter))

colnames(X)[1] = '(Intercept)'

head(X)

| (Intercept) | Body | Gestation | Litter |

|---|---|---|---|

| 1 | 2.20 | 31 | 5.0 |

| 1 | 0.78 | 98 | 1.2 |

| 1 | 2800.00 | 655 | 1.0 |

| 1 | 2.80 | 104 | 1.3 |

| 1 | 89.00 | 218 | 1.0 |

| 1 | 6.00 | 60 | 2.2 |

The matrix X is the same as formed by R

head(model.matrix(brains.lm))

| (Intercept) | Body | Gestation | Litter | |

|---|---|---|---|---|

| Aardvark | 1 | 2.20 | 31 | 5.0 |

| Acouchis | 1 | 0.78 | 98 | 1.2 |

| African elephant | 1 | 2800.00 | 655 | 1.0 |

| Agoutis | 1 | 2.80 | 104 | 1.3 |

| Axis deer | 1 | 89.00 | 218 | 1.0 |

| Badger | 1 | 6.00 | 60 | 2.2 |

Math aside: least squares solution#

Normal equations

\[\frac{\partial}{\partial \beta_j} SSE \biggl|_{\beta = \widehat{\beta}_{}} = -2 \left({Y\ } - {X} \widehat{\beta}_{} \right)^T {X}_j = 0, \qquad 0 \leq j \leq p.\]Equivalent to

Distribution: \(\widehat{\beta} \sim N(\beta, \sigma^2 (X^TX)^{-1}).\)

Math aside: multivariate normal#

To obtain the distribution of \(\hat{\beta}\) we used the following fact about the multivariate Normal.

Suppose \(Z \sim N(\mu,\Sigma)\). Then, for any fixed matrix \(A\)

Math aside: how did we derive the distribution of \(\hat{\beta}\)?#

Above, we saw that \(\hat{\beta}\) is equal to a matrix times \(Y\). The matrix form of our model is

Therefore,

Math aside: checking the equation#

beta = as.numeric(solve(t(X) %*% X) %*% t(X) %*% Y)

names(beta) = colnames(X)

beta

coef(brains.lm)

- (Intercept)

- -225.292132761768

- Body

- 0.985878094591526

- Gestation

- 1.808743390259

- Litter

- 27.648639414272

- (Intercept)

- -225.292132761766

- Body

- 0.985878094591525

- Gestation

- 1.808743390259

- Litter

- 27.6486394142719

Categorical variables#

Recall case study A: the

flowerexperiment

flower1.lm = lm(Flowers ~ factor(Time), data=flower)

summary(flower1.lm)$coef

| Estimate | Std. Error | t value | Pr(>|t|) | |

|---|---|---|---|---|

| (Intercept) | 50.05833 | 3.615510 | 13.845440 | 2.433418e-12 |

| factor(Time)2 | 12.15833 | 5.113104 | 2.377877 | 2.652620e-02 |

Design matrix with categorical variables#

Rhas used a binary column forfactor(Time).

model.matrix(flower1.lm)

| (Intercept) | factor(Time)2 | |

|---|---|---|

| 1 | 1 | 0 |

| 2 | 1 | 0 |

| 3 | 1 | 0 |

| 4 | 1 | 0 |

| 5 | 1 | 0 |

| 6 | 1 | 0 |

| 7 | 1 | 0 |

| 8 | 1 | 0 |

| 9 | 1 | 0 |

| 10 | 1 | 0 |

| 11 | 1 | 0 |

| 12 | 1 | 0 |

| 13 | 1 | 1 |

| 14 | 1 | 1 |

| 15 | 1 | 1 |

| 16 | 1 | 1 |

| 17 | 1 | 1 |

| 18 | 1 | 1 |

| 19 | 1 | 1 |

| 20 | 1 | 1 |

| 21 | 1 | 1 |

| 22 | 1 | 1 |

| 23 | 1 | 1 |

| 24 | 1 | 1 |

How categorical variables are encoded#

We can change the columns in the design matrix:

model.matrix(lm(Flowers ~ factor(Time) - 1, data=flower))

| factor(Time)1 | factor(Time)2 | |

|---|---|---|

| 1 | 1 | 0 |

| 2 | 1 | 0 |

| 3 | 1 | 0 |

| 4 | 1 | 0 |

| 5 | 1 | 0 |

| 6 | 1 | 0 |

| 7 | 1 | 0 |

| 8 | 1 | 0 |

| 9 | 1 | 0 |

| 10 | 1 | 0 |

| 11 | 1 | 0 |

| 12 | 1 | 0 |

| 13 | 0 | 1 |

| 14 | 0 | 1 |

| 15 | 0 | 1 |

| 16 | 0 | 1 |

| 17 | 0 | 1 |

| 18 | 0 | 1 |

| 19 | 0 | 1 |

| 20 | 0 | 1 |

| 21 | 0 | 1 |

| 22 | 0 | 1 |

| 23 | 0 | 1 |

| 24 | 0 | 1 |

Design matrix with categorical variables#

By default,

Rdiscards one of the columns. Why?

flower2.lm = lm(Flowers ~ factor(Intensity), data=flower)

model.matrix(flower2.lm)

| (Intercept) | factor(Intensity)300 | factor(Intensity)450 | factor(Intensity)600 | factor(Intensity)750 | factor(Intensity)900 | |

|---|---|---|---|---|---|---|

| 1 | 1 | 0 | 0 | 0 | 0 | 0 |

| 2 | 1 | 0 | 0 | 0 | 0 | 0 |

| 3 | 1 | 1 | 0 | 0 | 0 | 0 |

| 4 | 1 | 1 | 0 | 0 | 0 | 0 |

| 5 | 1 | 0 | 1 | 0 | 0 | 0 |

| 6 | 1 | 0 | 1 | 0 | 0 | 0 |

| 7 | 1 | 0 | 0 | 1 | 0 | 0 |

| 8 | 1 | 0 | 0 | 1 | 0 | 0 |

| 9 | 1 | 0 | 0 | 0 | 1 | 0 |

| 10 | 1 | 0 | 0 | 0 | 1 | 0 |

| 11 | 1 | 0 | 0 | 0 | 0 | 1 |

| 12 | 1 | 0 | 0 | 0 | 0 | 1 |

| 13 | 1 | 0 | 0 | 0 | 0 | 0 |

| 14 | 1 | 0 | 0 | 0 | 0 | 0 |

| 15 | 1 | 1 | 0 | 0 | 0 | 0 |

| 16 | 1 | 1 | 0 | 0 | 0 | 0 |

| 17 | 1 | 0 | 1 | 0 | 0 | 0 |

| 18 | 1 | 0 | 1 | 0 | 0 | 0 |

| 19 | 1 | 0 | 0 | 1 | 0 | 0 |

| 20 | 1 | 0 | 0 | 1 | 0 | 0 |

| 21 | 1 | 0 | 0 | 0 | 1 | 0 |

| 22 | 1 | 0 | 0 | 0 | 1 | 0 |

| 23 | 1 | 0 | 0 | 0 | 0 | 1 |

| 24 | 1 | 0 | 0 | 0 | 0 | 1 |

Some additional models#

~ Intensity#

flower3.lm = lm(Flowers ~ Intensity, data=flower)

summary(flower3.lm)$coef

| Estimate | Std. Error | t value | Pr(>|t|) | |

|---|---|---|---|---|

| (Intercept) | 77.38500000 | 4.161186164 | 18.596861 | 6.059011e-15 |

| Intensity | -0.04047143 | 0.007123293 | -5.681562 | 1.029503e-05 |

Some additional models#

~ Intensity + factor(Time)#

flower4.lm = lm(Flowers ~ Intensity + factor(Time), data=flower)

summary(flower4.lm)$coef

| Estimate | Std. Error | t value | Pr(>|t|) | |

|---|---|---|---|---|

| (Intercept) | 71.30583333 | 3.27377202 | 21.780940 | 6.767274e-16 |

| Intensity | -0.04047143 | 0.00513237 | -7.885525 | 1.036787e-07 |

| factor(Time)2 | 12.15833333 | 2.62955696 | 4.623719 | 1.463776e-04 |

Some additional models#

~ factor(Intensity) + factor(Time)#

flower5.lm = lm(Flowers ~ factor(Intensity) + factor(Time), data=flower)

summary(flower5.lm)$coef

| Estimate | Std. Error | t value | Pr(>|t|) | |

|---|---|---|---|---|

| (Intercept) | 67.19583 | 3.628693 | 18.517916 | 1.049173e-12 |

| factor(Intensity)300 | -9.12500 | 4.751074 | -1.920618 | 7.171506e-02 |

| factor(Intensity)450 | -13.37500 | 4.751074 | -2.815153 | 1.191898e-02 |

| factor(Intensity)600 | -23.22500 | 4.751074 | -4.888368 | 1.384982e-04 |

| factor(Intensity)750 | -27.75000 | 4.751074 | -5.840784 | 1.965699e-05 |

| factor(Intensity)900 | -29.35000 | 4.751074 | -6.177550 | 1.012760e-05 |

| factor(Time)2 | 12.15833 | 2.743034 | 4.432440 | 3.648933e-04 |

Interactions#

Suppose we believe that

Flowersvaries linearly withIntensitybut the slope depends onTime.We’d need two parameters for

Intensity

flower6.lm = lm(Flowers ~ Intensity + factor(Time) + factor(Time):Intensity, data=flower)

summary(flower6.lm)$coef

| Estimate | Std. Error | t value | Pr(>|t|) | |

|---|---|---|---|---|

| (Intercept) | 71.623333333 | 4.343304599 | 16.4905158 | 4.143572e-13 |

| Intensity | -0.041076190 | 0.007435051 | -5.5246682 | 2.083392e-05 |

| factor(Time)2 | 11.523333333 | 6.142360270 | 1.8760432 | 7.532164e-02 |

| Intensity:factor(Time)2 | 0.001209524 | 0.010514750 | 0.1150312 | 9.095675e-01 |

What is the regression line when

Time==1? AndTime==2?

Different models across groups#

Set \(\beta_1=\beta_{\tt Intensity}\), \(\beta_2=\beta_{\tt Time2}\), \(\beta_3=\beta_{\tt Time2:Intensity}\).

In

Time==1group, one unit change ofIntensityleads to \(\beta_1\) units of change inFlower.In

Time==2group, one unit change ofIntensityleads to \(\beta_1 + \beta_3\) units of change inFlower.Test \(H_0\) slope is the same within each group.

summary(flower6.lm)$coef

| Estimate | Std. Error | t value | Pr(>|t|) | |

|---|---|---|---|---|

| (Intercept) | 71.623333333 | 4.343304599 | 16.4905158 | 4.143572e-13 |

| Intensity | -0.041076190 | 0.007435051 | -5.5246682 | 2.083392e-05 |

| factor(Time)2 | 11.523333333 | 6.142360270 | 1.8760432 | 7.532164e-02 |

| Intensity:factor(Time)2 | 0.001209524 | 0.010514750 | 0.1150312 | 9.095675e-01 |

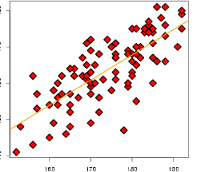

Visualizing interaction#

with(flower, plot(Intensity, Flowers, ylim=c(40, 80)))

cur.lm = lm(Flowers ~ Intensity + factor(Time) + factor(Time):Intensity, pch=Time, data=flower)

Ival = seq(100, 1000, length=10)

Yhat1 = predict(cur.lm, list(Time=rep(1, 10), Intensity=Ival))

Yhat2 = predict(cur.lm, list(Time=rep(2, 10), Intensity=Ival))

lines(Ival, Yhat1, col='red')

lines(Ival, Yhat2, col='blue')

Warning message:

“In lm.fit(x, y, offset = offset, singular.ok = singular.ok, ...) :

extra argument ‘pch’ will be disregarded”