Assignment 4#

You may discuss homework problems with other students, but you have to prepare the written assignments yourself.

Please combine all your answers, the computer code and the figures into one file, and submit a copy in your dropbox on Gradescope.

Due date: 11:59 PM, May 28, 2024.

Grading scheme: 10 points per question, total of 30.

Building PDF#

If you have not installed LaTeX on your computer, run the commands below (once is enough). After that, either the Quarto or RMarkdown format should be sufficient to build directly to PDF.

install.packages('tinytex', repos='http://cloud.r-project.org')

tinytex::install_tinytex()
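As a rough sketch, one way to check whether a LaTeX installation is already visible to R before running the install commands is to look for a `pdflatex` executable on the search path. The variable name `has_latex` is made up for this illustration, and a LaTeX installation that is not on the path would not be detected this way.

```r
# Sys.which() returns an empty string when the executable is not found.
has_latex = unname(nzchar(Sys.which('pdflatex')))

if (!has_latex) {
  message('No pdflatex found on the PATH; installing TinyTeX may be needed.')
}
```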

Question 2 (Model selection for multiple linear regression)#

Use the Carseats data we saw in Assignment 3 for this problem.

Parts#

Fit a full model

Sales ~ .to the data.Extract the design matrix from your model in 1., discarding the column for the intercept.

Use

leapsto select a model using adjusted \(R^2\) as a criterion, storing the 5 best models of each size.Use

leapsto select a model using Mallow’s \(C_p\) as a criterion, storing the 5 best models of each size.Use

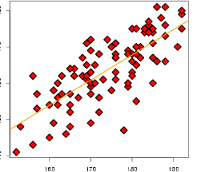

stepto select a model usingdirection='forward'starting fromSales ~ 1with the upper model being the model in 1. Do the models in 3., 4. and 5. agree?Make a plot of \(C_p\) versus model size. Is your selected model clearly better than all others or are there other models of similar quality?
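The mechanics of the functions named in these parts can be sketched on a different dataset. The example below uses the built-in `mtcars` data as a stand-in (not `Carseats`), and all object names (`full.lm`, `adjr2.sel`, `cp.sel`, and so on) are chosen only for this illustration. It assumes the `leaps` package is installed, and note that `step` selects by AIC by default.

```r
library(leaps)

# Stand-in full model on mtcars; the assignment uses Carseats instead.
full.lm = lm(mpg ~ ., data=mtcars)

# Design matrix without the intercept column.
X = model.matrix(full.lm)[, -1]
y = mtcars$mpg

# Best subsets search, keeping the 5 best models of each size.
adjr2.sel = leaps(X, y, method='adjr2', nbest=5)
cp.sel = leaps(X, y, method='Cp', nbest=5)

# Variables in the best model under each criterion.
best.adjr2 = colnames(X)[adjr2.sel$which[which.max(adjr2.sel$adjr2), ]]
best.cp = colnames(X)[cp.sel$which[which.min(cp.sel$Cp), ]]

# Forward stepwise selection starting from the intercept-only model.
null.lm = lm(mpg ~ 1, data=mtcars)
forward.lm = step(null.lm,
                  scope=list(lower=~1, upper=formula(full.lm)),
                  direction='forward', trace=0)

# C_p against model size (number of coefficients, intercept included).
plot(cp.sel$size, cp.sel$Cp, xlab='Model size', ylab='Cp')
```

Each row of `cp.sel$which` is a logical indicator of the columns of `X` included in that candidate model, so the same indexing gives the variables of any stored model, not only the best one.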

Question 3#

Consider the Wage data we saw in Assignment 1.

Parts#

1. Create an outcome variable `high` which is `TRUE` if `wage > 250` and `FALSE` otherwise.

2. Fit a logistic regression model for `high` with features `maritl`, `age`, `health`, `health_ins`, `education` and `race`.

3. At a 5% level, test the null hypothesis that the effect of `maritl` is 0 in the model in part 2.

4. Split the data into two sets of equal size using this snippet of code to form a half for model selection and a second half for inference. We'll focus on individuals with at least `HS`:

set.seed(1)

atleast_HS = Wage$education != '1. < HS Grad'

selection = rep(TRUE, nrow(Wage))

selection[sample(1:nrow(Wage), 0.5*nrow(Wage), replace=FALSE)] = FALSE

inference = !selection

selection = selection & atleast_HS

inference = inference & atleast_HS

5. Using `subset=selection`, select a model by forward selection with `step`, with the largest model being the model in 2. Call this model `selected.glm`.

6. Fit the same model selected in 5. on `subset=inference`. Call this model `split.glm`. Compare the `summary()` of `split.glm` and `selected.glm`. In terms of overall significance of estimated effects, would your conclusions be very different between `selected.glm` and `split.glm`? Do any effects that were significant at level 5% in `selected.glm` switch to not significant in `split.glm`?
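The general workflow in these parts (logistic fit, a test for a categorical feature, then selection on one half and refitting on the other) can be sketched on simulated data. Everything below is a hypothetical stand-in: the data frame `sim`, its columns `x1`, `x2`, `g`, `y`, and the model names are invented for illustration and are not the `Wage` variables. The likelihood ratio test shown is one common way to test a multi-level factor; it is an assumption here, not necessarily the test intended by the assignment.

```r
# Simulated stand-in data; all names are hypothetical.
set.seed(1)
n = 400
sim = data.frame(x1=rnorm(n), x2=rnorm(n),
                 g=factor(sample(c('a', 'b', 'c'), n, replace=TRUE)))
sim$y = rbinom(n, 1, plogis(-0.5 + sim$x1)) == 1

# Full logistic regression, and the same model with the factor g dropped.
full.glm = glm(y ~ x1 + x2 + g, family=binomial, data=sim)
reduced.glm = glm(y ~ x1 + x2, family=binomial, data=sim)

# Likelihood ratio test of the null hypothesis that g has no effect.
lrt = anova(reduced.glm, full.glm, test='Chisq')

# Random halves: one for selection, one for inference.
sel.half = rep(TRUE, n)
sel.half[sample(1:n, 0.5*n, replace=FALSE)] = FALSE
inf.half = !sel.half

# Forward selection using only the selection half.
null.glm = glm(y ~ 1, family=binomial, data=sim, subset=sel.half)
sel.glm = step(null.glm,
               scope=list(lower=~1, upper=formula(full.glm)),
               direction='forward', trace=0)

# Refit the selected formula on the held-out half and compare the tables.
inf.glm = glm(formula(sel.glm), family=binomial, data=sim, subset=inf.half)
summary(sel.glm)$coefficients
summary(inf.glm)$coefficients
```

Because the second fit uses data that played no role in choosing the formula, its p-values are not affected by the selection step, which is the motivation for comparing the two summaries.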