Data snooping#

Download#

Inference after selection#

Most model selection procedures will choose features that have large \(T\)-statistics when testing whether they are 0 or not…

Even when nothing is happening some features will have large \(T\)-statistics!

Consequences#

Using \(p\)-values from

summary()of a selected model is misleading.Using confidence intervals from

confint()of a selected model is misleading.

Null example#

Let’s make some data where there is no relation between

YandX.

n = 100

p = 40

set.seed(0)

X = matrix(rnorm(n*p), n, p)

Y = rnorm(n)

Behavior of \(p\)-values#

pval = summary(lm(Y ~ X))$coef[,4]

min(pval)

Best single variable model#

Repeat 100 times, taking

minP = c()

for (i in 1:100) {

X = matrix(rnorm(n*p), n, p)

Y = rnorm(n)

minP = c(minP, min(summary(lm(Y ~ X))$coef[,4]))

}

What proportion below 5%?

mean(minP < 0.05)

80% of the time we’ll falsely declare a true relationship between

YandX!80% of our confidence intervals won’t cover 0 (truth)…

Inference after AIC selection#

Let’s look at a selection procedure we have used…

We’ll build up 100 null data sets and store them for a few analyses

In practice, there will likely be some signals – here there are none…

k = 100

Y_list = list()

X_list = list()

set.seed(1)

for(i in 1:k) {

Y_list[[i]] = rnorm(n)

X_list[[i]] = matrix(rnorm(n*p), n, p)

}

Behavior of \(p\)-values from AIC selected model#

pval = c()

for (i in 1:k) {

X = X_list[[i]]

Y = Y_list[[i]]

D = data.frame(X, Y)

F = lm(Y ~ ., D)

# select a model

M = step(lm(Y ~ 1, D),

list(upper=F),

trace=FALSE,

direction='forward')

# capture the p-values

pvalM = summary(M)$coef[,4]

pval = c(pval, pvalM[2:length(pvalM)])

}

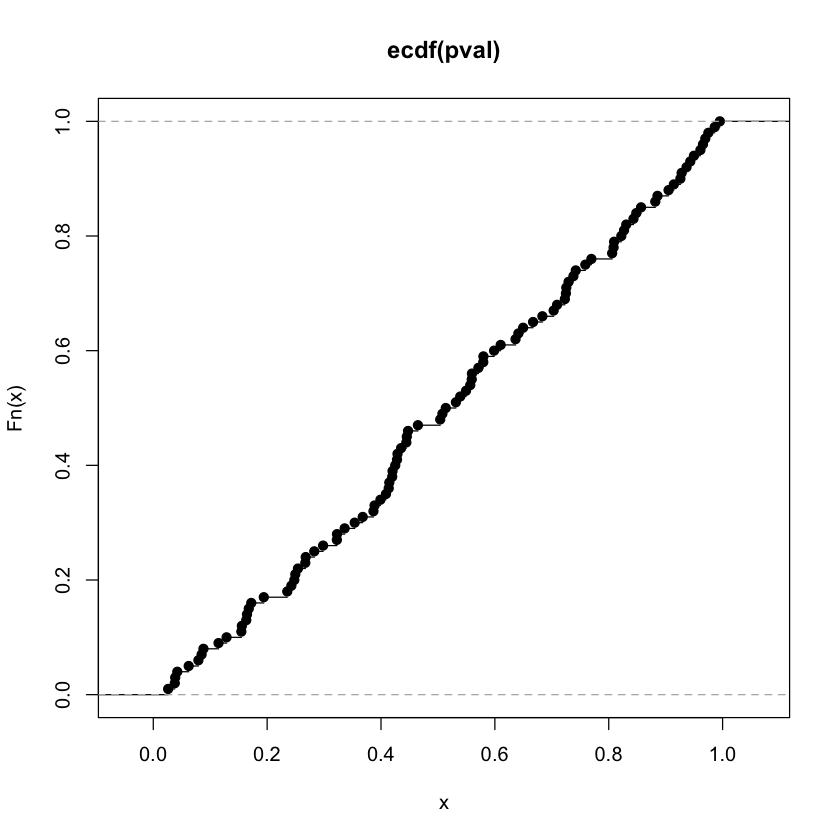

Distribution of \(p\)-values#

Distribution function here should be diagonal…

50% of our 95% confidence intervals will not cover 0 (truth)

plot(ecdf(pval))

mean(pval < 0.05)

Data splitting#

Randomly select some % to select, remaining to construct CIs, compute \(p\)-values

pval = c()

train_percent = .6

for (i in 1:k) {

X = X_list[[i]]

Y = Y_list[[i]]

select = sample(1:n,

train_percent*n,

replace=FALSE)

inference = c(1:n)[-select]

D = data.frame(X, Y)

F = lm(Y ~ ., D, subset=select)

# select a model

M = step(lm(Y ~ 1, D, subset=select),

list(upper=F),

trace=FALSE,

direction='forward')

# capture the included variables

sel_X = attr(M$terms, "term.labels")

best_X = D[sel_X]

# refit on the data held for inference

M_inf = lm(Y ~ best_X[,1], subset=inference)

# capture the p-values

pvalM = summary(M_inf)$coef[,4]

pval = c(pval, pvalM[2:length(pvalM)])

}

Data splitting \(p\)-values#

plot(ecdf(pval))

mean(pval < 0.05)